What Makes a Good Metric?

Over the last nine years, I’ve spent a lot of my time thinking about audience, metrics, culture, and journalism. In that period one thing has remained a constant: a broad sentiment that the metrics we have aren’t good enough. A few years ago at the first Newsgeist Europe, I stood up in defense of page views

The other side of this coin is the restless search for a better metric. Many people across the industry instinctively feel that our data isn’t complete without a number that is built around our higher ambitions, or that captures the quality of a piece or publisher in a way that Reach and Time Spent cannot.

This is entirely understandable, not least because there may well be practical applications for such a measure at the industry level. That’s why Frederic Filloux and my brilliant former colleagues, Graham Tackley and Matt McCallister, have spent so much time and effort attempting to capture concepts like

A key thing to question is the belief that, because one thing can be measured, another thing must be measured too. This principle can lead to vast expense, to aggregated metrics that obscure detail, or to proxies that misinform and result in terrible decisions across an organization. Language is often bent out of shape to bridge the abyss between realism and idealism. Beware naming metrics after the initial aspiration rather than the finished product. Think about, for example, what the term “Unique Users” implies.

The simplest example here is around Quality. If you work for an organization set up in good faith to produce quality journalism, along with all the processes, expertise, and structures that entails, do you actually require an internal metric? What damage might it do? If a piece fails to get a very large number of page views, there is no danger that anyone could imply it lacked quality. But what if your metric explicitly claimed to capture it? The question “should this be a metric?” needs to be asked before “how can we build this metric?”

Obviously, all metrics have the capacity to go wrong. Decent culture has to be established around any number to avoid warped behavior. So while page views have many decent qualities, if pursued unchecked and with no editorial constraint, you can end up with abysmal nonsense. That for me is a crucial, but separate, issue.

With a little help from the Twitter hivemind, I began trying to boil down the key qualities of a good metric. I’m thinking here of business-wide metrics that you’d expect an entire organization to be familiar with. (If you were working on short-term or very specific projects, you might relax around some of these characteristics.) When creating or evaluating a metric, I’d score each characteristic using a traffic-light system and be wary of any metric with a couple of red indicators, or with no green indicators.

The Five Characteristics of a Good Metric

Relevant

Does it align with core business goals? If you move the number, will it result in positive change?

Measurable

Is the value subjective? Can you define it? Is it possible to technically capture the data?

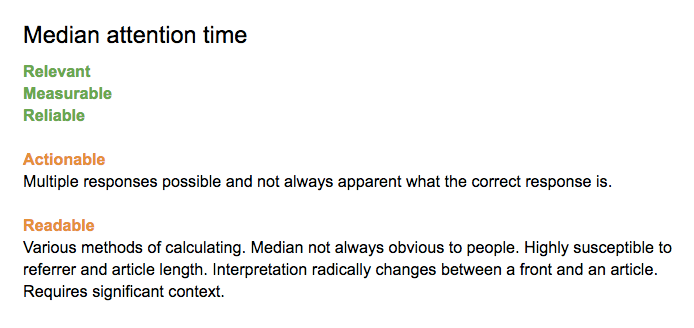

Actionable

Can you do something positive and impactful with the data?

Reliable

Is the metric technically robust? Will it be relevant in a year? Can it track progress over time?

Readable

Can it be easily misunderstood? Does it require a lot of context? Could it lead to bizarre behaviors? Does it suffer a lag that makes it difficult to evaluate?
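
As a rough illustration, the traffic-light scoring described earlier could be sketched in Python along the following lines. The names (`Light`, `CHARACTERISTICS`, `needs_caution`) and the example scores are hypothetical, and reading “a couple of red indicators” as two or more is an assumption rather than a rule stated here.

```python
from enum import Enum
from typing import Mapping


class Light(Enum):
    RED = "red"
    AMBER = "amber"
    GREEN = "green"


# The five characteristics, in the order they are listed in the article.
CHARACTERISTICS = ("relevant", "measurable", "actionable", "reliable", "readable")


def needs_caution(scores: Mapping[str, Light], red_limit: int = 2) -> bool:
    """Return True if a metric should be treated warily.

    Assumption: "a couple of red indicators" means red_limit (default 2)
    or more reds. A metric with no green indicators is also flagged.
    """
    missing = [name for name in CHARACTERISTICS if name not in scores]
    if missing:
        raise ValueError(f"missing scores for: {', '.join(missing)}")

    lights = [scores[name] for name in CHARACTERISTICS]
    reds = lights.count(Light.RED)
    greens = lights.count(Light.GREEN)
    return reds >= red_limit or greens == 0


# Hypothetical scores for an imaginary metric, invented purely for illustration.
example_scores = {
    "relevant": Light.GREEN,
    "measurable": Light.AMBER,
    "actionable": Light.GREEN,
    "reliable": Light.AMBER,
    "readable": Light.RED,
}

if __name__ == "__main__":
    print("Treat with caution:", needs_caution(example_scores))
```

The function only encodes the rule of thumb. The judgment sits in choosing each light, and that is a conversation for people rather than code.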

In practice, here’s how you might use the above scale to measure median attention time.

A Few Notes and Alternatives

Relevant

This wasn’t originally in my list, but Lucy Kueng, Martin Robbins and others all correctly pointed out the need for it.

Responsive

The excellent Phil Wills made this point: “sometimes metrics are great for taking stock of the situation, but lag changes significantly, which makes them much less helpful for evaluating experiments. Subscription churn would normally fail on this.” I really like this one and am currently trying to decide whether I could legitimately bundle it into Readable as above or whether it needs its own spot.

Readable

Both Anita Zielina and Nathan Good felt that Readable could lose its place on the list, on the grounds that it could fall into Actionable. Anita also felt that Communicable was a separate characteristic. I’ve kept Readable in there because I think it’s essential for a business-wide metric and it’s a little more particular than just Actionable.

Scalable/portable

Laura Oliver and Guardian Audience Editor Peter Martin made interesting points about how flexible a metric is across multiple applications, and about whether it can be used beyond a single organization. (One of the reasons I like page views is that they can be used to measure pure scale but also internal promotion, which is an interesting proxy for editorial value.) I think both these things make a metric much more attractive but possibly aren’t essential.

Progressive

Former Guardian CDO Tanya Cordrey made the case for the ability to “track progress and demonstrate improvement over time.” It’s a point worth underlining, but I’m hoping it’s already covered in the list by Reliable.

A Killer Question

The FT’s Robin Kwong has a great tip for anyone thinking about a new metric and the culture it requires around it: Ask yourself how you’d game it. And on a similar theme, David Bauer invokes the always relevant Goodhart’s Law, commonly paraphrased as: when a measure becomes a target, it ceases to be a good measure.