The Future is Quantum

The intelligence community doesn’t have to worry about such threats yet, however. Since quantum physicists began working in earnest to try and create a quantum computer in the late 1990s, they have yet to build anything approaching a working machine, never mind running anything as complex as Grover’s or Shor’s algorithms at scale. That’s due to one simple reason: to create an effective quantum computer, it’s necessary to isolate qubits from the outside environment enough so that interactions with the outside world don’t create errors, a concept known as coherence. At the same time, they have to be sufficiently connected to the environment that they can be manipulated into performing the operations required of them. That combination has proven extremely difficult, especially as engineers have tried to scale up from two qubits to 5 or 10 or 100.

“Even five or six years ago, it wasn’t clear we’d ever be able to make a useable system,” says Robert Schoelkopf, a Yale physicist who has been experimenting to create quantum computers since 1998. “In the beginning, the question was if we would ever be able to make a qubit well enough and isolated from the environment enough that we could have superconductors that we could manipulate with some reasonable fidelity.”

Currently, two leading contenders for quantum computing technology are superconducting circuits and trapped ions, both of which have their benefits and drawbacks. Superconducting circuit qubits, like those in Oliver’s lab at MIT, use the same basic techniques as those used to create modern computer chips, using semiconductor manufacturing tools to deposit, pattern, and etch superconducting aluminum on silicon wafers. For the qubit, two layers of superconducting aluminum are separated by a layer of insulating aluminum oxide; through that, a tiny tunnel called a “Josephson junction” allows millions of electron pairs to flow without resistance, creating quantum states and discrete energy levels just like a giant atom.

By cooling the qubit down to extremely low temperatures, engineers can control the frequencies at which this state resonates to create two phases, which then become the 0 and 1. By hitting the qubit with a microwave pulse, they can drive the transition from one state to another, or leave it in superposition between them. The problem is that, thus far, the circuits can only remain in superposition for relatively short periods of time, currently around 100 microseconds. “We want to make that lifetime as long as possible compared to how long it takes to drive from ground to excited states,” says Oliver. “The question is how many of these gates can I perform before I lose that quantum information.” Current times are long enough to perform 1,000 to 10,000 gates depending on the complexity of the operation, a tremendous improvement over the past 15 years, but not yet long enough to do anything useful.

The other leading form of qubit today is the trapped ion: a charged particle suspended in vacuum by an electromagnetic field and hit with laser pulses to drive its different states. Compared to superconducting qubits, trapped ions are able to maintain their coherence longer, up to a leisurely second or more, but they are also much slower to respond to pulses from the outside environment, so the total number of gates possible with both technologies is about the same.
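The trade-off between coherence time and gate speed comes down to a single division. The sketch below takes the coherence times from the text (about 100 microseconds and about one second); the gate durations are illustrative assumptions chosen only to show why the two technologies end up with similar budgets, not measured figures for any device.

```python
def gate_budget(coherence_time_s: float, gate_time_s: float) -> int:
    """Rough count of sequential gates that fit inside one coherence window."""
    return int(round(coherence_time_s / gate_time_s))


# Coherence times are from the article; gate durations are assumed for illustration.
superconducting = gate_budget(coherence_time_s=100e-6, gate_time_s=50e-9)
trapped_ion = gate_budget(coherence_time_s=1.0, gate_time_s=200e-6)

print("superconducting gates per coherence window:", superconducting)
print("trapped-ion gates per coherence window:", trapped_ion)
```

Under these assumed gate times, both results fall in the range of a few thousand gates, even though the underlying timescales differ by about four orders of magnitude.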

Superconducting qubits and trapped ions have other differences as well. With trapped ions, for example, it’s possible to entangle any qubit with any other in the same trap. With superconducting qubits, however, qubits must each be discretely connected to each other in order to allow entanglement. In a linear architecture, for example, each qubit could only be entangled with its immediate neighbor, while in a square lattice, each could be connected perpendicularly or diagonally with multiple other qubits. Oliver’s lab has been further experimenting with a three-dimensional architecture that could create connections between qubits that are not immediately adjacent.
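One way to picture these connectivity differences is to count which pairs of qubits can be entangled directly in each layout. The following is a minimal sketch; the function names and the nine-qubit example are hypothetical and do not describe any particular chip.

```python
from itertools import combinations


def linear_chain(n):
    """Each qubit couples only to its immediate neighbor."""
    return {(i, i + 1) for i in range(n - 1)}


def square_lattice(rows, cols, diagonals=False):
    """Nearest-neighbor couplings on a grid, optionally with diagonal links."""
    edges = set()
    for r in range(rows):
        for c in range(cols):
            q = r * cols + c
            if c + 1 < cols:
                edges.add((q, q + 1))             # right neighbor
            if r + 1 < rows:
                edges.add((q, q + cols))          # neighbor below
                if diagonals and c + 1 < cols:
                    edges.add((q, q + cols + 1))  # lower-right diagonal
                if diagonals and c > 0:
                    edges.add((q, q + cols - 1))  # lower-left diagonal
    return edges


def all_to_all(n):
    """Any qubit can pair with any other, as with ions sharing one trap."""
    return set(combinations(range(n), 2))


layouts = {
    "linear chain": linear_chain(9),
    "3x3 lattice": square_lattice(3, 3),
    "3x3 lattice with diagonals": square_lattice(3, 3, diagonals=True),
    "all-to-all": all_to_all(9),
}
for name, edges in layouts.items():
    print(f"{name}: {len(edges)} directly connected pairs")
```

The fewer direct pairs a layout offers, the more extra operations an algorithm needs to bring distant qubits together, which is part of why connectivity matters when matching a problem to hardware.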

Behind those technologies are a number of other techniques for creating qubits that may one day show promise or even exceed the abilities of superconductors and trapped ions. After all, as MIT physicist Seth Lloyd once said, since the natural state of the world is quantum, “Almost anything becomes a quantum computer if you shine the right kind of light on it.” So far, other contenders include: neutral atoms, single atoms suspended in a lattice that can be hit by lasers to excite them to a quantum state without affecting nearby atoms; photons, quanta of light emitted by atoms that can be redirected to interact with themselves with mirrors; quantum dots, consisting of “puddles” of electrons on the nanoscale, each with its own quantum spin; and nitrogen-vacancy centers in diamond, which exploit natural imperfections in diamonds that create free-floating spare electrons that can be manipulated into a quantum state. Another dark horse technology is the topological qubit, theorized by Alexei Kitaev at Caltech, which depends on a theoretical quasiparticle called a Majorana fermion. The advantage of these qubits is that they are theoretically much more stable than other kinds; the disadvantage is that to date, no one has successfully demonstrated that such particles exist.

Each of these technologies has its own advantages and disadvantages — some are faster, for example, while others are less error-prone, though none of them are so far ideal. “These days, it’s hard to pick a winner,” says Savoie. “You know, which horse do you want? Well, that one’s slow and gimpy, but this one’s got a sore on its heel. They are all kind of bad.” No matter what technology is used, creating a functional quantum computer requires simultaneously making qubits faster and protecting them from the errors that can cause decoherence in order to maintain their superposition states longer. Errors can result from two sources: control errors and decoherence. A control error is analogous to setting the radio dial slightly off from a station, creating a bit of static. With superconducting qubits, for example, each one will be slightly different than the others, and they must be carefully calibrated in order to respond to the microwave pulses that control their gates.

Decoherence errors are a bit more insidious — the same entanglement that allows qubits to connect with one another can backfire when they become entangled with other atoms in the environment instead. “There is something that’s called the monogamy of entanglement,” says Peter Johnson, a quantum theorist at Harvard who is also part of the founding team of Zapata. “If I am wed to you, I can’t be wed to someone else.” In order to protect against such promiscuity, quantum engineers follow one of two methods. Passive error suppression consists of sending small pulses to dynamically decouple qubits from the environment. Oliver likens it to the way lacrosse players cradle the ball in the stick pocket, moving it rhythmically back and forth as they run down the field. “If you just held the stick, the ball would fall out,” he says. “By making these body motions periodically, it will stay in longer.”
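The simplest version of this pulse-based decoupling is a single "echo" pulse halfway through the computation, which reverses the phase error a qubit has picked up so that the second half of the evolution undoes the first. The toy model below is a sketch under strong assumptions: the environment is reduced to a random frequency offset that drifts only slightly between the two halves, and all parameter values are invented for illustration.

```python
import cmath
import random


def coherence(n_runs=2000, total_time=1.0, noise_sigma=3.0,
              drift_sigma=0.3, echo=False, seed=0):
    """Average phase coherence (1 = perfect, 0 = fully scrambled) over many runs."""
    rng = random.Random(seed)
    half = total_time / 2
    total = 0j
    for _ in range(n_runs):
        # Assumed noise model: a random offset, nearly constant within one run.
        offset_first = rng.gauss(0.0, noise_sigma)
        offset_second = offset_first + rng.gauss(0.0, drift_sigma)
        phase = offset_first * half
        if echo:
            phase = -phase  # the mid-point pulse flips the sign of the accumulated phase
        phase += offset_second * half
        total += cmath.exp(1j * phase)
    return abs(total) / n_runs


print("without echo pulse:", round(coherence(echo=False), 3))
print("with echo pulse:   ", round(coherence(echo=True), 3))
```

In this model the echo cancels the slowly varying part of the noise and leaves only the small drift, so coherence stays close to 1 with the pulse and collapses without it. Real decoupling sequences use many pulses and face faster noise, so the benefit in practice is more limited.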

Active error correction, meanwhile, focuses on flooding the zone with so many qubits that they will together compensate for decoherence in any small number of them. “You are kind of hiding the information from the environment,” says Johnson, who poses the following analogy: “Say I wanted to tell you whether the Celtics won last night, and I am going to do this by handing you a penny that is either heads or tails. But there is a 10 percent chance of the coin being flipped.” One way to protect against that problem would be to send three pennies instead of one, so even if one penny came out tails, you could still be relatively sure from the two heads that Boston won the game. Extrapolated out beyond three pennies, it’s easy to see how each additional penny you received would increase your confidence until you got to the point where you were all but certain of the outcome. Unfortunately, achieving that kind of error correction with a 100-qubit computer would require on the order of 100,000 or a million qubits just to keep errors in check. “That gets costly very quickly,” says Johnson. “A million pennies is $10,000.” Of course, when talking about qubits rather than pennies, that cost gets astronomically larger.
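Johnson’s penny analogy is a classical repetition code decoded by majority vote, and its failure rate can be worked out exactly. The sketch below assumes each penny flips independently with the 10 percent probability from the analogy; the function name is hypothetical. It covers only the classical picture: real quantum error correction cannot simply copy a qubit and needs more elaborate encodings, which is part of why the overhead is so large.

```python
from math import comb


def majority_error(p_flip: float, n_coins: int) -> float:
    """Probability that more than half of n independent coins were flipped."""
    first_majority = n_coins // 2 + 1
    return sum(
        comb(n_coins, k) * p_flip**k * (1 - p_flip) ** (n_coins - k)
        for k in range(first_majority, n_coins + 1)
    )


# Odd counts only, so a majority always exists.
for n in (1, 3, 5, 7):
    print(f"{n} pennies: chance of reading the wrong result = {majority_error(0.10, n):.5f}")
```

With three pennies, the chance of being misled drops from 10 percent to 2.8 percent (3 × 0.1² × 0.9 + 0.1³ = 0.028), and it keeps shrinking with each pair of pennies added, though it never reaches exactly zero.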

In the short term, most companies working towards creating a quantum computer are focusing just on putting enough qubits together into a single device in order to actually do simple computations. Due to the substantial costs of fabrication, companies have had to choose one technology. By far, the most popular is superconducting qubits, which is the method of choice for IBM, Google, and Intel, who can leverage their existing expertise in lithographic tools for silicon in a new superconducting medium. Several startups are also experimenting with superconducting qubits, including Rigetti Computing, based in Berkeley, California, and Quantum Circuits, spun out of Schoelkopf’s Yale lab. Maryland-based startup IonQ, meanwhile, is pursuing a trapped ion approach, while Microsoft has placed a bet on topological qubits.

All of these companies are engaged in a race to see who can put the most qubits together into a functioning chip in order to be the first to achieve the quantum supremacy moment that can demonstrate a quantum computer that can best its classical cousins. At the present moment, Google is leading that race with the announcement in March of a 72-qubit processor it dubbed Bristlecone after the prickly distribution of x-shaped qubits in its square array. In its announcement of the technology, Google said that it believed that it could use a processor of this size to demonstrate quantum supremacy.

Of course, that feat requires performing calculations with a low enough degree of errors. “The critical piece is not just the number of qubits, it’s also the fidelity of the system,” says Google researcher Sergio Boixo, a former physics professor at the University of Southern California. While Google hasn’t released the error rates for its current chip, past chips have had rates as low as 0.6 percent for 2-qubit gates, a critical measure of fidelity. Boixo notes that Google has focused its efforts on increasing the number of qubits while maintaining the current rates of fidelity, and eventually even increasing gate fidelity. The company estimates that it will need error rates of less than 0.5 percent for 2-qubit gates to achieve quantum supremacy, and recently updated its timeline to predict it would achieve that supremacy sometime this year. IBM and Intel are not far behind, unveiling 50-qubit and 49-qubit chips respectively at January’s Consumer Electronics Show in Las Vegas. With a low enough error rate, those chips could also achieve quantum supremacy in the near term.

The best way for a quantum computer to demonstrate supremacy, of course, would be to model a quantum system. Even the most basic chemical reactions, however, are beyond the scope of the noisy, non-error-corrected devices any of the companies will be able to produce in the near future. In a paper published in April in Nature Physics, Boixo proposed that one way to demonstrate quantum supremacy would be to calculate the outcome of a sampling of random quantum circuits, a task notoriously difficult for classical computers to achieve.

Such a task, while certainly a milestone, would be a far cry from a universal quantum computer that could run useful algorithms such as Grover’s and Shor’s algorithms or simulate quantum systems for use in chemistry and biology. For that reason, many quantum researchers have moved away from using the term quantum supremacy as the indicator for achieving success in the field. Even Caltech physicist John Preskill, who first coined the term quantum supremacy in 2011, has tried to downplay the hype, writing in a paper published in January that “quantum supremacy is a worthy goal, notable for entrepreneurs and investors not so much because of its intrinsic importance, but rather as a sign of progress towards more valuable applications further down the road.” In his paper, Preskill proposed a new term, NISQ, or “noisy intermediate-scale quantum,” to define the stage in which systems with 50 to 100 qubits might still be able to perform useful tasks better than classical computers, even without error correction.

That’s been the focus of chemistry professor Alán Aspuru-Guzik, who is currently at Harvard but moving to the University of Toronto this summer. His office is full of Shepard Fairey graphic prints of revolutionaries in red and black. “I like these images of these heroes doing something for humanity,” he says. “I want to revolutionize history through computing.” After watching his postdocs and graduate students leave one by one for the likes of Google and Intel, he decided to start his own quantum computing company last summer, sketching out the company “over a couple of burritos at the Qdoba in Harvard Square.” After casting around for a sufficiently revolutionary name, he settled on Zapata, after Emiliano Zapata, the peasant leader during the Mexican revolution. “He was a good guy, and he had a beautiful moustache,” he says.

Unlike most quantum companies, which have focused on building quantum computing hardware, Zapata has focused on what might be considered software: the algorithms that drive quantum computing calculations. Before the creation of a universal error-corrected quantum computer powerful enough to run algorithms like Grover’s and Shor’s, Aspuru-Guzik says, there will be a long period in which quantum computers can still run useful programs. “We already know the algorithms for a million qubits,” he says. “My company’s job is to figure out what are the algorithms for these decades of 100, 1,000, and 10,000 qubits.” Zapata is looking past the point of quantum supremacy (which Aspuru-Guzik prefers to call the “quantum inflection point,” because “the word supremacy is loaded politically”) to how quantum computing can best classical computing in the NISQ era. “The interesting point is what happens between 200 and a million qubits. What’s the first point that it will do a quantum task that is useful for humanity?”

Ultimately, Zapata aims to focus on chemistry, which Aspuru-Guzik and Peter Love identified more than a decade ago as the ultimate quantum problem, helping to design algorithms that might better identify catalysts for chemical processes or candidate molecules and materials. In the meantime, however, he says quantum algorithms will be able to aid in any problem that will require a more optimal answer, even if it can’t ultimately provide the best answer. Those problems could include machine learning, compression, route optimization, and searching. He calls such algorithms “variational algorithms” and compares them to tuning a guitar string with the help of a tuning fork. “The tuner is what I want the quantum algorithm to do, but I can use the algorithm to get the strings of a chord closer to the notes I want,” he says.

Zapata’s CEO Chris Savoie compares this stage in quantum software development to the early years of classical computing, even before the invention of assembly language, never mind modern computer languages like C. At this point, the company is literally sketching out its algorithms as complex circuits, and sending those to companies it partners with in order to perform certain tasks. “So the program software is a soft piece of paper for now,” he quips. So far, Savoie estimates, Zapata has been involved in a large majority of the near-term algorithms currently being tested on quantum computers, partnering with all of the major companies to run algorithms on their systems. Being flexible about which technology it uses allows Zapata to pick the configuration of entangled qubits best suited to each task. “We might go to a customer like a pharmaceutical company, and they say, here’s the mathematical problem we’re trying to solve,” he says, “and we take that and draw a bunch of Greek letters on the board, and then convert that into a diagram. Then we might say, oh wow, I need linear connectivity for this thing, or I need a lot of samples, so I need faster gate speeds, and we’ll make that choice.”

In various ways, other companies are developing their own approaches for making quantum computing useful in the near term. Schoelkopf, who created his own company Quantum Circuits out of his lab at Yale, is pursuing a strategy of integrating both hardware and software in one package. “We plan to field the first useful quantum computing systems,” he says. “It’s likely this will be offered as a service, since these things aren’t very portable for now.” His lab has been pursuing a unique modular architecture, which would put qubits on smaller chips that could be networked into a system in different configurations depending on what type of problem a client is trying to solve. “It means you could probably be more efficient in implementing certain algorithms, because you are not stuck to one network of connectivity,” he says.

In addition to its own success in fabricating quantum chips, IBM has focused efforts on educating the public on the potential of quantum computers, to prepare them for the eventual moment when the machines will be able to perform useful functions. Recently, it launched the Q Experience, a cloud-based interface in which users can use a quantum composer, which looks like guitar tablature, to drag and drop gates onto qubits to perform algorithms on real quantum computing hardware. “It allows us to reach a broader community of students, researchers, and just people who are interested in learning about new computing technology,” says Jerry Chow, IBM’s manager of experimental quantum computing. “The idea is to break down barriers, so you are not the only person at the cocktail party who understands what quantum computing is.”

IBM’s website also features video tutorials by its engineers breaking down quantum computing concepts into bite-sized 3-minute lessons to demystify the field. “We call that getting ‘quantum ready,’” Chow says. Already, the company has gotten a lot of interest from college and even high school students eager to learn about the new technology. For more advanced users, IBM has begun to develop its own programming toolkit for quantum computing called QISKit, open-source software that uses Python scripts to execute algorithms on quantum hardware. “One of the most important things we can do in the near term is get more people involved,” Chow says. “It’s really tapping into the developer mindset, where we want to cultivate the next generation of quantum developers.”

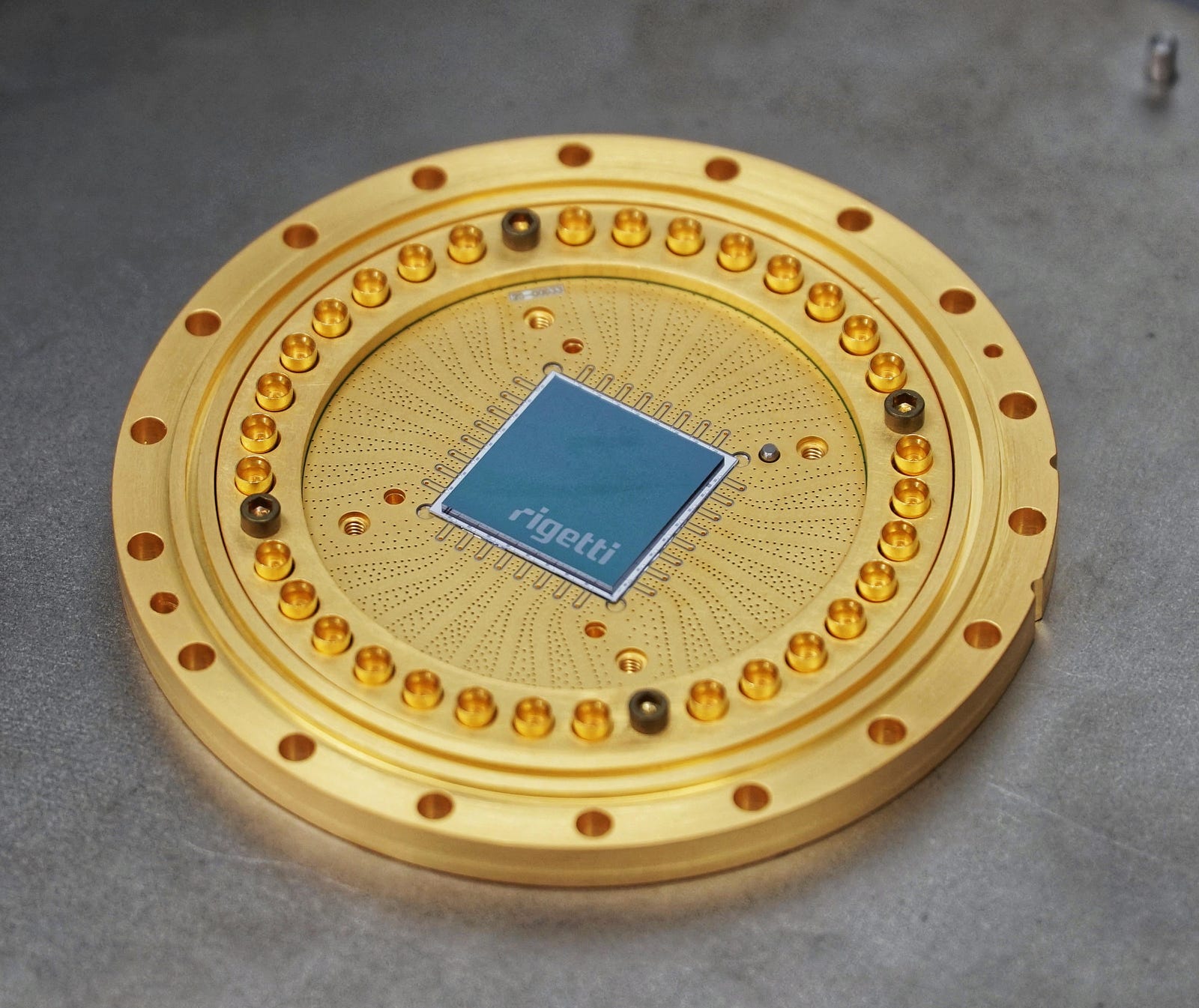

Berkeley-based start-up Rigetti has been building its own “soup-to-nuts” quantum company from the ground up, developing chips, computers, and software at the same time. The company has focused on speed, building a quantum integrated circuit fabrication lab that can iterate a new design on a 4–6 week timeline, rather than the 12–18 month timeline that might be typical to build a new quantum chip. “Since we first started manufacturing chips in January 2016, we’ve doubled the number of qubits every six months, and we expect to keep doubling every 6 months for a handful of years,” says CEO Chad Rigetti. Currently, Rigetti’s biggest publicly released chip has 19 qubits, though he says the company is already a doubling or two beyond that and has not yet disclosed exactly how many.

Like IBM, Rigetti has created its own cloud-based programming interface, which it calls Forest, similarly open-source software that uses Python scripts. Rather than focus solely on the quantum environment, however, Rigetti’s language allows for the integration of quantum and classical computers in the same program, something the company calls “quantum-classical hybrid computing.” “It uses a quantum computer as part of an optimization loop on a classical machine,” Rigetti explains. Rather than quantum supremacy, he likes to use the term “quantum advantage” to explain how, in the near term at least, quantum computing can help to improve solutions to problems by providing an answer that is more efficient, or takes less time, or costs less money, than a classical computer can do alone.
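The shape of such a hybrid loop can be sketched in a few lines. The example below does not use Forest or any real quantum SDK: the function `measure_energy` is a hypothetical stand-in that classically simulates a one-qubit circuit (a rotation by `theta` followed by a measurement), and the classical side is plain gradient descent. On real hardware, each call would instead run the circuit many times and average the noisy results.

```python
import math


def measure_energy(theta: float) -> float:
    """Stand-in for the quantum processor.

    Returns the average Z measurement after rotating a qubit from |0> by
    angle theta about the Y axis, which is exactly cos(theta).
    """
    return math.cos(theta)


def optimize(theta=0.1, learning_rate=0.4, steps=100):
    """Classical loop that tunes the circuit parameter to lower the energy."""
    for _ in range(steps):
        # Parameter-shift rule: for this rotation gate, the gradient follows
        # from two extra circuit evaluations at theta +/- pi/2.
        gradient = 0.5 * (
            measure_energy(theta + math.pi / 2) - measure_energy(theta - math.pi / 2)
        )
        theta -= learning_rate * gradient
    return theta, measure_energy(theta)


theta, energy = optimize()
print(f"tuned angle: {theta:.4f} rad, energy: {energy:.4f}")
```

The classical optimizer never looks inside the quantum state; it only proposes a parameter, reads back a number, and adjusts. In this toy case the loop settles at an angle of π, where the energy reaches its minimum of −1.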

Until engineers can truly build a quantum computer that is free enough from errors to perform the advanced calculations, this may be the future — a convergence of increasingly better quantum computers and increasingly better algorithms to perform more and more useful tasks. The real race may not be between quantum and classical computers, after all, but between quantum hardware and quantum software. “Quantum chips are going to continue to improve at an exponential rate, and pretty soon the physical capability is going to outstrip what we know how to take advantage of,” Rigetti says. “There will be huge value in capturing the right algorithms to unlock these possibilities.”