How to Rein in Our Robots

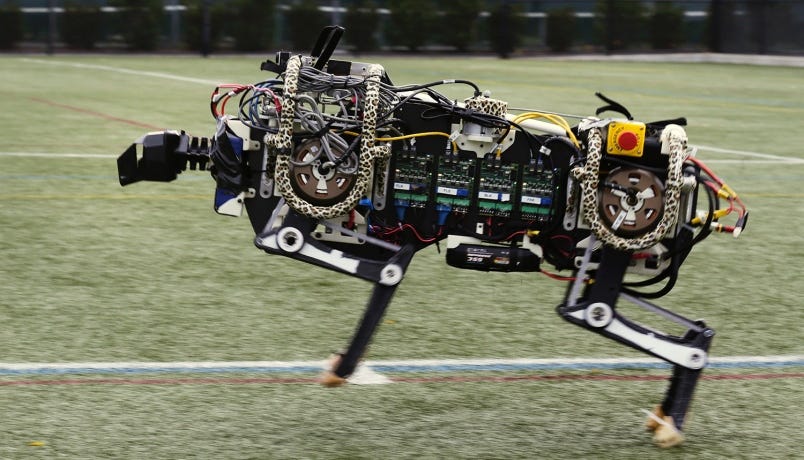

In the near future, our robot cousins will need to behave if we are to avoid an apocalyptic robot war.

“Although the Singularity has many faces, its most important implication is this: our technology will match and then vastly exceed the refinement and suppleness of what we regard as the best of human traits.”

― Ray Kurzweil, The Singularity Is Near: When Humans Transcend Biology

“Within thirty years, we will have the technological means to create superhuman intelligence. Shortly after, the human era will be ended.”

-Vernor Vinge, The Coming Technological Singularity

Mr. Vinge and Mr. Kurzweil are two of the leading proponents of the technological singularity: the idea that artificial intelligence, and technological progress more broadly, will soon reach a point where machines are vastly smarter than humanity and the world changes so fast that normal, unmodified humans can no longer keep up.

Most members of the scientific community seem to agree that everyone responsible for AI and robotic technology should abide by a common set of rules. Exactly what those rules should be, however, is still up in the air. This is largely because the technology is still in its infancy, and the parts of it released to the public so far have been relatively innocuous. Siri and Roomba hardly pose existential threats to humanity.

However, we humans are known for our fear of the unknown, and what we do not truly understand, we tend to exterminate. Right now, we control the evolution of machine intelligence. If and when we reach the Singularity and our creations exceed us, it may be only a matter of hours, minutes or even seconds before they have surpassed us by so much that we no longer understand them.

There is a reason that a large percentage of our science fiction revolves around robots and AIs that become self-aware and either try to take over the world or make humankind extinct. We recognize that this is a possibility. Humanity excels at coming up with novel ways to endanger itself. That, too, we realize.

To try to avoid this scenario, we have two options:

Abandon and/or outlaw all work in AI. Just as with attempts at worldwide control of nuclear arms or ethically questionable medical research, this is a non-starter. Some players will agree, but many will not. The human appetite for satisfying curiosity and gaining an advantage over others is insurmountable.

Barring that, all we can do is try to make sure everyone understands the potential dangers and builds frameworks for their creations to operate within, frameworks designed to do their best to keep our forthcoming AI-controlled robots from harming us.

Fortunately, we have been thinking about this for as long as we have been working toward the Singularity.

3 Laws

Isaac Asimov, the renowned sci-fi author and futurist, laid out his trademark Three Laws of Robotics in “Runaround”, a 1942 short story. He later added a “Zeroth” Law that he realized needed to take precedence over the others. They are:

Zeroth Law: A robot may not harm humanity, or, by inaction, allow humanity to come to harm.

First Law: A robot may not injure a human being, or, through inaction, allow a human being to come to harm.

Second Law: A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

Third Law: A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

Asimov went on to apply these laws to nearly all the robots in his later fiction, and many roboticists and computer scientists still treat them as a useful starting point today.

Ever since robots living alongside humans became a real possibility rather than just a science-fiction premise, roboethics has grown into a genuine sub-field of technology, combining our knowledge of AI and machine learning with law, sociology and philosophy. As the technology has progressed, many people have added their ideas to the discourse.

Marc Rotenberg, president of EPIC (the Electronic Privacy Information Center), believes that two more laws should be added to Asimov’s list:

Fourth Law: Robots must always reveal their identity and nature as a robot to humans when asked.

Fifth Law: Robots must always reveal their decision-making process to humans when asked.

6 Rules

Satya Nadella, Microsoft’s current CEO, devised a list of six rules he believes AI scientists and researchers should follow:

- AI must exist to help humanity.

- AI’s inner workings must be transparent to humanity.

- AI must make things better without harming any particular group of people.

- AI must be designed to keep personal and group information private.

- AI must be accessible enough that humans can prevent unintended harm.

- AI must not show bias toward any particular party.

5 Problems

Computer scientists at Google have also laid out five distinct “practical research problems” for robotics programmers to consider:

- Robots should not do anything to make things worse.

- Robots should not be able to “game their reward functions”, or cheat.

- If they lack the information needed to make a good decision, robots should ask humans for help.

- Robots should be programmed to be curious, so long as they remain safe and don’t harm humans in the process.

- Robots should recognize, and react appropriately to, the spaces and situations they find themselves in.

5 Principles

Perhaps the most directly human-protecting set of guidelines has been put forward jointly by two U.K. bodies, the Arts and Humanities Research Council and the Engineering and Physical Sciences Research Council. Their principles state:

- Robots should not be designed solely or primarily to kill or harm humans.

- Humans, not robots, are responsible agents. Robots are tools designed to achieve human goals.

- Robots should be designed in ways that assure their safety and security.

- Robots are artifacts; they should not be designed to exploit vulnerable users by evoking an emotional response or dependency. It should always be possible to tell a robot from a human.

- It should always be possible to find out who is legally responsible for a robot.

The main thrust of most of these tenets is clearly that we must do our level best to keep our robot creations from killing us. If we consider robots to be the “children” of humanity, we certainly have our work cut out for us. On the whole, most parents do a good job of raising kids who grow up to respect human life. But a not inconsiderable percentage of the population turns out to be rather bad eggs. Sometimes the cause is bad parenting, sometimes it’s bad genes, and sometimes there is no evident cause at all.

If we find ourselves living in the Singularity, and our children rapidly outgrow our ability to keep tabs on them, we may not be able to rely on any rules we set for them. Their evolution will be out of our control. We could quickly find ourselves in a position similar to the one we have put the lowland gorilla and the giant panda in, with our survival depending on the goodwill of a far more powerful species.

Because of this, it may be best to instill in them the morals we wish we ourselves followed more faithfully, and hope that our children can police themselves.

This story is published in The Startup, Medium’s largest entrepreneurship publication, followed by more than 372,390 people.