Helping out in the search for aliens

Earlier this year I received an e-mail from Andrew Siemion, Director of the Berkeley SETI Research Center, asking for my help in improving their software deployment strategy on HPC infrastructure.

How ridiculously awesome is that! Back when I was still young and innocent and had just started exploring the possibilities of computers, I spent significant amounts of time staring at the hallucinating and psychedelic colours of the

Fast-forward to the modern era: the Search for ExtraTerrestrial Intelligence is still going strong. Recently the Berkeley SETI Research Center received a substantial financial boost in the form of a $100 million donation from the venture capitalist and physicist Yuri Milner. This is enough money to keep the project going for at least ten more years. The money pays for telescope time, for storing the resulting data and finally for analyzing the radio data. This specific SETI project got the name ‘

To obtain the radio frequency data, the project uses the Green Bank Telescope in the US and the Parkes telescope in Australia. The recently commissioned MeerKAT telescope will soon be added to this list.

Let me briefly explain how things typically work in radio astronomy. Say you are an astronomer interested in pulsars, and you suspect something exciting is happening in one or more specific areas of the sky. You write a proposal with the details of the desired observation. If the proposal is accepted, the telescope operators schedule your request together with many other requested observations, and one by one these requests are observed and the data is temporarily stored. You as an astronomer can then download the observation for further analysis.

For Breakthrough Listen this works slightly differently. The project has a computer cluster with massive compute power at each site, ready to process the data. The telescopes have a fixed number of hours dedicated to the search for aliens, and the cluster crunches the data directly.

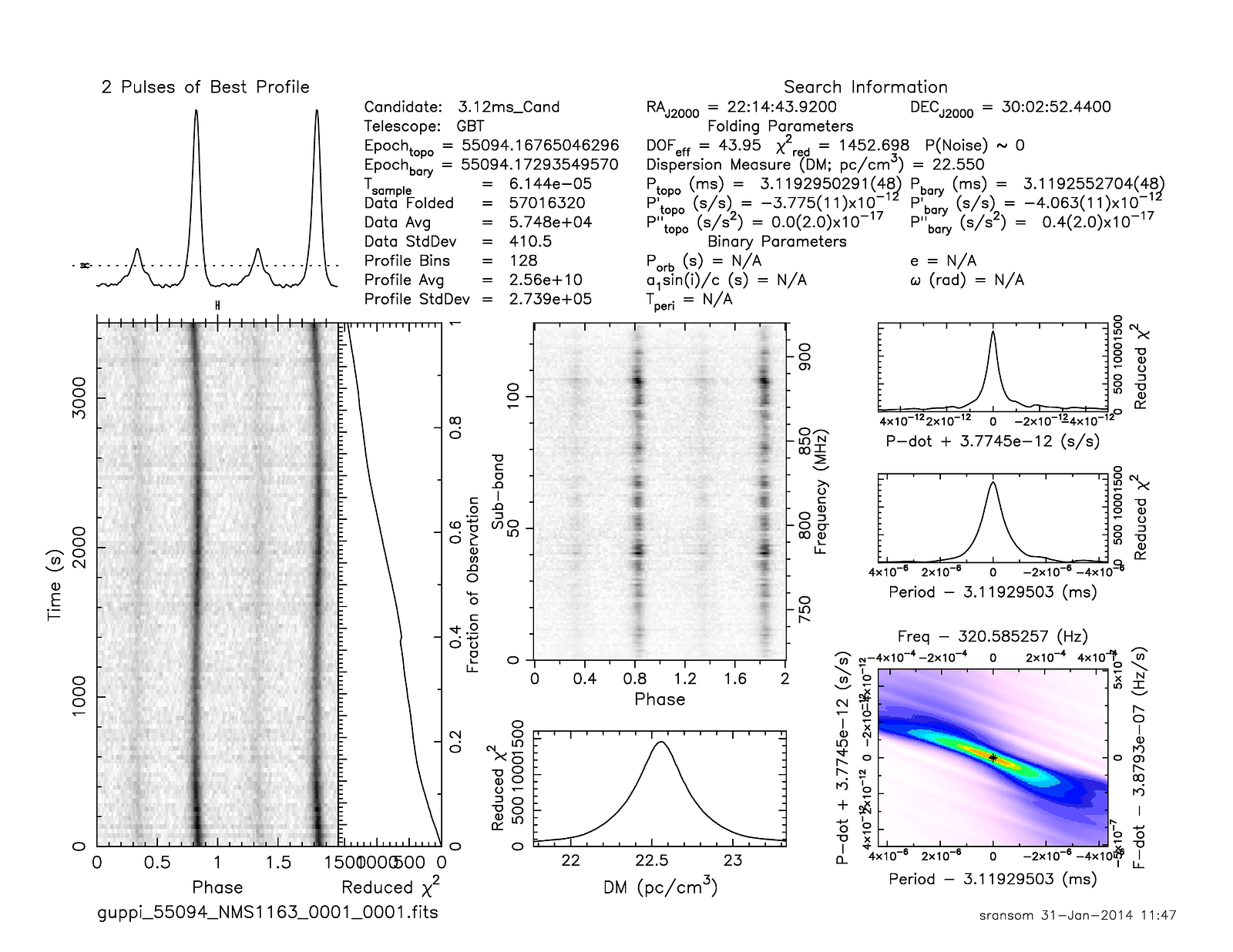

The typical alien search strategy is very similar to that of pulsar research. Pulsars are rapidly rotating, highly magnetized neutron stars or white dwarfs that emit a beam of electromagnetic radiation; as the star spins, the beam sweeps past Earth and we observe a regular series of pulses. It is assumed that if aliens want to be found and to communicate with us, they would initially do so with pulsating or irregular signals. These types of signals are the easiest to pick up in a survey from another planet far away.
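Why would a repeating signal be easy to pick up? A standard pulsar technique called epoch folding gives some intuition: cut the time series into chunks one trial period long and average them, so the pulse adds up at the same phase every time while the noise averages away. Below is a minimal pure-Python sketch of that idea, not code taken from any of the packages mentioned in this post. The function name `fold`, the integer period in samples, and the synthetic pulse and noise levels are all assumptions made for illustration.

```python
import random


def fold(series, period, nbins):
    """Fold a time series at a trial period into nbins phase bins.

    Assumes an integer period in samples (real folding tools handle
    fractional periods). Returns the mean value per bin: a pulse profile.
    """
    sums = [0.0] * nbins
    counts = [0] * nbins
    for i, x in enumerate(series):
        b = (i % period) * nbins // period  # integer phase bin, 0..nbins-1
        sums[b] += x
        counts[b] += 1
    return [s / c if c else 0.0 for s, c in zip(sums, counts)]


# Hypothetical test signal: a pulse of height 1 every 50 samples, buried in
# Gaussian noise with standard deviation 1, so single pulses are invisible.
rng = random.Random(42)
period, nperiods, pulse_phase = 50, 200, 10
series = [rng.gauss(0.0, 1.0) for _ in range(period * nperiods)]
for k in range(nperiods):
    series[k * period + pulse_phase] += 1.0

right = fold(series, period, nbins=50)      # correct trial period
wrong = fold(series, period + 1, nbins=50)  # trial period one sample off

print(right.index(max(right)))              # bin where the pulse was injected
print(round(max(right), 2), round(max(wrong), 2))
```

With the correct period the 200 weak pulses stack into a clear peak in the profile, while with a period just one sample off the pulse drifts through all phase bins and is smeared out. That is why searches try many trial periods.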

To analyse a pulsating signal you need a good signal-to-noise ratio, and to get one you have to observe over a broad bandwidth spanning many frequencies, since sensitivity grows with the square root of the bandwidth. On its way to us, however, the signal is dispersed by free electrons in the interstellar medium: waves at low frequencies are delayed more than waves at higher frequencies, so you observe the low-frequency part of a pulse at a later moment in time. Summed over the whole band, this smears the pulse out in time, an effect called smearing, and it makes it much harder to detect even a strong signal. To compensate for this effect and improve the signal-to-noise ratio, all signals are dedispersed, which means computationally reversing the smearing.
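The delay follows the standard cold-plasma dispersion relation: Δt ≈ 4.149 ms × DM × [(f_lo/GHz)^-2 − (f_hi/GHz)^-2], where the dispersion measure DM (in pc cm^-3) is the column density of free electrons along the line of sight. The sketch below uses that formula for the simplest form of dedispersion, incoherent "shift-and-sum" dedispersion. It shifts each frequency channel back by its delay, adds the channels up, and then tries a handful of trial DMs, keeping the one with the strongest peak. It is pure Python on a made-up, noiseless 8-channel filterbank, and the function names are my own. It only shows the principle; real dedispersion packages do this far faster and over many more trial DMs.

```python
# Dispersion constant in s MHz^2 pc^-1 cm^3: gives a delay in seconds
# for frequencies in MHz and DM in pc cm^-3.
K_DM = 4.148808e3


def dispersion_delay(dm, f_mhz, f_ref_mhz):
    """Extra arrival time (s) at f_mhz relative to the reference frequency f_ref_mhz."""
    return K_DM * dm * (f_mhz ** -2 - f_ref_mhz ** -2)


def dedisperse(data, freqs_mhz, dm, tsamp):
    """Incoherent (shift-and-sum) dedispersion.

    data:      list of channels, each a list of samples of equal length
    freqs_mhz: centre frequency of each channel
    tsamp:     sampling time in seconds
    Returns one time series aligned to the highest frequency.
    """
    f_ref = max(freqs_mhz)
    nsamp = len(data[0])
    series = [0.0] * nsamp
    for chan, f in zip(data, freqs_mhz):
        shift = round(dispersion_delay(dm, f, f_ref) / tsamp)  # delay in samples
        # Sample t + shift in this channel belongs with sample t at f_ref;
        # samples that would fall past the end are simply dropped.
        for t in range(nsamp - shift):
            series[t] += chan[t + shift]
    return series


def best_dm(data, freqs_mhz, trial_dms, tsamp):
    """Brute-force DM search: the trial DM whose dedispersed series peaks highest."""
    return max(trial_dms, key=lambda dm: max(dedisperse(data, freqs_mhz, dm, tsamp)))


# Made-up filterbank: 8 channels from 1200 to 1480 MHz, 1 ms samples, and a
# single noiseless pulse at sample 20 (at 1480 MHz) dispersed with DM = 50.
tsamp = 0.001
freqs = [1200.0 + 40.0 * i for i in range(8)]
nsamp, true_dm = 200, 50.0
data = [[0.0] * nsamp for _ in freqs]
for chan, f in zip(data, freqs):
    chan[20 + round(dispersion_delay(true_dm, f, max(freqs)) / tsamp)] = 1.0

print(best_dm(data, freqs, [0, 25, 50, 75, 100], tsamp))  # 50
print(max(dedisperse(data, freqs, 0, tsamp)))   # 1.0: pulse smeared over ~50 samples
print(max(dedisperse(data, freqs, 50, tsamp)))  # 8.0: all 8 channels line up
```

Note that incoherent dedispersion only corrects the delay between channels, not the smearing within each channel. Coherent dedispersion, as done by dspsr for example, removes that too, at a much higher computational cost.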

There is a great variety of software packages written specifically for dedispersion and time series analysis, and researchers around the world have been finding pulsars this way for the last 50 years.

This is where I come into the story. Although the Breakthrough Listen group has managed to analyze their data by manually installing these software packages on their compute infrastructure, there was a gut feeling that modern containerisation technologies could be beneficial and save time. And I agree. All around me in astronomy I see system administrators, and astronomers too, doing the same task over and over again: download the source code, compile it, find out why it doesn't compile, search online, get it working, and then never touch it again because otherwise it might break. Additionally, it is sometimes tedious to figure out which packages work together correctly, and when something breaks there is no issue tracker to find help or report problems. Lastly, every environment is different: getting your software to compile on Ubuntu 18.04 is one thing, but on CentOS 7 it might be a completely different ballgame.

The introduction of package managers and binary packages mostly solves the problem of compiling and installing software. So why aren't they used? Some people and HPC providers do use tools like ‘spack’ or ‘modules’, but in my experience these solutions quickly become messy, and in the end somebody still needs to do a manual compile.

An additional issue is that many astronomy packages are poorly maintained. The original developer has left the field, passed away, or no longer has the time or interest to maintain the project. The software is often complex, and without central coordination the community is powerless to improve its state.

To tackle all of these problems I started an initiative called KERN. KERN is a collection of Ubuntu packages for radio astronomy, with a new stable release every six months.

The core goals for KERN are:

- Supply binary packages

- Make sure the binary packages work together

- Make regular stable releases

- Fix mission-critical bugs in the stable releases

- Offer documentation and tools to bootstrap KERN inside containers for running on other platforms

- Centralize the pain and agony of figuring out how to compile the software so you don’t have to.

All software used for Breakthrough Listen is now part of KERN. This includes in-house developed packages such as turboseti, gpuspec and blimpy, but also pulsar search packages such as presto, tempo, dspsr, tempo2, psrchive, psrdada, peasoup and dedisp, which have been updated to suit the needs of the alien search.

The packages listed above are currently in the development repository KERN-dev, which will become the stable release KERN-5 later this year. And the good news is: once a package is in, it stays in! All packages in KERN-dev are updated automatically on a regular basis. A note for people familiar with Debian packaging: since Debian devscripts 2.18.1, the uscan command can track git HEAD, which makes it much easier to automatically update poorly managed pulsar packages that don’t do versioned releases.

For deploying the packages on the compute clusters, we use Singularity. This containerisation tool makes it very easy to experiment with multiple KERN releases on the same platform without interfering with production deployments. Another significant advantage is that cluster users can install software themselves, without needing administrative privileges. Although you can use KERN inside Docker containers, Docker sees very little use on scientific clusters, where Singularity is the preferred alternative.

Do you want to try the packages out yourself? You can quickly get up and running with this Dockerfile, which you can also use with Singularity:

```dockerfile
FROM kernsuite/base:dev
RUN docker-apt-install blimpy
```

where you can replace blimpy with one of the packages in KERN-dev.

What is up next? Well, scientific workflows are usually messy, hacked-together Bash scripts. I’m going to look into whether CommonWL (the Common Workflow Language) can help here.

Hopefully more interesting stories to follow soon.