Implementing live H264 streaming over RTSP with live555

阿新 • Published: 2018-12-29

An earlier article covered "Live Streaming H264 Video over RTMP". This one explains how to stream live H264 video over RTSP.

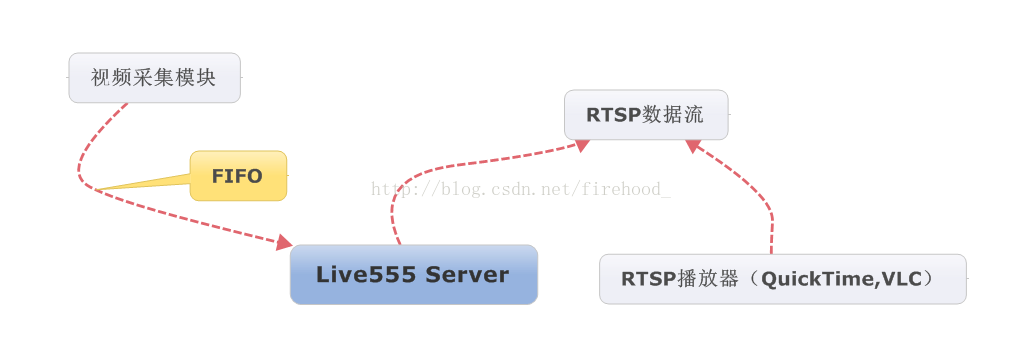

The approach is to hand the video stream to live555 and let live555 take care of streaming the live H264 data.

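The video capture module sends H264 frames to live555 through a FIFO queue. When live555 receives an RTSP PLAY request from a client, it starts reading H264 video data from the FIFO and streams it out over RTSP. The overall flow is shown in the figure.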
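On the capture side, this design only requires that each encoded H264 frame lands in the FIFO as one contiguous run of bytes. Below is a minimal sketch, assuming the capture module already holds an Annex-B frame (start code included) and an open file descriptor for the FIFO, for example one obtained with `mkfifo()` and `open(path, O_WRONLY)`. The helper name `writeFrameToFifo` is hypothetical and not part of the original code. Because `write()` may return fewer bytes than requested or fail with `EINTR`, the helper loops until the whole frame has been written:

```cpp
#include <cerrno>
#include <cstddef>
#include <cstdint>
#include <unistd.h>

// Hypothetical helper: write one complete encoded frame to a FIFO (or any
// pipe) file descriptor, retrying on short writes and on EINTR.
// Returns true if every byte was written, false on any other error.
bool writeFrameToFifo(int fd, const std::uint8_t* data, std::size_t len) {
    std::size_t written = 0;
    while (written < len) {
        ssize_t n = ::write(fd, data + written, len - written);
        if (n < 0) {
            if (errno == EINTR) continue;  // interrupted by a signal: retry
            return false;                  // e.g. EPIPE (no reader) or EBADF
        }
        written += static_cast<std::size_t>(n);
    }
    return true;
}
```

Two POSIX behaviours matter here: a blocking `open(..., O_WRONLY)` on a FIFO waits until a reader (live555) opens the other end, and writing after the reader has gone away raises `SIGPIPE` unless the capture process ignores that signal, in which case `write()` fails with `EPIPE`.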

Adapting and modifying the Live555 MediaServer

Download the live555 source code, add four files to the mediaServer directory, and modify live555MediaServer.cpp. The four new files are:

WW_H264VideoServerMediaSubsession.h

WW_H264VideoServerMediaSubsession.cpp

WW_H264VideoSource.h

WW_H264VideoSource.cpp

The source code of the four files is listed below:

WW_H264VideoServerMediaSubsession.h

```cpp
#pragma once

#include "liveMedia.hh"
#include "BasicUsageEnvironment.hh"
#include "GroupsockHelper.hh"
#include "OnDemandServerMediaSubsession.hh"
#include "WW_H264VideoSource.h"

class WW_H264VideoServerMediaSubsession : public OnDemandServerMediaSubsession
{
public:
    WW_H264VideoServerMediaSubsession(UsageEnvironment & env, FramedSource * source);
    ~WW_H264VideoServerMediaSubsession(void);

public:
    // Returns the "a=fmtp:" SDP line (derived from the stream's SPS/PPS)
    virtual char const * getAuxSDPLine(RTPSink * rtpSink, FramedSource * inputSource);
    virtual FramedSource * createNewStreamSource(unsigned clientSessionId, unsigned & estBitrate); // "estBitrate" is the stream's estimated bitrate, in kbps
    virtual RTPSink * createNewRTPSink(Groupsock * rtpGroupsock, unsigned char rtpPayloadTypeIfDynamic, FramedSource * inputSource);

    static WW_H264VideoServerMediaSubsession * createNew(UsageEnvironment & env, FramedSource * source);
    static void afterPlayingDummy(void * ptr);
    static void chkForAuxSDPLine(void * ptr);
    void chkForAuxSDPLine1();

private:
    FramedSource * m_pSource;
    char * m_pSDPLine;          // cached aux SDP line (allocated with strdup)
    RTPSink * m_pDummyRTPSink;  // sink used only to obtain the aux SDP line
    char m_done;                // watch variable for doEventLoop()
};
```

WW_H264VideoServerMediaSubsession.cpp

```cpp
#include "WW_H264VideoServerMediaSubsession.h"
#include <stdlib.h>   // free
#include <string.h>   // strdup

WW_H264VideoServerMediaSubsession::WW_H264VideoServerMediaSubsession(UsageEnvironment & env, FramedSource * source)
    : OnDemandServerMediaSubsession(env, True), // reuseFirstSource = True
      m_pSource(source), m_pSDPLine(NULL), m_pDummyRTPSink(NULL), m_done(0)
{
}

WW_H264VideoServerMediaSubsession::~WW_H264VideoServerMediaSubsession(void)
{
    free(m_pSDPLine); // free(NULL) is a no-op
}

WW_H264VideoServerMediaSubsession * WW_H264VideoServerMediaSubsession::createNew(UsageEnvironment & env, FramedSource * source)
{
    return new WW_H264VideoServerMediaSubsession(env, source);
}

FramedSource * WW_H264VideoServerMediaSubsession::createNewStreamSource(unsigned clientSessionId, unsigned & estBitrate)
{
    estBitrate = 500; // kbps; a rough estimate, adjust to the encoder's real bitrate
    // H264VideoStreamFramer parses the Annex-B byte stream read from the FIFO
    return H264VideoStreamFramer::createNew(envir(), new WW_H264VideoSource(envir()));
}

RTPSink * WW_H264VideoServerMediaSubsession::createNewRTPSink(Groupsock * rtpGroupsock, unsigned char rtpPayloadTypeIfDynamic, FramedSource * inputSource)
{
    return H264VideoRTPSink::createNew(envir(), rtpGroupsock, rtpPayloadTypeIfDynamic);
}

char const * WW_H264VideoServerMediaSubsession::getAuxSDPLine(RTPSink * rtpSink, FramedSource * inputSource)
{
    if (m_pSDPLine)
    {
        return m_pSDPLine; // already computed for an earlier client
    }

    // Play the source into the sink until the framer has seen SPS/PPS and the
    // sink can build its aux SDP line; the event loop runs until m_done is set.
    m_pDummyRTPSink = rtpSink;
    m_done = 0; // reset BEFORE the first check, which may set it immediately
    m_pDummyRTPSink->startPlaying(*inputSource, afterPlayingDummy, this);
    chkForAuxSDPLine(this);
    envir().taskScheduler().doEventLoop(&m_done);

    char const * auxLine = m_pDummyRTPSink->auxSDPLine();
    m_pSDPLine = auxLine ? strdup(auxLine) : NULL; // NULL if the source ended first
    m_pDummyRTPSink->stopPlaying();
    return m_pSDPLine;
}

void WW_H264VideoServerMediaSubsession::afterPlayingDummy(void * ptr)
{
    WW_H264VideoServerMediaSubsession * This = (WW_H264VideoServerMediaSubsession *)ptr;
    // The source ended before the SDP line was ready: cancel the pending check
    This->envir().taskScheduler().unscheduleDelayedTask(This->nextTask());
    This->m_done = 0xff;
}

void WW_H264VideoServerMediaSubsession::chkForAuxSDPLine(void * ptr)
{
    WW_H264VideoServerMediaSubsession * This = (WW_H264VideoServerMediaSubsession *)ptr;
    This->chkForAuxSDPLine1();
}

void WW_H264VideoServerMediaSubsession::chkForAuxSDPLine1()
{
    if (m_pDummyRTPSink->auxSDPLine())
    {
        m_done = 0xff; // SDP line is ready: leave the event loop
    }
    else
    {
        // Not ready yet: check again after one frame interval
        double delay = 1000.0 / (FRAME_PER_SEC); // ms
        int to_delay = delay * 1000; // us
        nextTask() = envir().taskScheduler().scheduleDelayedTask(to_delay, chkForAuxSDPLine, this);
    }
}
```
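Why does getAuxSDPLine() need a dummy sink and a polling loop? For H264, the `a=fmtp:` line that H264VideoRTPSink returns from auxSDPLine() carries `profile-level-id` and `sprop-parameter-sets`, both derived from the stream's SPS and PPS NAL units. Until the framer has seen both, auxSDPLine() returns NULL, so the code reschedules chkForAuxSDPLine() once per frame interval (1000 / FRAME_PER_SEC = 40 ms, i.e. 40000 µs, at 25 fps). The standalone sketch below mimics that derivation on an Annex-B buffer so the dependency is visible. It is an illustration under simplifying assumptions (emulation-prevention bytes are ignored, function names are hypothetical), not live555's own implementation:

```cpp
#include <cstddef>
#include <cstdint>
#include <cstdio>
#include <string>
#include <vector>

// Standard base64 (RFC 4648) with '=' padding.
static std::string base64Encode(const std::vector<std::uint8_t>& in) {
    static const char tbl[] =
        "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";
    std::string out;
    std::size_t i = 0;
    for (; i + 2 < in.size(); i += 3) {
        std::uint32_t v = (in[i] << 16) | (in[i + 1] << 8) | in[i + 2];
        out += tbl[(v >> 18) & 63]; out += tbl[(v >> 12) & 63];
        out += tbl[(v >> 6) & 63];  out += tbl[v & 63];
    }
    if (i + 1 == in.size()) {            // one byte left
        std::uint32_t v = in[i] << 16;
        out += tbl[(v >> 18) & 63]; out += tbl[(v >> 12) & 63]; out += "==";
    } else if (i + 2 == in.size()) {     // two bytes left
        std::uint32_t v = (in[i] << 16) | (in[i + 1] << 8);
        out += tbl[(v >> 18) & 63]; out += tbl[(v >> 12) & 63];
        out += tbl[(v >> 6) & 63];  out += '=';
    }
    return out;
}

// Split an Annex-B byte stream on 00 00 01 (and 00 00 00 01) start codes.
static std::vector<std::vector<std::uint8_t>>
splitAnnexB(const std::vector<std::uint8_t>& s) {
    std::vector<std::vector<std::uint8_t>> nals;
    const std::size_t none = static_cast<std::size_t>(-1);
    std::size_t start = none;
    auto flush = [&](std::size_t end) {
        while (end > start && s[end - 1] == 0) --end;  // zeros before next start code
        if (end > start) nals.emplace_back(s.begin() + start, s.begin() + end);
    };
    for (std::size_t i = 0; i + 2 < s.size();) {
        if (s[i] == 0 && s[i + 1] == 0 && s[i + 2] == 1) {
            if (start != none) flush(i);
            start = i + 3;
            i += 3;
        } else {
            ++i;
        }
    }
    if (start != none) flush(s.size());
    return nals;
}

// Build an H264 "a=fmtp:" line from the first SPS (type 7) and PPS (type 8).
// Returns "" while either is missing, the case where auxSDPLine() is NULL.
std::string buildFmtpLine(const std::vector<std::uint8_t>& annexB, int payloadType) {
    std::vector<std::uint8_t> sps, pps;
    for (const auto& nal : splitAnnexB(annexB)) {
        int type = nal[0] & 0x1F;
        if (type == 7 && sps.empty()) sps = nal;
        if (type == 8 && pps.empty()) pps = nal;
    }
    if (sps.size() < 4 || pps.empty()) return "";
    char pli[7];  // profile_idc, constraint flags, level_idc as 6 hex digits
    std::snprintf(pli, sizeof pli, "%02X%02X%02X",
                  unsigned(sps[1]), unsigned(sps[2]), unsigned(sps[3]));
    return "a=fmtp:" + std::to_string(payloadType) +
           " packetization-mode=1;profile-level-id=" + pli +
           ";sprop-parameter-sets=" + base64Encode(sps) + "," + base64Encode(pps) + "\r\n";
}
```

This is also why a stream that sends SPS/PPS only once, at startup, can leave late-joining clients waiting: the encoder should repeat them (for example before every IDR frame) so the check eventually succeeds.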

WW_H264VideoSource.h

```cpp
#ifndef _WW_H264VideoSource_H
#define _WW_H264VideoSource_H

#include "liveMedia.hh"
#include "BasicUsageEnvironment.hh"
#include "GroupsockHelper.hh"
#include "FramedSource.hh"

#define FRAME_PER_SEC 25 // frame rate of the captured video

class WW_H264VideoSource : public FramedSource
{
public:
    WW_H264VideoSource(UsageEnvironment & env);
    ~WW_H264VideoSource(void);

public:
    virtual void doGetNextFrame();
    virtual unsigned int maxFrameSize() const;

    // Note: this static overload hides FramedSource::getNextFrame(...) in this class's scope
    static void getNextFrame(void * ptr);
    void GetFrameData();

private:
    void *m_pToken;        // scheduler task token (live555's TaskToken is a void*)
    char *m_pFrameBuffer;  // buffer for one frame read from the FIFO
    int m_hFifo;           // file descriptor of the FIFO fed by the capture module
};

#endif
```
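WW_H264VideoSource.cpp is not part of this excerpt, but any FramedSource subclass has to honour the same delivery contract in doGetNextFrame(): copy at most fMaxSize bytes into fTo, set fFrameSize, report the excess in fNumTruncatedBytes, set fPresentationTime (and optionally fDurationInMicroseconds), then call FramedSource::afterGetting(this). The standalone sketch below reproduces only the size and timing bookkeeping with plain types, so it compiles without live555. The struct and function names are hypothetical, and the timestamps are assumed to advance by a fixed 1/FRAME_PER_SEC interval; a real source would more typically stamp frames with gettimeofday() or the capture clock:

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Plain stand-ins for the FramedSource fields a doGetNextFrame() must fill.
struct DeliveryResult {
    unsigned frameSize;          // bytes actually copied   (fFrameSize)
    unsigned numTruncatedBytes;  // bytes that did not fit  (fNumTruncatedBytes)
    long long ptsMicros;         // presentation time       (fPresentationTime)
    unsigned durationMicros;     // frame duration          (fDurationInMicroseconds)
};

// Copy one encoded frame into a buffer of maxSize bytes, truncating if
// needed, and stamp it with a fixed-rate timestamp derived from fps.
DeliveryResult deliverFrame(const std::uint8_t* frame, unsigned frameLen,
                            std::uint8_t* to, unsigned maxSize,
                            unsigned frameIndex, unsigned fps) {
    assert(fps > 0);
    DeliveryResult r;
    r.frameSize = std::min(frameLen, maxSize);
    r.numTruncatedBytes = frameLen - r.frameSize;
    std::memcpy(to, frame, r.frameSize);
    r.durationMicros = 1000000u / fps;  // 40000 us at 25 fps
    r.ptsMicros = static_cast<long long>(frameIndex) * r.durationMicros;
    return r;
}
```

In the real source these values go into the inherited fields before FramedSource::afterGetting(this) is called. A non-zero truncation count means the frame did not fit in the buffer offered by the downstream object, and the remaining bytes of that frame are lost.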