Steps for Building the MXNet Source Code with CMake on Ubuntu 14.04 (C++)

阿新 • Published: 2018-12-30

The MXNet source version used here is 1.3.0. For the versions of the other dependency libraries, see: https://blog.csdn.net/fengbingchun/article/details/84997490

The contents of the build.sh script are:

    #! /bin/bash

    real_path=$(realpath $0)
    dir_name=`dirname "${real_path}"`
    echo "real_path: ${real_path}, dir_name: ${dir_name}"

    # recreate the symbolic link to the shared data directory
    data_dir="data"
    if [ -d ${dir_name}/${data_dir} ]; then
        rm -rf ${dir_name}/${data_dir}
    fi

    ln -s ${dir_name}/./../../${data_dir} ${dir_name}

    new_dir_name=${dir_name}/build
    mkdir -p ${new_dir_name}
    cd ${new_dir_name}
    echo "pos: ${new_dir_name}"
    if [ "$(ls -A ${new_dir_name})" ]; then
        echo "directory is not empty: ${new_dir_name}"
        #rm -r *
    else
        echo "directory is empty: ${new_dir_name}"
    fi

    cd -
    # build openblas
    echo "========== start build openblas =========="
    openblas_path=${dir_name}/../../src/openblas
    if [ -f ${openblas_path}/build/lib/libopenblas.so ]; then
        echo "openblas dynamic library already exists without recompiling"
    else
        mkdir -p ${openblas_path}/build
        cd ${openblas_path}/build
        cmake -DBUILD_SHARED_LIBS=ON ..
        make
    fi

    ln -s ${openblas_path}/build/lib/libopenblas* ${new_dir_name}
    echo "========== finish build openblas =========="

    cd -
    # build dmlc-core
    echo "========== start build dmlc-core =========="
    dmlc_path=${dir_name}/../../src/dmlc-core
    if [ -f ${dmlc_path}/build/libdmlc.a ]; then
        echo "dmlc static library already exists without recompiling"
    else
        mkdir -p ${dmlc_path}/build
        cd ${dmlc_path}/build
        cmake ..
        make
    fi

    ln -s ${dmlc_path}/build/libdmlc.a ${new_dir_name}
    echo "========== finish build dmlc-core =========="

    # note: at this point $? is the exit status of the echo above, not of the builds
    rc=$?
    if [[ ${rc} != 0 ]]; then
        echo "########## Error: some of these commands have errors above, please check"
        exit ${rc}
    fi

    cd -
    # build the mxnet dynamic library and the test program
    cd ${new_dir_name}
    cmake ..
    make

    cd -
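The script repeats one pattern for each dependency: build only when the artifact is missing, then check for errors. Because `$?` only holds the status of the most recent command, it has to be read immediately after the command being checked. The following is a minimal sketch of that pattern, not part of the original build.sh; `build_if_missing` is a hypothetical helper, and the demonstration uses `touch` and `false` as stand-ins for the real `cmake` and `make` calls.

```shell
#!/bin/bash
# Sketch only: build_if_missing is a hypothetical helper, not from the original build.sh.

build_if_missing() {
    local artifact="$1"   # file whose presence means the step already ran
    local rc
    shift                 # the remaining arguments form the build command
    if [ -f "${artifact}" ]; then
        echo "skip: ${artifact} already exists"
        return 0
    fi
    "$@"                  # run the build command
    rc=$?                 # read the status right away, before any echo
    if [ "${rc}" -ne 0 ]; then
        echo "error: '$*' failed with status ${rc}" >&2
    fi
    return "${rc}"
}

# Demonstration with stand-in commands in a throwaway directory.
demo_dir=$(mktemp -d)
build_if_missing "${demo_dir}/libdemo.a" touch "${demo_dir}/libdemo.a"
first_rc=$?
# The artifact now exists, so the failing command below is never executed.
build_if_missing "${demo_dir}/libdemo.a" false
second_rc=$?
echo "first_rc=${first_rc} second_rc=${second_rc}"
```

In a real script the command passed to the helper would typically be a small function wrapping the `cmake` and `make` calls for one dependency, so that a failed build stops the script instead of being masked by a later `echo`.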

The contents of the CMakeLists.txt file are:

    PROJECT(MXNet_Test)
    CMAKE_MINIMUM_REQUIRED(VERSION 3.0)

    # support C++11
    SET(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -std=c11")
    SET(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11")
    # support C++14, when gcc version > 5.1, use -std=c++14 instead of c++1y
    SET(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++1y")

    IF(NOT CMAKE_BUILD_TYPE)
        SET(CMAKE_BUILD_TYPE "Release")
        SET(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -Wall -O2")
        SET(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -Wall -O2")
    ELSE()
        SET(CMAKE_BUILD_TYPE "Debug")
        SET(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -g -Wall -O2")
        SET(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -g -Wall -O2")
    ENDIF()
    MESSAGE(STATUS "cmake build type: ${CMAKE_BUILD_TYPE}")

    MESSAGE(STATUS "cmake current source dir: ${CMAKE_CURRENT_SOURCE_DIR}")
    SET(PATH_TEST_FILES ${CMAKE_CURRENT_SOURCE_DIR}/./../../demo/MXNet_Test)
    SET(PATH_SRC_DMLC_FILES ${CMAKE_CURRENT_SOURCE_DIR}/./../../src/dmlc-core)
    SET(PATH_SRC_MSHADOW_FILES ${CMAKE_CURRENT_SOURCE_DIR}/./../../src/mshadow)
    SET(PATH_SRC_OPENBLAS_FILES ${CMAKE_CURRENT_SOURCE_DIR}/./../../src/openblas)
    SET(PATH_SRC_MXNET_FILES ${CMAKE_CURRENT_SOURCE_DIR}/./../../src/mxnet)
    SET(PATH_SRC_TVM_FILES ${CMAKE_CURRENT_SOURCE_DIR}/./../../src/tvm)
    SET(PATH_SRC_DLPACK_FILES ${CMAKE_CURRENT_SOURCE_DIR}/./../../src/dlpack)
    MESSAGE(STATUS "path test files: ${PATH_TEST_FILES}")

    # don't use opencv in mxnet
    ADD_DEFINITIONS(-DMXNET_USE_OPENCV=0)
    ADD_DEFINITIONS(-DMSHADOW_USE_F16C=0)

    SET(PATH_OPENCV /opt/opencv3.4.2)
    IF(EXISTS ${PATH_OPENCV})
        MESSAGE(STATUS "Found OpenCV: ${PATH_OPENCV}")
    ELSE()
        MESSAGE(FATAL_ERROR "Can not find OpenCV in ${PATH_OPENCV}")
    ENDIF()

    # head file search path
    INCLUDE_DIRECTORIES(
        ${PATH_SRC_OPENBLAS_FILES}
        ${PATH_SRC_OPENBLAS_FILES}/build # include openblas config.h
        ${PATH_SRC_DLPACK_FILES}/include
        ${PATH_SRC_MSHADOW_FILES}
        ${PATH_SRC_DMLC_FILES}/include
        ${PATH_SRC_TVM_FILES}/include
        ${PATH_SRC_TVM_FILES}/nnvm/include
        ${PATH_SRC_MXNET_FILES}/src
        ${PATH_SRC_MXNET_FILES}/include
        ${PATH_SRC_MXNET_FILES}/cpp-package/include
        ${PATH_OPENCV}/include
        ${PATH_TEST_FILES}
    )

    # build mxnet dynamic library
    SET(MXNET_SRC_LIST )

    # tvm
    FILE(GLOB_RECURSE SRC_TVM_NNVM_C_API ${PATH_SRC_TVM_FILES}/nnvm/src/c_api/*.cc)
    FILE(GLOB_RECURSE SRC_TVM_NNVM_CORE ${PATH_SRC_TVM_FILES}/nnvm/src/core/*.cc)
    FILE(GLOB_RECURSE SRC_TVM_NNVM_PASS ${PATH_SRC_TVM_FILES}/nnvm/src/pass/*.cc)

    FILE(GLOB_RECURSE SRC_MXNET ${PATH_SRC_MXNET_FILES}/src/*.cc)

    LIST(APPEND MXNET_SRC_LIST
        ${SRC_TVM_NNVM_C_API}
        ${SRC_TVM_NNVM_CORE}
        ${SRC_TVM_NNVM_PASS}
        ${SRC_MXNET}
    )
    #MESSAGE(STATUS "mxnet src: ${MXNET_SRC_LIST}")
    ADD_LIBRARY(mxnet SHARED ${MXNET_SRC_LIST})

    # find opencv library
    FIND_LIBRARY(opencv_core NAMES opencv_core PATHS ${PATH_OPENCV}/lib NO_DEFAULT_PATH)
    FIND_LIBRARY(opencv_imgproc NAMES opencv_imgproc PATHS ${PATH_OPENCV}/lib NO_DEFAULT_PATH)
    FIND_LIBRARY(opencv_highgui NAMES opencv_highgui PATHS ${PATH_OPENCV}/lib NO_DEFAULT_PATH)
    FIND_LIBRARY(opencv_imgcodecs NAMES opencv_imgcodecs PATHS ${PATH_OPENCV}/lib NO_DEFAULT_PATH)
    FIND_LIBRARY(opencv_video NAMES opencv_video PATHS ${PATH_OPENCV}/lib NO_DEFAULT_PATH)
    FIND_LIBRARY(opencv_videoio NAMES opencv_videoio PATHS ${PATH_OPENCV}/lib NO_DEFAULT_PATH)
    FIND_LIBRARY(opencv_objdetect NAMES opencv_objdetect PATHS ${PATH_OPENCV}/lib NO_DEFAULT_PATH)
    FIND_LIBRARY(opencv_ml NAMES opencv_ml PATHS ${PATH_OPENCV}/lib NO_DEFAULT_PATH)
    MESSAGE(STATUS "opencv libraries: ${opencv_core} ${opencv_imgproc} ${opencv_highgui} ${opencv_imgcodecs} ${opencv_video} ${opencv_videoio} ${opencv_objdetect} ${opencv_ml}")

    # find dep library
    SET(DEP_LIB_DIR ${CMAKE_CURRENT_SOURCE_DIR}/build CACHE PATH "dep library path")
    MESSAGE(STATUS "dep library dir: ${DEP_LIB_DIR}")
    LINK_DIRECTORIES(${DEP_LIB_DIR})

    # recursive query match files :*.cpp
    FILE(GLOB_RECURSE TEST_CPP_LIST ${PATH_TEST_FILES}/*.cpp)
    FILE(GLOB_RECURSE TEST_CC_LIST ${PATH_TEST_FILES}/*.cc)
    MESSAGE(STATUS "test cpp list: ${TEST_CPP_LIST} ${TEST_CC_LIST}")

    # build executable program
    ADD_EXECUTABLE(MXNet_Test ${TEST_CPP_LIST} ${TEST_CC_LIST})
    # add dependent library: static and dynamic
    TARGET_LINK_LIBRARIES(MXNet_Test
        mxnet
        ${DEP_LIB_DIR}/libdmlc.a
        ${DEP_LIB_DIR}/libopenblas.so
        pthread
        rt # undefined reference to shm_open
        ${opencv_core}
        ${opencv_imgproc}
        ${opencv_highgui}
        ${opencv_imgcodecs}
        ${opencv_video}
        ${opencv_videoio}
        ${opencv_objdetect}
        ${opencv_ml}
    )

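Note: two changes were made to the source code:

1. OpenBLAS: comment out line 681 of common.h;

2. dmlc-core: when building with CMake, disable OpenMP support, i.e. change line 18 of CMakeLists.txt from ON to OFF.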
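A change like the second one can also be applied from a script, which keeps it repeatable after a fresh checkout. The following is a rough sketch under stated assumptions: `replace_on_line` is a hypothetical helper, the file contents in the demonstration are stand-ins rather than the real dmlc-core CMakeLists.txt, and the line numbers quoted above only hold for the exact source versions used in this article.

```shell
#!/bin/bash
# Sketch only: replace_on_line is a hypothetical helper, not part of any of the projects.

replace_on_line() {
    local file="$1"   # file to patch
    local line="$2"   # 1-based line number to touch
    local from="$3"   # text to replace (basic regular expression)
    local to="$4"     # replacement text
    local tmp
    tmp=$(mktemp)
    # Substitute on the requested line only; every other line is copied unchanged.
    sed "${line}s/${from}/${to}/" "${file}" > "${tmp}" \
        && cat "${tmp}" > "${file}"
    rm -f "${tmp}"
}

# Demonstration on a throwaway file with made-up option lines.
demo_file=$(mktemp)
printf '%s\n' 'option(A "first" ON)' 'option(B "second" ON)' > "${demo_file}"
replace_on_line "${demo_file}" 2 'ON)' 'OFF)'
cat "${demo_file}"
```

Before pointing such a helper at line 18 of the dmlc-core CMakeLists.txt, it is worth printing that line first (for example with `sed -n '18p'`) to confirm it really is the OpenMP option in your checkout.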

The following is the test code for MNIST training:

    #include "funset.hpp"
    #include <chrono>
    #include <string>
    #include <fstream>
    #include <map>
    #include <vector>
    #include "mxnet-cpp/MxNetCpp.h"

    namespace {

    bool isFileExists(const std::string &filename)
    {
        std::ifstream fhandle(filename.c_str());
        return fhandle.good();
    }

    bool check_datafiles(const std::vector<std::string> &data_files)
    {
        for (size_t index = 0; index < data_files.size(); index++) {
            if (!(isFileExists(data_files[index]))) {
                LG << "Error: File does not exist: " << data_files[index];
                return false;
            }
        }
        return true;
    }

    bool setDataIter(mxnet::cpp::MXDataIter *iter, std::string useType, const std::vector<std::string> &data_files, int batch_size)
    {
        if (!check_datafiles(data_files))
            return false;

        iter->SetParam("batch_size", batch_size);
        iter->SetParam("shuffle", 1);
        iter->SetParam("flat", 1);

        if (useType == "Train") {
            iter->SetParam("image", data_files[0]);
            iter->SetParam("label", data_files[1]);
        } else if (useType == "Label") {
            iter->SetParam("image", data_files[2]);
            iter->SetParam("label", data_files[3]);
        }

        iter->CreateDataIter();
        return true;
    }

    } // namespace

    ////////////////////////////// mnist ////////////////////////
    /* reference:
        https://mxnet.incubator.apache.org/tutorials/c%2B%2B/basics.html
        mxnet_source/cpp-package/example/mlp_cpu.cpp
    */
    namespace {

    mxnet::cpp::Symbol mlp(const std::vector<int> &layers)
    {
        auto x = mxnet::cpp::Symbol::Variable("X");
        auto label = mxnet::cpp::Symbol::Variable("label");

        std::vector<mxnet::cpp::Symbol> weights(layers.size());
        std::vector<mxnet::cpp::Symbol> biases(layers.size());
        std::vector<mxnet::cpp::Symbol> outputs(layers.size());

        for (size_t i = 0; i < layers.size(); ++i) {
            weights[i] = mxnet::cpp::Symbol::Variable("w" + std::to_string(i));
            biases[i] = mxnet::cpp::Symbol::Variable("b" + std::to_string(i));
            mxnet::cpp::Symbol fc = mxnet::cpp::FullyConnected(i == 0 ? x : outputs[i - 1], weights[i], biases[i], layers[i]);
            outputs[i] = i == layers.size() - 1 ? fc : mxnet::cpp::Activation(fc, mxnet::cpp::ActivationActType::kRelu);
        }

        return mxnet::cpp::SoftmaxOutput(outputs.back(), label);
    }

    } // namespace

    int test_mnist_train()
    {
        const int image_size = 28;
        const std::vector<int> layers{ 128, 64, 10 };
        const int batch_size = 100;
        const int max_epoch = 10;
        const float learning_rate = 0.1;
        const float weight_decay = 1e-2;

    #ifdef _MSC_VER
        std::vector<std::string> data_files = { "E:/GitCode/MXNet_Test/data/mnist/train-images.idx3-ubyte",
                                                "E:/GitCode/MXNet_Test/data/mnist/train-labels.idx1-ubyte",
                                                "E:/GitCode/MXNet_Test/data/mnist/t10k-images.idx3-ubyte",
                                                "E:/GitCode/MXNet_Test/data/mnist/t10k-labels.idx1-ubyte"};
    #else
        std::vector<std::string> data_files = { "data/mnist/train-images.idx3-ubyte",
                                                "data/mnist/train-labels.idx1-ubyte",
                                                "data/mnist/t10k-images.idx3-ubyte",
                                                "data/mnist/t10k-labels.idx1-ubyte"};
    #endif

        auto train_iter = mxnet::cpp::MXDataIter("MNISTIter");
        setDataIter(&train_iter, "Train", data_files, batch_size);

        auto val_iter = mxnet::cpp::MXDataIter("MNISTIter");
        setDataIter(&val_iter, "Label", data_files, batch_size);

        auto net = mlp(layers);

        mxnet::cpp::Context ctx = mxnet::cpp::Context::cpu(); // Use CPU for training

        std::map<std::string, mxnet::cpp::NDArray> args;
        args["X"] = mxnet::cpp::NDArray(mxnet::cpp::Shape(batch_size, image_size*image_size), ctx);
        args["label"] = mxnet::cpp::NDArray(mxnet::cpp::Shape(batch_size), ctx);
        // Let MXNet infer shapes other parameters such as weights
        net.InferArgsMap(ctx, &args, args);

        // Initialize all parameters with uniform distribution U(-0.01, 0.01)
        auto initializer = mxnet::cpp::Uniform(0.01);
        for (auto& arg : args) {
            // arg.first is parameter name, and arg.second is the value
            initializer(arg.first, &arg.second);
        }

        // Create sgd optimizer
        mxnet::cpp::Optimizer* opt = mxnet::cpp::OptimizerRegistry::Find("sgd");
        opt->SetParam("rescale_grad", 1.0 / batch_size)->SetParam("lr", learning_rate)->SetParam("wd", weight_decay);

        // Create executor by binding parameters to the model
        auto *exec = net.SimpleBind(ctx, args);
        auto arg_names = net.ListArguments();

        // Start training
        for (int iter = 0; iter < max_epoch; ++iter) {
            int samples = 0;
            train_iter.Reset();

            auto tic = std::chrono::system_clock::now();
            while (train_iter.Next()) {
                samples += batch_size;
                auto data_batch = train_iter.GetDataBatch();
                // Set data and label
                data_batch.data.CopyTo(&args["X"]);
                data_batch.label.CopyTo(&args["label"]);

                // Compute gradients
                exec->Forward(true);
                exec->Backward();
                // Update parameters
                for (size_t i = 0; i < arg_names.size(); ++i) {
                    if (arg_names[i] == "X" || arg_names[i] == "label") continue;
                    opt->Update(i, exec->arg_arrays[i], exec->grad_arrays[i]);
                }
            }
            auto toc = std::chrono::system_clock::now();

            mxnet::cpp::Accuracy acc;
            val_iter.Reset();
            while (val_iter.Next()) {
                auto data_batch = val_iter.GetDataBatch();
                data_batch.data.CopyTo(&args["X"]);
                data_batch.label.CopyTo(&args["label"]);
                // Forward pass is enough as no gradient is needed when evaluating
                exec->Forward(false);
                acc.Update(data_batch.label, exec->outputs[0]);
            }
            float duration = std::chrono::duration_cast<std::chrono::milliseconds> (toc - tic).count() / 1000.0;
            LG << "Epoch: " << iter << " " << samples / duration << " samples/sec Accuracy: " << acc.Get();
        }

    #ifdef _MSC_VER
        std::string json_file{ "E:/GitCode/MXNet_Test/data/mnist.json" };
        std::string param_file{"E:/GitCode/MXNet_Test/data/mnist.params"};
    #else
        std::string json_file{ "data/mnist.json" };
        std::string param_file{"data/mnist.params"};
    #endif
        net.Save(json_file);
        mxnet::cpp::NDArray::Save(param_file, exec->arg_arrays);

        delete exec;
        MXNotifyShutdown();

        return 0;
    }

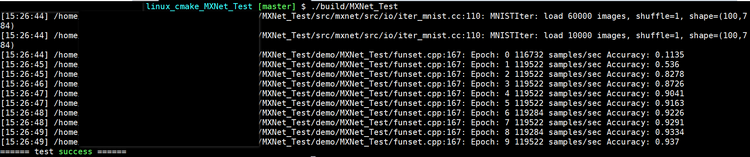

The run results are as follows: