Python scraper for 10 years of Premier League data

阿新 • Published: 2018-12-31


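Introduction: today we scrape a foreign football website for 10 years of Premier League data, mainly the two teams in each match and the time of the first goal.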

1. Website analysis

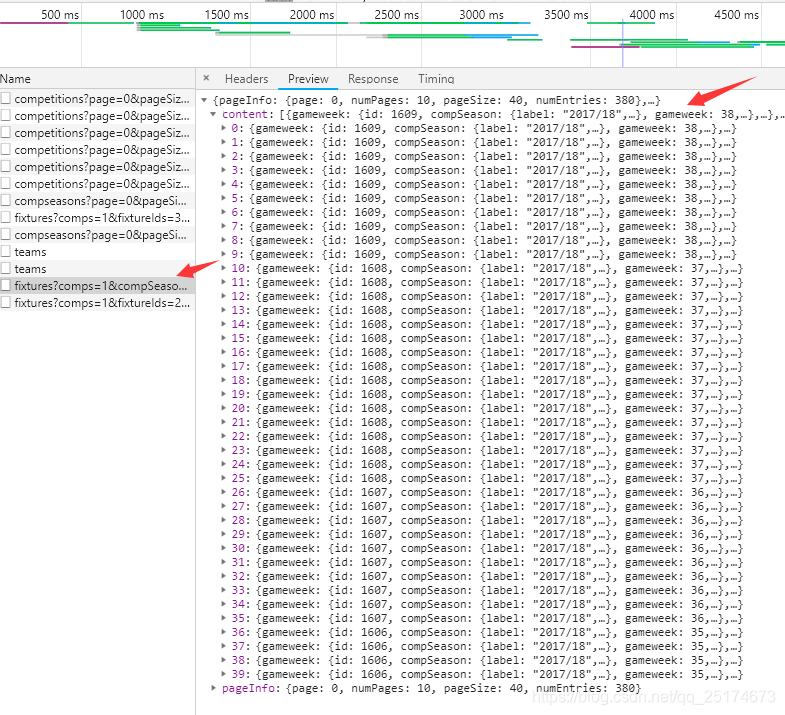

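URL: https://www.premierleague.com/results (a VPN may be needed in some regions). The information we want is mainly the two teams in each match and the time of the first goal. Right-clicking and viewing the page source does not show this information, which suggests it is loaded dynamically. Press F12 to open the developer tools and look in the Network panel for the request that returns the match data as JSON; here it is a request to https://footballapi.pulselive.com/football/fixtures.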
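Once the request is found in the Network panel, its URL can be rebuilt from named parameters instead of by string concatenation. This is a minimal sketch assuming the endpoint and parameter names that appear in the scraper's URLs below; `fixtures_url` is a hypothetical helper, and the meanings in the comments (such as `statuses=C` meaning completed matches) are my reading of those URLs, not documented behaviour.

```python
from urllib.parse import urlencode

# Endpoint observed in the browser's Network panel (taken from the scraper's URLs)
BASE_URL = 'https://footballapi.pulselive.com/football/fixtures'


def fixtures_url(comp_season, page, page_size=40):
    """Build the request URL for one page of fixtures in one season."""
    params = {
        'comps': 1,                  # assumed: 1 = Premier League
        'compSeasons': comp_season,  # season ID, e.g. 210 for 2018-19
        'page': page,                # page index, starting at 0
        'pageSize': page_size,       # matches per page
        'sort': 'desc',
        'statuses': 'C',             # assumed: C = completed matches
        'altIds': 'true',
    }
    return BASE_URL + '?' + urlencode(params)


print(fixtures_url(210, 0))
```

`urlencode` also percent-escapes values, which matters if a parameter ever contains spaces or commas.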

2. JSON data analysis

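With the data source identified, the next step is to work out how to pull the parts we need out of the JSON. Each match is an entry in the `content` list; the home team's name is at `teams[0]['team']['name']`, the away team's at `teams[1]['team']['name']`, and each goal's time is at `goals[j]['clock']['label']`.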
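To make those paths concrete, here is a small sketch that walks them on a hand-made response. The `sample` dict is invented and contains only the keys the scraper reads (real responses have many more), and its clock labels are made up, so the real label format may differ. `extract_fixtures` is a hypothetical helper that, like the author's code, assumes the first entry in `teams` is the home side.

```python
# Invented sample mirroring only the fields the scraper reads
sample = {
    'content': [
        {
            'teams': [
                {'team': {'name': 'Home FC'}},
                {'team': {'name': 'Away FC'}},
            ],
            'goals': [
                {'clock': {'label': "67'"}},
                {'clock': {'label': "12'"}},
            ],
        },
    ],
}


def extract_fixtures(data):
    """Yield (home team, away team, goal clock labels) for each match in the response."""
    for fixture in data['content']:
        home = fixture['teams'][0]['team']['name']  # first entry: home side
        away = fixture['teams'][1]['team']['name']  # second entry: away side
        labels = [goal['clock']['label'] for goal in fixture['goals']]
        yield home, away, labels


for row in extract_fixtures(sample):
    print(row)
```

Note that goals are not necessarily listed in time order, which is why the scraper takes the minimum rather than the first entry.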

3. Python code

With the data location pinned down, we can write the scraper. The code is as follows:

```python
import csv
import json
import re
import time

import requests
from requests.exceptions import RequestException

headers = {
    'Content-Type': 'application/x-www-form-urlencoded; charset=UTF-8',
    'Origin': 'https://www.premierleague.com',
    'Referer': 'https://www.premierleague.com/results?co=1&se=17&cl=-1',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 '
                  '(KHTML, like Gecko) Chrome/68.0.3440.17 Safari/537.36',
}


def get_page(url):
    """Return the response body as text, or None if the request fails."""
    try:
        response = requests.get(url, headers=headers, timeout=10)
        response.encoding = 'utf-8'
        if response.status_code == 200:
            return response.text
        return None
    except RequestException as err:
        print('Failed to fetch page')
        print(err)
        return None


def goal_minute(label):
    """Return the leading minute of a clock label as an int (e.g. "45 +2'" -> 45)."""
    m = re.match(r'\d+', label)
    return int(m.group()) if m else None


def parse_page(url):
    """Return (home team, first-goal minute, away team) rows for one page of fixtures."""
    html = get_page(url)
    if html is None:
        return []
    data = json.loads(html)
    info = []
    for fixture in data['content']:
        home = fixture['teams'][0]['team']['name']
        away = fixture['teams'][1]['team']['name']
        # Compare minutes as numbers; comparing strings would rank "45" before "5'"
        minutes = [goal_minute(g['clock']['label']) for g in fixture['goals']]
        minutes = [m for m in minutes if m is not None]
        # Matches without goals get the string 'None'
        first_goal = str(min(minutes)) if minutes else 'None'
        info.append((home, first_goal, away))
    return info


def write2csv(info):
    """Append rows to all_data.csv; utf-8-sig adds a BOM so Excel detects UTF-8."""
    with open('all_data.csv', 'a', newline='', encoding='utf-8-sig') as f:
        writer = csv.writer(f)  # one writer for all rows
        writer.writerows(info)


if __name__ == '__main__':
    # compSeasons IDs seen in the site's API requests:
    # 08-09: 17, 09-10: 18, 10-11: 19, 11-12: 20, 12-13: 21, 13-14: 22,
    # 14-15: 27, 15-16: 42, 16-17: 54, 17-18: 79, 18-19: 210
    # Example match page: https://www.premierleague.com/match/6706
    seasons = ['17', '18', '19', '20', '21', '22', '27', '42', '54', '79', '210']
    for season in seasons:
        # 380 matches per season at 40 per page fit in pages 0-9
        for page in range(10):
            time.sleep(3)  # pause between requests
            print('Now fetching season', season, 'page', page)
            url = ('https://footballapi.pulselive.com/football/fixtures?comps=1&compSeasons='
                   + season + '&page=' + str(page)
                   + '&pageSize=40&sort=desc&statuses=C&altIds=true')
            info = parse_page(url)
            write2csv(info)
```
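`get_page` gives up after a single failed request. If the API occasionally times out, a small retry wrapper can help. This is a sketch with a hypothetical helper, `fetch_with_retry`, which takes the fetch function as a parameter (so it can be exercised without network access); the retry count and delay are arbitrary defaults, not values from the original.

```python
import time


def fetch_with_retry(fetch, url, retries=3, delay=3.0):
    """Call fetch(url) up to `retries` times, sleeping `delay` seconds between failures.

    `fetch` is any function that returns the page text, or None on failure
    (get_page behaves this way).
    """
    for attempt in range(1, retries + 1):
        text = fetch(url)
        if text is not None:
            return text
        if attempt < retries:
            time.sleep(delay)  # wait before the next attempt
    return None
```

In `parse_page`, the call would become `html = fetch_with_retry(get_page, url)`.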

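The scraped data is written to a CSV file. For each goal time I kept only the minute, which makes it easier to use later. Matches with no goals at all (0-0 draws) are recorded as None.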
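Reducing the labels to a minute needs some care. If each label is cut to its first two characters, a single-digit minute such as `5'` stays as `"5'"`, which `int()` cannot parse and which compares incorrectly as a string. A minimal sketch, assuming every label starts with the minute digits (the stoppage-time form `"45 +2'"` is an assumed example): parse the leading digits, then take the numeric minimum. `first_goal_minute` is a hypothetical helper.

```python
import re


def first_goal_minute(labels):
    """Return the earliest goal minute as an int, or 'None' if there were no goals."""
    minutes = []
    for label in labels:
        match = re.match(r'\d+', label)  # leading digits = minute, e.g. "45 +2'" -> 45
        if match:
            minutes.append(int(match.group()))
    return min(minutes) if minutes else 'None'


# String comparison is lexicographic: "45" sorts before "5'"
print(min(['45', "5'"]))
print(first_goal_minute(["45 +2'", "5'"]))
print(first_goal_minute([]))
```

The three lines print `45`, `5` and `None`: only the numeric version picks the 5th-minute goal.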

4. Data analysis

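After collecting the data, I grouped it by home team and, for each team, plotted when the first goal was scored in its home matches (the first goal of the match, whichever side scored it). The first step is to gather every match's first-goal time for each team: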
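The code below groups the data by writing one CSV file per team into a `mainname/` folder. A lighter alternative is to group in memory with `collections.defaultdict`. This is a minimal sketch: `group_first_goals` is a hypothetical helper, the sample rows are invented but follow the (home team, first-goal minute, away team) layout the scraper writes, and it assumes the minute column holds plain integers or `'None'`.

```python
import csv
import io
from collections import defaultdict


def group_first_goals(rows):
    """Map each home team to the first-goal minutes of its home matches.

    Goalless matches ('None') are kept as None rather than a placeholder number.
    """
    by_team = defaultdict(list)
    for home, first_goal, _away in rows:
        by_team[home].append(None if first_goal == 'None' else int(first_goal))
    return dict(by_team)


# Invented rows in the same layout as all_data.csv
sample_csv = io.StringIO('Home FC,12,Away FC\nHome FC,None,Other FC\nAway FC,67,Home FC\n')
print(group_first_goals(csv.reader(sample_csv)))
```

With the real file, pass `csv.reader(f)` for `f = open('all_data.csv', encoding='utf-8-sig')`.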

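```python
import csv
import os

import numpy as np
from matplotlib import pyplot as plt


def split_by_home_team(src='all_data.csv', out_dir='mainname'):
    """Split the combined CSV into one file per home team holding its first-goal times.

    This only needs to run once.
    """
    os.makedirs(out_dir, exist_ok=True)
    with open(src, 'r', encoding='utf-8-sig') as f:
        for line in csv.reader(f):
            home_team = line[0]
            # Append mode, so each match adds one row to the team's file
            path = os.path.join(out_dir, home_team + '.csv')
            with open(path, 'a', newline='', encoding='utf-8-sig') as e:
                csv.writer(e).writerow([line[1]])


# Run the split once; skip it when the per-team files already exist
if not os.path.isdir('mainname'):
    split_by_home_team()
os.makedirs('Pictures', exist_ok=True)

# The files in mainname/ come from the split step above
for csvfile in os.listdir('mainname'):
    if not csvfile.endswith('.csv'):
        continue
    print(csvfile)
    time_list = []
    with open(os.path.join('mainname', csvfile), 'r', encoding='utf-8-sig') as f:
        for line in csv.reader(f):
            # Goalless matches are plotted at 100, to the right of the 90-minute mark
            if line[0] == 'None':
                time_list.append(100)
            else:
                time_list.append(int(line[0]))
    team = csvfile[:-4]  # file name without ".csv"
    plt.figure(figsize=(12, 6))
    plt.title(team)
    plt.hist(time_list, rwidth=0.95, bins=20)
    plt.xticks(np.arange(0, 100, 5))
    plt.xlabel('min')
    plt.savefig(os.path.join('Pictures', team + '.tiff'), dpi=60)
    plt.show()
    plt.close()  # free the figure before the next team
```

5. Data presentation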

There is a chart like this for every team; I won't show them all here.
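Instead of one chart per team, the same distribution can be summarised as counts per 15-minute period, which fits every team into a single table. A sketch assuming a list of first-goal minutes for one team (an int, or None for a goalless match); `period_counts` is a hypothetical helper and the input minutes are invented. The bins use the recorded minute, so a first-half stoppage-time goal recorded as 45 falls in the 45-60 period.

```python
import numpy as np

# 15-minute periods; the last bin [90, 130] also holds minutes recorded as 90 or later
EDGES = [0, 15, 30, 45, 60, 75, 90, 130]
LABELS = ['0-15', '15-30', '30-45', '45-60', '60-75', '75-90', '90+']


def period_counts(minutes):
    """Return {period label: number of first goals}, plus the count of goalless matches."""
    scored = [m for m in minutes if m is not None]
    counts, _ = np.histogram(scored, bins=EDGES)
    table = dict(zip(LABELS, counts.tolist()))
    table['no goal'] = len(minutes) - len(scored)
    return table


# Invented minutes for one team
print(period_counts([12, 3, 44, 90, None, 67]))
```

Each team's result becomes one row of the table, for example by building a dict of team name to `period_counts(...)`.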