Rethinking the value of network pruning

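I recently applied the Highway idea to gait recognition, which I think counts as a novel contribution, but friends suggested I read "Rethinking the value of resnet", saying it might give me some ideas. So today I am reading it. The post mostly takes the form of a translation: each English paragraph from the paper is followed by my rendering of it.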

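Addendum: this is a little heartbreaking. After I raised the average accuracy on the cross-view problem in gait recognition by 8 percentage points, friends in my group chat suggested I read "Rethinking the value of resnet". I searched Google over and over, and the closest match I could find was "Rethinking the value of network pruning". The title was not the one I was looking for, but I figured the content might be the same, since it was the only paper I could find. Once I had finished translating and understanding it and realized it was something else entirely, I went back and asked the group. They told me they had only been joking and that I should not have taken it seriously. One person joking is one thing, but that many people joking together, all with such a straight face... Sigh. When will I break the bad habit of taking casual remarks seriously? So this post is about network pruning, not about "the value of resnet".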

Abstract

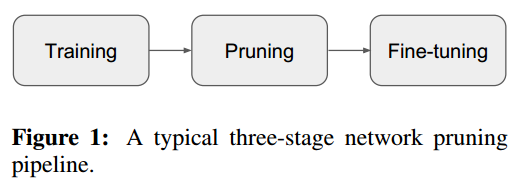

Network pruning is widely used for reducing the heavy computational cost of deep networks. A typical pruning algorithm is a three-stage pipeline, i.e., training (a large model), pruning and fine-tuning, and each of the three stages is considered as indispensable. In this work, we make several surprising observations which contradict common beliefs. For all the six state-of-the-art pruning algorithms we examined, fine-tuning a pruned model only gives comparable or even worse performance than training that model with randomly initialized weights. For pruning algorithms which assume a predefined architecture of the target pruned network, one can completely get rid of the pipeline and directly train the target network from scratch. Our observations are consistent for a wide variety of pruning algorithms with multiple network architectures, datasets, and tasks. Our results have several implications: 1) training an over-parameterized model is not necessary to obtain an efficient final model, 2) learned “important” weights of the large model are not necessarily helpful for the small pruned model, 3) the pruned architecture itself, rather than a set of inherited “important” weights, is what leads to the efficiency benefit in the final model, which suggests that some pruning algorithms could be seen as performing network architecture search.

Abstract (my rendering)

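Network pruning is widely used to cut the heavy computational cost of deep networks. A typical pruning algorithm is a three-stage pipeline (train a large model, prune it, fine-tune it), and each of the three stages is regarded as indispensable. In this work we make several surprising observations that contradict common beliefs. For all six state-of-the-art pruning algorithms we tested, fine-tuning the pruned model gives performance that is only comparable to, or even worse than, training the same model from randomly initialized weights. For pruning algorithms that assume a predefined architecture for the target pruned network, the pipeline can be dropped entirely and the target network trained directly from scratch. These observations hold across a wide range of pruning algorithms, network architectures, datasets, and tasks. The results have several implications: 1) training an over-parameterized model is not necessary to obtain an efficient final model; 2) the learned “important” weights of the large model are not necessarily helpful to the small pruned model; 3) it is the pruned architecture itself, rather than a set of inherited “important” weights, that gives the final model its efficiency benefit, which suggests that some pruning algorithms can be viewed as performing network architecture search.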

Introduction

Over-parameterization is a widely-recognized property of deep neural networks (Denton et al., 2014; Ba & Caruana, 2014), which leads to high computational cost and high memory footprint. As a remedy, network pruning (LeCun et al., 1990; Hassibi & Stork, 1993; Han et al., 2015; Molchanov et al., 2016; Li et al., 2017) has been identified as an effective technique to improve the efficiency of deep networks for applications with limited computational budget. A typical procedure of network pruning consists of three stages: 1) train a large, over-parameterized model, 2) prune the trained large model according to a certain criterion, and 3) fine-tune the pruned model to regain the lost performance.

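Over-parameterization is a widely recognized property of deep neural networks (Denton et al., 2014; Ba & Caruana, 2014), and it leads to high computational cost and a large memory footprint. As a remedy, network pruning (LeCun et al., 1990; Hassibi & Stork, 1993; Han et al., 2015; Molchanov et al., 2016; Li et al., 2017) has been identified as an effective way to make deep networks more efficient for applications with a limited computational budget. The typical pruning procedure has three stages: 1) train a large, over-parameterized model; 2) prune the trained large model according to some criterion; 3) fine-tune the pruned model to recover the lost performance.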

Generally, there are two common beliefs behind this pruning procedure. First, it is believed that starting with training a large, over-parameterized network is important (Luo et al., 2017), as it provides a high-performance model (due to stronger representation & optimization power) from which one can remove a set of redundant parameters without significantly hurting the accuracy. This is usually reported to be superior to directly training a smaller network from scratch. Second, both the pruned architecture and its associated weights are believed to be essential for obtaining the final efficient model. Thus most existing pruning techniques choose to fine-tune a pruned model instead of training it from scratch. The preserved weights after pruning are usually considered to be critical, as how to accurately select the set of important weights is a very active research topic in the literature (Han et al., 2015; Hu et al., 2016; Li et al., 2017; Liu et al., 2017; Luo et al., 2017; He et al., 2017b; Ye et al., 2018).

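Broadly, two common beliefs sit behind this pruning procedure. First, people believe it is important to start by training a large, over-parameterized network (Luo et al., 2017), because it yields a high-performance model (thanks to stronger representation and optimization power) from which a set of redundant parameters can be removed without noticeably hurting accuracy. This is usually reported to beat training a smaller network directly from scratch. Second, both the pruned architecture and its associated weights are believed to be essential for obtaining the final efficient model. Most existing pruning techniques therefore fine-tune the pruned model instead of training it from scratch. The weights preserved after pruning are usually considered critical, which is why how to select the set of important weights accurately is a very active research topic (Han et al., 2015; Hu et al., 2016; Li et al., 2017; Liu et al., 2017; Luo et al., 2017; He et al., 2017b; Ye et al., 2018).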

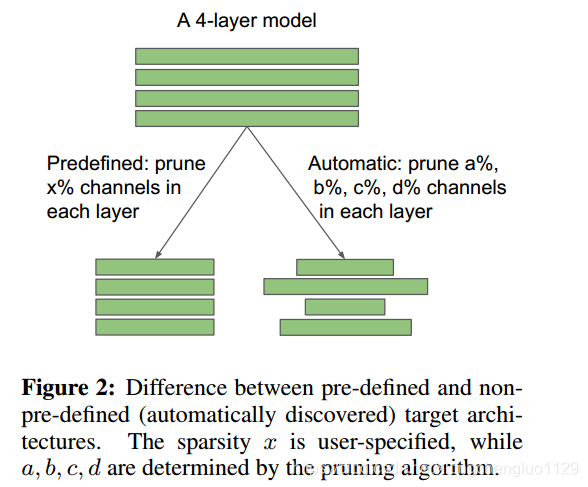

In this work, we show that both of the beliefs mentioned above are not necessarily true. Based on an extensive empirical evaluation of existing pruning algorithms on multiple datasets with multiple network architectures, we make two surprising observations. First, for pruning algorithms with pre-defined target network architectures (Figure 2), directly training the small target model from random initialization can achieve the same, if not better, performance, as the model obtained from the three-stage pipeline. This implies that starting with a large model is not necessary. Second, for pruning algorithms without a pre-defined target network, training the pruned model from scratch can also achieve comparable or even better performance than fine-tuning. This observation shows that for these pruning algorithms, what matters is the obtained architecture, instead of the preserved weights, despite training the large model is required to find that target architecture. The contradiction between our results and those reported in the literature might be explained by less carefully chosen hyper-parameters, data augmentation scheme and unfair computation budget for training.

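In this work we show that neither of the two beliefs above is necessarily true. Based on an extensive empirical evaluation of existing pruning algorithms on multiple datasets with multiple network architectures, we make two surprising observations. First, for pruning algorithms with a predefined target network architecture (Figure 2), training the small target model directly from random initialization achieves the same performance as the model obtained from the three-stage pipeline, if not better. This means that starting from a large model is not necessary. Second, for pruning algorithms without a predefined target network, training the pruned model from scratch can also match or even exceed fine-tuning. This shows that for these algorithms what matters is the architecture obtained, not the weights preserved, even though training the large model is still needed to find that target architecture. The contradiction between our results and those reported in the literature may be explained by less carefully chosen hyper-parameters, the data augmentation scheme, and an unfair computation budget for training.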

Our results advocate a rethinking of existing network pruning algorithms. It seems that the overparameterization during the first-stage training is not as beneficial as previously thought. Also, inheriting weights from a large model is not necessarily optimal, and might trap the pruned model into a bad local minimum, even if the weights are considered “important” by the pruning criterion. Instead, our results suggest that the value of some pruning algorithms may lie in identifying efficient structures and performing architecture search, rather than selecting “important” weights. We verify this hypothesis through a set of carefully designed experiments described in Section 5.

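Our results call for a rethinking of existing network pruning algorithms. The over-parameterization during first-stage training does not seem to be as beneficial as previously thought. In addition, inheriting weights from a large model is not necessarily optimal and may trap the pruned model in a bad local minimum, even when the pruning criterion considers those weights “important”. Instead, our results suggest that the value of some pruning algorithms may lie in identifying efficient structures and performing architecture search, rather than in selecting “important” weights. We verify this hypothesis through a set of carefully designed experiments described in Section 5.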

The rest of the paper is organized as follows: in Section 2, we introduce the background and some related works on network pruning; in Section 3, we describe our methodology for training the pruned model from scratch; in Section 4 we experiment on various pruning methods to show our main results for both pruning methods with pre-defined or automatically discovered target architectures; in Section 5, we argue that the value of some pruning methods indeed lies in searching efficient network architectures, as supported by experiments; in Section 6 we discuss some implications and conclude the paper.

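The rest of the paper is organized as follows. Section 2 introduces the background and some related work on network pruning. Section 3 describes our methodology for training the pruned model from scratch. Section 4 runs experiments on various pruning methods to present our main results, covering methods with predefined target architectures as well as methods with automatically discovered ones. Section 5 argues, with supporting experiments, that the value of some pruning methods does lie in searching for efficient network architectures. Section 6 discusses some implications and concludes the paper.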

Recent success of deep convolutional networks (Girshick et al., 2014; Long et al., 2015; He et al., 2016; 2017a) has been coupled with increased requirement of computation resources. In particular, the model size, memory footprint, the number of computation operations (FLOPs) and power usage are major aspects inhibiting the use of deep neural networks in some resource-constrained settings. Those large models can be infeasible to store, and run in real time on embedded systems. To address this issue, many methods have been proposed such as low-rank approximation of weights (Denton et al., 2014; Lebedev et al., 2014), weight quantization (Courbariaux et al., 2016; Rastegari et al.), knowledge distillation (Hinton et al., 2014; Romero et al., 2015) and network pruning (Han et al., 2015; Li et al., 2017), among which network pruning has gained notable attention due to their competitive performance and compatibility.

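The recent success of deep convolutional networks (Girshick et al., 2014; Long et al., 2015; He et al., 2016; 2017a) has come with growing demand for computational resources. In particular, model size, memory footprint, the number of computation operations (FLOPs), and power usage are the main factors that hold back the use of deep neural networks in some resource-constrained settings. Such large models can be infeasible to store and to run in real time on embedded systems. To address this, many methods have been proposed, such as low-rank approximation of weights (Denton et al., 2014; Lebedev et al., 2014), weight quantization (Courbariaux et al., 2016; Rastegari et al.), knowledge distillation (Hinton et al., 2014; Romero et al., 2015), and network pruning (Han et al., 2015; Li et al., 2017), among which network pruning has drawn notable attention for its competitive performance and compatibility.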

One major branch of network pruning methods is individual weight pruning, and it dates back to Optimal Brain Damage (LeCun et al., 1990) and Optimal Brain Surgeon (Hassibi & Stork, 1993), which prune weights based on Hessian of the loss function. More recently, Han et al. (2015) proposes to prune network weights with small magnitude, and this technique is further incorporated into the “Deep Compression” pipeline (Han et al., 2016). Srinivas & Babu (2015) proposes a data-free algorithm to remove redundant neurons iteratively. However, one drawback of these non-structured pruning methods is that the resulting weight matrices are sparse, which cannot lead to compression and speedup without dedicated hardware/libraries.

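One major branch of network pruning methods is individual weight pruning. It dates back to Optimal Brain Damage (LeCun et al., 1990) and Optimal Brain Surgeon (Hassibi & Stork, 1993), which prune weights based on the Hessian of the loss function. More recently, Han et al. (2015) proposed pruning network weights of small magnitude, and this technique was later incorporated into the “Deep Compression” pipeline (Han et al., 2016). Srinivas & Babu (2015) proposed a data-free algorithm that removes redundant neurons iteratively. A drawback of these non-structured pruning methods, however, is that the resulting weight matrices are sparse, so without dedicated hardware or libraries they deliver neither compression nor speedup. My note: the non-structured pruning mentioned here prunes individual weights directly.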
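To make the magnitude criterion concrete for myself, here is a minimal sketch in plain Python. The function name `magnitude_prune`, the toy weight matrix, and the 50% sparsity are my own illustrative assumptions, not code from Han et al. (2015). It shows why the result is "non-structured": the matrix keeps its shape and only gains zeros.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of entries with the smallest |w|.

    `weights` is a list of rows of floats. Returns (pruned, mask).
    Ties at the threshold are all pruned, so slightly more than the
    requested fraction can be removed when magnitudes repeat.
    """
    flat = sorted(abs(w) for row in weights for w in row)
    n_prune = int(len(flat) * sparsity)
    # Largest magnitude that still gets pruned (nothing is pruned if n_prune == 0).
    threshold = flat[n_prune - 1] if n_prune else float("-inf")
    mask = [[0 if abs(w) <= threshold else 1 for w in row] for row in weights]
    pruned = [[w if m else 0.0 for w, m in zip(row, mrow)]
              for row, mrow in zip(weights, mask)]
    return pruned, mask


# Hypothetical 2x3 weight matrix.
weights = [[0.9, -0.05, 0.3],
           [-0.01, 0.6, -0.2]]
pruned, mask = magnitude_prune(weights, sparsity=0.5)
print(mask)    # [[1, 0, 1], [0, 1, 0]]
print(pruned)  # [[0.9, 0.0, 0.3], [0.0, 0.6, 0.0]]
```

The pruned matrix is still 2x3, so a dense matrix multiply does exactly as much work as before. That is the drawback the paragraph above points out.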

In contrast, structured pruning methods prune at the level of channels or even layers. Since the original convolution structure is still preserved, no dedicated hardware/libraries are required to realize the benefits. Among structured pruning methods, channel pruning is the most popular, since it operates at the most fine-grained level while still fitting in conventional deep learning frameworks.

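In contrast, structured pruning methods prune at the level of channels or even whole layers. Because the original convolution structure is preserved, no dedicated hardware or libraries are needed to realize the benefits. Among structured pruning methods, channel pruning is the most popular, since it operates at the finest granularity that still fits conventional deep learning frameworks.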

Some heuristic methods include pruning channels based on their corresponding filter weight norm (Li et al., 2017) and average percentage of zeros in the output (Hu et al., 2016). Group sparsity is also widely used to smooth the pruning process after training (Wen et al., 2016; Alvarez & Salzmann, 2016; Lebedev & Lempitsky, 2016; Zhou et al., 2016). Liu et al. (2017) and Ye et al. (2018) impose sparsity constraints on channel-wise scaling factors during training, whose magnitudes are then used for channel pruning. Huang & Wang (2018) uses a similar idea to prune coarser structures such as residual blocks. He et al. (2017b) and Luo et al. (2017) minimize the next layer’s feature reconstruction error to determine which channels to keep. Similarly, Yu et al. (2018) optimizes the reconstruction error of the final response layer and propagates an “importance score” for each channel. Molchanov et al. (2016) use Taylor expansion to approximate each channel’s influence over the final loss and prune accordingly. Suau et al. (2018) analyze the intrinsic correlation within each layer and prune redundant channels.

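Some heuristic methods prune channels according to the norm of the corresponding filter weights (Li et al., 2017) or the average percentage of zeros in the output (Hu et al., 2016). Group sparsity (I do not fully understand this one) is also widely used to smooth the pruning process after training (Wen et al., 2016; Alvarez & Salzmann, 2016; Lebedev & Lempitsky, 2016; Zhou et al., 2016). Liu et al. (2017) and Ye et al. (2018) impose sparsity constraints on channel-wise scaling factors during training, and the magnitudes of those factors are then used for channel pruning. Huang & Wang (2018) use a similar idea to prune coarser structures such as residual blocks. He et al. (2017b) and Luo et al. (2017) minimize the feature reconstruction error of the next layer to decide which channels to keep. Similarly, Yu et al. (2018) optimize the reconstruction error of the final response layer and propagate an “importance score” to each channel. Molchanov et al. (2016) use a Taylor expansion to approximate each channel's influence on the final loss and prune accordingly. Suau et al. (2018) analyze the intrinsic correlation within each layer and prune redundant channels. (I find this part very interesting and will come back to it later to understand these methods properly.)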
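As a note to my future self, the filter-norm heuristic can be sketched in a few lines of plain Python. This is my own toy illustration of ranking filters by L1 norm, in the spirit of Li et al. (2017). The helper names, the nested-list weight layout (`conv_weight[o]` holds output channel `o`), and the numbers are assumptions made for the example.

```python
def l1_norm(filter_weights):
    """Sum of absolute values over a nested list of any depth."""
    if isinstance(filter_weights, (int, float)):
        return abs(filter_weights)
    return sum(l1_norm(w) for w in filter_weights)


def prune_filters(conv_weight, keep_ratio):
    """Keep the filters (output channels) with the largest L1 norm.

    Returns (kept_indices, smaller_weight); the result is a smaller dense
    tensor rather than a sparse one.
    """
    n_keep = max(1, round(len(conv_weight) * keep_ratio))
    norms = [l1_norm(f) for f in conv_weight]
    ranked = sorted(range(len(conv_weight)), key=lambda o: norms[o], reverse=True)
    kept = sorted(ranked[:n_keep])  # preserve the original channel order
    return kept, [conv_weight[o] for o in kept]


# Hypothetical layer: 4 filters, each with 1 input channel and a 2x2 kernel.
conv_weight = [
    [[[0.1, -0.1], [0.0, 0.1]]],   # L1 norm 0.3
    [[[1.0, -2.0], [0.5, 0.5]]],   # L1 norm 4.0
    [[[0.2, 0.2], [0.2, 0.2]]],    # L1 norm 0.8
    [[[-1.0, 1.0], [1.0, 0.0]]],   # L1 norm 3.0
]
kept, smaller = prune_filters(conv_weight, keep_ratio=0.5)
print(kept)          # [1, 3]
print(len(smaller))  # 2
```

In a real network the matching input channels of the following layer would have to be removed as well. The sketch leaves that bookkeeping out.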

Our work is also related to some recent studies on the characteristics of pruning algorithms. Mittal et al. (2018) shows that random channel pruning (Anwar & Sung, 2016) can perform on par with a variety of more sophisticated pruning criteria, demonstrating the plasticity of network models. Zhu & Gupta (2018) shows that training a small-dense model cannot achieve the same accuracy as a pruned large-sparse model with identical memory footprint. In this work, we reveal a different and rather surprising characteristic of network pruning methods: fine-tuning the pruned model with inherited weights is no better than training it from scratch.

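Our work is also related to some recent studies on the characteristics of pruning algorithms. Mittal et al. (2018) show that random channel pruning (Anwar & Sung, 2016) can perform on par with a variety of more sophisticated pruning criteria, which demonstrates the plasticity of network models. Zhu & Gupta (2018) show that, at an identical memory footprint, training a small dense model cannot match the accuracy of a pruned large sparse model. In this work we reveal a different and rather surprising characteristic of network pruning methods: fine-tuning the pruned model with inherited weights is no better than training it from scratch.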

Methodology

In this section, we describe in detail our methodology for training a small target model from random initialization. Target Pruned Architectures. We first divide network pruning methods into two categories. In a pruning pipeline, the target pruned model’s architecture can be determined by either human (i.e., predefined) or the pruning algorithm (i.e., automatic)(see Figure 2).

Methodology (my rendering)

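In this section we describe in detail our methodology for training a small target model from random initialization. Target Pruned Architectures. We first divide network pruning methods into two categories. In a pruning pipeline, the architecture of the target pruned model can be determined either by a human (predefined) or by the pruning algorithm (automatic); see Figure 2.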

When human predefines the target architecture, a common criterion is the ratio of channels to prune in each layer. For example, we may want to prune 50% channels in each layer of VGG. In this case, no matter which specific channels are pruned, the target architecture remains the same, because the pruning algorithm only locally prunes the least important 50% channels in each layer. In practice, the ratio in each layer is usually selected through empirical studies or heuristics.

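When a human predefines the target architecture, a common criterion is the ratio of channels to prune in each layer. For example, we may want to prune 50% of the channels in every layer of VGG. In that case the target architecture stays the same no matter which specific channels are pruned, because the pruning algorithm only prunes, locally, the least important 50% of the channels in each layer. In practice, the ratio for each layer is usually chosen through empirical studies or heuristics.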
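A small sketch helped me see why the target architecture is fixed in advance in this case. The layer widths, the random importance scores, and the name `prune_locally` are hypothetical; the only point is that two completely different sets of scores produce the same per-layer channel counts.

```python
import random


def prune_locally(scores_per_layer, prune_ratio):
    """Drop the lowest-scoring channels within each layer.

    Returns, per layer, the sorted indices of the channels that are kept.
    """
    kept = []
    for scores in scores_per_layer:
        n_prune = int(len(scores) * prune_ratio)
        order = sorted(range(len(scores)), key=lambda c: scores[c])
        kept.append(sorted(order[n_prune:]))
    return kept


widths = [8, 16, 32]          # hypothetical channel counts per layer
rng = random.Random(0)
run_a = prune_locally([[rng.random() for _ in range(w)] for w in widths], 0.5)
run_b = prune_locally([[rng.random() for _ in range(w)] for w in widths], 0.5)

arch_a = [len(k) for k in run_a]
arch_b = [len(k) for k in run_b]
print(arch_a, arch_b)  # [4, 8, 16] [4, 8, 16]
```

Which channels survive differs between the two runs, but the shape of the pruned network does not, so it could just as well be written down and trained directly.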

When the target architecture is automatically determined by a pruning algorithm, it is usually based on a pruning criterion that globally compares the importance of structures (e.g., channels) across layers. Examples include Liu et al. (2017), Huang & Wang (2018), Molchanov et al. (2016) and Suau et al. (2018). Non-structured weight pruning (Han et al., 2015) also falls into this category, where the sparsity patterns are determined by the magnitude of trained weights.

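When the target architecture is determined automatically by a pruning algorithm, it is usually based on a pruning criterion that compares the importance of structures (e.g., channels) globally across layers. Examples include Liu et al. (2017), Huang & Wang (2018), Molchanov et al. (2016), and Suau et al. (2018). Non-structured weight pruning (Han et al., 2015) also falls into this category, with the sparsity pattern determined by the magnitude of the trained weights.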
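For the automatic case, here is a comparable sketch of a global criterion. The scores stand in for something like per-channel scaling factors, and both the numbers and the name `prune_globally` are made up for illustration. With one threshold shared across layers, the per-layer widths fall out of the trained scores instead of being fixed beforehand.

```python
def prune_globally(scores_per_layer, prune_ratio):
    """Prune the globally lowest-scoring channels.

    Returns the number of channels kept in each layer. Ties at the
    threshold are pruned together.
    """
    all_scores = sorted(s for layer in scores_per_layer for s in layer)
    n_prune = int(len(all_scores) * prune_ratio)
    threshold = all_scores[n_prune - 1] if n_prune else float("-inf")
    return [sum(1 for s in layer if s > threshold) for layer in scores_per_layer]


# Hypothetical importance scores for three layers of four channels each.
scores = [
    [0.9, 0.8, 0.7, 0.6],
    [0.5, 0.1, 0.05, 0.02],
    [0.4, 0.3, 0.2, 0.01],
]
discovered = prune_globally(scores, prune_ratio=0.5)
print(discovered)  # [4, 1, 1]
```

Pruning 50% within each layer would have given `[2, 2, 2]` here. The global criterion instead keeps the first layer intact and thins out the other two, which is why the large model has to be trained first to discover this architecture.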

Datasets, Network Architectures and Pruning Methods. In the network pruning literature, CIFAR-10, CIFAR-100 (Krizhevsky, 2009), and ImageNet (Deng et al., 2009) datasets are the de-facto benchmarks, while VGG (Simonyan & Zisserman, 2015), ResNet (He et al., 2016) and DenseNet (Huang et al., 2017) are the common network architectures. We evaluate three pruning methods with predefined target architectures, Li et al. (2017), Luo et al. (2017), He et al. (2017b) and three which automatically discovered target models, Liu et al. (2017), Huang & Wang (2018), Han et al. (2015). For the first five methods, we evaluate using the same (target model, dataset) pairs as presented in the original paper to keep our results comparable. For the last one (Han et al., 2015), we use the aforementioned architectures instead, since the ones in the original paper are no longer state-of-the-art. On CIFAR datasets, we run each experiment with 5 random seeds, and report the mean and standard deviation of the accuracy. For testing on the ImageNet, the image is first resized so that the shorter edge has length 256, and then center-cropped to be of 224×224.

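Datasets, Network Architectures and Pruning Methods. In the network pruning literature, the CIFAR-10, CIFAR-100 (Krizhevsky, 2009), and ImageNet (Deng et al., 2009) datasets are the de facto benchmarks, while VGG (Simonyan & Zisserman, 2015), ResNet (He et al., 2016), and DenseNet (Huang et al., 2017) are the common network architectures. We evaluate three pruning methods with predefined target architectures, Li et al. (2017), Luo et al. (2017), and He et al. (2017b), and three that discover the target model automatically, Liu et al. (2017), Huang & Wang (2018), and Han et al. (2015). For the first five methods we evaluate on the same (target model, dataset) pairs as in the original papers, to keep our results comparable. For the last one (Han et al., 2015) we use the architectures listed above instead, because those in the original paper are no longer state of the art. On the CIFAR datasets we run each experiment with 5 random seeds and report the mean and standard deviation of the accuracy. For testing on ImageNet, the image is first resized so that its shorter edge has length 256 and then center-cropped to 224×224.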
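The evaluation protocol at the end of this paragraph is easy to check with a little arithmetic. The sketch below is mine: the image size and the accuracy values are hypothetical, and I assume the sample standard deviation (`statistics.stdev`), since the text does not say which variant is reported.

```python
import statistics


def resize_shorter_edge(width, height, target=256):
    """New (width, height) after scaling so the shorter edge equals `target`."""
    scale = target / min(width, height)
    return round(width * scale), round(height * scale)


def center_crop_box(width, height, crop=224):
    """(left, top, right, bottom) of a centered crop x crop window."""
    left = (width - crop) // 2
    top = (height - crop) // 2
    return left, top, left + crop, top + crop


def summarize(accuracies):
    """Mean and sample standard deviation over random seeds."""
    return statistics.mean(accuracies), statistics.stdev(accuracies)


resized = resize_shorter_edge(500, 375)   # hypothetical 500x375 test image
box = center_crop_box(*resized)
print(resized, box)  # (341, 256) (58, 16, 282, 240)

# Hypothetical accuracies from 5 seeds on CIFAR.
mean_acc, std_acc = summarize([93.5, 93.7, 93.6, 93.4, 93.8])
print(f"{mean_acc:.2f} (±{std_acc:.2f})")
```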

Training Budget. One crucial question is how long we should train the small pruned model from scratch? Naively training for the same number of epochs as we train the large model might be unfair, since the small pruned model requires significantly less computation for one epoch. Alternatively, we could compute the floating point operations (FLOPs) for both the pruned and large models, and choose the number of training epoch for the pruned model that would lead to the same amount of computation as training the large model.

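Training Budget. A crucial question is how long the small pruned model should be trained from scratch. Naively training it for the same number of epochs as the large model may be unfair, because the small pruned model needs significantly less computation per epoch. Alternatively, we can compute the floating-point operations (FLOPs) of both the pruned and the large model, and choose the number of training epochs for the pruned model so that its total computation matches that of training the large model.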

In our experiments, we use Scratch-E to denote training the small pruned models for the same epochs, and Scratch-B to denote training for the same amount of computation budget. One may argue that we should instead train the small target model for fewer epochs since it typically converges faster. However, in practice we found that increasing the training epochs within a reasonable range is rarely harmful. We hypothesize that this is because smaller models are less prone to over-fitting.

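In our experiments, we use **Scratch-E** to denote training the small pruned model for the same number of epochs, and **Scratch-B** to denote training it with the same computation budget. One could argue that the small target model should instead be trained for fewer epochs, since it usually converges faster. In practice, however, we found that increasing the number of training epochs within a reasonable range is rarely harmful. We hypothesize that this is because smaller models are less prone to over-fitting.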
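My reading of the two budgets, as a sketch: Scratch-E reuses the epoch count of the large model, while Scratch-B scales it by the FLOPs ratio so that the total computation matches. The function name and the numbers are hypothetical, and I assume the per-epoch cost is proportional to the model's FLOPs.

```python
def scratch_epochs(large_epochs, large_flops, pruned_flops):
    """Return (Scratch-E epochs, Scratch-B epochs) for a pruned model.

    Scratch-E: same number of epochs as the large model.
    Scratch-B: same total computation, assuming the cost of one epoch is
    proportional to the model's FLOPs.
    """
    scratch_e = large_epochs
    scratch_b = round(large_epochs * large_flops / pruned_flops)
    return scratch_e, scratch_b


# Hypothetical setup: the pruned model needs 2.5e8 FLOPs instead of 4.0e8.
e, b = scratch_epochs(large_epochs=160, large_flops=4.0e8, pruned_flops=2.5e8)
print(e, b)  # 160 256
```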

Implementation. In order to keep our setup as close to the original paper as possible, we use the following protocols: 1) If a previous pruning method’s training setup is publicly available, e.g., Liu et al. (2017) and Huang & Wang (2018), we adopt the original implementation; 2) Otherwise, for simpler pruning methods, e.g., Li et al. (2017) and Han et al. (2015), we re-implement the three-stage pruning procedure and achieve similar results to the original paper; 3) For the remaining two methods (Luo et al., 2017; He et al., 2017b), the pruned models are publicly available but without the training setup, thus we choose to re-train both large and small target models from scratch. Interestingly, the accuracy of our re-trained large model is higher than what is reported in the original paper. In this case, to accommodate the effects of different frameworks and training setups, we report the relative accuracy drop from the unpruned large model.

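Implementation. To keep our setup as close to the original papers as possible, we follow these protocols: 1) if a previous pruning method's training setup is publicly available, e.g., Liu et al. (2017) and Huang & Wang (2018), we adopt the original implementation; 2) otherwise, for simpler pruning methods, e.g., Li et al. (2017) and Han et al. (2015), we re-implement the three-stage pruning procedure and obtain results similar to the original paper; 3) for the remaining two methods (Luo et al., 2017; He et al., 2017b), the pruned models are publicly available but the training setup is not, so we re-train both the large and the small target models from scratch. Interestingly, the accuracy of our re-trained large model is higher than the one reported in the original paper. In this case, to account for the effects of different frameworks and training setups, we report the accuracy drop relative to the unpruned large model.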
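A tiny worked example of why the drop relative to the unpruned model is the fairer number when baselines differ between frameworks. All figures are invented, and I assume the drop is a plain difference in percentage points.

```python
def accuracy_drop(unpruned_acc, pruned_acc):
    """Accuracy lost by pruning, in percentage points (positive = worse)."""
    return round(unpruned_acc - pruned_acc, 2)


# Hypothetical numbers: same pruning method, two training setups whose
# unpruned baselines differ by two points.
reported = accuracy_drop(unpruned_acc=71.0, pruned_acc=69.0)
retrained = accuracy_drop(unpruned_acc=73.0, pruned_acc=71.2)
print(reported, retrained)  # 2.0 1.8
```

Comparing the raw 69.0 with 71.2 would mostly measure the difference between the two setups, while the drops (2.0 against 1.8) isolate the effect of pruning.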

In all these implementations, we use standard training hyper-parameters and data-augmentation schemes. For random weight initialization, we adopt the scheme proposed in He et al. (2015). For results of models fine-tuned from inherited weights, we either use the released models from original papers (for case 3 above) or follow the common practice of fine-tuning the model using the lowest learning rate when training the large model (Li et al., 2017; He et al., 2017b). The code to reproduce the results will be made publicly available.

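In all of these implementations we use standard training hyper-parameters and data augmentation schemes. For random weight initialization we adopt the scheme proposed by He et al. (2015). For the results of models fine-tuned from inherited weights, we either use the models released with the original papers (case 3 above) or follow the common practice of fine-tuning with the lowest learning rate used when training the large model (Li et al., 2017; He et al., 2017b). The code to reproduce the results will be made publicly available.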
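For reference, the initialization of He et al. (2015) draws weights from a zero-mean Gaussian with standard deviation sqrt(2 / fan_in). The sketch below is my own plain-Python illustration for a convolution layer. The function names, the layer sizes, the learning-rate schedule, and the choice of fan-in (rather than fan-out) are assumptions, not details taken from the paper's code.

```python
import math
import random


def he_normal_conv(out_channels, in_channels, kernel_size, seed=0):
    """Random conv weights with std = sqrt(2 / fan_in), as nested lists
    indexed [out_channel][in_channel][row][col]."""
    fan_in = in_channels * kernel_size * kernel_size
    std = math.sqrt(2.0 / fan_in)
    rng = random.Random(seed)
    return [[[[rng.gauss(0.0, std) for _ in range(kernel_size)]
              for _ in range(kernel_size)]
             for _ in range(in_channels)]
            for _ in range(out_channels)]


def fine_tune_lr(large_model_schedule):
    """Fine-tuning rate: the lowest rate used when training the large model."""
    return min(large_model_schedule)


weights = he_normal_conv(out_channels=64, in_channels=16, kernel_size=3)
print(len(weights), len(weights[0]), len(weights[0][0]), len(weights[0][0][0]))
# 64 16 3 3

# Hypothetical step schedule of the large model.
print(fine_tune_lr([0.1, 0.01, 0.001]))  # 0.001
```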

4 EXPERIMENTS

The original paper is clear enough on this part, so I will not translate it here.