Tendermint Source Code Analysis: Startup Flow

Preparing the arguments

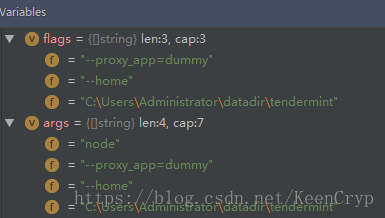

CLI arguments:

node --proxy_app=dummy --home "C:\Users\Administrator\datadir\tendermint"

Tendermint parses its CLI with the cobra library.

flags vs args

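Let's pause the program inside (c *Command) ExecuteC [/vendor/github.com/spf13/cobra/command.go#] at the following line:

    err = cmd.execute(flags)

At this point we can see that everything passed in on the command line counts as an argument (arg), while flags are the arguments that start with "--". Put differently, the flags are what remains once the arguments that do not start with "--" are set aside.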
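To make the distinction concrete, here is a minimal sketch that uses only the standard library. `splitArgs` is a hypothetical helper, not part of cobra, and it only understands the `--name=value` form; cobra itself also handles flags whose value arrives as a separate token, shorthand flags, and more.

```go
package main

import (
	"fmt"
	"strings"
)

// splitArgs is a hypothetical helper (not cobra's API). It separates
// command-line tokens into flags (tokens starting with "--") and the
// remaining positional arguments.
func splitArgs(tokens []string) (flags, args []string) {
	for _, t := range tokens {
		if strings.HasPrefix(t, "--") {
			flags = append(flags, t)
		} else {
			args = append(args, t)
		}
	}
	return flags, args
}

func main() {
	flags, args := splitArgs([]string{"node", "--proxy_app=dummy", "--home=/tmp/tendermint"})
	fmt.Println("flags:", flags)
	fmt.Println("args: ", args)
}
```

In cobra the positional remainder is what ends up in `argWoFlags`, the slice handed to `RunE` in the next section.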

Where the dream begins

Code location: /vendor/github.com/spf13/cobra/command.go#(c *Command) execute

    if c.RunE != nil {
        if err := c.RunE(c, argWoFlags); err != nil {
            return err
        }
    } else {
        c.Run(c, argWoFlags)
    }

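c.RunE(c, argWoFlags) is the root from which node startup is triggered; everything interesting starts here. The call invokes the anonymous function assigned to RunE, and that assignment happens in NewRunNodeCmd (/cmd/tendermint/commands/run_node.go):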

    // NewRunNodeCmd returns the command that allows the CLI to start a
    // node. It can be used with a custom PrivValidator and in-process ABCI application.
    func NewRunNodeCmd(nodeProvider nm.NodeProvider) *cobra.Command {
        cmd := &cobra.Command{
            Use:   "node",
            Short: "Run the tendermint node",
            RunE: func(cmd *cobra.Command, args []string) error { // anonymous function
                // Create & start node
                n, err := nodeProvider(config, logger)
                if err != nil {
                    return fmt.Errorf("Failed to create node: %v", err)
                }

                if err := n.Start(); err != nil {
                    return fmt.Errorf("Failed to start node: %v", err)
                } else {
                    logger.Info("Started node", "nodeInfo", n.Switch().NodeInfo()) // logs "Started node" once the node is up
                }

                // Trap signal, run forever.
                n.RunForever()

                return nil
            },
        }

        AddNodeFlags(cmd)
        return cmd
    }

This anonymous function does three things:

1. Creates the node (nodeProvider(config, logger))

2. Starts the node (n.Start())

3. Waits for a signal to stop the node (n.RunForever())

Let's go through them one at a time, starting with creating the node.

Creating the node

nodeProvider(config, logger) actually runs DefaultNewNode (/node/node.go#DefaultNewNode), which returns a pointer to a Node (*Node).

    // DefaultNewNode returns a Tendermint node with default settings for the
    // PrivValidator, ClientCreator, GenesisDoc, and DBProvider.
    // It implements NodeProvider.
    func DefaultNewNode(config *cfg.Config, logger log.Logger) (*Node, error) {
        return NewNode(config,
            types.LoadOrGenPrivValidatorFS(config.PrivValidatorFile()),
            proxy.DefaultClientCreator(config.ProxyApp, config.ABCI, config.DBDir()),
            DefaultGenesisDocProviderFunc(config),
            DefaultDBProvider,
            logger)
    }

The first argument DefaultNewNode passes to NewNode is config, which is defined in /cmd/tendermint/commands/root.go:

    var (
        config = cfg.DefaultConfig()
    )

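Note: the arguments a user passes on the command line are only a very small part of what is needed to start a node; most settings are loaded from the default configuration. The config global holds all the default settings a node needs (mainly the default base, RPC, P2P, mempool, consensus, and transaction-index configuration).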
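The "defaults first, command line on top" idea can be illustrated with the standard library's `flag` package. This is only a sketch of the pattern: the `config` struct, its three fields, the default values, and `parseNodeArgs` are all assumptions for illustration, and Tendermint itself wires its flags through cobra rather than `flag`.

```go
package main

import (
	"flag"
	"fmt"
)

// config is a hypothetical, heavily reduced stand-in for cfg.Config.
type config struct {
	ProxyApp string
	ABCI     string
	FastSync bool
}

// defaultConfig returns illustrative defaults (the values are assumptions).
func defaultConfig() *config {
	return &config{ProxyApp: "tcp://127.0.0.1:46658", ABCI: "socket", FastSync: true}
}

// parseNodeArgs starts from the defaults and lets command-line flags
// override only the fields the user actually supplied.
func parseNodeArgs(args []string) (*config, error) {
	c := defaultConfig()
	fs := flag.NewFlagSet("node", flag.ContinueOnError)
	fs.StringVar(&c.ProxyApp, "proxy_app", c.ProxyApp, "ABCI app address or built-in app name")
	fs.StringVar(&c.ABCI, "abci", c.ABCI, "ABCI transport")
	fs.BoolVar(&c.FastSync, "fast_sync", c.FastSync, "enable fast sync")
	if err := fs.Parse(args); err != nil {
		return nil, err
	}
	return c, nil
}

func main() {
	c, err := parseNodeArgs([]string{"--proxy_app=dummy"})
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	fmt.Printf("%+v\n", *c)
}
```

Only `ProxyApp` changes here; the other fields keep their defaults, which is the behavior the note above describes.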

The second argument is types.LoadOrGenPrivValidatorFS(config.PrivValidatorFile()), which returns a PrivValidatorFS instance.

The third argument is proxy.DefaultClientCreator(config.ProxyApp, config.ABCI, config.DBDir()). For DefaultClientCreator (/proxy/client.go#DefaultClientCreator), the first argument is config.ProxyApp = "dummy", the second is config.ABCI = "socket", and the third is config.DBDir() = "C:\Users\Administrator\datadir\tendermint\data".

The function that actually builds the Node, however, is NewNode. The code is in /node/node.go#NewNode.

    // NewNode returns a new, ready to go, Tendermint Node.
    func NewNode(config *cfg.Config,
        privValidator types.PrivValidator,
        clientCreator proxy.ClientCreator,
        genesisDocProvider GenesisDocProvider,
        dbProvider DBProvider,
        logger log.Logger) (*Node, error) {

        // Get BlockStore
        // initialize the blockstore database
        blockStoreDB, err := dbProvider(&DBContext{"blockstore", config})
        if err != nil {
            return nil, err
        }
        blockStore := bc.NewBlockStore(blockStoreDB)

        // Get State
        // initialize the state database
        stateDB, err := dbProvider(&DBContext{"state", config})
        if err != nil {
            return nil, err
        }

        // Get genesis doc
        // TODO: move to state package?
        // load the genesis doc from the state DB; if that fails, fall back to
        // genesisDocProvider (the genesis file on disk by default)
        genDoc, err := loadGenesisDoc(stateDB)
        if err != nil {
            genDoc, err = genesisDocProvider()
            if err != nil {
                return nil, err
            }
            // save genesis doc to prevent a certain class of user errors (e.g. when it
            // was changed, accidentally or not). Also good for audit trail.
            saveGenesisDoc(stateDB, genDoc)
        }

        state, err := sm.LoadStateFromDBOrGenesisDoc(stateDB, genDoc)
        if err != nil {
            return nil, err
        }

        // Create the proxyApp, which manages connections (consensus, mempool, query)
        // and sync tendermint and the app by performing a handshake
        // and replaying any necessary blocks
        consensusLogger := logger.With("module", "consensus")
        handshaker := cs.NewHandshaker(stateDB, state, blockStore)
        handshaker.SetLogger(consensusLogger)
        proxyApp := proxy.NewAppConns(clientCreator, handshaker)
        proxyApp.SetLogger(logger.With("module", "proxy"))
        // Start()
        if err := proxyApp.Start(); err != nil {
            return nil, fmt.Errorf("Error starting proxy app connections: %v", err)
        }

        // reload the state (it may have been updated by the handshake)
        state = sm.LoadState(stateDB)

        // Decide whether to fast-sync or not
        // We don't fast-sync when the only validator is us.
        fastSync := config.FastSync // fast sync is enabled by default
        if state.Validators.Size() == 1 {
            addr, _ := state.Validators.GetByIndex(0) // returns the validator's address
            if bytes.Equal(privValidator.GetAddress(), addr) {
                fastSync = false // disable fast sync when this node is the only validator
            }
        }

        // Log whether this node is a validator or an observer
        if state.Validators.HasAddress(privValidator.GetAddress()) {
            consensusLogger.Info("This node is a validator", "addr", privValidator.GetAddress(), "pubKey", privValidator.GetPubKey())
        } else {
            consensusLogger.Info("This node is not a validator", "addr", privValidator.GetAddress(), "pubKey", privValidator.GetPubKey())
        }

        // Make MempoolReactor
        mempoolLogger := logger.With("module", "mempool")
        // create the mempool
        mempool := mempl.NewMempool(config.Mempool, proxyApp.Mempool(), state.LastBlockHeight)
        mempool.InitWAL() // no need to have the mempool wal during tests
        mempool.SetLogger(mempoolLogger)
        mempoolReactor := mempl.NewMempoolReactor(config.Mempool, mempool)
        mempoolReactor.SetLogger(mempoolLogger)

        if config.Consensus.WaitForTxs() {
            mempool.EnableTxsAvailable()
        }

        // Make Evidence Reactor
        evidenceDB, err := dbProvider(&DBContext{"evidence", config})
        if err != nil {
            return nil, err
        }
        evidenceLogger := logger.With("module", "evidence")
        evidenceStore := evidence.NewEvidenceStore(evidenceDB)
        evidencePool := evidence.NewEvidencePool(stateDB, evidenceStore)
        evidencePool.SetLogger(evidenceLogger)
        evidenceReactor := evidence.NewEvidenceReactor(evidencePool)
        evidenceReactor.SetLogger(evidenceLogger)

        blockExecLogger := logger.With("module", "state")
        // make block executor for consensus and blockchain reactors to execute blocks
        blockExec := sm.NewBlockExecutor(stateDB, blockExecLogger, proxyApp.Consensus(), mempool, evidencePool)

        // Make BlockchainReactor
        bcReactor := bc.NewBlockchainReactor(state.Copy(), blockExec, blockStore, fastSync)
        bcReactor.SetLogger(logger.With("module", "blockchain"))

        // Make ConsensusReactor
        consensusState := cs.NewConsensusState(config.Consensus, state.Copy(),
            blockExec, blockStore, mempool, evidencePool)
        consensusState.SetLogger(consensusLogger)
        if privValidator != nil {
            consensusState.SetPrivValidator(privValidator)
        }
        consensusReactor := cs.NewConsensusReactor(consensusState, fastSync)
        consensusReactor.SetLogger(consensusLogger)

        p2pLogger := logger.With("module", "p2p")

        sw := p2p.NewSwitch(config.P2P)
        sw.SetLogger(p2pLogger)
        sw.AddReactor("MEMPOOL", mempoolReactor)
        sw.AddReactor("BLOCKCHAIN", bcReactor)
        sw.AddReactor("CONSENSUS", consensusReactor)
        sw.AddReactor("EVIDENCE", evidenceReactor)

        // Optionally, start the pex reactor
        var addrBook pex.AddrBook
        var trustMetricStore *trust.TrustMetricStore
        if config.P2P.PexReactor {
            addrBook = pex.NewAddrBook(config.P2P.AddrBookFile(), config.P2P.AddrBookStrict)
            addrBook.SetLogger(p2pLogger.With("book", config.P2P.AddrBookFile()))

            // Get the trust metric history data
            trustHistoryDB, err := dbProvider(&DBContext{"trusthistory", config})
            if err != nil {
                return nil, err
            }
            trustMetricStore = trust.NewTrustMetricStore(trustHistoryDB, trust.DefaultConfig())
            trustMetricStore.SetLogger(p2pLogger)

            var seeds []string
            if config.P2P.Seeds != "" {
                seeds = strings.Split(config.P2P.Seeds, ",")
            }
            pexReactor := pex.NewPEXReactor(addrBook,
                &pex.PEXReactorConfig{Seeds: seeds, SeedMode: config.P2P.SeedMode})
            pexReactor.SetLogger(p2pLogger)
            sw.AddReactor("PEX", pexReactor)
        }

        // Filter peers by addr or pubkey with an ABCI query.
        // If the query return code is OK, add peer.
        // XXX: Query format subject to change
        if config.FilterPeers {
            // NOTE: addr is ip:port
            sw.SetAddrFilter(func(addr net.Addr) error {
                resQuery, err := proxyApp.Query().QuerySync(abci.RequestQuery{Path: cmn.Fmt("/p2p/filter/addr/%s", addr.String())})
                if err != nil {
                    return err
                }
                if resQuery.IsErr() {
                    return fmt.Errorf("Error querying abci app: %v", resQuery)
                }
                return nil
            })
            sw.SetPubKeyFilter(func(pubkey crypto.PubKey) error {
                resQuery, err := proxyApp.Query().QuerySync(abci.RequestQuery{Path: cmn.Fmt("/p2p/filter/pubkey/%X", pubkey.Bytes())})
                if err != nil {
                    return err
                }
                if resQuery.IsErr() {
                    return fmt.Errorf("Error querying abci app: %v", resQuery)
                }
                return nil
            })
        }

        eventBus := types.NewEventBus()
        eventBus.SetLogger(logger.With("module", "events"))

        // services which will be publishing and/or subscribing for messages (events)
        // consensusReactor will set it on consensusState and blockExecutor
        consensusReactor.SetEventBus(eventBus)

        // Transaction indexing
        var txIndexer txindex.TxIndexer
        switch config.TxIndex.Indexer {
        case "kv":
            store, err := dbProvider(&DBContext{"tx_index", config})
            if err != nil {
                return nil, err
            }
            if config.TxIndex.IndexTags != "" {
                txIndexer = kv.NewTxIndex(store, kv.IndexTags(strings.Split(config.TxIndex.IndexTags, ",")))
            } else if config.TxIndex.IndexAllTags {
                txIndexer = kv.NewTxIndex(store, kv.IndexAllTags())
            } else {
                txIndexer = kv.NewTxIndex(store)
            }
        default:
            txIndexer = &null.TxIndex{}
        }

        indexerService := txindex.NewIndexerService(txIndexer, eventBus)

        // run the profile server
        profileHost := config.ProfListenAddress
        if profileHost != "" {
            go func() {
                logger.Error("Profile server", "err", http.ListenAndServe(profileHost, nil))
            }()
        }

        // create the node and assign its fields
        node := &Node{
            config:        config,
            genesisDoc:    genDoc,
            privValidator: privValidator,

            sw:               sw,
            addrBook:         addrBook,
            trustMetricStore: trustMetricStore,

            stateDB:          stateDB,
            blockStore:       blockStore,
            bcReactor:        bcReactor,
            mempoolReactor:   mempoolReactor,
            consensusState:   consensusState,
            consensusReactor: consensusReactor,
            evidencePool:     evidencePool,
            proxyApp:         proxyApp,
            txIndexer:        txIndexer,
            indexerService:   indexerService,
            eventBus:         eventBus,
        }
        node.BaseService = *cmn.NewBaseService(logger, "Node", node)
        return node, nil
    }
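One decision inside NewNode that is easy to miss is when fast sync gets switched off. The rule can be isolated as a small pure function. This is a sketch only: `shouldFastSync` is a hypothetical name, and validators are reduced to raw address bytes instead of Tendermint's validator set type.

```go
package main

import (
	"bytes"
	"fmt"
)

// shouldFastSync reproduces the rule from NewNode in isolation: keep the
// configured value unless this node is the one and only validator, in
// which case there is nobody to sync from and fast sync is disabled.
// validators holds validator addresses; self is this node's address.
func shouldFastSync(configured bool, validators [][]byte, self []byte) bool {
	if len(validators) == 1 && bytes.Equal(validators[0], self) {
		return false
	}
	return configured
}

func main() {
	me := []byte{0x01}
	other := []byte{0x02}

	fmt.Println(shouldFastSync(true, [][]byte{me}, me))        // sole validator is us
	fmt.Println(shouldFastSync(true, [][]byte{other}, me))     // sole validator is someone else
	fmt.Println(shouldFastSync(true, [][]byte{me, other}, me)) // several validators
}
```

The resulting value is then handed to both the blockchain reactor and the consensus reactor, as the listing above shows.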

At this point it is worth looking at the definition of Node:

    // Node is the highest level interface to a full Tendermint node.
    // It includes all configuration information and running services.
    type Node struct {
        cmn.BaseService // embedded type

        // config
        config        *cfg.Config
        genesisDoc    *types.GenesisDoc   // initial validator set
        privValidator types.PrivValidator // local node's validator key

        // network
        sw               *p2p.Switch             // p2p connections
        addrBook         pex.AddrBook            // known peers
        trustMetricStore *trust.TrustMetricStore // trust metrics for all peers

        // services
        eventBus         *types.EventBus // pub/sub for services
        stateDB          dbm.DB
        blockStore       *bc.BlockStore         // store the blockchain to disk
        bcReactor        *bc.BlockchainReactor  // for fast-syncing
        mempoolReactor   *mempl.MempoolReactor  // for gossipping transactions
        consensusState   *cs.ConsensusState     // latest consensus state
        consensusReactor *cs.ConsensusReactor   // for participating in the consensus
        evidencePool     *evidence.EvidencePool // tracking evidence
        proxyApp         proxy.AppConns         // connection to the application
        rpcListeners     []net.Listener         // rpc servers
        txIndexer        txindex.TxIndexer
        indexerService   *txindex.IndexerService
    }

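This reminds me of the Ethereum data structure in geth (/eth/backend.go#Ethereum). Node embeds cmn.BaseService, and under Go's embedding rules the outer type (Node) can reuse the methods and fields of the inner type (cmn.BaseService). Node itself implements the OnStart and OnStop methods of the Service interface. The inner type cmn.BaseService implements every method of Service (in practice it only really implements eight of them; its OnStart and OnStop do nothing, and those are exactly the two methods that the outer type Node provides). A Node value therefore satisfies Service.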
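The embedding trick is easier to see in a stripped-down model. The sketch below is not tmlibs code: `service`, `baseService`, and `node` are hypothetical, reduced to two methods, but the wiring (the base keeps a reference to the outer type and calls its OnStart) follows the same shape as `cmn.NewBaseService(logger, "Node", node)`.

```go
package main

import "fmt"

// service is a two-method stand-in for the much larger Service interface.
type service interface {
	Start() error
	OnStart() error
}

// baseService supplies Start and a do-nothing OnStart.
// impl points back at the outer type so Start can reach its OnStart.
type baseService struct {
	name string
	impl service
}

func newBaseService(name string, impl service) *baseService {
	return &baseService{name: name, impl: impl}
}

// Start dispatches to the implementation's OnStart, not to its own.
func (bs *baseService) Start() error { return bs.impl.OnStart() }

// OnStart is the default no-op that outer types are expected to override.
func (bs *baseService) OnStart() error { return nil }

// node embeds baseService and overrides only OnStart.
type node struct {
	baseService
	calls []string
}

func newNode() *node {
	n := &node{}
	n.baseService = *newBaseService("Node", n)
	return n
}

func (n *node) OnStart() error {
	n.calls = append(n.calls, "node.OnStart")
	return nil
}

func main() {
	n := newNode()
	// Start is promoted from baseService, yet it runs node.OnStart.
	if err := n.Start(); err != nil {
		fmt.Println("start failed:", err)
	}
	fmt.Println(n.calls)
}
```

Without the `impl` back-reference, `Start` would call the embedded no-op `OnStart`, because Go embedding is composition and has no virtual dispatch.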

Entry point for starting the node

Code location: /vendor/github.com/tendermint/tmlibs/common/service.go#(bs *BaseService) Start()

    // Start implements Service by calling OnStart (if defined). An error will be
    // returned if the service is already running or stopped. Not to start the
    // stopped service, you need to call Reset.
    func (bs *BaseService) Start() error {
        if atomic.CompareAndSwapUint32(&bs.started, 0, 1) {
            if atomic.LoadUint32(&bs.stopped) == 1 {
                bs.Logger.Error(Fmt("Not starting %v -- already stopped", bs.name), "impl", bs.impl)
                return ErrAlreadyStopped
            } else {
                bs.Logger.Info(Fmt("Starting %v", bs.name), "impl", bs.impl) // logs "Starting Node" with module=main impl=Node
            }
            err := bs.impl.OnStart() // runs OnStart in node/node.go
            if err != nil {
                // revert flag
                atomic.StoreUint32(&bs.started, 0)
                return err
            }
            return nil
        } else {
            bs.Logger.Debug(Fmt("Not starting %v -- already started", bs.name), "impl", bs.impl)
            return ErrAlreadyStarted
        }
    }

The function that actually does the starting is OnStart, defined in /node/node.go#OnStart()

    // OnStart starts the Node. It implements cmn.Service.
    func (n *Node) OnStart() error {
        err := n.eventBus.Start() // reuses (bs *BaseService) Start()
        if err != nil {
            return err
        }

        // Run the RPC server first
        // so we can eg. receive txs for the first block
        if n.config.RPC.ListenAddress != "" {
            listeners, err := n.startRPC() // start RPC
            if err != nil {
                return err
            }
            n.rpcListeners = listeners
        }

        // Create & add listener
        protocol, address := cmn.ProtocolAndAddress(n.config.P2P.ListenAddress)
        l := p2p.NewDefaultListener(protocol, address, n.config.P2P.SkipUPNP, n.Logger.With("module", "p2p"))
        n.sw.AddListener(l)

        // Generate node PrivKey
        // TODO: pass in like priv_val
        nodeKey, err := p2p.LoadOrGenNodeKey(n.config.NodeKeyFile())
        if err != nil {
            return err
        }
        n.Logger.Info("P2P Node ID", "ID", nodeKey.ID(), "file", n.config.NodeKeyFile())

        // Start the switch
        n.sw.SetNodeInfo(n.makeNodeInfo(nodeKey.PubKey()))
        n.sw.SetNodeKey(nodeKey)
        err = n.sw.Start()
        if err != nil {
            return err
        }

        // Always connect to persistent peers
        if n.config.P2P.PersistentPeers != "" {
            err = n.sw.DialPeersAsync(n.addrBook, strings.Split(n.config.P2P.PersistentPeers, ","), true)
            if err != nil {
                return err
            }
        }

        // start tx indexer
        return n.indexerService.Start()
    }

Stopping the node

When a TM node starts, it runs n.RunForever(), which waits for an interrupt signal and then stops the node.

    // RunForever waits for an interrupt signal and stops the node.
    func (n *Node) RunForever() {
        // Sleep forever and then...
        cmn.TrapSignal(func() {
            n.Stop() // calls (bs *BaseService) Stop
        })
    }

The signal handling itself is done by TrapSignal (vendor/github.com/tendermint/tmlibs/common/os.go):

    // TrapSignal catches the SIGTERM and executes cb function. After that it exits
    // with code 1.
    func TrapSignal(cb func()) {
        c := make(chan os.Signal, 1)
        signal.Notify(c, os.Interrupt, syscall.SIGTERM)
        go func() {
            for sig := range c {
                fmt.Printf("captured %v, exiting...\n", sig)
                if cb != nil {
                    cb()
                }
                os.Exit(1)
            }
        }()
        select {}
    }

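Despite what its doc comment says, TrapSignal listens for both os.Interrupt (SIGINT, which is what Ctrl+C sends) and SIGTERM. When either one arrives, it runs the callback to stop the node and then exits the process with code 1.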

The actual stop logic lives in OnStop (/node/node.go#OnStop()):

    // OnStop stops the Node. It implements cmn.Service.
    func (n *Node) OnStop() {
        n.BaseService.OnStop()

        n.Logger.Info("Stopping Node")
        // TODO: gracefully disconnect from peers.
        n.sw.Stop()

        for _, l := range n.rpcListeners {
            n.Logger.Info("Closing rpc listener", "listener", l)
            if err := l.Close(); err != nil {
                n.Logger.Error("Error closing listener", "listener", l, "err", err)
            }
        }

        n.eventBus.Stop()

        n.indexerService.Stop()
    }

Summary

This article is only a rough walk through the node startup flow in TM. I will refine the analysis in follow-up posts.