Using log4j with Scala in Spark throws a "not serializable" error

阿新 • Published: 2019-01-05

Caused by: java.io.NotSerializableException: org.apache.log4j.Logger

Serialization stack:

- object not serializable (class: org.apache.log4j.Logger, value: org.apache.log4j.Logger@…)

- field (class: com.bluedon.dataMatch.NTADataProcess, name: log, type: class org.apache.log4j.Logger)

- object (class com.bluedon.dataMatch.NTADataProcess, com.bluedon.dataMatch.NTADataProcess@…)

- field (class: com.bluedon.dataMatch.NTADataProcess$$anonfun$ntaflowProcess$1, name: $outer, type: class com.bluedon.dataMatch.NTADataProcess)

- object (class com.bluedon.dataMatch.NTADataProcess$$anonfun$ntaflowProcess$1, <function1>)

- field (class: com.bluedon.dataMatch.NTADataProcess$$anonfun$ntaflowProcess$1$$anonfun$apply$1, name: $outer, type: class com.bluedon.dataMatch.NTADataProcess$$anonfun$ntaflowProcess$1)

- object (class com.bluedon.dataMatch.NTADataProcess$$anonfun$ntaflowProcess$1$$anonfun$apply$1, <function1>)

at org.apache.spark.serializer.SerializationDebugger$.improveException(SerializationDebugger.scala:40)

at org.apache.spark.serializer.JavaSerializationStream.writeObject(JavaSerializer.scala:46)

at org.apache.spark.serializer.JavaSerializerInstance.serialize(JavaSerializer.scala:100)

at org.apache.spark.util.ClosureCleaner$.ensureSerializable(ClosureCleaner.scala:295)

... 30 more

The logging trait is defined as:

    import org.apache.log4j.Logger

    trait LogSupport {
      val log = Logger.getLogger(this.getClass)
    }
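Spark serializes task closures with Java serialization (note `JavaSerializer` in the stack trace), so the failure can be reproduced without Spark. The sketch below is a minimal illustration under assumptions: `FakeLogger` is a hypothetical stand-in for `org.apache.log4j.Logger` (deliberately not `Serializable`), and `Processor` is a hypothetical class playing the role of `NTADataProcess`. Instead of building a closure, it serializes the instance directly, which is what the `$outer` chain in the serialization stack makes Spark do.

```scala
import java.io.{ByteArrayOutputStream, NotSerializableException, ObjectOutputStream}

// Hypothetical stand-in for org.apache.log4j.Logger: deliberately NOT Serializable.
class FakeLogger(val name: String) {
  def info(msg: String): Unit = println(s"[$name] $msg")
}

// Same shape as the original LogSupport trait: an eager, non-transient field.
trait LogSupport {
  val log = new FakeLogger(this.getClass.getName)
}

// Hypothetical class mirroring NTADataProcess: it is Serializable, its log field is not.
class Processor extends LogSupport with Serializable

object ReproduceFailure {
  // Returns true if Java serialization of obj throws NotSerializableException.
  def failsToSerialize(obj: AnyRef): Boolean = {
    val out = new ObjectOutputStream(new ByteArrayOutputStream())
    try { out.writeObject(obj); false }
    catch { case _: NotSerializableException => true }
    finally out.close()
  }

  def main(args: Array[String]): Unit =
    println(failsToSerialize(new Processor)) // prints "true"
}
```

Making the class `Serializable` does not help, because Java serialization still has to write every non-transient field, including `log`.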

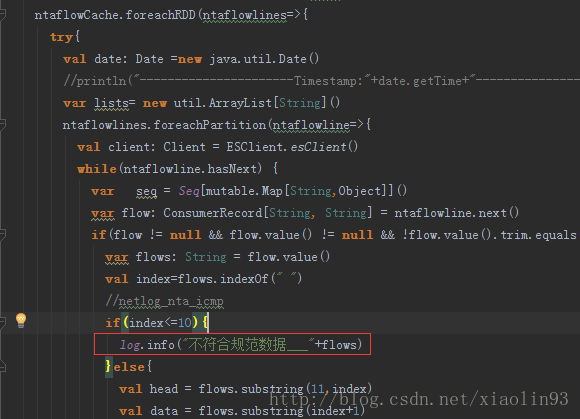

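The cause: the RDD operations inside foreachRDD run on the executors, partition by partition in parallel, so Spark has to serialize the functions passed to them. In the serialization stack above, the nested function reaches the enclosing NTADataProcess instance through its `$outer` fields, and that instance's `log` field is an org.apache.log4j.Logger, which is not serializable. This is why the error occurs even if LogSupport implements Serializable. The fix is to declare the logger in LogSupport as: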

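    @transient lazy val log = Logger.getLogger(this.getClass)

`@transient` makes Java serialization skip the field, so the logger is no longer written out together with the closure. `lazy` makes each deserialized copy create its own logger the first time `log` is used on the executor; a plain `@transient val` would be left as null after deserialization.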
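The effect of `@transient lazy val` can be checked with a plain Java serialization round trip, roughly what happens when a task closure and its captured instance reach an executor. This is a minimal sketch assuming Scala 2.12 or 2.13: `StubLogger`, `SafeProcessor`, `roundTrip` and `TransientLazyDemo` are hypothetical names, `StubLogger` stands in for `org.apache.log4j.Logger`, and to keep it short the field sits directly in a class rather than in the `LogSupport` trait.

```scala
import java.io._

// Hypothetical stand-in for org.apache.log4j.Logger: NOT Serializable.
class StubLogger(val name: String)

object StubLogger {
  // Counts how many loggers have been created, to make the lazy re-initialization visible.
  @volatile var created = 0
  def get(cls: Class[_]): StubLogger = { created += 1; new StubLogger(cls.getName) }
}

// The fix applied to a class: the field is skipped by serialization and rebuilt lazily.
class SafeProcessor extends Serializable {
  @transient lazy val log: StubLogger = StubLogger.get(this.getClass)
}

object TransientLazyDemo {
  // Serialize and deserialize obj, loosely imitating how Spark ships a task.
  def roundTrip[T <: AnyRef](obj: T): T = {
    val bytes = new ByteArrayOutputStream()
    val out = new ObjectOutputStream(bytes)
    out.writeObject(obj)
    out.close()
    val in = new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray))
    try in.readObject().asInstanceOf[T] finally in.close()
  }

  def main(args: Array[String]): Unit = {
    val driverSide = new SafeProcessor
    val driverLogger = driverSide.log          // first logger, created before serialization
    val executorSide = roundTrip(driverSide)   // succeeds: the transient field is not written
    val executorLogger = executorSide.log      // lazily re-created after deserialization
    println(executorLogger ne driverLogger)    // prints "true"
    println(StubLogger.created)                // prints "2"
  }
}
```

Serialization succeeds because the logger field is skipped, and the deserialized copy obtains a fresh logger on first access instead of a null one.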