【Python】Firing the Third Shot of 2019 - Getting Started with Python Web Scraping (Part 3)


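I had some tea tonight, just now in fact, and while drinking it an idea floated up in my head: tea has both leaves and liquid, and the moment the tea reaches my mouth I can't just gulp it all down, because swallowing the leaves along with it would be unpleasant. This is probably a pointless remark.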

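This part mainly solves the problem left over from the first and second parts: how do we turn pages and scrape data from more than one page?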

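Wait, before going any further, a question just occurred to me that I have to write down. When coding, I usually use two monitors: one for testing and reading documentation, the other for writing code. So I wondered: should the coding monitor normally go on the left or on the right?

I looked up a few sources. According to physiology, the human brain is divided into a left and a right hemisphere. The left brain is responsible for language and abstract thinking; the right brain handles imagery-based thinking, with abilities in music, images, holistic perception and geometric/spatial discrimination, and it processes complex relationships far better than the left brain. In short, the left brain leans toward reason and logic, the right brain toward imagery and emotion. Psychology, for its part, says that people look to the left when they are thinking something through.

After asking a few people who know a little about this, and combining my own non-expert knowledge with some searching on Baidu, I reached this conclusion: the documentation monitor generally goes on the left, and the coding monitor on the right.

My personal theory: when reading documentation, that is, when studying how things work, people are thinking and pondering, and looking at a monitor on the left turns the eyes to the left as well, so the documentation monitor fits best on the left. When the eyes turn to the right-hand monitor, the code there usually involves logic and a certain amount of complexity, and the right brain happens to process complex material faster. So, by my admittedly unprofessional reasoning, the layout is as follows: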

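The final result achieved in the previous part looked like this:

The code was as follows:

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-
import requests
import csv
from requests.exceptions import RequestException
from bs4 import BeautifulSoup

def download(url, headers, num_retries=3):
    print("download", url)
    try:
        response = requests.get(url, headers=headers)
        # ... (rest of the previous part's code)
```

When we open JD.com, search for a product, and click "next page", we can watch how the URL changes.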

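When we open the second page, the URL shows page=3:

Likewise, the third page is page=5, and so on, giving the sequence 1, 3, 5, 7, 9, ...:

Generating this odd-number sequence in Python with range (the stop value 9 is exclusive, so this yields 1 through 7):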

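```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-
# range(start, stop, step): start at 1, step by 2, stop before 9
for page in range(1, 9, 2):
    print(f"This is page {page}")
```

```
>>>
This is page 1
This is page 3
This is page 5
This is page 7
```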

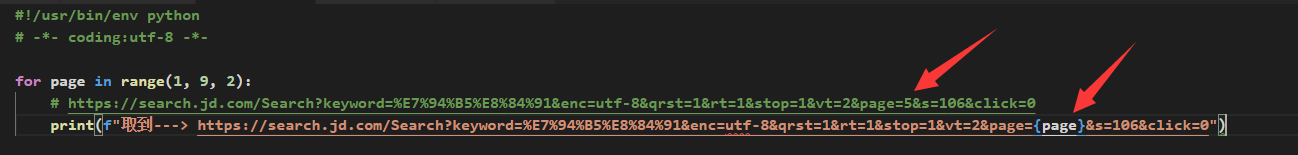
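The pattern observed above (visible page n shows up as page=2n-1 in the URL) can be captured in a tiny helper. This is a minimal sketch assuming the odd-number pattern holds for every result page; the name `jd_page_param` is hypothetical and not part of the original code.

```python
def jd_page_param(page_number):
    """Map a 1-based visible page number to the odd `page` URL parameter (1, 3, 5, ...)."""
    if page_number < 1:
        raise ValueError("page_number must be >= 1")
    return 2 * page_number - 1

# Visible pages 1-4 give the same values as range(1, 9, 2).
params = [jd_page_param(n) for n in range(1, 5)]
print(params)  # [1, 3, 5, 7]

# To cover the first N visible pages, the equivalent range is range(1, 2 * N, 2).
N = 4
print(list(range(1, 2 * N, 2)) == params)  # True
```

So to scrape the first N pages, `range(1, 2 * N, 2)` is the loop bound to use.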

演變如下:

#!/usr/bin/env python

# -*- coding:utf-8 -*-

for page in range(1, 9, 2):

# https://search.jd.com/Search?keyword=%E7%94%B5%E8%84%91&enc=utf-8&qrst=1&rt=1&stop=1&vt=2&page=5&s=106&click=0

print(f"取到---> https://search.jd.com/Search?keyword=%E7%94%B5%E8%84%91&enc=utf-8&qrst=1&rt=1&stop=1&vt=2&page={page}&s=106&click=0")

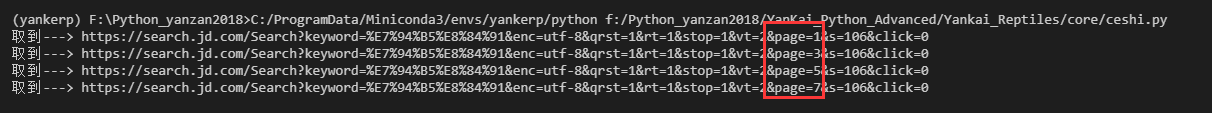

>>>

取到---> https://search.jd.com/Search?keyword=%E7%94%B5%E8%84%91&enc=utf-8&qrst=1&rt=1&stop=1&vt=2&page=1&s=106&click=0

取到---> https://search.jd.com/Search?keyword=%E7%94%B5%E8%84%91&enc=utf-8&qrst=1&rt=1&stop=1&vt=2&page=3&s=106&click=0

取到---> https://search.jd.com/Search?keyword=%E7%94%B5%E8%84%91&enc=utf-8&qrst=1&rt=1&stop=1&vt=2&page=5&s=106&click=0

取到---> https://search.jd.com/Search?keyword=%E7%94%B5%E8%84%91&enc=utf-8&qrst=1&rt=1&stop=1&vt=2&page=7&s=106&click=0

Figure: output
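Instead of pasting the whole URL into an f-string, the query string can also be built from a dict with the standard library's `urllib.parse.urlencode`, which handles the percent-encoding of the keyword for you. This is a sketch assuming the parameters copied from the browser URL above are all the search page needs; `build_search_url` is a hypothetical helper name.

```python
from urllib.parse import urlencode

BASE_URL = "https://search.jd.com/Search"

def build_search_url(keyword, page):
    # Parameters copied from the URL observed in the browser; only keyword and
    # page vary here (assumption: the others can stay fixed).
    params = {
        "keyword": keyword,
        "enc": "utf-8",
        "qrst": 1,
        "rt": 1,
        "stop": 1,
        "vt": 2,
        "page": page,
        "s": 106,
        "click": 0,
    }
    # urlencode keeps the dict's insertion order and percent-encodes as UTF-8
    return f"{BASE_URL}?{urlencode(params)}"

# "\u7535\u8111" is the keyword that appears as %E7%94%B5%E8%84%91 in the URL ("computer").
urls = [build_search_url("\u7535\u8111", page) for page in range(1, 9, 2)]
for url in urls:
    print(url)
```

Each printed URL matches the corresponding hard-coded one in the output above, and changing the keyword no longer requires hand-encoding it.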

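This way we can reach the product listings on different pages; all that remains is to fetch each page with requests.get and then extract the data with bs4, as follows: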

```python
def main():
    headers = {
        'User-agent': "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.139 Safari/537.36",
        "referer": "https://passport.jd.com"
    }
    # page takes the odd values 1, 3, 5, 7
    for page in range(1, 9, 2):
        URL = f"https://search.jd.com/Search?keyword=%E7%94%B5%E8%84%91&enc=utf-8&qrst=1&rt=1&stop=1&vt=2&page={page}&s=106&click=0"
        find_Computer(URL, headers=headers)
```

However, the data from all pages needs to end up in a single CSV file, so the CSV-writing function has to be rewritten:

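```python
def write_csv(csv_name, data_list):
    # "w" overwrites the file, so call this once with the rows from every page.
    # utf-8-sig adds a BOM so that Excel detects the encoding of the Chinese product names.
    with open(csv_name, 'w', newline='', encoding='utf-8-sig') as f:
        writer = csv.writer(f)
        fields = ('ID', 'Name', 'Price', 'Comment count', 'Positive rate')
        writer.writerow(fields)  # header row
        for data in data_list:
            writer.writerow(data)
```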

Here is find_Computer, together with the complete code:

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-
import csv

import requests
from bs4 import BeautifulSoup
from requests.exceptions import RequestException


def download(url, headers, num_retries=3):
    print("download", url)
    try:
        response = requests.get(url, headers=headers, timeout=10)
        print(response.status_code)
        # Turn 4xx/5xx responses into an HTTPError so the retry logic below can see them
        response.raise_for_status()
        if response.status_code == 200:
            return response.content
        return None
    except RequestException as e:
        html = None
        # e.response is None for connection errors and timeouts
        code = getattr(e.response, 'status_code', None)
        print('error code', code)
        # Retry only on server errors (5xx)
        if num_retries > 0 and code is not None and 500 <= code < 600:
            html = download(url, headers, num_retries - 1)
        return html


def write_csv(csv_name, data_list):
    # utf-8-sig adds a BOM so that Excel detects the encoding of the Chinese product names
    with open(csv_name, 'w', newline='', encoding='utf-8-sig') as f:
        writer = csv.writer(f)
        fields = ('ID', 'Name', 'Price', 'Comment count', 'Positive rate')
        writer.writerow(fields)  # header row
        for data in data_list:
            writer.writerow(data)


def find_Computer(url, headers):
    r = download(url, headers=headers)
    if not r:
        return []  # download failed: this page contributes no rows
    page = BeautifulSoup(r, "lxml")
    all_items = page.find_all('li', attrs={'class': 'gl-item'})
    data_list = []
    for item in all_items:
        # ID of each computer
        Computer_id = item["data-sku"]
        print(f"Computer ID: {Computer_id}")
        # Name of each computer
        Computer_name = item.find('div', attrs={'class': 'p-name p-name-type-2'}).find('em').text
        print(f"Computer name: {Computer_name}")
        # Price of each computer
        Computer_price = item.find('div', attrs={'class': 'p-price'}).find('i').text
        print(f"Computer price: {Computer_price} yuan")
        # JSON data for each computer (includes comment statistics, etc.)
        Comment = f"https://club.jd.com/comment/productCommentSummaries.action?referenceIds={Computer_id}"
        comment_count, good_rate = get_json(Comment)
        print('Number of comments:', comment_count)
        print('Positive rate:', good_rate)
        row = [Computer_id, Computer_name, str(Computer_price) + "元",
               comment_count, str(good_rate) + "%"]
        data_list.append(row)
    return data_list


def get_json(url):
    data = requests.get(url, timeout=10).json()
    result = data['CommentsCount']
    if not result:
        return None, None
    # Only one product ID is requested, so the first entry is the one we want
    first = result[0]
    return first["CommentCountStr"], first["GoodRateShow"]


def main():
    headers = {
        'User-agent': "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.139 Safari/537.36",
        "referer": "https://passport.jd.com"
    }
    all_list = []
    # JD uses odd page parameters: 1, 3, 5, 7 are the first four result pages
    for page in range(1, 9, 2):
        URL = f"https://search.jd.com/Search?keyword=%E7%94%B5%E8%84%91&enc=utf-8&qrst=1&rt=1&stop=1&vt=2&page={page}&s=106&click=0"
        data_list = find_Computer(URL, headers=headers)
        all_list += data_list
    # Write once, after the rows from every page have been collected
    write_csv("csdn.csv", all_list)


if __name__ == '__main__':
    main()
```
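To check the parsing logic of find_Computer and get_json without sending requests to JD, you can feed the same selectors a small hand-written sample. This is a sketch under loud assumptions: the HTML and JSON below are hypothetical, trimmed imitations of the structure the selectors expect (li.gl-item with data-sku, div.p-name em, div.p-price i, and a "CommentsCount" list), not copies of JD's real responses, and `parse_items` / `parse_comment_summary` are hypothetical helper names. It uses the built-in "html.parser" so lxml is not required.

```python
import json

from bs4 import BeautifulSoup

# Hypothetical, trimmed HTML imitating the structure the selectors expect (not JD's real markup).
SAMPLE_HTML = """
<ul>
  <li class="gl-item" data-sku="100001">
    <div class="p-price"><strong><i>4999.00</i></strong></div>
    <div class="p-name p-name-type-2"><a><em>Laptop A</em></a></div>
  </li>
  <li class="gl-item" data-sku="100002">
    <div class="p-price"><strong><i>5999.00</i></strong></div>
    <div class="p-name p-name-type-2"><a><em>Laptop B</em></a></div>
  </li>
</ul>
"""

# Hypothetical response body shaped the way get_json reads it.
SAMPLE_JSON = '{"CommentsCount": [{"CommentCountStr": "2000+", "GoodRateShow": 98}]}'


def parse_items(html):
    soup = BeautifulSoup(html, "html.parser")
    items = []
    for item in soup.find_all("li", attrs={"class": "gl-item"}):
        # A class string with a space matches the exact class attribute value
        name = item.find("div", attrs={"class": "p-name p-name-type-2"}).find("em").text
        price = item.find("div", attrs={"class": "p-price"}).find("i").text
        items.append((item["data-sku"], name, price))
    return items


def parse_comment_summary(text):
    result = json.loads(text)["CommentsCount"]
    if not result:
        return None, None
    return result[0]["CommentCountStr"], result[0]["GoodRateShow"]


items = parse_items(SAMPLE_HTML)
summary = parse_comment_summary(SAMPLE_JSON)
print(items)    # [('100001', 'Laptop A', '4999.00'), ('100002', 'Laptop B', '5999.00')]
print(summary)  # ('2000+', 98)
```

If JD changes its markup, a quick run against a saved copy of a real page with these helpers shows which selector stopped matching before the full scraper is run again.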

Here is the run in progress:

The result (partial screenshot):

I hope this helps. Bye~