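Control flow in preview mode under Android Camera HAL3

This article collects notes from my own reading of the source code. Please credit the source when reposting, thanks.

Feel free to get in touch. QQ: 1037701636 email: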

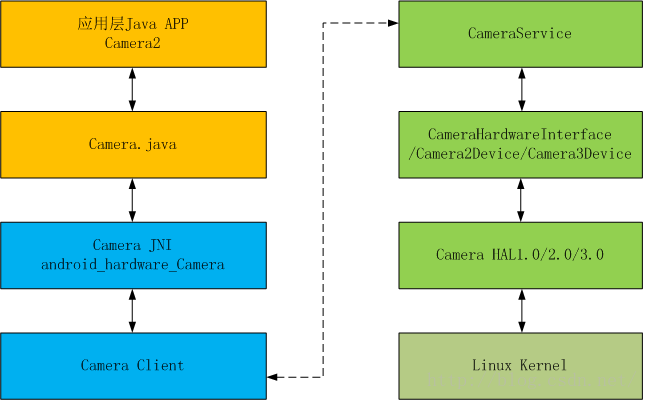

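Software: Android 5.1 system source code

Foreword to studying Camera3:

When I first studied Camera 1.0, the work centered on CameraClient, CameraHardwareInterface and related classes. Both the data flow and the control flow looked simple and clear, and the sequential, step-by-step operations made the whole framework easy to learn. Because I had no Camera 2.0 background, reading the 3.0 source directly this time was a strain, and the Qualcomm HAL 3.0 implementation underneath is also quite complex, which made the study harder still. I would put my current familiarity with the Camera 3.0 framework at only about 70%. I hope that writing this summary helps me sort out how it works and how the framework fits together, and deepens my understanding.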

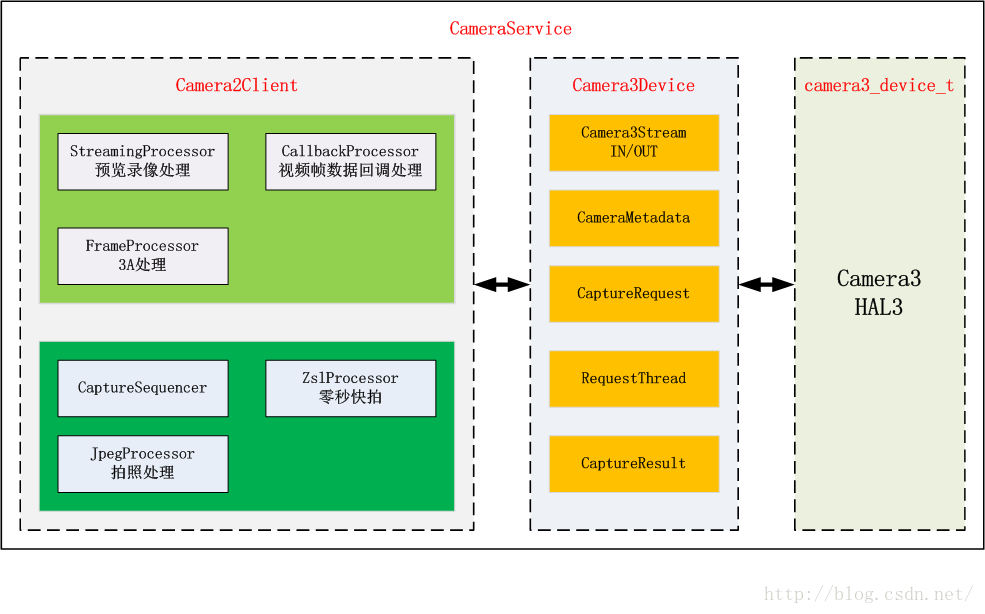

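1. Overall architecture of Camera3

For CameraService to set up a usable handle on the underlying camera device, it goes through roughly three parts: Camera2Client, Camera3Device, and the HAL-layer camera3_device_t.

The figure above shows that the Camera3 architecture is clearly more complex than Camera1, but it is also more modular. Compared with the single sequential flow described in the article "Android 4.2.2 Camera system architecture (HAL and callback handling)", Camera3 moves more of the work into the Framework and keeps more control there. As a result, less data is exchanged with the HAL, and the HAL is relieved of some of the work it had to do in older versions.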

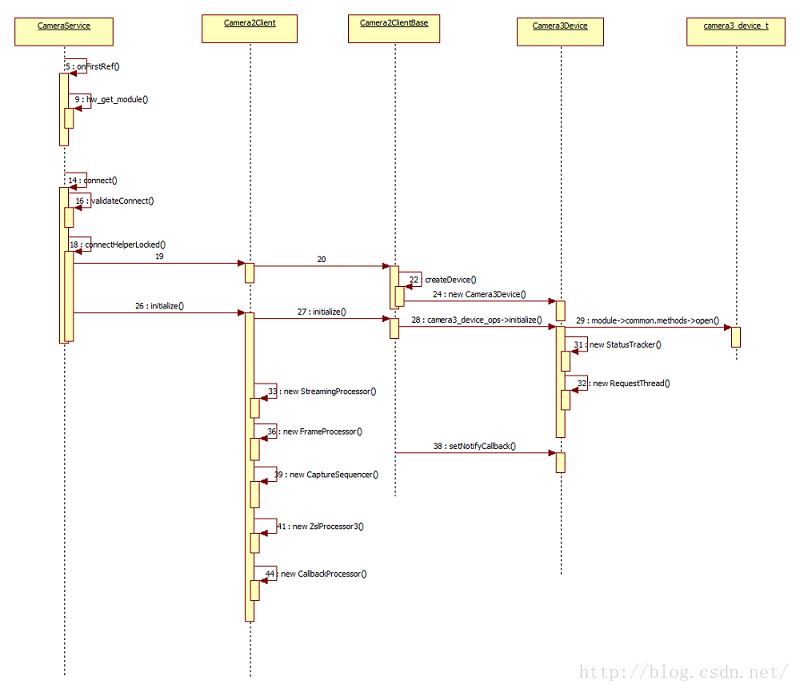

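2. Creation and initialization of Camera2Client

After Camera2Client is created, initialize() is called to create each processing module. The following are created in order:

....

mStreamingProcessor = new StreamingProcessor(this);// preview and recording

threadName = String8::format("C2-%d-StreamProc", mCameraId);

mStreamingProcessor->run(threadName.string());// preview and recording

mFrameProcessor = new FrameProcessor(mDevice, this);// 3A

threadName = String8::format("C2-%d-FrameProc", mCameraId);

mFrameProcessor->run(threadName.string());// 3A

mCaptureSequencer = new CaptureSequencer(this);

threadName = String8::format("C2-%d-CaptureSeq", mCameraId);

mCaptureSequencer->run(threadName.string());// recording, still capture

mJpegProcessor = new JpegProcessor(this, mCaptureSequencer);

threadName = String8::format("C2-%d-JpegProc", mCameraId);

mJpegProcessor->run(threadName.string());

....

mCallbackProcessor = new CallbackProcessor(this);// callback handling

threadName = String8::format("C2-%d-CallbkProc", mCameraId);

mCallbackProcessor->run(threadName.string());

StreamingProcessor, which starts its own thread. This module handles the preview and record video streams and is used to get raw video data from the HAL layer.

FrameProcessor, which starts a thread. This module handles the 3A and other information called back with every frame: besides the raw image data, each frame carries additional data such as 3A values.

CaptureSequencer, which starts a thread. It works together with the other modules and mainly notifies the app layer of captured pictures.

JpegProcessor, which starts a thread. Like StreamingProcessor, it sets up a still-capture stream, generally used to get JPEG-encoded image data from the HAL layer.

In addition, the ZslProcessor module implements zero shutter lag (ZSL) capture. In essence it takes the most recent few frames buffered from the raw preview stream, encodes them directly and returns them to the app, without going through take picture to request JPEG data. ZSL benefits from the improved CSI-2 MIPI performance of current processors and from sensors that support full-resolution, high-frame-rate real-time output. A phone usually shows some delay after the shutter is pressed because the underlying camera and ISP have to switch working modes, reconfigure parameters, refocus and so on, and only after that time is a frame grabbed and encoded as a JPEG image.

Together, these 5 modules cover the basic functions needed for camera application development.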
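The zero-shutter-lag idea described above, keeping the most recent preview frames and picking one when the shutter is pressed, can be sketched as a small ring buffer. This is only an illustration under simple assumptions: the Frame and ZslRing types are hypothetical and are not the ZslProcessor implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>

// Hypothetical frame record; a real ZSL buffer also carries image data
// and per-frame metadata.
struct Frame {
    int64_t timestampNs;
};

// Keeps only the most recent `capacity` preview frames.
class ZslRing {
public:
    explicit ZslRing(size_t capacity) : mCapacity(capacity) {
        assert(capacity > 0);
    }

    // Called for every preview frame; drops the oldest frame when full.
    void push(const Frame &f) {
        if (mFrames.size() == mCapacity) mFrames.pop_front();
        mFrames.push_back(f);
    }

    // On shutter press: pick the newest buffered frame that is not later
    // than the shutter time. Returns false if no such frame is buffered.
    bool pick(int64_t shutterNs, Frame *out) const {
        for (auto it = mFrames.rbegin(); it != mFrames.rend(); ++it) {
            if (it->timestampNs <= shutterNs) {
                *out = *it;
                return true;
            }
        }
        return false;
    }

    size_t size() const { return mFrames.size(); }

private:
    size_t mCapacity;
    std::deque<Frame> mFrames;
};
```

Because the frame is already in memory, no mode switch or refocus is needed before encoding, which is where the latency saving comes from.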

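3. Control flow during preview

To study the camera's concrete functions, one usually starts from real-time video preview. Someone familiar with the camera architecture can trace a single app-side API call all the way down to a control command in the underlying HAL, roughly as shown in the figure below:

For the control flow from preview down to CameraService, see the post "Data flow and control flow of preview in Android 4.2.2 and the final preview display". This article starts directly from Camera2Client::startPreview() as the entry point and analyzes the preview-related flow within the Framework layer.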

status_t Camera2Client::startPreview() {

ATRACE_CALL();

ALOGV("%s: E", __FUNCTION__);

Mutex::Autolock icl(mBinderSerializationLock);

status_t res;

if ( (res = checkPid(__FUNCTION__) ) != OK) return res;

SharedParameters::Lock l(mParameters);

return startPreviewL(l.mParameters, false);

}

status_t Camera2Client::startPreviewL(Parameters &params, bool restart) {//restart == false

ATRACE_CALL();

status_t res;

......

int lastPreviewStreamId = mStreamingProcessor->getPreviewStreamId();// get the previous preview stream id

res = mStreamingProcessor->updatePreviewStream(params);// create the Camera3Device stream, a Camera3OutputStream

.....

int lastJpegStreamId = mJpegProcessor->getStreamId();

res = updateProcessorStream(mJpegProcessor, params);// a JPEG output stream is created as soon as preview starts

.....

res = mCallbackProcessor->updateStream(params);// callback handling creates a Camera3OutputStream

if (res != OK) {

ALOGE("%s: Camera %d: Unable to update callback stream: %s (%d)",

__FUNCTION__, mCameraId, strerror(-res), res);

return res;

}

outputStreams.push(getCallbackStreamId());

......

outputStreams.push(getPreviewStreamId());// preview stream

......

if (!params.recordingHint) {

if (!restart) {

res = mStreamingProcessor->updatePreviewRequest(params);// request handling, updates mPreviewRequest

if (res != OK) {

ALOGE("%s: Camera %d: Can't set up preview request: "

"%s (%d)", __FUNCTION__, mCameraId,

strerror(-res), res);

return res;

}

}

res = mStreamingProcessor->startStream(StreamingProcessor::PREVIEW,

outputStreams);// start the stream, passing outputStreams, i.e. the stream ids

} else {

if (!restart) {

res = mStreamingProcessor->updateRecordingRequest(params);

if (res != OK) {

ALOGE("%s: Camera %d: Can't set up preview request with "

"record hint: %s (%d)", __FUNCTION__, mCameraId,

strerror(-res), res);

return res;

}

}

res = mStreamingProcessor->startStream(StreamingProcessor::RECORD,

outputStreams);

}

......

}

(1). mStreamingProcessor->updatePreviewStream()

The preview/recording module updates a preview stream as follows:

status_t StreamingProcessor::updatePreviewStream(const Parameters &params) {

ATRACE_CALL();

Mutex::Autolock m(mMutex);

status_t res;

sp<CameraDeviceBase> device = mDevice.promote();//Camera3Device

if (device == 0) {

ALOGE("%s: Camera %d: Device does not exist", __FUNCTION__, mId);

return INVALID_OPERATION;

}

if (mPreviewStreamId != NO_STREAM) {

// Check if stream parameters have to change

uint32_t currentWidth, currentHeight;

res = device->getStreamInfo(mPreviewStreamId,

&currentWidth, &currentHeight, 0);

if (res != OK) {

ALOGE("%s: Camera %d: Error querying preview stream info: "

"%s (%d)", __FUNCTION__, mId, strerror(-res), res);

return res;

}

if (currentWidth != (uint32_t)params.previewWidth ||

currentHeight != (uint32_t)params.previewHeight) {

ALOGV("%s: Camera %d: Preview size switch: %d x %d -> %d x %d",

__FUNCTION__, mId, currentWidth, currentHeight,

params.previewWidth, params.previewHeight);

res = device->waitUntilDrained();

if (res != OK) {

ALOGE("%s: Camera %d: Error waiting for preview to drain: "

"%s (%d)", __FUNCTION__, mId, strerror(-res), res);

return res;

}

res = device->deleteStream(mPreviewStreamId);

if (res != OK) {

ALOGE("%s: Camera %d: Unable to delete old output stream "

"for preview: %s (%d)", __FUNCTION__, mId,

strerror(-res), res);

return res;

}

mPreviewStreamId = NO_STREAM;

}

}

if (mPreviewStreamId == NO_STREAM) {// create the stream for the first time

res = device->createStream(mPreviewWindow,

params.previewWidth, params.previewHeight,

CAMERA2_HAL_PIXEL_FORMAT_OPAQUE, &mPreviewStreamId);// create a Camera3OutputStream

if (res != OK) {

ALOGE("%s: Camera %d: Unable to create preview stream: %s (%d)",

__FUNCTION__, mId, strerror(-res), res);

return res;

}

}

res = device->setStreamTransform(mPreviewStreamId,

params.previewTransform);

if (res != OK) {

ALOGE("%s: Camera %d: Unable to set preview stream transform: "

"%s (%d)", __FUNCTION__, mId, strerror(-res), res);

return res;

}

return OK;

}

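Note: streams are everywhere in Camera2Client. The data exchange of all 5 modules is built on streams.

Next we focus on Camera3Device's createStream interface, the basis on which the 5 modules create their streams: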

status_t Camera3Device::createStream(sp<ANativeWindow> consumer,

uint32_t width, uint32_t height, int format, int *id) {

ATRACE_CALL();

Mutex::Autolock il(mInterfaceLock);

Mutex::Autolock l(mLock);

ALOGV("Camera %d: Creating new stream %d: %d x %d, format %d",

mId, mNextStreamId, width, height, format);

status_t res;

bool wasActive = false;

switch (mStatus) {

case STATUS_ERROR:

CLOGE("Device has encountered a serious error");

return INVALID_OPERATION;

case STATUS_UNINITIALIZED:

CLOGE("Device not initialized");

return INVALID_OPERATION;

case STATUS_UNCONFIGURED:

case STATUS_CONFIGURED:

// OK

break;

case STATUS_ACTIVE:

ALOGV("%s: Stopping activity to reconfigure streams", __FUNCTION__);

res = internalPauseAndWaitLocked();

if (res != OK) {

SET_ERR_L("Can't pause captures to reconfigure streams!");

return res;

}

wasActive = true;

break;

default:

SET_ERR_L("Unexpected status: %d", mStatus);

return INVALID_OPERATION;

}

assert(mStatus != STATUS_ACTIVE);

sp<Camera3OutputStream> newStream;

if (format == HAL_PIXEL_FORMAT_BLOB) {// still image

ssize_t jpegBufferSize = getJpegBufferSize(width, height);

if (jpegBufferSize <= 0) {

SET_ERR_L("Invalid jpeg buffer size %zd", jpegBufferSize);

return BAD_VALUE;

}

newStream = new Camera3OutputStream(mNextStreamId, consumer,

width, height, jpegBufferSize, format);// size of the JPEG buffer

} else {

newStream = new Camera3OutputStream(mNextStreamId, consumer,

width, height, format);//Camera3OutputStream

}

newStream->setStatusTracker(mStatusTracker);

res = mOutputStreams.add(mNextStreamId, newStream);// bind a stream id to the Camera3OutputStream

if (res < 0) {

SET_ERR_L("Can't add new stream to set: %s (%d)", strerror(-res), res);

return res;

}

*id = mNextStreamId++;// at least a preview stream, usually also a callback stream

mNeedConfig = true;

// Continue captures if active at start

if (wasActive) {

ALOGV("%s: Restarting activity to reconfigure streams", __FUNCTION__);

res = configureStreamsLocked();

if (res != OK) {

CLOGE("Can't reconfigure device for new stream %d: %s (%d)",

mNextStreamId, strerror(-res), res);

return res;

}

internalResumeLocked();

}

ALOGV("Camera %d: Created new stream", mId);

return OK;

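}

Camera3Device has two kinds of stream, output and input. The former mainly serves as HAL output: it is an OutputStream that asks the HAL to fill it with data. The latter is filled by the Framework. Preview, record and capture all obtain their data from the HAL layer, so they all exist as OutputStreams. These are our focus and are discussed further when the preview data flow is described.

Each time an OutputStream is created, its information is stored in mOutputStreams, a KeyedVector<int, sp<camera3::Camera3OutputStreamInterface> > table. The key is the ID the stream was given when it was created in Camera3Device, and the value is the sp of the Camera3OutputStream. mNextStreamId records the ID for the next stream.

The steps above complete the creation of a preview stream in the StreamingProcessor module. The ID assigned when the Camera3OutputStream was created is returned and recorded as mPreviewStreamId. In addition, every stream has a corresponding ANativeWindow, called the consumer here.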
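The bookkeeping described above (an ID-to-stream table plus a monotonically increasing next ID) can be sketched in isolation. This is a minimal model, not Camera3Device: OutputStream and StreamRegistry are hypothetical names, and std::map stands in for KeyedVector.

```cpp
#include <cassert>
#include <map>
#include <memory>

// Hypothetical stand-in for Camera3OutputStream.
struct OutputStream {
    int id;
    unsigned width;
    unsigned height;
    int format;
};

// Models only the bookkeeping: ID allocation and the ID -> stream table.
class StreamRegistry {
public:
    // Returns the new stream's ID, mirroring "*id = mNextStreamId++".
    int createStream(unsigned width, unsigned height, int format) {
        assert(width > 0 && height > 0);
        auto stream = std::make_shared<OutputStream>(
                OutputStream{mNextStreamId, width, height, format});
        mOutputStreams[mNextStreamId] = stream;
        mNeedConfig = true;  // streams must be (re)configured before use
        return mNextStreamId++;
    }

    // Removes a stream; IDs are never reused afterwards.
    bool deleteStream(int id) {
        if (mOutputStreams.erase(id) == 0) return false;
        mNeedConfig = true;
        return true;
    }

    // Returns nullptr when the ID is unknown.
    std::shared_ptr<OutputStream> find(int id) const {
        auto it = mOutputStreams.find(id);
        if (it == mOutputStreams.end()) return nullptr;
        return it->second;
    }

    bool needConfig() const { return mNeedConfig; }

private:
    int mNextStreamId = 0;
    bool mNeedConfig = false;
    std::map<int, std::shared_ptr<OutputStream>> mOutputStreams;
};
```

A caller such as the preview path would keep the returned ID (the counterpart of mPreviewStreamId) and later reference the stream only through it.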

(2)mCallbackProcessor->updateStream(params)

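Compared with how StreamingProcessor creates the preview stream, it is easy to see that the Callback module needs to create a callback stream. It likewise needs a Camera3OutputStream to receive each frame returned by the HAL. Whether callbacks are needed is controlled through callbackenable. In the preview stage there is usually no need to call every frame back to the app, but when other work such as video processing is involved, callbacks have to be enabled.

status_t CallbackProcessor::updateStream(const Parameters &params) {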

ATRACE_CALL();

status_t res;

Mutex::Autolock l(mInputMutex);

sp<CameraDeviceBase> device = mDevice.promote();

if (device == 0) {

ALOGE("%s: Camera %d: Device does not exist", __FUNCTION__, mId);

return INVALID_OPERATION;

}

// If possible, use the flexible YUV format

int32_t callbackFormat = params.previewFormat;

if (mCallbackToApp) {

// TODO: etalvala: This should use the flexible YUV format as well, but

// need to reconcile HAL2/HAL3 requirements.

callbackFormat = HAL_PIXEL_FORMAT_YV12;

} else if(params.fastInfo.useFlexibleYuv &&

(params.previewFormat == HAL_PIXEL_FORMAT_YCrCb_420_SP ||

params.previewFormat == HAL_PIXEL_FORMAT_YV12) ) {

callbackFormat = HAL_PIXEL_FORMAT_YCbCr_420_888;

}

if (!mCallbackToApp && mCallbackConsumer == 0) {

// Create CPU buffer queue endpoint, since app hasn't given us one

// Make it async to avoid disconnect deadlocks

sp<IGraphicBufferProducer> producer;

sp<IGraphicBufferConsumer> consumer;

BufferQueue::createBufferQueue(&producer, &consumer);// BufferQueueProducer and BufferQueueConsumer

mCallbackConsumer = new CpuConsumer(consumer, kCallbackHeapCount);

mCallbackConsumer->setFrameAvailableListener(this);// CallbackProcessor inherits from CpuConsumer::FrameAvailableListener

mCallbackConsumer->setName(String8("Camera2Client::CallbackConsumer"));

mCallbackWindow = new Surface(producer);// used for queue operations; buffers are handled locally here

}

if (mCallbackStreamId != NO_STREAM) {

// Check if stream parameters have to change

uint32_t currentWidth, currentHeight, currentFormat;

res = device->getStreamInfo(mCallbackStreamId,

&currentWidth, &currentHeight, &currentFormat);

if (res != OK) {

ALOGE("%s: Camera %d: Error querying callback output stream info: "

"%s (%d)", __FUNCTION__, mId,

strerror(-res), res);

return res;

}

if (currentWidth != (uint32_t)params.previewWidth ||

currentHeight != (uint32_t)params.previewHeight ||

currentFormat != (uint32_t)callbackFormat) {

// Since size should only change while preview is not running,

// assuming that all existing use of old callback stream is

// completed.

ALOGV("%s: Camera %d: Deleting stream %d since the buffer "

"parameters changed", __FUNCTION__, mId, mCallbackStreamId);

res = device->deleteStream(mCallbackStreamId);

if (res != OK) {

ALOGE("%s: Camera %d: Unable to delete old output stream "

"for callbacks: %s (%d)", __FUNCTION__,

mId, strerror(-res), res);

return res;

}

mCallbackStreamId = NO_STREAM;

}

}

if (mCallbackStreamId == NO_STREAM) {

ALOGV("Creating callback stream: %d x %d, format 0x%x, API format 0x%x",

params.previewWidth, params.previewHeight,

callbackFormat, params.previewFormat);

res = device->createStream(mCallbackWindow,

params.previewWidth, params.previewHeight,

callbackFormat, &mCallbackStreamId);//Creating callback stream

if (res != OK) {

ALOGE("%s: Camera %d: Can't create output stream for callbacks: "

"%s (%d)", __FUNCTION__, mId,

strerror(-res), res);

return res;

}

}

return OK;

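}

This comparison also highlights that every Camera3OutputStream is owned by a consumer, which here is always a Surface (ANativeWindow). Relative to the HAL, this consumer is indeed a consumer. Relative to the consumer that actually processes the buffers, such as CpuConsumer, the Surface instead plays the role of a producer.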

(3)updateProcessorStream(mJpegProcessor, params)

template <typename ProcessorT>

status_t Camera2Client::updateProcessorStream(sp<ProcessorT> processor,

camera2::Parameters params) {

// No default template arguments until C++11, so we need this overload

return updateProcessorStream<ProcessorT, &ProcessorT::updateStream>(

processor, params);

}

template <typename ProcessorT,

status_t (ProcessorT::*updateStreamF)(const Parameters &)>

status_t Camera2Client::updateProcessorStream(sp<ProcessorT> processor,

Parameters params) {

status_t res;

// Get raw pointer since sp<T> doesn't have operator->*

ProcessorT *processorPtr = processor.get();

res = (processorPtr->*updateStreamF)(params);

.......

}

One more point needs explaining:

A stream for JPEG processing is already created in preview mode, so that the capture can proceed faster once takePicture starts. This trades memory for speed.
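The updateProcessorStream pair shown above relies on a pointer-to-member-function passed as a template argument, with an overload that defaults to updateStream. A minimal sketch of the same pattern outside Android follows. The types JpegLike and ZslLike, the int return code and std::shared_ptr (in place of sp) are assumptions made for illustration.

```cpp
#include <cassert>
#include <memory>

struct Parameters {
    int previewWidth;
};

// Hypothetical processor using the conventional updateStream name.
struct JpegLike {
    int lastWidth = 0;
    int updateStream(const Parameters &p) {
        lastWidth = p.previewWidth;
        return 0;
    }
};

// Hypothetical processor exposing a differently named update function.
struct ZslLike {
    int lastWidth = 0;
    int updateZslStream(const Parameters &p) {
        lastWidth = p.previewWidth * 2;
        return 0;
    }
};

// The member function to call is chosen at compile time.
template <typename ProcessorT,
          int (ProcessorT::*updateStreamF)(const Parameters &)>
int updateProcessorStream(const std::shared_ptr<ProcessorT> &processor,
                          const Parameters &params) {
    assert(processor != nullptr);
    // Use a raw pointer for the ->* call, as the original code does.
    ProcessorT *raw = processor.get();
    return (raw->*updateStreamF)(params);
}

// Overload that defaults to ProcessorT::updateStream.
template <typename ProcessorT>
int updateProcessorStream(const std::shared_ptr<ProcessorT> &processor,
                          const Parameters &params) {
    return updateProcessorStream<ProcessorT, &ProcessorT::updateStream>(
            processor, params);
}
```

Calling updateProcessorStream(jpeg, params) picks the one-parameter overload, while a processor with a non-standard method name has to spell out both template arguments.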

(4) Collect all streams in startPreviewL into Vector<int32_t> outputStreams

outputStreams.push(getPreviewStreamId());// preview stream

outputStreams.push(getCallbackStreamId());// callback stream

At present, a single preview builds at least two streams.

(5)mStreamingProcessor->updatePreviewRequest()

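After the streams are created, the StreamingProcessor module hands all the stream information to Camera3Device to be packaged into a Request.

Note:

A characteristic of Camera HAL2/3 is that all stream requests are converted into a few typical Requests, which the HAL has to parse in order to carry out the required work. This is also why data handling in Camera3 is more complex.

status_t StreamingProcessor::updatePreviewRequest(const Parameters &params) {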

ATRACE_CALL();

status_t res;

sp<CameraDeviceBase> device = mDevice.promote();

if (device == 0) {

ALOGE("%s: Camera %d: Device does not exist", __FUNCTION__, mId);

return INVALID_OPERATION;

}

Mutex::Autolock m(mMutex);

if (mPreviewRequest.entryCount() == 0) {

sp<Camera2Client> client = mClient.promote();

if (client == 0) {

ALOGE("%s: Camera %d: Client does not exist", __FUNCTION__, mId);

return INVALID_OPERATION;

}

// Use CAMERA3_TEMPLATE_ZERO_SHUTTER_LAG for ZSL streaming case.

if (client->getCameraDeviceVersion() >= CAMERA_DEVICE_API_VERSION_3_0) {

if (params.zslMode && !params.recordingHint) {

res = device->createDefaultRequest(CAMERA3_TEMPLATE_ZERO_SHUTTER_LAG,

&mPreviewRequest);

} else {

res = device->createDefaultRequest(CAMERA3_TEMPLATE_PREVIEW,

&mPreviewRequest);

}

} else {

res = device->createDefaultRequest(CAMERA2_TEMPLATE_PREVIEW,

&mPreviewRequest);// create a preview request; the HAL below builds the default

}

if (res != OK) {

ALOGE("%s: Camera %d: Unable to create default preview request: "

"%s (%d)", __FUNCTION__, mId, strerror(-res), res);

return res;

}

}

res = params.updateRequest(&mPreviewRequest);// update the CameraMetadata request from the parameters

if (res != OK) {

ALOGE("%s: Camera %d: Unable to update common entries of preview "

"request: %s (%d)", __FUNCTION__, mId,

strerror(-res), res);

return res;

}

res = mPreviewRequest.update(ANDROID_REQUEST_ID,

&mPreviewRequestId, 1);// the ANDROID_REQUEST_ID of mPreviewRequest

if (res != OK) {

ALOGE("%s: Camera %d: Unable to update request id for preview: %s (%d)",

__FUNCTION__, mId, strerror(-res), res);

return res;

}

return OK;

}

a mPreviewRequest is a CameraMetadata object that encapsulates the current preview request.

b device->createDefaultRequest(CAMERA3_TEMPLATE_PREVIEW, &mPreviewRequest)

const camera_metadata_t *rawRequest;

ATRACE_BEGIN("camera3->construct_default_request_settings");

rawRequest = mHal3Device->ops->construct_default_request_settings(

mHal3Device, templateId);

ATRACE_END();

if (rawRequest == NULL) {

SET_ERR_L("HAL is unable to construct default settings for template %d",

templateId);

return DEAD_OBJECT;

}

*request = rawRequest;

mRequestTemplateCache[templateId] = rawRequest;

The camera_metadata_t returned by the HAL has the following layout:

struct camera_metadata {

metadata_size_t size;

uint32_t version;

uint32_t flags;

metadata_size_t entry_count;

metadata_size_t entry_capacity;

metadata_uptrdiff_t entries_start; // Offset from camera_metadata

metadata_size_t data_count;

metadata_size_t data_capacity;

metadata_uptrdiff_t data_start; // Offset from camera_metadata

uint8_t reserved[];

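};

c mPreviewRequest.update(ANDROID_REQUEST_ID,&mPreviewRequestId, 1)

This stores the ID of the current preview request in the camera metadata.

(6) mStreamingProcessor->startStream starts the whole preview stream

This function is fairly involved and is arguably the core control point that makes preview work.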

status_t StreamingProcessor::startStream(StreamType type,

const Vector<int32_t> &outputStreams) {

.....

CameraMetadata &request = (type == PREVIEW) ?

mPreviewRequest : mRecordingRequest;// take the preview CameraMetadata request

....

res = request.update(

ANDROID_REQUEST_OUTPUT_STREAMS,

outputStreams);// add outputStreams to the CameraMetadata

res = device->setStreamingRequest(request);// send the request to the HAL

.....

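}

The function first determines, from the current working mode, which Request StreamingProcessor needs to handle. This module is responsible for the preview and record Requests.

Taking preview as the example, the PreviewRequest is the one built earlier by createDefaultRequest. Here the output streams this Request operates on are first packed into the entry whose tag is ANDROID_REQUEST_OUTPUT_STREAMS.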
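To make the "tag to entry" idea concrete, here is a toy metadata container in which each tag maps to an array of int32 values. It is only a sketch: the Metadata class and the tag values are hypothetical, whereas the real CameraMetadata wraps the packed camera_metadata buffer and supports several value types.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <vector>

// Hypothetical tag values; the real ones come from the camera metadata headers.
enum Tag : uint32_t {
    TAG_REQUEST_ID = 1,
    TAG_REQUEST_OUTPUT_STREAMS = 2,
};

// Toy metadata container: each tag maps to an array of int32 values.
class Metadata {
public:
    // Replaces any previous entry stored under this tag.
    void update(Tag tag, const std::vector<int32_t> &values) {
        mEntries[tag] = values;
    }

    // Returns an empty vector when the tag is absent.
    std::vector<int32_t> find(Tag tag) const {
        auto it = mEntries.find(tag);
        if (it == mEntries.end()) return {};
        return it->second;
    }

    void erase(Tag tag) { mEntries.erase(tag); }

    size_t entryCount() const { return mEntries.size(); }

private:
    std::map<Tag, std::vector<int32_t>> mEntries;
};

// Mirrors the step in startStream that records which streams a request targets.
inline void attachOutputStreams(Metadata &request,
                                const std::vector<int32_t> &streamIds) {
    assert(!streamIds.empty());
    request.update(TAG_REQUEST_OUTPUT_STREAMS, streamIds);
}
```

The request itself carries only stream IDs. Resolving those IDs to stream objects happens later, on the device side.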

a:setStreamingRequest

This is where Camera3Device is actually asked to process the PreviewRequest that carries multiple streams.

status_t Camera3Device::setStreamingRequest(const CameraMetadata &request,

int64_t* /*lastFrameNumber*/) {

ATRACE_CALL();

List<const CameraMetadata> requests;

requests.push_back(request);

return setStreamingRequestList(requests, /*lastFrameNumber*/NULL);

}

status_t Camera3Device::setStreamingRequestList(const List<const CameraMetadata> &requests,

int64_t *lastFrameNumber) {

ATRACE_CALL();

return submitRequestsHelper(requests, /*repeating*/true, lastFrameNumber);

}

status_t Camera3Device::submitRequestsHelper(

const List<const CameraMetadata> &requests, bool repeating,

/*out*/

int64_t *lastFrameNumber) {//repeating = 1;lastFrameNumber = NULL

ATRACE_CALL();

Mutex::Autolock il(mInterfaceLock);

Mutex::Autolock l(mLock);

status_t res = checkStatusOkToCaptureLocked();

if (res != OK) {

// error logged by previous call

return res;

}

RequestList requestList;

res = convertMetadataListToRequestListLocked(requests, /*out*/&requestList);// yields a RequestList of CaptureRequest

if (res != OK) {

// error logged by previous call

return res;

}

if (repeating) {

res = mRequestThread->setRepeatingRequests(requestList, lastFrameNumber);// store the repeating requests in RequestThread

} else {

res = mRequestThread->queueRequestList(requestList, lastFrameNumber);// capture mode: a single still capture

}

if (res == OK) {

waitUntilStateThenRelock(/*active*/true, kActiveTimeout);

if (res != OK) {

SET_ERR_L("Can't transition to active in %f seconds!",

kActiveTimeout/1e9);

}

ALOGV("Camera %d: Capture request %" PRId32 " enqueued", mId,

(*(requestList.begin()))->mResultExtras.requestId);

} else {

CLOGE("Cannot queue request. Impossible.");

return BAD_VALUE;

}

return res;

}

b convertMetadataListToRequestListLocked converts the CameraMetadata entries stored in the request list into a List<sp<CaptureRequest> >.

status_t Camera3Device::convertMetadataListToRequestListLocked(

const List<const CameraMetadata> &metadataList, RequestList *requestList) {

if (requestList == NULL) {

CLOGE("requestList cannot be NULL.");

return BAD_VALUE;

}

int32_t burstId = 0;

for (List<const CameraMetadata>::const_iterator it = metadataList.begin();// CameraMetadata, mPreviewRequest

it != metadataList.end(); ++it) {

sp<CaptureRequest> newRequest = setUpRequestLocked(*it);// new CaptureRequest converted from the CameraMetadata

if (newRequest == 0) {

CLOGE("Can't create capture request");

return BAD_VALUE;

}

// Setup burst Id and request Id

newRequest->mResultExtras.burstId = burstId++;

if (it->exists(ANDROID_REQUEST_ID)) {

if (it->find(ANDROID_REQUEST_ID).count == 0) {

CLOGE("RequestID entry exists; but must not be empty in metadata");

return BAD_VALUE;

}

newRequest->mResultExtras.requestId = it->find(ANDROID_REQUEST_ID).data.i32[0];// set the id for this request

} else {

CLOGE("RequestID does not exist in metadata");

return BAD_VALUE;

}

requestList->push_back(newRequest);

ALOGV("%s: requestId = %" PRId32, __FUNCTION__, newRequest->mResultExtras.requestId);

}

return OK;

}

c The focus here is the involved processing in setUpRequestLocked

sp<Camera3Device::CaptureRequest> Camera3Device::setUpRequestLocked(

const CameraMetadata &request) {//mPreviewRequest

status_t res;

if (mStatus == STATUS_UNCONFIGURED || mNeedConfig) {

res = configureStreamsLocked();

......

sp<CaptureRequest> newRequest = createCaptureRequest(request);// convert CameraMetadata to CaptureRequest, including mOutputStreams

return newRequest;

}

configureStreamsLocked hands all the streams created on the Camera3Device side, both output and input, to the HAL3 device for handling. The core interfaces are configure_streams and register_stream_buffers. This part involves more of the data flow, so the detailed process is analyzed in the next post.

createCaptureRequest converts CameraMetadata data such as the PreviewRequest into a CaptureRequest:

sp<Camera3Device::CaptureRequest> Camera3Device::createCaptureRequest(

const CameraMetadata &request) {//mPreviewRequest

ATRACE_CALL();

status_t res;

sp<CaptureRequest> newRequest = new CaptureRequest;

newRequest->mSettings = request;//CameraMetadata

camera_metadata_entry_t inputStreams =

newRequest->mSettings.find(ANDROID_REQUEST_INPUT_STREAMS);

if (inputStreams.count > 0) {

if (mInputStream == NULL ||

mInputStream->getId() != inputStreams.data.i32[0]) {

CLOGE("Request references unknown input stream %d",

inputStreams.data.u8[0]);

return NULL;

}

// Lazy completion of stream configuration (allocation/registration)

// on first use

if (mInputStream->isConfiguring()) {

res = mInputStream->finishConfiguration(mHal3Device);

if (res != OK) {

SET_ERR_L("Unable to finish configuring input stream %d:"

" %s (%d)",

mInputStream->getId(), strerror(-res), res);

return NULL;

}

}

newRequest->mInputStream = mInputStream;

newRequest->mSettings.erase(ANDROID_REQUEST_INPUT_STREAMS);

}

camera_metadata_entry_t streams =

newRequest->mSettings.find(ANDROID_REQUEST_OUTPUT_STREAMS);// read the stream id information stored in the CameraMetadata

if (streams.count == 0) {

CLOGE("Zero output streams specified!");

return NULL;

}

for (size_t i = 0; i < streams.count; i++) {

int idx = mOutputStreams.indexOfKey(streams.data.i32[i]);// look up the Camera3OutputStream id in mOutputStreams

if (idx == NAME_NOT_FOUND) {

CLOGE("Request references unknown stream %d",

streams.data.u8[i]);

return NULL;

}

sp<Camera3OutputStreamInterface> stream =

mOutputStreams.editValueAt(idx);// returns the Camera3OutputStream: preview, callback and other streams

// Lazy completion of stream configuration (allocation/registration)

// on first use

if (stream->isConfiguring()) {// STATE_IN_CONFIG or STATE_IN_RECONFIG

res = stream->finishConfiguration(mHal3Device);// register_stream_buffers, STATE_CONFIGURED

if (res != OK) {

SET_ERR_L("Unable to finish configuring stream %d: %s (%d)",

stream->getId(), strerror(-res), res);

return NULL;

}

}

newRequest->mOutputStreams.push(stream);// add the Camera3OutputStream to the CaptureRequest's mOutputStreams

}

newRequest->mSettings.erase(ANDROID_REQUEST_OUTPUT_STREAMS);

return newRequest;

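}

When the PreviewRequest was built, the ANDROID_REQUEST_OUTPUT_STREAMS tag was already initialized with the contents of Vector<int32_t> &outputStreams, that is, the IDs of the output streams this Request needs. With these IDs, the Camera3OutputStream objects created by createStream under Camera3Device can be looked up. In other words, Camera3Device holds multiple streams for the different kinds of Request, and the Request for any one task uses only some of them.

idx = mOutputStreams.indexOfKey(streams.data.i32[i]) uses the ID of a stream contained in the PreviewRequest to find the corresponding index in the mOutputStreams KeyedVector. Note: the ID and the index are not necessarily the same value.

mOutputStreams.editValueAt(idx) gets the Camera3OutputStream that corresponds to that ID (preview stream ID, callback stream ID and so on).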
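The difference between a stream ID (the key) and its index (the position in the sorted table) is easy to miss, so here is a small sketch. StreamTable is a hypothetical sorted key/value table loosely modelled on a keyed vector, and strings stand in for the stream objects.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Toy table kept sorted by key, loosely modelled on a keyed vector.
class StreamTable {
public:
    static constexpr int kNotFound = -1;

    void add(int id, const std::string &name) {
        auto pos = std::lower_bound(
                mItems.begin(), mItems.end(), id,
                [](const std::pair<int, std::string> &item, int key) {
                    return item.first < key;
                });
        mItems.insert(pos, std::make_pair(id, name));
    }

    // Position of the key inside the sorted table, not the key itself.
    int indexOfKey(int id) const {
        for (size_t i = 0; i < mItems.size(); ++i) {
            if (mItems[i].first == id) return static_cast<int>(i);
        }
        return kNotFound;
    }

    const std::string &valueAt(int index) const {
        assert(index >= 0 && static_cast<size_t>(index) < mItems.size());
        return mItems[index].second;
    }

private:
    std::vector<std::pair<int, std::string>> mItems;
};

// Resolves the stream IDs named by a request; fails on an empty list
// or on any unknown ID, as createCaptureRequest does.
inline bool resolveStreams(const StreamTable &table,
                           const std::vector<int> &ids,
                           std::vector<std::string> *out) {
    if (ids.empty()) return false;
    for (int id : ids) {
        int idx = table.indexOfKey(id);
        if (idx == StreamTable::kNotFound) return false;
        out->push_back(table.valueAt(idx));
    }
    return true;
}
```

With streams registered under IDs 3 and 7, the indexes are 0 and 1, which is why the lookup goes through indexOfKey before valueAt.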

Once all the Camera3OutputStreams of the current Request are found, they are kept in the CaptureRequest:

class CaptureRequest : public LightRefBase<CaptureRequest> {

public:

CameraMetadata mSettings;

sp<camera3::Camera3Stream> mInputStream;

Vector<sp<camera3::Camera3OutputStreamInterface> >

mOutputStreams;

CaptureResultExtras mResultExtras;

};

Back in convertMetadataListToRequestListLocked, one CameraMetadata Request has now been processed and a CaptureRequest produced. The value of ANDROID_REQUEST_ID is kept through

newRequest->mResultExtras.requestId = it->find(ANDROID_REQUEST_ID).data.i32[0].

Across the whole Camera3 architecture this value falls into only 3 broad Request types, which shows that few Request types are exchanged with the HAL layer:

Preview Request mPreviewRequest: mPreviewRequestId (Camera2Client::kPreviewRequestIdStart),

Capture Request mCaptureRequest: mCaptureId (Camera2Client::kCaptureRequestIdStart),

Recording Request mRecordingRequest: mRecordingRequestId (Camera2Client::kRecordingRequestIdStart),

static const int32_t kPreviewRequestIdStart = 10000000;

static const int32_t kPreviewRequestIdEnd = 20000000;

static const int32_t kRecordingRequestIdStart = 20000000;

static const int32_t kRecordingRequestIdEnd = 30000000;

static const int32_t kCaptureRequestIdStart = 30000000;

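static const int32_t kCaptureRequestIdEnd = 40000000;

d mRequestThread->setRepeatingRequests(requestList)

For preview, once started the underlying hardware should keep working continuously without much switching. The Requests the Framework sends to the HAL therefore operate in repeat mode, and a repeating request list is used to process the Request over and over.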

status_t Camera3Device::RequestThread::setRepeatingRequests(

const RequestList &requests,

/*out*/

int64_t *lastFrameNumber) {

Mutex::Autolock l(mRequestLock);

if (lastFrameNumber != NULL) {// null on the first call

*lastFrameNumber = mRepeatingLastFrameNumber;

}

mRepeatingRequests.clear();

mRepeatingRequests.insert(mRepeatingRequests.begin(),

requests.begin(), requests.end());

unpauseForNewRequests();//signal request_thread in waitfornextrequest

mRepeatingLastFrameNumber = NO_IN_FLIGHT_REPEATING_FRAMES;

return OK;

}

(7) RequestThread, the request-processing thread

RequestThread::threadLoop() mainly responds to and processes requests newly added to the Request queue.

bool Camera3Device::RequestThread::threadLoop() {

....

sp<CaptureRequest> nextRequest = waitForNextRequest();// returns an entry from mRepeatingRequests, here mPreviewRequest

if (nextRequest == NULL) {

return true;

}

// Create request to HAL

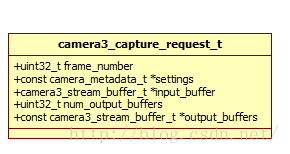

camera3_capture_request_t request = camera3_capture_request_t();// CaptureRequest converted to the camera3_capture_request_t given to HAL 3.0

request.frame_number = nextRequest->mResultExtras.frameNumber;// current frame number

Vector<camera3_stream_buffer_t> outputBuffers;

// Get the request ID, if any

int requestId;

camera_metadata_entry_t requestIdEntry =

nextRequest->mSettings.find(ANDROID_REQUEST_ID);

if (requestIdEntry.count > 0) {

requestId = requestIdEntry.data.i32[0];// get the request id, here the id of mPreviewRequest

} else {

ALOGW("%s: Did not have android.request.id set in the request",

__FUNCTION__);

requestId = NAME_NOT_FOUND;

}

.....

camera3_stream_buffer_t inputBuffer;

uint32_t totalNumBuffers = 0;

.....

// Submit request and block until ready for next one

ATRACE_ASYNC_BEGIN("frame capture", request.frame_number);

ATRACE_BEGIN("camera3->process_capture_request");

res = mHal3Device->ops->process_capture_request(mHal3Device, &request);// call the HAL's process_capture_request

ATRACE_END();

.......

}

(7.1) waitForNextRequest()

sp<Camera3Device::CaptureRequest>

Camera3Device::RequestThread::waitForNextRequest() {

status_t res;

sp<CaptureRequest> nextRequest;

// Optimized a bit for the simple steady-state case (single repeating

// request), to avoid putting that request in the queue temporarily.

Mutex::Autolock l(mRequestLock);

while (mRequestQueue.empty()) {

if (!mRepeatingRequests.empty()) {

// Always atomically enqueue all requests in a repeating request

// list. Guarantees a complete in-sequence set of captures to

// application.

const RequestList &requests = mRepeatingRequests;

RequestList::const_iterator firstRequest =

requests.begin();

nextRequest = *firstRequest;// take the first request

mRequestQueue.insert(mRequestQueue.end(),

++firstRequest,

requests.end());// insert the remaining mRepeatingRequests into mRequestQueue

// No need to wait any longer

mRepeatingLastFrameNumber = mFrameNumber + requests.size() - 1;

break;

}

res = mRequestSignal.waitRelative(mRequestLock, kRequestTimeout);// wait for the next request

if ((mRequestQueue.empty() && mRepeatingRequests.empty()) ||

exitPending()) {

Mutex::Autolock pl(mPauseLock);

if (mPaused == false) {

ALOGV("%s: RequestThread: Going idle", __FUNCTION__);

mPaused = true;

// Let the tracker know

sp<StatusTracker> statusTracker = mStatusTracker.promote();

if (statusTracker != 0) {

statusTracker->markComponentIdle(mStatusId, Fence::NO_FENCE);

}

}

// Stop waiting for now and let thread management happen

return NULL;

}

}

if (nextRequest == NULL) {

// Don't have a repeating request already in hand, so queue

// must have an entry now.

RequestList::iterator firstRequest =

mRequestQueue.begin();

nextRequest = *firstRequest;

mRequestQueue.erase(firstRequest);// take one CaptureRequest from mRequestQueue, which came from the rest of mRepeatingRequests

}

// In case we've been unpaused by setPaused clearing mDoPause, need to

// update internal pause state (capture/setRepeatingRequest unpause

// directly).

Mutex::Autolock pl(mPauseLock);

if (mPaused) {

ALOGV("%s: RequestThread: Unpaused", __FUNCTION__);

sp<StatusTracker> statusTracker = mStatusTracker.promote();

if (statusTracker != 0) {

statusTracker->markComponentActive(mStatusId);

}

}

mPaused = false;

// Check if we've reconfigured since last time, and reset the preview

// request if so. Can't use 'NULL request == repeat' across configure calls.

if (mReconfigured) {

mPrevRequest.clear();

mReconfigured = false;

}

if (nextRequest != NULL) {

nextRequest->mResultExtras.frameNumber = mFrameNumber++;// the frame number is incremented for every non-null request

nextRequest->mResultExtras.afTriggerId = mCurrentAfTriggerId;

nextRequest->mResultExtras.precaptureTriggerId = mCurrentPreCaptureTriggerId;

}

return nextRequest;

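}

nextRequest->mResultExtras.frameNumber = mFrameNumber++ records the number of the frame that the current CaptureRequest is handling.

As long as mRepeatingRequests is non-empty, its requests keep repeating. Whenever mRequestQueue is empty, the first repeating request is returned directly and the rest are inserted into mRequestQueue. Later iterations erase entries from mRequestQueue as they are taken, and once it is empty again the contents of mRepeatingRequests are reloaded. This forms a repeated response to the repeating Request.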
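The reload behaviour can be modelled without threads or locks. The sketch below is a single-threaded simplification under stated assumptions: Request and RequestSource are hypothetical, and where the real thread would wait on a condition variable, next() simply returns false.

```cpp
#include <cassert>
#include <cstdint>
#include <list>
#include <string>

struct Request {
    std::string name;
    int64_t frameNumber;
};

// Single-threaded model of the request selection logic.
class RequestSource {
public:
    // Replaces the repeating list, as setRepeatingRequests does.
    void setRepeating(const std::list<Request> &requests) {
        mRepeating = requests;
    }

    // Queues a one-shot request, e.g. a still capture.
    void queueOneShot(const Request &r) { mQueue.push_back(r); }

    // Returns false when there is nothing to send.
    bool next(Request *out) {
        assert(out != nullptr);
        if (mQueue.empty()) {
            if (mRepeating.empty()) return false;
            // Reload: hand out the first repeating request directly and
            // enqueue the remainder so the whole list is sent in order.
            auto first = mRepeating.begin();
            *out = *first;
            mQueue.insert(mQueue.end(), ++first, mRepeating.end());
        } else {
            *out = mQueue.front();
            mQueue.pop_front();
        }
        // Every request handed out gets the next frame number.
        out->frameNumber = mFrameNumber++;
        return true;
    }

private:
    std::list<Request> mRepeating;
    std::list<Request> mQueue;
    int64_t mFrameNumber = 0;
};
```

Note how a queued one-shot request is served before the repeating list is reloaded, after which the preview request resumes on its own.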

(7.2) camera_metadata_entry_t requestIdEntry = nextRequest->mSettings.find(ANDROID_REQUEST_ID); extracts the Request type value for this CaptureRequest
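Given the ID ranges listed earlier for Camera2Client, a request ID can be mapped back to its Request type. The sketch below assumes each range is half-open, [Start, End), since one range's End equals the next range's Start. RequestKind, classifyRequestId and nextPreviewId are hypothetical helpers, not framework functions.

```cpp
#include <cassert>
#include <cstdint>

// Range boundaries as listed for Camera2Client above.
static const int32_t kPreviewRequestIdStart = 10000000;
static const int32_t kPreviewRequestIdEnd = 20000000;
static const int32_t kRecordingRequestIdStart = 20000000;
static const int32_t kRecordingRequestIdEnd = 30000000;
static const int32_t kCaptureRequestIdStart = 30000000;
static const int32_t kCaptureRequestIdEnd = 40000000;

enum class RequestKind { Preview, Recording, Capture, Unknown };

// Assumption: each range is half-open, [Start, End).
inline RequestKind classifyRequestId(int32_t id) {
    if (id >= kPreviewRequestIdStart && id < kPreviewRequestIdEnd) {
        return RequestKind::Preview;
    }
    if (id >= kRecordingRequestIdStart && id < kRecordingRequestIdEnd) {
        return RequestKind::Recording;
    }
    if (id >= kCaptureRequestIdStart && id < kCaptureRequestIdEnd) {
        return RequestKind::Capture;
    }
    return RequestKind::Unknown;
}

// Hypothetical helper: advance a preview ID, wrapping inside its range.
inline int32_t nextPreviewId(int32_t current) {
    assert(classifyRequestId(current) == RequestKind::Preview);
    int32_t next = current + 1;
    return next >= kPreviewRequestIdEnd ? kPreviewRequestIdStart : next;
}
```

A result coming back from the HAL could then be routed by its request ID alone, without inspecting the rest of the metadata.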

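(7.3) getBuffer operation

This involves the more complex data flow; see the next post.

(7.4) mHal3Device->ops->process_capture_request(mHal3Device, &request)

The request here has already been converted from a CaptureRequest into the camera3_capture_request_t structure used to talk to HAL 3.0.

8 Summary

At this point one complete Request has been sent to the HAL 3.0 device. Preview startup first creates multiple OutputStreams, then packs those streams into mPreviewRequest to start streaming. That Request is converted into a CaptureRequest, and the resulting request list is handed to RequestThread for processing. Put simply, each Request is Camera3Device asking HAL 3.0 for one frame of data, although a Request can also carry control operations such as autofocus, which will be covered later.

Starting from the startPreview entry point and ending with the Request delivered to HAL 3.0, this roughly covers one complete pass of the control flow. The more complex data flow is in essence folded into these same control operations, but the management of the video buffers will be described and summarized in the next post.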