Deploying a Ceph Storage Cluster and Installing CephFS on CentOS 7

阿新 • Published: 2019-01-06

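Ceph has become one of the most popular distributed storage solutions in the open-source community. I recently needed to evaluate how OpenStack and Ceph can be integrated, so I started learning Ceph, beginning, naturally, with building a Ceph cluster.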

I built the cluster on six virtual machines: one admin node, one monitor node, one MDS node, two OSD nodes and one client node. The machine configuration is:

> lsb_release -a

- LSB Version: :core-4.1-amd64:core-4.1-noarch

- Distributor ID: CentOS

- Description: CentOS Linux release 7.1.1503 (Core)

- Release: 7.1.1503

- Codename: Core

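> uname -r

- 3.10.0-229.el7.x86_64

Check the filesystem type of the data disk with df -T, then reformat it as XFS:

- >df -T

- >umount -l /data (detach the mount point)

- >mkfs.xfs -f /dev/vdb (force-create an XFS filesystem; this erases everything on /dev/vdb)

- >mount /dev/vdb /data (remount)

Set the hostname of each machine:

- > hostnamectl set-hostname {name}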
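Before moving on, it can help to confirm on each node that the two prerequisites above actually hold: the data mount is XFS and the hostname matches the name used in /etc/hosts. The following is a minimal sketch, assuming GNU coreutils (`stat -f -c %T` prints the filesystem type name) and /data as the data mount point; `fs_type`, `is_xfs` and `hostname_matches` are hypothetical helpers, not part of Ceph or ceph-deploy.

```bash
#!/usr/bin/env bash
# Hypothetical preflight helpers for one node (not part of Ceph or ceph-deploy).

# Print the filesystem type of the mount that contains PATH, e.g. "xfs".
fs_type() {
  stat -f -c %T "$1"
}

# Exit 0 only if PATH lives on an XFS filesystem.
is_xfs() {
  [ "$(fs_type "$1")" = "xfs" ]
}

# Exit 0 only if this machine's short hostname (node name without any
# domain part) equals EXPECTED, i.e. the name /etc/hosts maps to its IP.
hostname_matches() {
  local name
  name=$(uname -n)
  [ "${name%%.*}" = "$1" ]
}
```

For example, on the first OSD node: `hostname_matches ceph-osd-node1 && is_xfs /data && echo ready`.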

0.1 Install the Ceph deployment tool: ceph-deploy

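Add the package source to the software repositories. Use a text editor to create a YUM (Yellowdog Updater, Modified) repository file at /etc/yum.repos.d/ceph.repo, for example:

> sudo vim /etc/yum.repos.d/ceph.repo

Paste in the following content, replacing {ceph-release} with the name of the latest major stable Ceph release (e.g. firefly) and {distro} with your Linux distribution (e.g. el6 for CentOS 6, el7 for CentOS 7, …):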

- [ceph-noarch]

- name=Ceph noarch packages

- baseurl=http://download.ceph.com/rpm-{ceph-release}/{distro}/noarch

- enabled=1

- gpgcheck=1

- type=rpm-md

- gpgkey=https://download.ceph.com/keys/release.asc

- > sudo yum update && sudo yum install ceph-deploy
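If you prefer not to edit the repository file by hand, it can also be generated. A minimal sketch follows; `write_ceph_repo` is a hypothetical helper, and the release/distro pair you pass (for example `jewel` and `el7`) is an assumption you should check against what is actually published on download.ceph.com. It only fills in the two placeholders; every other line is copied from the file content above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write the ceph.repo file shown above with the
# {ceph-release} and {distro} placeholders filled in.
# Usage: write_ceph_repo RELEASE DISTRO OUTFILE
write_ceph_repo() {
  local release="$1" distro="$2" out="$3"
  cat > "$out" <<EOF
[ceph-noarch]
name=Ceph noarch packages
baseurl=http://download.ceph.com/rpm-${release}/${distro}/noarch
enabled=1
gpgcheck=1
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc
EOF
}
```

As root: `write_ceph_repo jewel el7 /etc/yum.repos.d/ceph.repo`, then run the yum command above. One caveat when choosing the release: the directory-based `ceph-deploy osd prepare/activate/create host:/path` syntax used later in this article belongs to the ceph-disk era; to my knowledge, ceph-deploy 2.x (used with Luminous and later) switched to ceph-volume and no longer accepts it.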

0.2 Run ssh-copy-id on each machine to set up password-less SSH login between these servers.

Note: many guides say to use ssh-copy-id, but it did not work when I tried it; simply adding the admin node's public key to the other machines is enough.
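The workaround in the note can be scripted from the admin node. A minimal sketch, assuming you work as root (as the `#` prompts in this article suggest), that password login still works once per host, and that the admin key pair already exists at ~/.ssh/id_rsa and ~/.ssh/id_rsa.pub; `distribute_admin_key`, `run`, `PUB_KEY` and `DRY_RUN` are hypothetical names introduced here.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: append the admin node's public key to
# ~/.ssh/authorized_keys on every other node. Run on ceph-adm-node.

NODES=(ceph-mon-node ceph-mds-node ceph-osd-node1 ceph-osd-node2 ceph-client-node)

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

distribute_admin_key() {
  local pub="${PUB_KEY:-$HOME/.ssh/id_rsa.pub}" node
  if [ ! -r "$pub" ]; then
    echo "public key $pub not found; run ssh-keygen first" >&2
    return 1
  fi
  for node in "${NODES[@]}"; do
    # The key is fed on stdin; the remote shell creates ~/.ssh with the
    # permissions sshd expects and appends the key to authorized_keys.
    run ssh "$node" 'mkdir -p ~/.ssh && chmod 700 ~/.ssh && cat >> ~/.ssh/authorized_keys && chmod 600 ~/.ssh/authorized_keys' < "$pub"
  done
}
```

Preview with `DRY_RUN=1 distribute_admin_key`, then run it without DRY_RUN and enter each host's password once; afterwards `ssh ceph-mon-node hostname` should no longer prompt.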

0.3 Configure the NTP service

- # yum -y install ntp ntpdate ntp-doc

- # ntpdate 0.us.pool.ntp.org

- # hwclock --systohc

- # systemctl enable ntpd.service

- # systemctl start ntpd.service
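Keeping clocks in sync matters because Ceph monitors report a clock-skew health warning once the offset between them exceeds `mon_clock_drift_allowed`, which defaults to 0.05 seconds. The sketch below only performs that comparison; `offset_ok` is a hypothetical helper, and how you obtain the offset is up to you. Note that `ntpdate -q` reports offsets in seconds, whereas the offset column of `ntpq -p` is in milliseconds, so convert before comparing.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if |OFFSET| (seconds) is below THRESHOLD
# (seconds, default 0.05, mirroring Ceph's default mon_clock_drift_allowed).
# Usage: offset_ok OFFSET [THRESHOLD]
offset_ok() {
  awk -v o="$1" -v t="${2:-0.05}" 'BEGIN {
    o += 0; t += 0            # force numeric comparison
    if (o < 0) o = -o         # compare the absolute offset
    if (o < t) exit 0
    exit 1
  }'
}
```

For example, `offset_ok 0.012 && echo ok` prints ok, while an offset of 0.2 s fails the check.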

0.4 Disable the firewall or open ports 6789 and 6800-7100, and disable SELinux

- # sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

- # setenforce 0

- # yum -y install firewalld

- # firewall-cmd --zone=public --add-port=6789/tcp --permanent

- # firewall-cmd --zone=public --add-port=6800-7100/tcp --permanent

- # firewall-cmd --reload
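To apply the same firewall rules on all six machines from the admin node, a loop over SSH is enough. This sketch assumes root SSH access to every node and reuses the port list from the commands above; `run`, `open_ceph_ports`, `NODES`, `PORTS` and the `DRY_RUN` switch are hypothetical names used for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: open the Ceph ports from section 0.4 on every node.

NODES=(ceph-adm-node ceph-mon-node ceph-mds-node ceph-osd-node1 ceph-osd-node2 ceph-client-node)
PORTS=(6789/tcp 6800-7100/tcp)

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

open_ceph_ports() {
  local node port
  for node in "${NODES[@]}"; do
    for port in "${PORTS[@]}"; do
      run ssh "$node" firewall-cmd --zone=public --add-port="$port" --permanent
    done
    # Permanent rules only take effect in the running firewall after a reload.
    run ssh "$node" firewall-cmd --reload
  done
}
```

Preview with `DRY_RUN=1 open_ceph_ports`. Opening the same range everywhere is simpler than tailoring rules per role, at the cost of exposing ports on nodes such as ceph-client-node that run no Ceph daemons.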

1. Cluster role assignment (these hostname mappings also go into /etc/hosts on every node):

- 10.221.83.246 ceph-adm-node

- 10.221.83.247 ceph-mon-node

- 10.221.83.248 ceph-mds-node

- 10.221.83.249 ceph-osd-node1

- 10.221.83.250 ceph-osd-node2

- 10.221.83.251 ceph-client-node
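Since every node must resolve these names, the table can be turned into /etc/hosts entries. This sketch appends only the lines that are missing, so it is safe to re-run; `ceph_hosts` and `add_ceph_hosts` are hypothetical helpers, and the target file is a parameter (defaulting to /etc/hosts) so you can try it on a copy first.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: add the cluster role table to a hosts file.

# The IP/hostname pairs from the role assignment above.
ceph_hosts() {
  cat <<'EOF'
10.221.83.246 ceph-adm-node
10.221.83.247 ceph-mon-node
10.221.83.248 ceph-mds-node
10.221.83.249 ceph-osd-node1
10.221.83.250 ceph-osd-node2
10.221.83.251 ceph-client-node
EOF
}

# Usage: add_ceph_hosts [FILE]   (default /etc/hosts)
add_ceph_hosts() {
  local file="${1:-/etc/hosts}" line
  touch "$file"
  while IFS= read -r line; do
    # Append a line only if it is not already present verbatim.
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
  done < <(ceph_hosts)
}
```

Run `add_ceph_hosts` as root on each of the six machines, or run it once and copy /etc/hosts to the other nodes with scp.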

2. Deploy the monitor service

2.1 Create a working directory

- mkdir -p ~/my-cluster

- cd ~/my-cluster

Create a new cluster with ceph-mon-node as its initial monitor:

- >ceph-deploy new ceph-mon-node

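In the generated ceph.conf, add the following line under the [global] section so that the default replica count is 2 (the cluster has only two OSDs):

- > osd pool default size = 2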
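The ceph.conf edit can be scripted as well. This sketch assumes the file is the one `ceph-deploy new` wrote into ~/my-cluster, that it already has a `[global]` header (nothing is inserted otherwise), that GNU sed is available (`sed -i` and the one-line `a` command), and that the option is spelled with spaces rather than underscores; `set_default_pool_size` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: ensure "osd pool default size = SIZE" is in [global].
# Usage: set_default_pool_size CONF SIZE
set_default_pool_size() {
  local conf="$1" size="$2"
  if grep -q '^osd pool default size' "$conf"; then
    # Replace an existing setting in place instead of duplicating it.
    sed -i "s/^osd pool default size.*/osd pool default size = ${size}/" "$conf"
  else
    # Insert the setting on the line right after the [global] header.
    sed -i "/^\[global\]/a osd pool default size = ${size}" "$conf"
  fi
}
```

Run `set_default_pool_size ~/my-cluster/ceph.conf 2` after `ceph-deploy new`; running it again is harmless because an existing line is replaced rather than duplicated.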

Install Ceph on all nodes:

- >ceph-deploy install ceph-adm-node ceph-mon-node ceph-mds-node ceph-osd-node1 ceph-osd-node2 ceph-client-node

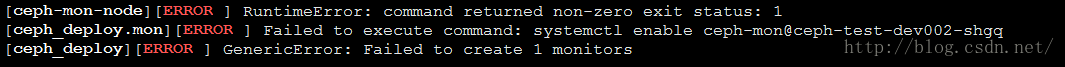

Create the monitor:

- >ceph-deploy mon create ceph-mon-node

Solution: use "hostnamectl set-hostname {name}" to set every host's hostname to the corresponding name in /etc/hosts.

2.6 Gather the keyring files from each node

- >ceph-deploy gatherkeys ceph-mon-node
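A quick way to confirm that `gatherkeys` succeeded is to check the working directory for the expected keyrings. The list below (`ceph.mon.keyring` from `ceph-deploy new`, plus the admin and bootstrap keyrings) is an assumption based on ceph-deploy 1.5-era behaviour, so adjust it if your version writes different files; `missing_keyrings` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical check: list expected keyrings missing from a directory.
# The file names are assumptions; adjust them to your ceph-deploy version.

EXPECTED_KEYRINGS=(
  ceph.mon.keyring
  ceph.client.admin.keyring
  ceph.bootstrap-osd.keyring
  ceph.bootstrap-mds.keyring
)

# Usage: missing_keyrings [DIR]
# Prints each missing keyring name; returns 1 if anything is missing.
missing_keyrings() {
  local dir="${1:-.}" k rc=0
  for k in "${EXPECTED_KEYRINGS[@]}"; do
    if [ ! -f "$dir/$k" ]; then
      printf '%s\n' "$k"
      rc=1
    fi
  done
  return "$rc"
}
```

For example: `cd ~/my-cluster && missing_keyrings . && echo "all keyrings present"`.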

On each OSD node (replace {id} with 1 or 2), create a directory for the OSD data:

- ssh ceph-osd-node{id}

- mkdir -p /var/local/osd{id}

- exit
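With two OSD nodes this step is quick to do by hand, but it can also be looped from the admin node. This sketch assumes root SSH access and that ceph-osd-nodeN gets /var/local/osdN, as in the ceph-deploy osd commands in this article; `create_osd_dirs`, `OSD_NODES`, `run` and `DRY_RUN` are hypothetical names.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: create /var/local/osdN on ceph-osd-nodeN over SSH.

OSD_NODES=(ceph-osd-node1 ceph-osd-node2)

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

create_osd_dirs() {
  local i
  for i in "${!OSD_NODES[@]}"; do
    # Array index 0 -> osd1, index 1 -> osd2, matching the node numbering.
    run ssh "${OSD_NODES[$i]}" mkdir -p "/var/local/osd$((i + 1))"
  done
}
```

Since Infernalis, Ceph daemons run as the ceph user by default, so if activation complains about permissions on these directories, `chown ceph:ceph /var/local/osdN` on each node is worth trying.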

- # Create (prepare) the OSDs

- ceph-deploy osd prepare ceph-osd-node1:/var/local/osd1 ceph-osd-node2:/var/local/osd2

- #啟用osd

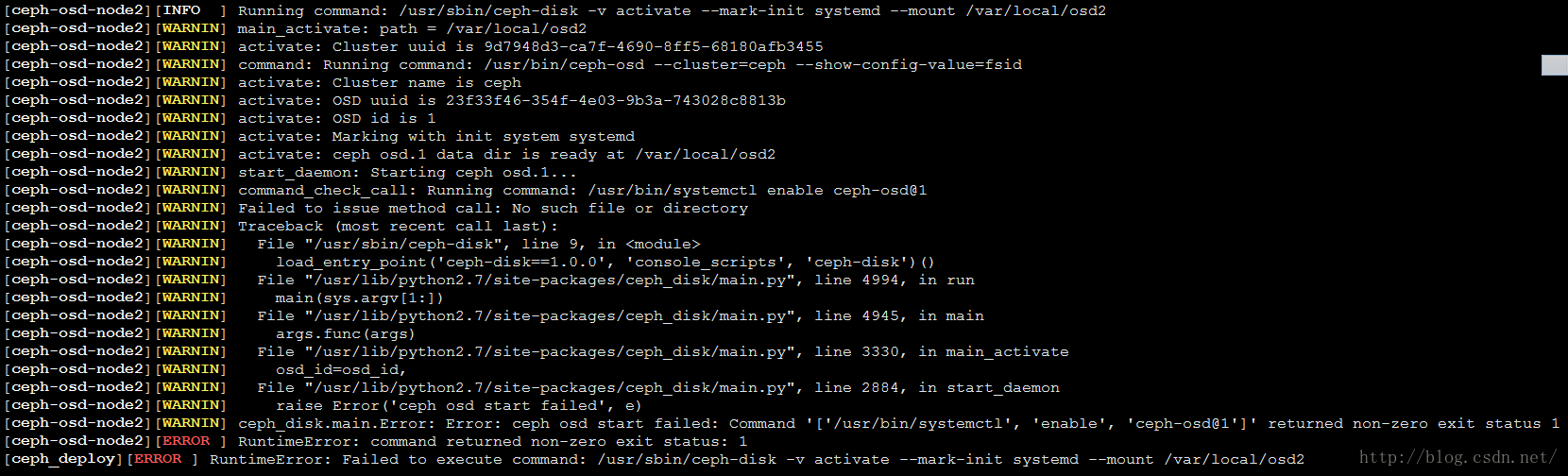

- ceph-deploy osd activate ceph-osd-node1:/var/local/osd1 ceph-osd-node2:/var/local/osd2

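Running prepare followed by activate produces the problem shown above. The root cause is that the command it runs, systemctl enable [email protected], does not exist as such; it should be systemctl status/start [email protected]. Solution: use create directly in place of the prepare and activate commands: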

- ceph-deploy osd create ceph-osd-node1:/var/local/osd1 ceph-osd-node2:/var/local/osd2

Check the OSD status: ceph-deploy osd list ceph-osd-node1 ceph-osd-node2

- [ceph-osd-node1][INFO ] Running command: /usr/sbin/ceph-disk list

- [ceph-osd-node1][INFO ] ----------------------------------------

- [ceph-osd-node1][INFO ] ceph-0

- [ceph-osd-node1][INFO ] ----------------------------------------

- [ceph-osd-node1][INFO ] Path /var/lib/ceph/osd/ceph-0

- [ceph-osd-node1][INFO ] ID 0

- [ceph-osd-node1][INFO ] Name osd.0

- [ceph-osd-node1][INFO ] Status up

- [ceph-osd-node1][INFO ] Reweight 1.0

- [ceph-osd-node1][INFO ] Active ok

- [ceph-osd-node1][INFO ] Magic ceph osd volume v026

- [ceph-osd-node1][INFO ] Whoami 0

- [ceph-osd-node1][INFO ] Journal path /var/local/osd1/journal

- [ceph-osd-node1][INFO ] ----------------------------------------

- [ceph-osd-node2][INFO ] Running command: /usr/sbin/ceph-disk list

- [ceph-osd-node2][INFO ] ----------------------------------------

- [ceph-osd-node2][INFO ] ceph-1

- [ceph-osd-node2][INFO ] ----------------------------------------

- [ceph-osd-node2][INFO ] Path /var/lib/ceph/osd/ceph-1

- [ceph-osd-node2][INFO ] ID 1

- [ceph-osd-node2][INFO ] Name osd.1

- [ceph-osd-node2][INFO ] Status up

- [ceph-osd-node2][INFO ] Reweight 1.0

- [ceph-osd-node2][INFO ] Active ok

- [ceph-osd-node2][INFO ] Magic ceph osd volume v026

- [ceph-osd-node2][INFO ] Whoami 1

- [ceph-osd-node2][INFO ] Journal path /var/local/osd2/journal

- [ceph-osd-node2][INFO ] ----------------------------------------
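The `ceph-deploy osd list` output above is easy to check by eye for two OSDs, but a small filter helps once there are more. This sketch assumes the log format shown above (`[host][INFO ] Key value`) and keys on the `Name` and `Status` fields; `osd_status_summary` and `all_osds_up` are hypothetical helpers, and other ceph-deploy versions may format the listing differently.

```bash
#!/usr/bin/env bash
# Hypothetical filters for `ceph-deploy osd list` output read on stdin.

# Print "osd.N status" for every OSD block in the listing.
osd_status_summary() {
  awk '{
    for (i = 1; i < NF; i++) {
      if ($i == "Name")   name = $(i + 1)
      if ($i == "Status") print name, $(i + 1)
    }
  }'
}

# Succeed only if at least one OSD is listed and every listed OSD is "up".
all_osds_up() {
  osd_status_summary | awk '
    { n++; if ($2 != "up") bad++ }
    END { if (n > 0 && bad == 0) exit 0; exit 1 }
  '
}
```

For example: `ceph-deploy osd list ceph-osd-node1 ceph-osd-node2 2>&1 | all_osds_up && echo "all OSDs up"` (the `2>&1` captures the log lines whichever stream ceph-deploy writes them to).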

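Push the configuration file and the admin keyring to every Ceph node with ceph-deploy admin:

- > ceph-deploy admin ceph-* (all Ceph nodes)

- > For example: ceph-deploy admin ceph-osd-node1 ceph-osd-node2 ceph-mon-node ceph-mds-node ceph-client-node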

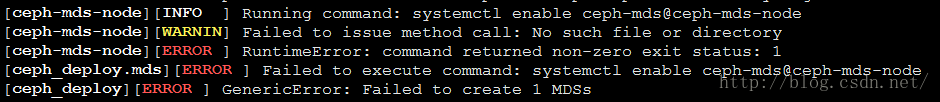

Create the MDS (metadata server):

- > ceph-deploy mds create ceph-mds-node

Cause: [email protected] was not found on the MDS machine ceph-mds-node. Solution: on ceph-mds-node, run cp [email protected] [email protected]

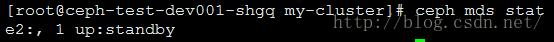

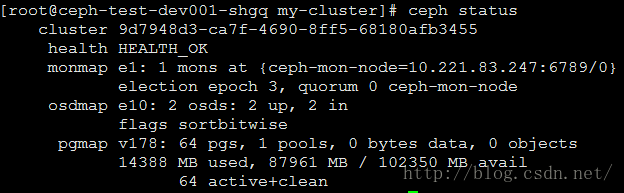

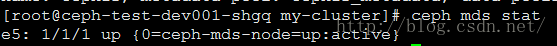

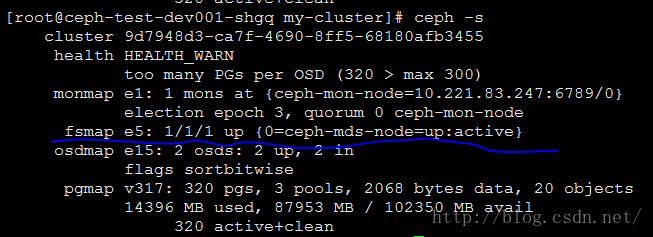

5. Cluster verification

5.1 Verify the MDS node

> ps -ef | grep mds

> ceph mds stat

> ceph mds dump

5.2 Verify the monitor node

> ps -ef | grep mon

5.3 Verify the OSD nodes

> ps -ef | grep osd

5.4 Cluster status

> ceph -s
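Rather than re-running `ceph -s` by hand until the cluster settles, you can poll `ceph health` until it reports HEALTH_OK. A minimal sketch; `wait_for_health_ok` and the `CEPH_CMD` override (handy for `sudo ceph`, or for testing without a cluster) are hypothetical, and the default five-minute timeout is an arbitrary choice.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: poll `ceph health` until it reports HEALTH_OK.
# Usage: wait_for_health_ok [TIMEOUT_SECONDS] [INTERVAL_SECONDS]
wait_for_health_ok() {
  local timeout="${1:-300}" interval="${2:-5}" waited=0 status
  while true; do
    # CEPH_CMD is left unquoted on purpose so that "sudo ceph" splits into words.
    status=$(${CEPH_CMD:-ceph} health 2>/dev/null)
    case "$status" in
      HEALTH_OK*) return 0 ;;
    esac
    if [ "$waited" -ge "$timeout" ]; then
      echo "cluster not healthy after ${waited}s: ${status:-no response}" >&2
      return 1
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
}
```

For example: `wait_for_health_ok 600 10 && ceph -s`.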

That essentially completes the Ceph storage cluster setup; the next step is to configure a specific storage type on top of it.

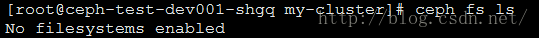

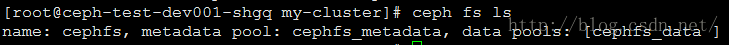

6. Create the Ceph filesystem

6.1 On the admin node, use ceph-deploy to install Ceph on the ceph-client node

- > ceph-deploy install ceph-client-node

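Create the data pool and the metadata pool for CephFS, replacing <pg_num> with the number of placement groups:

- > ceph osd pool create cephfs_data <pg_num>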

- > ceph osd pool create cephfs_metadata <pg_num>

Create the filesystem on top of the two pools (metadata pool first, then data pool):

- > ceph fs new <fs_name> cephfs_metadata cephfs_data
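The pool commands leave `<pg_num>` open. A common rule of thumb from the Ceph placement-group documentation of this era is: total PGs ≈ (number of OSDs × 100) / replica count, rounded up to a power of two. The sketch below only performs that arithmetic; `suggest_pg_num` is a hypothetical helper and the result is a starting point, not a tuned value.

```bash
#!/usr/bin/env bash
# Hypothetical helper: (OSDS * 100) / REPLICAS, rounded up to a power of two.
# Usage: suggest_pg_num OSDS REPLICAS
suggest_pg_num() {
  local osds="$1" size="$2" target pg=1
  target=$(( (osds * 100 + size - 1) / size ))   # ceiling division
  while [ "$pg" -lt "$target" ]; do
    pg=$((pg * 2))
  done
  printf '%s\n' "$pg"
}
```

With the two OSDs and `osd pool default size = 2` used in this article, `suggest_pg_num 2 2` gives 128. The rule of thumb is a total across all pools, so how to split it between cephfs_data and cephfs_metadata is a judgment call; in releases of this era pg_num could be raised later but not lowered, so erring on the small side is safer.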

> ceph mds stat

> ceph -s

7. Mount the Ceph filesystem