Hadoop Study Notes 4: Common HDFS Commands

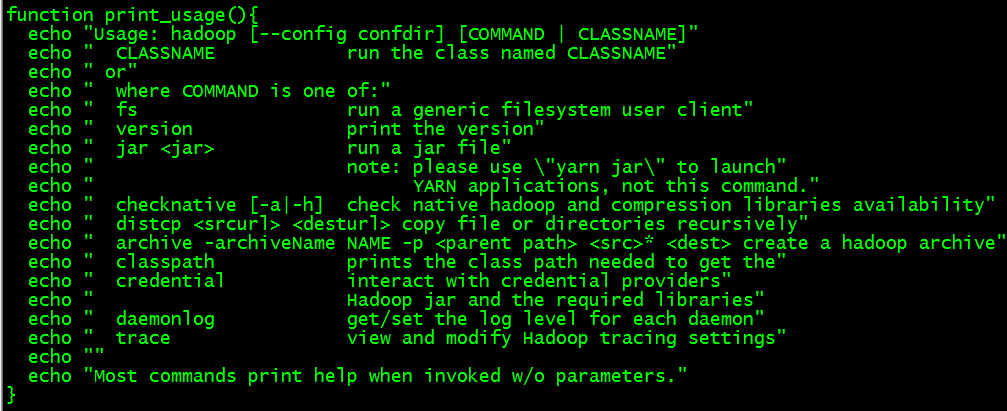

1. The help text printed by the ${HADOOP_HOME}/bin/hadoop script lists these hadoop subcommands:

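hadoop version //print the version

hadoop fs //run a generic file system client

hadoop jar //run a jar file

hadoop classpath //print the class path needed for the Hadoop jar and its libraries

hadoop checknative //check whether the native Hadoop and compression libraries are available

hadoop distcp //copy files or directories recursively (distributed copy)

hadoop credential //interact with credential providers

hadoop trace //view and modify Hadoop tracing settings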

Each subcommand is dispatched to a different Java class.
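To make the dispatch concrete, here is a simplified sketch of the class selection inside bin/hadoop. The class names are recalled from the Hadoop 2.x script and may differ in other versions; the helper name resolve_hadoop_class is hypothetical, and the real script also builds CLASSPATH and JVM options before launching java.

```sh
#!/bin/sh
# Simplified sketch of the subcommand -> Java class mapping in bin/hadoop
# (Hadoop 2.x). resolve_hadoop_class is a hypothetical helper, not part of Hadoop.
# Notes on the real script:
#  - "hadoop classpath" with no further arguments just echoes $CLASSPATH
#    without starting a JVM; the Classpath class is used when options are given.
#  - any unrecognized word is treated as a fully qualified class name
#    (the documented "hadoop CLASSNAME" form).
resolve_hadoop_class() {
  case "$1" in
    fs)          echo org.apache.hadoop.fs.FsShell ;;
    version)     echo org.apache.hadoop.util.VersionInfo ;;
    jar)         echo org.apache.hadoop.util.RunJar ;;
    classpath)   echo org.apache.hadoop.util.Classpath ;;
    checknative) echo org.apache.hadoop.util.NativeLibraryChecker ;;
    distcp)      echo org.apache.hadoop.tools.DistCp ;;
    credential)  echo org.apache.hadoop.security.alias.CredentialShell ;;
    trace)       echo org.apache.hadoop.tracing.TraceAdmin ;;
    *)           echo "$1" ;;
  esac
}

# Print the class behind each subcommand listed above.
for cmd in version fs jar classpath checknative distcp credential trace; do
  printf '%-12s -> %s\n' "$cmd" "$(resolve_hadoop_class "$cmd")"
done
```

Because unrecognized words fall through as class names, something like `hadoop org.example.MyTool` (a hypothetical class) would run that class directly, provided it is on the classpath.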

2. Commands that bin/hdfs can run:

dfs //equivalent to the hadoop fs command

classpath prints the classpath

namenode -format format the DFS filesystem

secondarynamenode run the DFS secondary namenode

namenode run the DFS namenode

journalnode run the DFS journalnode

zkfc run the ZK Failover Controller daemon

datanode run a DFS datanode

dfsadmin run a DFS admin client

haadmin run a DFS HA admin client

fsck run a DFS filesystem checking utility

balancer run a cluster balancing utility

jmxget get JMX exported values from NameNode or DataNode

mover run a utility to move block replicas across storage types

oiv apply the offline fsimage viewer to an fsimage

oiv_legacy apply the offline fsimage viewer to a legacy fsimage

oev apply the offline edits viewer to an edits file

fetchdt fetch a delegation token from the NameNode

getconf get config values from configuration

groups get the groups which users belong to

snapshotDiff diff two snapshots of a directory or diff the current directory contents with a snapshot

lsSnapshottableDir list all snapshottable dirs owned by the current user

Use -help to see options

portmap run a portmap service

nfs3 run an NFS version 3 gateway

cacheadmin configure the HDFS cache

crypto configure HDFS encryption zones

storagepolicies list/get/set block storage policies

version print the version
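bin/hdfs follows the same case-statement pattern, which is why `hdfs dfs` and `hadoop fs` are equivalent: both end up launching org.apache.hadoop.fs.FsShell. The sketch below covers only some of the commands above; the class names are recalled from Hadoop 2.x and should be checked against your own bin/hdfs, and resolve_hdfs_class is a hypothetical helper.

```sh
#!/bin/sh
# Reduced sketch of the subcommand -> Java class mapping in bin/hdfs (Hadoop 2.x).
# resolve_hdfs_class is hypothetical; commands not covered here return an error.
resolve_hdfs_class() {
  case "$1" in
    dfs)               echo org.apache.hadoop.fs.FsShell ;;
    namenode)          echo org.apache.hadoop.hdfs.server.namenode.NameNode ;;
    secondarynamenode) echo org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode ;;
    datanode)          echo org.apache.hadoop.hdfs.server.datanode.DataNode ;;
    journalnode)       echo org.apache.hadoop.hdfs.qjournal.server.JournalNode ;;
    zkfc)              echo org.apache.hadoop.hdfs.tools.DFSZKFailoverController ;;
    dfsadmin)          echo org.apache.hadoop.hdfs.tools.DFSAdmin ;;
    haadmin)           echo org.apache.hadoop.hdfs.tools.DFSHAAdmin ;;
    fsck)              echo org.apache.hadoop.hdfs.tools.DFSck ;;
    balancer)          echo org.apache.hadoop.hdfs.server.balancer.Balancer ;;
    getconf)           echo org.apache.hadoop.hdfs.tools.GetConf ;;
    *)                 echo "not covered by this sketch: $1" >&2; return 1 ;;
  esac
}

# "hdfs dfs" resolves to FsShell, the same class as "hadoop fs".
for cmd in dfs namenode datanode dfsadmin fsck; do
  printf '%-18s -> %s\n' "$cmd" "$(resolve_hdfs_class "$cmd")"
done
```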

3. hdfs dfs commands (same as hadoop fs):

Usage: hadoop fs [generic options]

[-appendToFile <localsrc> ... <dst>]

[-cat [-ignoreCrc] <src> ...]

[-checksum <src> ...]

[-chgrp [-R] GROUP PATH...]

[-chmod [-R] <MODE[,MODE]... | OCTALMODE> PATH...]

[-chown [-R] [OWNER][:[GROUP]] PATH...]

[-copyFromLocal [-f] [-p] [-l] <localsrc> ... <dst>]

[-copyToLocal [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]

[-count [-q] [-h] <path> ...]

[-cp [-f] [-p | -p[topax]] <src> ... <dst>]

[-createSnapshot <snapshotDir> [<snapshotName>]]

[-deleteSnapshot <snapshotDir> <snapshotName>]

[-df [-h] [<path> ...]]

[-du [-s] [-h] <path> ...]

[-expunge]

[-find <path> ... <expression> ...]

[-get [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]

[-getfacl [-R] <path>]

[-getfattr [-R] {-n name | -d} [-e en] <path>]

[-getmerge [-nl] <src> <localdst>]

[-help [cmd ...]]

[-ls [-d] [-h] [-R] [<path> ...]]

[-mkdir [-p] <path> ...]

[-moveFromLocal <localsrc> ... <dst>]

[-moveToLocal <src> <localdst>]

[-mv <src> ... <dst>]

[-put [-f] [-p] [-l] <localsrc> ... <dst>]

[-renameSnapshot <snapshotDir> <oldName> <newName>]

[-rm [-f] [-r|-R] [-skipTrash] <src> ...]

[-rmdir [--ignore-fail-on-non-empty] <dir> ...]

[-setfacl [-R] [{-b|-k} {-m|-x <acl_spec>} <path>]|[--set <acl_spec> <path>]]

[-setfattr {-n name [-v value] | -x name} <path>]

[-setrep [-R] [-w] <rep> <path> ...]

[-stat [format] <path> ...]

[-tail [-f] <file>]

[-test -[defsz] <path>]

[-text [-ignoreCrc] <src> ...]

[-touchz <path> ...]

[-truncate [-w] <length> <path> ...]

[-usage [cmd ...]]
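Several of these options are most useful from scripts. -test, for example, prints nothing and reports its answer through the exit status (0 means true), so it plugs directly into shell conditionals. A minimal sketch, assuming an `hdfs` launcher on the PATH; the HDFS_CMD variable and the helper function names are hypothetical, not Hadoop settings.

```sh
#!/bin/sh
# HDFS_CMD is a hypothetical knob: the launcher to call (default "hdfs").
# It is left unquoted at call sites so it may hold a command plus arguments.
HDFS_CMD=${HDFS_CMD:-hdfs}

# "dfs -test" answers via exit status: 0 = true, non-zero = false.
hdfs_is_dir()         { $HDFS_CMD dfs -test -d "$1"; }  # path is a directory
hdfs_exists()         { $HDFS_CMD dfs -test -e "$1"; }  # path exists
hdfs_is_file()        { $HDFS_CMD dfs -test -f "$1"; }  # path is a file
hdfs_is_zero_length() { $HDFS_CMD dfs -test -z "$1"; }  # file has zero length

# Create a directory only when it is missing, reporting what happened.
ensure_hdfs_dir() {
  if hdfs_is_dir "$1"; then
    echo "already a directory: $1"
  else
    $HDFS_CMD dfs -mkdir -p "$1"
  fi
}

# Usage (requires a reachable cluster):
#   hdfs_exists /user/ubuntu && echo "found"
#   ensure_hdfs_dir /user/ubuntu/input
```

HDFS_CMD is read at call time rather than hard-coded, so the helpers can be exercised with a stand-in command when no cluster is available.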

4. Examples:

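$ hdfs dfs -mkdir -p /user/ubuntu/ //create a directory (and any missing parents) on HDFS

$ hdfs dfs -put hdfs.cmd /user/ubuntu/ //upload a local file to HDFS

$ hdfs dfs -get /user/ubuntu/hadoop.cmd a.cmd //download a file from HDFS to the local file system

$ hdfs dfs -rm -r -f /user/ubuntu/ //delete recursively; -f suppresses the error if the path does not exist

$ hdfs dfs -ls -R / //recursively list the HDFS file system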
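The examples above can be collected into one script. This is a sketch under stated assumptions: HDFS_CMD and DRY_RUN are hypothetical knobs, and the script only prints the commands by default (DRY_RUN=1) because one step deletes /user/ubuntu/. Unlike the example list, it downloads hdfs.cmd, the file it has just uploaded, so the sequence also works on a fresh directory.

```sh
#!/bin/sh
# Hypothetical knobs: HDFS_CMD = launcher to call, DRY_RUN=1 = print only.
HDFS_CMD=${HDFS_CMD:-hdfs}
DRY_RUN=${DRY_RUN:-1}

# Print the command in dry-run mode, otherwise execute it.
run_hdfs() {
  if [ "$DRY_RUN" = 1 ]; then
    echo "$HDFS_CMD $*"
  else
    $HDFS_CMD "$@"
  fi
}

# Same order as the examples: mkdir, put, get, rm, ls.
hdfs_demo() {
  demo_dir=/user/ubuntu
  run_hdfs dfs -mkdir -p "$demo_dir/"             # create the directory
  run_hdfs dfs -put hdfs.cmd "$demo_dir/"         # upload a local file
  run_hdfs dfs -get "$demo_dir/hdfs.cmd" a.cmd    # download it again
  run_hdfs dfs -rm -r -f "$demo_dir/"             # delete the directory tree
  run_hdfs dfs -ls -R /                           # list what remains
}

hdfs_demo
```

Running it as `DRY_RUN=0 sh demo.sh` (the file name is arbitrary) executes the commands against whatever cluster your hdfs client is configured for.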