Hive 3.x Installation and Debugging

By 阿新 · Published 2019-01-11

1-Download and install Hive 3.1.1

Set the Hadoop and Hive environment variables (for example in `conf/hive-env.sh`):

    export HADOOP_HOME=/Users/xxx/software/hadoop/hadoop-2.7.4
    export HIVE_CONF_DIR=/Users/xxx/software/hive/conf
    export HIVE_AUX_JARS_PATH=/Users/xxx/software/hive/lib
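A quick sanity check can catch a mistyped path before Hive is started. The sketch below is a hypothetical helper (`check_hive_env` is not part of Hive); it assumes bash and only checks that each of the three variables above is set and names an existing directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Hive): report any of the Hive-related
# environment variables that are unset or do not point at a directory.
# Returns the number of problems found (0 means everything looks fine).
check_hive_env() {
  local missing=0 var dir
  for var in HADOOP_HOME HIVE_CONF_DIR HIVE_AUX_JARS_PATH; do
    dir="${!var}"   # bash indirect expansion: the value of the variable named $var
    if [ -z "$dir" ]; then
      echo "$var is not set"
      missing=$((missing + 1))
    elif [ ! -d "$dir" ]; then
      echo "$var=$dir is not a directory"
      missing=$((missing + 1))
    fi
  done
  return "$missing"
}
```

For example, after loading the environment: `check_hive_env && echo "Hive environment looks OK"`.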

Create hive-site.xml

Copy `hive-default.xml.template` to `hive-site.xml` in the configuration directory.
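If you prefer to script the copy, a guarded version avoids accidentally overwriting an existing hive-site.xml. `create_hive_site` is a hypothetical helper, a minimal sketch assuming the template sits in the configuration directory passed as its argument (for example `$HIVE_CONF_DIR`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy hive-default.xml.template to hive-site.xml in the
# given configuration directory, refusing to clobber an existing hive-site.xml.
create_hive_site() {
  local conf_dir="$1"
  if [ -z "$conf_dir" ]; then
    echo "usage: create_hive_site <conf-dir>" >&2
    return 2
  fi
  local template="$conf_dir/hive-default.xml.template"
  local site="$conf_dir/hive-site.xml"
  if [ ! -f "$template" ]; then
    echo "template not found: $template" >&2
    return 1
  fi
  if [ -e "$site" ]; then
    echo "refusing to overwrite existing $site" >&2
    return 1
  fi
  cp "$template" "$site"
}
```

Usage: `create_hive_site "$HIVE_CONF_DIR"`, then edit the new file as described next.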

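Add the following at the top of the file, right after the opening `<configuration>` tag. The template builds several paths from `${system:java.io.tmpdir}` and `${system:user.name}`; without these definitions Hive commonly fails at startup with `java.net.URISyntaxException: Relative path in absolute URI`.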

    <property>
      <name>system:java.io.tmpdir</name>
      <value>/tmp/hive3/java</value>
    </property>
    <property>
      <name>system:user.name</name>
      <value>${user.name}</value>
    </property>

Other properties to modify:

    <property>
      <name>hive.exec.scratchdir</name>
      <value>/tmp/hive3</value>
    </property>
    <property>
      <name>hive.metastore.warehouse.dir</name>
      <value>/user/hive3/warehouse</value>
    </property>
    <property>
      <name>hive.repl.rootdir</name>
      <value>/user/hive3/repl/</value>
    </property>
    <property>
      <name>hive.repl.cmrootdir</name>
      <value>/user/hive3/cmroot/</value>
    </property>
    <property>
      <name>hive.repl.replica.functions.root.dir</name>
      <value>/user/hive3/repl/functions/</value>
    </property>
    <property>
      <name>hive.query.results.cache.directory</name>
      <value>/tmp/hive3/_resultscache_</value>
    </property>
    <property>
      <name>hive.exec.local.scratchdir</name>
      <value>/Users/didi/Documents/software/hive3/data0/hive/${user.name}</value>
    </property>

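The directories above can be left at their defaults. They were changed from hive to hive3 here only because Hive 2.1 is already installed on this machine.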

Create the corresponding directories in HDFS and make them writable:

    $HADOOP_HOME/bin/hdfs dfs -mkdir -p /user/hive3/warehouse /tmp/hive3
    $HADOOP_HOME/bin/hdfs dfs -chmod 777 /user/hive3/warehouse /tmp/hive3

Create and set up the remaining directories the same way:

    $HADOOP_HOME/bin/hdfs dfs -mkdir -p /user/hive3/repl /user/hive3/repl/functions /user/hive3/cmroot /tmp/hive3/_resultscache_
    $HADOOP_HOME/bin/hdfs dfs -chmod 777 /user/hive3/repl /user/hive3/repl/functions /user/hive3/cmroot /tmp/hive3/_resultscache_

On HDFS, `hadoop fs -chmod` does the same thing as `hdfs dfs -chmod`, so running one of the two is enough.
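The same directory setup can be written as a loop over the paths configured in hive-site.xml. This is a sketch, not part of Hive: `HIVE3_HDFS_DIRS`, `hdfs_dfs` and `create_hive3_dirs` are hypothetical names, and it assumes `HADOOP_HOME` is set as in step 1 and that HDFS is running. Mode 777 mirrors the commands above and is only appropriate for a local test setup.

```shell
#!/usr/bin/env bash
# HDFS directories referenced by the hive3 settings in hive-site.xml.
HIVE3_HDFS_DIRS=(
  /user/hive3/warehouse
  /tmp/hive3
  /user/hive3/repl
  /user/hive3/cmroot
  /user/hive3/repl/functions
  /tmp/hive3/_resultscache_
)

# Hypothetical thin wrapper around the HDFS client, so it can be replaced
# (e.g. by a function that only prints) for a dry run.
hdfs_dfs() {
  "$HADOOP_HOME/bin/hdfs" dfs "$@"
}

# Create each directory (with parents) and open up its permissions.
create_hive3_dirs() {
  local dir
  for dir in "${HIVE3_HDFS_DIRS[@]}"; do
    hdfs_dfs -mkdir -p "$dir" || return 1
    hdfs_dfs -chmod 777 "$dir" || return 1
  done
}
```

Run `create_hive3_dirs` once after HDFS is up. Redefining the wrapper as `hdfs_dfs() { echo hdfs dfs "$@"; }` turns it into a dry run that only prints the commands.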

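Change the following properties as well (metastore and MySQL connection):

    <property>
      <name>hive.metastore.db.type</name>
      <value>mysql</value>
    </property>
    <property>
      <name>hive.metastore.uris</name>
      <value>thrift://localhost:9083</value>
    </property>
    <property>
      <name>javax.jdo.option.ConnectionPassword</name>
      <value>root</value> <!-- MySQL password -->
    </property>
    <property>
      <name>javax.jdo.option.ConnectionUserName</name>
      <value>root</value> <!-- MySQL user -->
    </property>
    <property>
      <name>javax.jdo.option.ConnectionURL</name>
      <!-- inside XML the "&" between URL parameters must be written as "&amp;" -->
      <value>jdbc:mysql://localhost:3306/hive3?createDatabaseIfNotExist=true&amp;characterEncoding=UTF-8</value>
    </property>
    <property>
      <name>javax.jdo.option.ConnectionDriverName</name>
      <value>com.mysql.jdbc.Driver</value>
    </property>
    <property>
      <name>datanucleus.connectionPoolingType</name>
      <value>BONECP</value>
    </property>
    <property>
      <name>hive.local.time.zone</name>
      <value>Asia/Shanghai</value>
    </property>
    <property>
      <name>hive.mapred.mode</name>
      <value>nonstrict</value>
    </property>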

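For the rest of the setup, see also "Mac-單機Hive安裝與測試" (Mac: standalone Hive installation and testing). Finish installing Hive and initialize the metastore metadata before continuing.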
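Because `hive.metastore.uris` points at `thrift://localhost:9083`, the Hive CLI talks to a separate metastore service, so the schema has to be initialized in MySQL and the metastore started before running `bin/hive`. The sketch below uses Hive's `schematool` and `hive --service metastore`; the helper name `init_and_start_metastore`, the default log path, and the assumption that the MySQL Connector/J jar sits in `$HIVE_HOME/lib` under a `mysql-connector-*.jar` name are illustrative only. `-initSchema` is intended as a one-time step.

```shell
#!/usr/bin/env bash
# Hypothetical helper: initialise the metastore schema in MySQL (one-time step),
# then start the metastore service that hive.metastore.uris points at.
# $1 = Hive home directory, $2 = log file (assumed default below).
init_and_start_metastore() {
  local hive_home="$1" log="${2:-/tmp/hive3-metastore.log}"
  # Assumption: the MySQL JDBC driver jar was copied into $hive_home/lib.
  if ! compgen -G "$hive_home/lib/mysql-connector-*.jar" >/dev/null; then
    echo "MySQL JDBC driver jar not found in $hive_home/lib" >&2
    return 1
  fi
  # Create the metastore tables in the database named in ConnectionURL.
  "$hive_home/bin/schematool" -dbType mysql -initSchema || return 1
  # Run the metastore in the background, sending its output to $log.
  nohup "$hive_home/bin/hive" --service metastore >"$log" 2>&1 &
  echo "metastore started with pid $!, log: $log"
}
```

On later runs, once the schema exists, only the `hive --service metastore` step should be needed.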

2-Start the Hive command line in debug mode

    /..../software/hive3/hive$ bin/hive --debug
    ERROR StatusLogger No log4j2 configuration file found. Using default configuration: logging only errors to the console.
    Listening for transport dt_socket at address: 8000

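The listening port was not changed here, so the default, 8000, is used. With the default settings the JVM also waits at this point until a debugger attaches, which is why the `hive>` prompt does not appear yet.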
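The Hive developer guide documents the debug switch as `hive --debug[:comma-separated parameters list]`, with parameters such as `port=<port_number>` (default 8000) and `mainSuspend=<y|n>` (default `y`, i.e. the CLI JVM waits for a debugger). A small wrapper, hypothetical and only a sketch, makes it easy to pick another port, for example when 8000 is already in use:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around `bin/hive --debug[:params]`.
# $1 = Hive home, $2 = JDWP port (default 8000), $3 = mainSuspend y|n (default y)
hive_debug() {
  local hive_home="$1" port="${2:-8000}" suspend="${3:-y}"
  # Builds a single argument such as --debug:port=8001,mainSuspend=y
  "$hive_home/bin/hive" --debug:port="$port",mainSuspend="$suspend"
}
```

With `hive_debug /path/to/hive3/hive 8001` the "Listening for transport" line should report 8001, and the IDEA remote configuration then has to use the same port.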

3-Configure remote debugging in IDEA

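- Get the Hive source code for the same version, import it into IDEA, and build it in advance (see the Hive wiki for details).

- Configure remote debugging. The Hive wiki describes the steps for Eclipse: "Once you see this message, in Eclipse right click on the project you want to debug, go to “Debug As -> Debug Configurations -> Remote Java Application” and hit the “+” sign on far left top. This should bring up a dialog box. Make sure that the host is the host on which the Beeline CLI is running and the port is “8000”. Before you start debugging, make sure that you have set appropriate debug breakpoints in the code. Once ready, hit “Debug”. The remote debugger should attach to Beeline and proceed." In IDEA the equivalent is Run > Edit Configurations, then + > Remote JVM Debug (called Remote in older versions), with the host where the Hive CLI is running (localhost here) and port 8000.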
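Before clicking Debug, it can help to confirm that the Hive JVM is actually listening on the debug port. The sketch below is a hypothetical helper built on `lsof` (assumed to be installed, as it is on macOS); it only inspects local sockets and does not connect, so it does not disturb the suspended JVM.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if some process is listening on the given TCP
# port (default 8000, the port reported by `bin/hive --debug`).
debug_port_listening() {
  local port="${1:-8000}"
  # -n/-P: numeric hosts and ports; -sTCP:LISTEN: only listening sockets.
  lsof -nP -iTCP:"$port" -sTCP:LISTEN >/dev/null 2>&1
}
```

For example: `debug_port_listening 8000 && echo "port 8000 is open, attach from IDEA"`.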

4-Start the configured remote debug session in IDEA (click Debug)

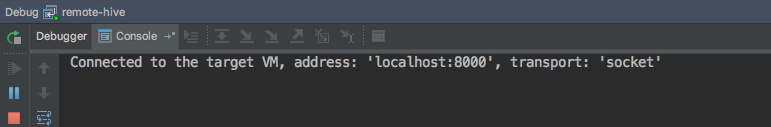

The following line in the IDEA console means the connection succeeded:

    Connected to the target VM, address: 'localhost:8000', transport: 'socket'

5-Meanwhile, the Hive CLI continues and reaches its command prompt

    hive3/hive$ bin/hive --debug
    Listening for transport dt_socket at address: 8000
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/..../software/hive3/hive/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/.../software/hadoop/hadoop-2.7.4/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
    Hive Session ID = 378e1844-e7fe-40c7-ada5-7de6a30508a5
    Logging initialized using configuration in jar:file:/.../software/hive3/hive/lib/hive-common-3.1.1.jar!/hive-log4j2.properties Async: true
    Hive Session ID = 152f6855-de8d-4a5d-b251-b6b0347c4c08
    Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.
    hive>

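6-Set a breakpoint in IDEA

For example, set a breakpoint in `CliDriver` (`org.apache.hadoop.hive.cli.CliDriver`):

    public int processCmd(String cmd) {
        // Set a breakpoint inside this method; it is reached when a command is entered at the hive> prompt.
        // ... existing method body ...
    }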

7-Enter a command at the Hive prompt


    hive> show databases;

Execution in IDEA now stops at the breakpoint.
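Instead of typing at the prompt, a statement can also be passed with the CLI's `-e` option. To our understanding this path also goes through `CliDriver.processLine` and then `processCmd`, so the same breakpoint should be hit. The wrapper below is a hypothetical sketch (`hive_debug_exec` is not part of Hive):

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: start the Hive CLI in debug mode and run one statement
# non-interactively via -e. The JVM still waits for the debugger on port 8000.
# $1 = Hive home, $2 = HiveQL statement
hive_debug_exec() {
  local hive_home="$1" statement="$2"
  "$hive_home/bin/hive" --debug -e "$statement"
}
```

For example, `hive_debug_exec /path/to/hive3/hive "show databases;"`, then attach from IDEA as in step 4.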
