Machine Learning in a Week

Getting into machine learning (ml) can seem like an unachievable task from the outside.

And it definitely can be, if you attack it from the wrong end.

However, after dedicating one week to learning the basics of the subject, I found it to be much more accessible than I anticipated.

This article is intended to give others who are interested in getting into ml a roadmap for getting started, drawing on the experience I gained during my intro week.

Background

Before my machine learning week, I had been reading about the subject for a while, and had gone through half of Andrew Ng’s course on Coursera and a few other theoretical courses. So I had a tiny bit of conceptual understanding of ml, though I was completely unable to transfer any of my knowledge into code. This is what I wanted to change.

I wanted to be able to solve problems with ml by the end of the week, even though this meant skipping a lot of fundamentals and going for a top-down approach instead of a bottom-up one.

After asking for advice on Hacker News, I came to the conclusion that Python’s Scikit Learn module was the best starting point. This module gives you a wealth of algorithms to choose from, reducing the actual machine learning to a few lines of code.
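To give a feel for what "a few lines of code" can look like, here is a minimal sketch. It is not taken from any of the tutorials mentioned in this article; the built-in iris dataset and the k-nearest-neighbors classifier are simply assumptions chosen to keep the example short.

```python
from sklearn.datasets import load_iris
from sklearn.neighbors import KNeighborsClassifier

# Load a small built-in dataset: 150 flowers, 4 measurements each.
X, y = load_iris(return_X_y=True)

# Train a classifier (the choice of algorithm here is arbitrary).
model = KNeighborsClassifier(n_neighbors=3)
model.fit(X, y)

# Predict the species of the first flower in the dataset.
prediction = model.predict(X[:1])
print(prediction)
```

Most Scikit Learn estimators follow the same `fit` and `predict` pattern, so swapping in a different algorithm usually means changing only the line that creates the model.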

Monday: Learning some practicalities

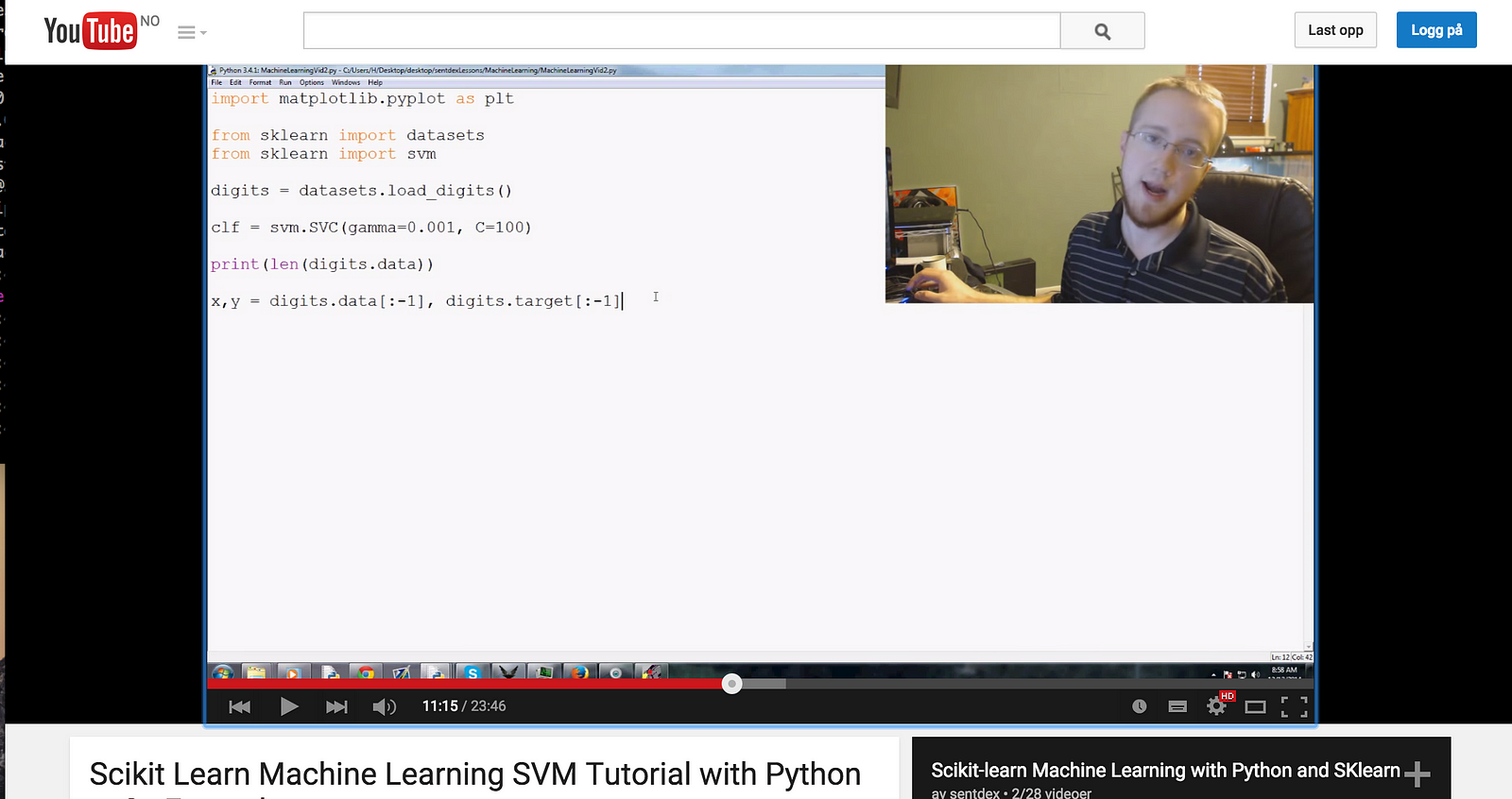

I started off the week by looking for video tutorials which involved Scikit Learn. I finally landed on Sentdex’s tutorial on how to use ml for investing in stocks, which gave me the necessary knowledge to move on to the next step.

The good thing about the Sentdex tutorial is that the instructor takes you through all the steps of gathering the data. As you go along, you realize that fetching and cleaning up the data can be much more time consuming than doing the actual machine learning. So the ability to write scripts that scrape data from files or crawl the web is an essential skill for aspiring machine learning geeks.

I have since re-watched several of the videos when I got stuck on problems, so I’d recommend you do the same.

However, if you already know how to scrape data from websites, this tutorial might not be the perfect fit, as a lot of the videos revolve around data fetching. In that case, Udacity’s Intro to Machine Learning might be a better place to start.

Tuesday: Applying it to a real problem

On Tuesday, I wanted to see if I could use what I had learned to solve an actual problem. As another developer in my coding cooperative was working on the Bank of England’s data visualization competition, I teamed up with him to check out the datasets the bank has released. The most interesting data was their household surveys. This is an annual survey the bank performs on a few thousand households, regarding money-related subjects.

The problem we decided to solve was the following:

Given a person’s education level, age and income, can the computer predict their gender?

I played around with the dataset, spent a few hours cleaning up the data, and used the Scikit Learn map to find a suitable algorithm for the problem.

We ended up with a success rate of around 63%, which isn’t impressive at all. But the machine did at least manage to guess a little better than flipping a coin, which would have given a success rate of 50%.
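The comparison against a coin flip can be sketched as follows. This is not the code or the data we used: the three features are synthetic random numbers standing in for education level, age and income, the label is generated with a deliberately weak signal, and logistic regression is an assumed choice of algorithm.

```python
import numpy as np
from sklearn.dummy import DummyClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic stand-in data (NOT the Bank of England survey):
# three numeric features and a binary label that depends only
# weakly on the first feature.
rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 3))
noise = rng.normal(scale=2.0, size=1000)
y = (X[:, 0] + noise > 0).astype(int)

# Hold out a quarter of the rows to measure the success rate on unseen data.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# The actual model.
model = LogisticRegression().fit(X_train, y_train)
accuracy = model.score(X_test, y_test)

# A baseline that always guesses the most common label in the training set.
baseline_model = DummyClassifier(strategy="most_frequent").fit(X_train, y_train)
baseline = baseline_model.score(X_test, y_test)

print(f"model: {accuracy:.2f}, baseline: {baseline:.2f}")
```

Scoring on held-out rows matters here: a success rate measured on the same rows the model was trained on tends to look better than it really is.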

Seeing results is like fuel to your motivation, so I’d recommend doing this for yourself once you have a basic grasp of how to use Scikit Learn.

It’s a pivotal moment when you realize that you can start using ml to solve real-life problems.

Wednesday: From the ground up

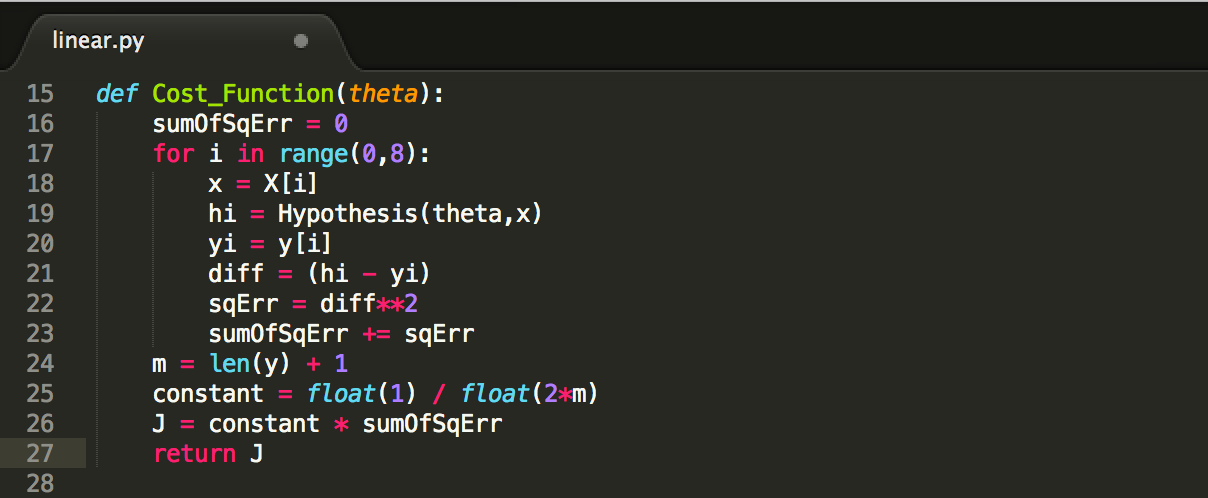

After playing around with various Scikit Learn modules, I decided to try and write a linear regression algorithm from the ground up.

I wanted to do this because I felt (and still feel) that I really don’t understand what’s happening under the hood.

Luckily, the Coursera course goes into detail on how a few of the algorithms work, which came in very handy at this point. More specifically, it describes the underlying concepts of using linear regression with gradient descent.

This has definitely been the most effective learning technique, as it forces you to understand the steps that are going on ‘under the hood’. I strongly recommend that you do this at some point.
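For reference, linear regression with gradient descent can be sketched in plain Python like this. It is a minimal illustration rather than my original implementation; the function name `fit_line`, the learning rate, the iteration count and the toy data are all assumptions.

```python
def fit_line(xs, ys, learning_rate=0.05, n_iterations=2000):
    """Fit y = slope * x + intercept by gradient descent on the mean squared error."""
    slope, intercept = 0.0, 0.0
    n = len(xs)
    for _ in range(n_iterations):
        # Prediction error for every data point with the current parameters.
        errors = [slope * x + intercept - y for x, y in zip(xs, ys)]
        # Partial derivatives of (1/n) * sum(error^2) with respect to each parameter.
        grad_slope = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_intercept = (2.0 / n) * sum(errors)
        # Take a small step against the gradient.
        slope -= learning_rate * grad_slope
        intercept -= learning_rate * grad_intercept
    return slope, intercept


# Toy data generated from y = 2x + 1, so the fit should approach those values.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]
slope, intercept = fit_line(xs, ys)
print(slope, intercept)
```

If the learning rate is too large, the parameters overshoot and diverge instead of settling, which is a good thing to experiment with when trying this yourself.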

I plan to write my own implementations of more complex algorithms as I go along, but I prefer doing this after I’ve played around with the respective algorithms in Scikit Learn.

Thursday: Start competing

On Thursday, I started doing Kaggle’s introductory tutorials. Kaggle is a platform for machine learning competitions, where you can submit solutions to problems released by companies or organizations.

I recommend trying out Kaggle once you have a little bit of theoretical and practical understanding of machine learning. You’ll need this in order to start using Kaggle. Otherwise, it will be more frustrating than rewarding.

The Bag of Words tutorial guides you through every step you need to take in order to enter a submission to a competition, and also gives you a brief and exciting introduction to Natural Language Processing (NLP). I ended the tutorial with a much higher interest in NLP than I had when entering it.
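The core idea of a bag of words is to turn each text into a row of word counts. Here is a minimal sketch using Scikit Learn’s `CountVectorizer`; the two example reviews are made up and the code is not taken from the Kaggle tutorial.

```python
from sklearn.feature_extraction.text import CountVectorizer

# Two made-up movie reviews.
reviews = ["a great great movie", "a terrible movie"]

# Learn the vocabulary and count how often each word occurs per review.
# With default settings, single-character tokens such as "a" are dropped.
vectorizer = CountVectorizer()
counts = vectorizer.fit_transform(reviews)

# List the words in the same order as the columns of the count matrix.
vocabulary = sorted(vectorizer.vocabulary_, key=vectorizer.vocabulary_.get)

print(vocabulary)
print(counts.toarray())
```

Each row of the resulting matrix can then be fed to a classifier as a feature vector, in the same way as any other numeric data.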

Friday: Back to school

On Friday, I continued working on the Kaggle tutorials, and also started Udacity’s Intro to Machine Learning. I’m currently halfway through, and find it quite enjoyable.

It’s a lot easier than the Coursera course, as it doesn’t go in depth into the algorithms. But it’s also more practical, as it teaches you Scikit Learn, which is a whole lot easier to apply to the real world than writing algorithms from the ground up in Octave, as you do in the Coursera course.

The road ahead

Doing it for a week hasn’t just been great fun; it has also made me more aware of how useful machine learning is in society. The more I learn about it, the more areas I see where it can be used to solve problems.

If you’re interested in getting into machine learning, I strongly recommend setting aside a few days or evenings and simply diving into it.

Choose a top-down approach if you’re not ready for the heavy stuff, and get into problem solving as quickly as possible.

Good luck!
