Viewing Spark Execution Error Messages in Hue (Part 1)

阿新 • Published: 2019-01-14

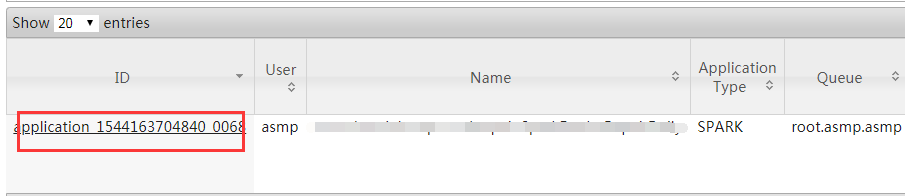

Open the error page in Hue and find the application_ID.

Use the application_ID to look up the full details in the YARN UI (http://bigdata.lhx.com:8088/cluster).

Click the ID or History to open the logs page.
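The lookup above can also be done without clicking through the UI. The following is a minimal sketch, assuming the ResourceManager address from this article and the standard YARN URL layout (`/cluster/app/<id>` for the web page, `/ws/v1/cluster/apps/<id>` for the REST API). The object name `YarnLinks` and the sample ID are hypothetical.

```scala
object YarnLinks {
  // ResourceManager address taken from the article; adjust for your cluster.
  val ResourceManager = "http://bigdata.lhx.com:8088"

  // YARN application IDs look like application_<clusterTimestamp>_<sequence>.
  private val AppId = """application_\d+_\d+""".r

  private def checked(appId: String): String = {
    require(AppId.pattern.matcher(appId).matches(), s"not a YARN application ID: $appId")
    appId
  }

  // Web UI page for a single application.
  def appPage(appId: String): String =
    s"$ResourceManager/cluster/app/${checked(appId)}"

  // REST endpoint returning the application's state and diagnostics as JSON.
  def appRestUrl(appId: String): String =
    s"$ResourceManager/ws/v1/cluster/apps/${checked(appId)}"

  def main(args: Array[String]): Unit = {
    val id = "application_1547000000000_0001" // hypothetical ID copied from Hue
    println(appPage(id))
    println(appRestUrl(id))
  }
}
```

If log aggregation is enabled on the cluster, `yarn logs -applicationId <application_ID>` on a cluster node should print the same container logs in the terminal.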

Detailed error message: Spark cannot find the asmp database in the cluster.

ERROR yarn.ApplicationMaster: User class threw exception: org.apache.spark.sql.catalyst.analysis.NoSuchDatabaseException: Database 'asmp' not found;
org.apache.spark.sql.catalyst.analysis.NoSuchDatabaseException: Database 'asmp' not found;
    at org.apache.spark.sql.catalyst.catalog.SessionCatalog.org$apache$spark$sql$catalyst$catalog$SessionCatalog$$requireDbExists(SessionCatalog.scala:131)
    at org.apache.spark.sql.catalyst.catalog.SessionCatalog.setCurrentDatabase(SessionCatalog.scala:212)
    at org.apache.spark.sql.execution.command.SetDatabaseCommand.run(databases.scala:59)
    at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:58)
    at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:56)
    at org.apache.spark.sql.execution.command.ExecutedCommandExec.doExecute(commands.scala:74)
    at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:114)
    at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:114)
    at org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:135)
    at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)
    at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:132)
    at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:113)
    at org.apache.spark.sql.execution.QueryExecution.toRdd$lzycompute(QueryExecution.scala:87)
    at org.apache.spark.sql.execution.QueryExecution.toRdd(QueryExecution.scala:87)
    at org.apache.spark.sql.Dataset.<init>(Dataset.scala:185)
    at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:64)
    at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:600)
    at org.apache.spark.sql.SQLContext.sql(SQLContext.scala:699)
    at com.chumi.dac.sp.csr.skrepair.SparkDealerRepairDaily$.main(SparkDealerRepairDaily.scala:44)
    at com.chumi.dac.sp.csr.skrepair.SparkDealerRepairDaily.main(SparkDealerRepairDaily.scala)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:606)
    at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$2.run(ApplicationMaster.scala:645)
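When reading a trace like this, the most useful frame is usually the first one that belongs to your own code rather than to Spark or the JDK. Here that is `SparkDealerRepairDaily.scala:44`, which calls `SQLContext.sql` and ends in `SetDatabaseCommand`, which suggests a `USE asmp` statement. The sketch below shows one way to pick that frame out of a pasted log. The object name and the list of framework prefixes are assumptions made for illustration.

```scala
object FirstUserFrame {
  // Matches one stack frame such as "at pkg.Class.method(File.scala:44)".
  private val Frame = """\bat\s+([\w.$<>]+)\(([^)]*)\)""".r

  // Package prefixes treated as framework or JDK code (an assumption for this sketch).
  private val FrameworkPrefixes = Seq("org.apache.", "sun.", "java.", "scala.")

  // Returns the first frame whose class is not under a framework prefix.
  def firstUserFrame(trace: String): Option[String] =
    Frame.findAllMatchIn(trace)
      .map(m => s"${m.group(1)}(${m.group(2)})")
      .find(frame => !FrameworkPrefixes.exists(p => frame.startsWith(p)))

  def main(args: Array[String]): Unit = {
    // A few frames copied from the log above.
    val trace =
      "Database 'asmp' not found; " +
      "at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:600) " +
      "at org.apache.spark.sql.SQLContext.sql(SQLContext.scala:699) " +
      "at com.chumi.dac.sp.csr.skrepair.SparkDealerRepairDaily$.main(SparkDealerRepairDaily.scala:44) " +
      "at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)"
    println(firstUserFrame(trace))
  }
}
```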

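Solution: add the cluster's hive-site.xml to the application's resources so that it is packaged onto the classpath. Without that file, Spark has no connection settings for the cluster's Hive metastore and uses its own local catalog, which does not contain asmp.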
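As a rough illustration of the fix, the sketch below checks that hive-site.xml is visible on the classpath before starting a Hive-enabled session and switching to the asmp database. It is not the original job's code: the object name and app name are hypothetical, and it assumes Spark 2.x with the spark-hive module available and uses `SparkSession` directly, whereas the trace shows the original job calling `SQLContext.sql`.

```scala
import org.apache.spark.sql.SparkSession

object HiveSiteSketch {
  def main(args: Array[String]): Unit = {
    // Fail fast if hive-site.xml was not packaged with the application.
    val hiveSite = Option(Thread.currentThread().getContextClassLoader.getResource("hive-site.xml"))
    require(hiveSite.isDefined, "hive-site.xml not found on the classpath")

    val spark = SparkSession.builder()
      .appName("HiveSiteSketch") // hypothetical application name
      .enableHiveSupport()       // use the Hive metastore described in hive-site.xml
      .getOrCreate()

    // With the cluster metastore reachable, asmp should appear in this list.
    spark.sql("SHOW DATABASES").show()
    spark.sql("USE asmp")

    spark.stop()
  }
}
```

In a Maven or sbt project the file would typically go under `src/main/resources`, though the article does not say which build layout was used.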