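A Preview of Neutron DVR: Multi-Host Routing for East-West and North-South Traffic (by quqi99)

Author: Zhang Hua (張華), published 2014-03-07

Copyright notice: this article may be freely reproduced, provided the reproduction links back to the original source and includes the author information and this copyright notice.

(http://blog.csdn.net/quqi99 )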

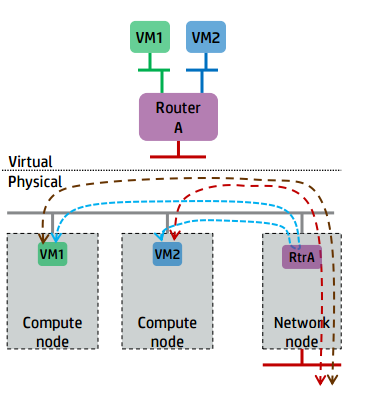

Legacy Routing and Distributed Router in Neutron

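First, a quick review of how the l3-agent works: the l3-agent node creates the internal gateways, external gateways, routes and so on for all subnets. When the l3-agent periodically syncs a router, the gateways of the subnets associated with that router are created on the l3-agent node the router is scheduled to (the relevant ports are found through the port's device_owner attribute, and each port carries its subnet). Neutron supports running multiple l3-agents on multiple nodes, and l3-agent scheduling works per router. So if we create many routers and attach each subnet to its own router, the gateway of each subnet can be scheduled to a different l3-agent.

That scheme has no HA, however, so a VRRP HA blueprint appeared that uses VRRP + Keepalived + Conntrackd to remove the l3-agent as a single point of failure. VRRP uses multicast advertisements as its heartbeat: when the backup node stops receiving the master's periodic advertisements, it considers the master dead and takes over its work (the gateway IPs are removed from the old master node and configured on the new master). See also my other blog post on this topic.

However, neither scheme can stop east-west traffic between subnets of the same tenant from detouring through the l3-agent, so legacy routing in Neutron is a performance bottleneck and scales poorly horizontally.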

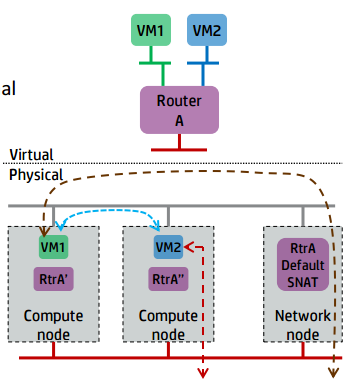

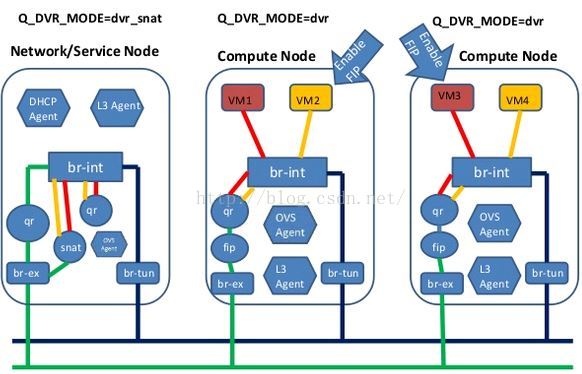

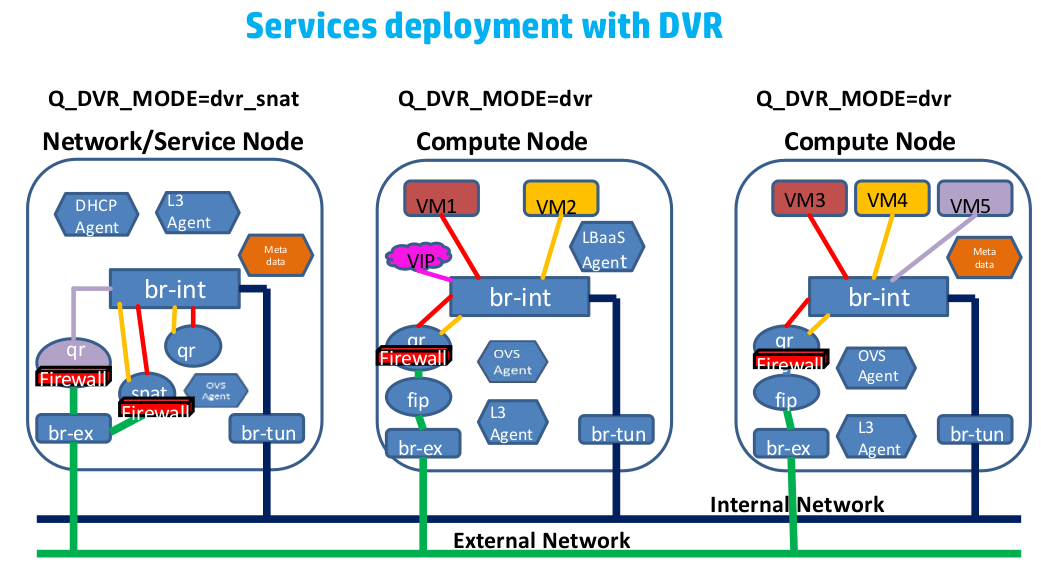

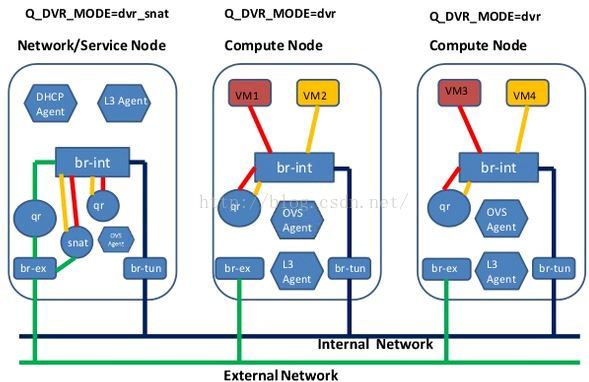

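Hence DVR. Its key design points are:

- East-west traffic no longer goes through the centralized l3-agent node.

- When the compute node has no FIP, SNAT/DNAT both go through the centralized l3-agent node. (In this case the compute node has a qrouter-xxx namespace whose qr interface is the subnet gateway, e.g. 192.168.21.1; policy routing there steers SNAT traffic to the sg interface of snat-xxx on the network node. The network node has a qrouter-xxx namespace and a snat-xxx namespace: the qg interface is the external interface used for SNAT, the sg interface holds an IP on the subnet that differs from the gateway, e.g. 192.168.21.3, and the SNAT/DNAT rules are installed in the snat-xxx namespace.)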

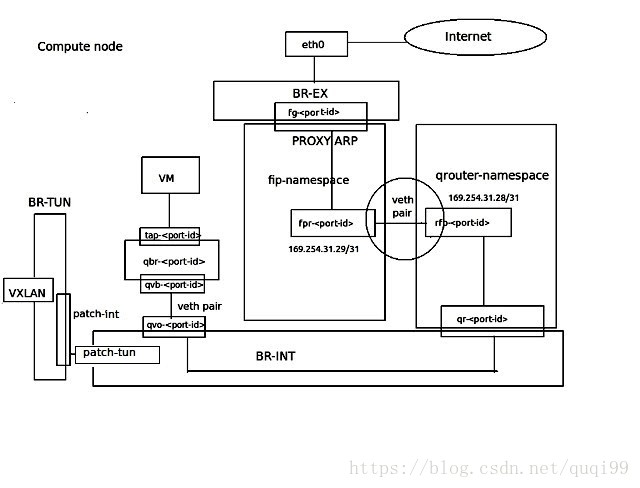

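- When the compute node has a FIP, SNAT/DNAT both happen on the compute node. (Compared with the no-FIP case above, the compute node gains a fip-xxx namespace; fip-xxx and qrouter-xxx are connected by the fpr/rfp pair of peer devices, and fip-xxx also has an fg interface with an external IP, plugged into br-int and ultimately connected to br-ex.) For SNAT, the SNAT rule is installed in qrouter-xxx. For DNAT, fip-xxx first uses proxy ARP to make the outside world believe that the FIP's MAC is the MAC of the fg interface, so external traffic arrives at fg; a route in fip-xxx then sends traffic for the FIP to rfp in qrouter-xxx, where a DNAT rule forwards it to the VM. Because a DNAT rule is used, it does not matter whether the FIP is actually configured on the rfp interface; in the Ocata release I saw that it was not.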

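- Impact of DVR on VPNaaS. The l3-agent has three modes: legacy, dvr and dvr_snat. dvr is used only on compute nodes; legacy and dvr_snat are used only on network nodes. In legacy mode there is only the qrouter-xxx namespace (SNAT etc. is configured there); only dvr_snat mode has snat-xxx (SNAT is configured there). VPNaaS by its nature can only be used centrally, so for a DVR router the VPNaaS service should be set up only in the centralized snat-xxx namespace, with VPN traffic steered from the compute nodes to the network node. See: https://review.openstack.org/#/c/143203/

- Impact of DVR on FWaaS. In the legacy era Neutron had only qrouter-xxx, so firewalling for both east-west and north-south traffic lived in qrouter-xxx. With DVR, compute nodes gain fip-xxx (no firewall rules are installed in this namespace) and network nodes gain snat-xxx for SNAT; we make sure that qrouter-xxx on the compute nodes also provides north-south firewalling and that east-west traffic under DVR is not broken. See: https://review.openstack.org/#/c/113359/ and https://specs.openstack.org/openstack/neutron-specs/specs/juno/neutron-dvr-fwaas.html

- Since Newton, DVR can also be combined with VRRP.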

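- Impact of DVR on LBaaS. An LBaaS VIP port has no binding:host attribute, and such unbound ports should also be handled centrally. Unbound ports include allowed_address_pair ports and VIP ports - https://bugs.launchpad.net/neutron/+bug/1583694

https://www.openstack.org/assets/presentation-media/Neutron-Port-Binding-and-Impact-of-unbound-ports-on-DVR-Routers-with-FloatingIP.pdf

An unbound port (such as an allowed_address_pair port or a VIP port) can be associated with many VMs, so it has no binding:host attribute (without binding:host no agent processes the port, because the MQ only sends messages to the agent on the bound host). This causes several problems in a DVR environment: if such a port were to inherit a VM's port binding, it is unclear which of the several VMs to inherit from; and once a FIP is associated with such an unbound port (every port of the LB backend VMs can carry an allowed_address_pair with the same FIP), GARP problems make the FIP stop working.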

The fix is to centralize such unbound ports, i.e. only the centralized network node handles unbound ports (allowed_address_pair ports and VIP ports), so the snat-xxx namespace on the network node also has to handle DNAT etc. for them. The code:

server/db side - https://review.openstack.org/#/c/466434/ - when the port behind a FIP has no binding:host, the internal DVR_SNAT_BOUND marker is set, and the dvr agent on the compute node then skips ports carrying DVR_SNAT_BOUND (it is enough for the server side not to return such ports, so most of the code is on the server/db side)

agent side - https://review.openstack.org/#/c/437986/ - the l3-agent has to add ports carrying DVR_SNAT_BOUND in snat-xxx and set up their SNAT/DNAT

There is also a related bug - Unbound ports floating ip not working with address scopes in DVR HA - https://bugs.launchpad.net/neutron/+bug/1753434

As a side effect of these changes, compute nodes also gained a mode called dvr_no_external (https://review.openstack.org/#/c/493321/) - this mode enables only East/West DVR routing functionality for a L3 agent that runs on a compute host, the North/South functionality such as DNAT and SNAT will be provided by the centralized network node that is running in 'dvr_snat' mode. This mode should be used when there is no external network connectivity on the compute host.

Code paths:

server/db side: a DVR VIP is just an attribute of the router (const.FLOATINGIP_KEY) rather than an ordinary port with a binding host, so this unbound port is handled centrally:

_process_router_update(l3-agent) -> L3PluginApi.get_routers -> sync_routers(rpc) -> list_active_sync_routers_on_active_l3_agent -> _get_active_l3_agent_routers_sync_data(l3_dvrscheduler_db.py) -> get_ha_sync_data_for_host(l3_dvr_db.py) -> _get_dvr_sync_data -> _process_floating_ips_dvr(router[const.FLOATINGIP_KEY] = router_floatingips)

agent side: the l3-agent handles centrally only the ports tagged with DVR_SNAT_BOUND (i.e. unbound ports)

_process_router_update -> _process_router_if_compatible -> _process_added_router(ri.process) -> process_external -> configure_fip_addresses -> process_floating_ip_addresses -> add_floating_ip -> floating_ip_added_dist(only call add_centralized_floatingip when having DVR_SNAT_BOUND) -> add_centralized_floatingip -> process_floating_ip_nat_rules_for_centralized_floatingip(floating_ips = self.get_floating_ips())

The above covers VIPs; unbound allowed_addr_pair ports are centralized in the same way:

_dvr_handle_unbound_allowed_addr_pair_add|_notify_l3_agent_new_port|_notify_l3_agent_port_update -> update_arp_entry_for_dvr_service_port (generate arp table for every pairs of this port)

Quick test environment setup

juju bootstrap

bzr branch lp:openstack-charm-testing && cd openstack-charm-testing/

juju-deployer -c ./next.yaml -d xenial-mitaka

juju status |grep message

juju set neutron-api l2-population=True enable-dvr=True

./configure

source ./novarc

nova boot --flavor 2 --image trusty --nic net-id=$(neutron net-list |grep ' private ' |awk '{print $2}') --poll i1

nova secgroup-add-rule default icmp -1 -1 0.0.0.0/0

nova secgroup-add-rule default tcp 22 22 0.0.0.0/0

nova floating-ip-create

nova floating-ip-associate i1 $(nova floating-ip-list |grep 'ext_net' |awk '{print $2}')

neutron net-create private_2 --provider:network_type gre --provider:segmentation_id 1012

neutron subnet-create --gateway 192.168.22.1 private_2 192.168.22.0/24 --enable_dhcp=True --name private_subnet_2

ROUTER_ID=$(neutron router-list |grep ' provider-router ' |awk '{print $2}')

SUBNET_ID=$(neutron subnet-list |grep '192.168.22.0/24' |awk '{print $2}')

neutron router-interface-add $ROUTER_ID $SUBNET_ID

nova hypervisor-list

nova boot --flavor 2 --image trusty --nic net-id=$(neutron net-list |grep ' private_2 ' |awk '{print $2}') --hint force_hosts=juju-zhhuabj-machine-16 i2

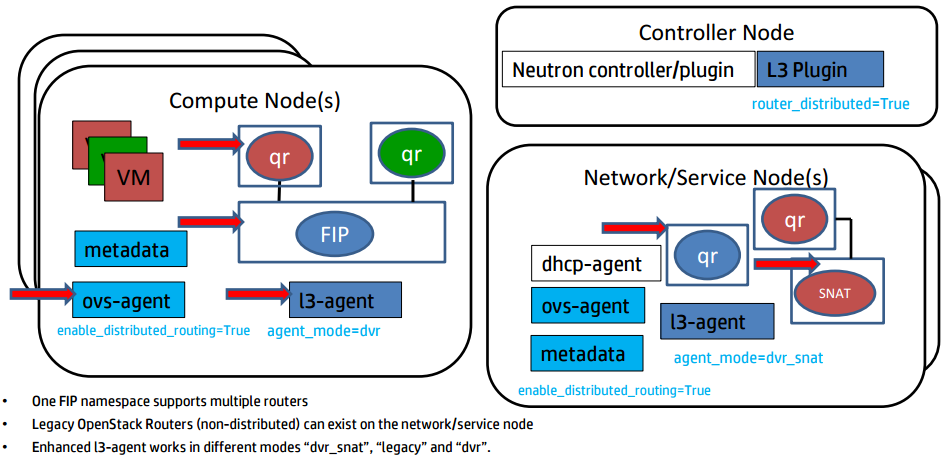

Related configuration

1, neutron.conf on the node hosting neutron-api

router_distributed = True

2, ml2_conf.ini on the node hosting neutron-api

mechanism_drivers = openvswitch,l2population

3, l3_agent.ini on the nodes hosting nova-compute

agent_mode = dvr

4, l3_agent.ini on the node hosting the l3-agent (network node)

agent_mode = dvr_snat

5, openvswitch_agent.ini on all nodes

[agent]

l2_population = True

enable_distributed_routing = True
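To make the mode split concrete, here is a minimal Python sketch that maps a node's agent_mode, plus whether a VM with a FIP runs on it, to the router namespaces this article describes. The function name and the return format are hypothetical and only illustrate the text; this is not Neutron code.

```python
def expected_namespaces(agent_mode, has_fip=False):
    """Return the router namespace prefixes this article expects on a node.

    agent_mode: 'legacy' or 'dvr_snat' (network node), 'dvr' (compute node).
    has_fip: whether a VM with a floating IP runs on the node (only used for 'dvr').
    """
    if agent_mode == "legacy":
        return ["qrouter"]
    if agent_mode == "dvr":
        # The fip-xxx namespace only appears once a local VM owns a FIP.
        return ["qrouter", "fip"] if has_fip else ["qrouter"]
    if agent_mode == "dvr_snat":
        return ["qrouter", "snat"]
    raise ValueError("unknown agent_mode: %s" % agent_mode)


print(expected_namespaces("dvr", has_fip=True))
print(expected_namespaces("dvr_snat"))
```

Comparing this against `ip netns list` on each node is a quick way to check that the agent_mode settings took effect.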

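Policy-based routing

Suppose a router has several interfaces; one of them is IF1 (with IP address IP1), attached to subnet P1_NET, and P1 is the next-hop gateway reached through IF1

a, create routing table T1: echo 200 T1 >> /etc/iproute2/rt_tables

b, set the default route in the main table: ip route add default via $P1

c, add the subnet route to the main table: ip route add $P1_NET dev $IF1 src $IP1 (the src argument is required; it works together with step d)

d, add the routing rule: ip rule add from $IP1 table T1 (works together with step c)

e, add the route to table T1: ip route add $P1_NET dev $IF1 src $IP1 table T1 && ip route flush cache

f, load balancing: ip route add default scope global nexthop via $P1 dev $IF1 weight 1 \

nexthop via $P2 dev $IF2 weight 1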
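The interplay between rules and tables in the steps above can be sketched in a few lines of Python. This is a simplified model, not the kernel algorithm: rules are tried in priority order, the first table that contains a matching route wins, and inside a table the longest prefix wins. All names (`lookup`, `RULES`, `TABLES`) and all addresses are hypothetical, and I assume table T1 also carries a default route via P1, which the steps above do not show.

```python
import ipaddress


def lookup(rules, tables, src, dst):
    """Return (table, nexthop) for the first rule whose table has a route to dst."""
    src_ip = ipaddress.ip_address(src)
    dst_ip = ipaddress.ip_address(dst)
    for _priority, selector, table in sorted(rules):
        # "all" matches every source; otherwise the source must fall in the prefix.
        if selector != "all" and src_ip not in ipaddress.ip_network(selector, strict=False):
            continue
        candidates = [
            (ipaddress.ip_network(prefix), nexthop)
            for prefix, nexthop in tables.get(table, [])
            if dst_ip in ipaddress.ip_network(prefix)
        ]
        if candidates:
            # Longest-prefix match inside the selected table.
            _net, nexthop = max(candidates, key=lambda c: c[0].prefixlen)
            return table, nexthop
        # No route in this table: fall through to the next rule.
    return None


# Hypothetical values: IP1=10.0.1.10, P1=10.0.1.1, P2=10.0.2.1.
RULES = [(32765, "10.0.1.10/32", "T1"), (32766, "all", "main")]
TABLES = {
    "T1": [("10.0.1.0/24", "direct via IF1"), ("0.0.0.0/0", "via 10.0.1.1")],
    "main": [
        ("10.0.1.0/24", "direct via IF1"),
        ("10.0.2.0/24", "direct via IF2"),
        ("0.0.0.0/0", "via 10.0.2.1"),
    ],
}

print(lookup(RULES, TABLES, "10.0.1.10", "8.8.8.8"))
print(lookup(RULES, TABLES, "10.0.2.20", "8.8.8.8"))
```

Traffic sourced from IP1 is answered by T1, while everything else falls through to main. DVR relies on the same source-based selection to steer SNAT traffic.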

1, Centralized SNAT traffic (the path taken when the compute node has no FIP)

DVR deployment without FIP - SNAT goes through the centralized l3-agent:

- The qr namespace (router ns) on the compute node uses policy-routing table 3232240897 to steer SNAT traffic from the compute node to the centralized network node (e.g. qr1=192.168.21.1, sg1=192.168.21.3, VM=192.168.21.5)

  ip netns exec qr ip route add 192.168.21.0/24 dev qr1 src 192.168.21.1 table main #ingress

  ip netns exec qr ip rule add from 192.168.21.1/24 table 3232240897 #egress

  ip netns exec qr ip route add default via 192.168.21.3 dev qr1 table 3232240897

- The snat namespace on the network node uses the following SNAT rules

  -A neutron-l3-agent-snat -o qg -j SNAT --to-source <FIP_on_qg>

  -A neutron-l3-agent-snat -m mark ! --mark 0x2/0xffff -m conntrack --ctstate DNAT -j SNAT --to-source <FIP_on_qg>

The complete path is as follows:

The compute node has only the qrouter-xxx namespace (the fip-xxx namespace exists only when the compute node has a FIP)

$ sudo ip netns exec qrouter-9a3594b0-52d1-4f29-a799-2576682e275b ip addr show

19: qr-48e90ad1-5f: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1458 qdisc noqueue state UNKNOWN group default qlen 1

link/ether fa:16:3e:f8:fe:97 brd ff:ff:ff:ff:ff:ff

inet 192.168.21.1/24 brd 192.168.21.255 scope global qr-48e90ad1-5f

valid_lft forever preferred_lft forever

$ sudo ip netns exec qrouter-9a3594b0-52d1-4f29-a799-2576682e275b ip rule list

0: from all lookup local

32766: from all lookup main

32767: from all lookup default

3232240897: from 192.168.21.1/24 lookup 3232240897

$ sudo ip netns exec qrouter-9a3594b0-52d1-4f29-a799-2576682e275b ip route list table main

192.168.21.0/24 dev qr-48e90ad1-5f proto kernel scope link src 192.168.21.1

$ sudo ip netns exec qrouter-9a3594b0-52d1-4f29-a799-2576682e275b ip route list table 3232240897

default via 192.168.21.3 dev qr-48e90ad1-5f
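The table number in the rule above does not look arbitrary: it matches the gateway address 192.168.21.1 read as a 32-bit integer. This is my reading of the output rather than something verified against the Neutron source here, and the helper name below is hypothetical. A minimal Python check:

```python
import ipaddress


def snat_table_id(gateway_ip):
    """Integer value of an IPv4 address; matches the table ids seen in this article."""
    return int(ipaddress.IPv4Address(gateway_ip))


print(snat_table_id("192.168.21.1"))  # 3232240897
print(snat_table_id("13.0.0.1"))      # 218103809
```

The second value is the table number used with the 13.0.0.1 gateway in the DNAT example later in this article.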

On the network node, the sg-xxx interface holds an IP on the same subnet as the gateway, dedicated to SNAT

$ sudo ip netns list

qrouter-9a3594b0-52d1-4f29-a799-2576682e275b (id: 2)

snat-9a3594b0-52d1-4f29-a799-2576682e275b (id: 1)

qdhcp-7a8aad5b-393e-4428-b098-066d002b2253 (id: 0)

$ sudo ip netns exec snat-9a3594b0-52d1-4f29-a799-2576682e275b ip addr show

2: qg-b825f548-32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1458 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:c4:fd:ad brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.5.150.0/16 brd 10.5.255.255 scope global qg-b825f548-32

valid_lft forever preferred_lft forever

3: sg-95540cb3-85: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1458 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:62:d3:26 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.21.3/24 brd 192.168.21.255 scope global sg-95540cb3-85

valid_lft forever preferred_lft forever

$ sudo ip netns exec snat-9a3594b0-52d1-4f29-a799-2576682e275b iptables-save |grep SNAT

-A neutron-l3-agent-snat -o qg-b825f548-32 -j SNAT --to-source 10.5.150.0

-A neutron-l3-agent-snat -m mark ! --mark 0x2/0xffff -m conntrack --ctstate DNAT -j SNAT --to-source 10.5.150.0

2, SNAT goes through the compute node when the compute node has a FIP

DVR deployment with FIP - SNAT goes from the qr ns to the FIP ns via the peer devices rfp and fpr:

- The qr namespace on the compute node uses policy-routing table 16 to steer SNAT traffic into the fip namespace on the same compute node (e.g. rfp/qr=169.254.109.46/31, fpr/fip=169.254.106.115/31, VM=192.168.21.5)

  ip netns exec qr ip route add 192.168.21.0/24 dev qr src 192.168.21.1 table main #ingress

  ip netns exec qr ip rule add from 192.168.21.5 table 16 #egress

  ip netns exec qr ip route add default via 169.254.106.115 dev rfp table 16

- The qr namespace on the compute node also uses the following SNAT rule

  -A neutron-l3-agent-float-snat -s 192.168.21.5/32 -j SNAT --to-source <FIP_on_rfp>

The concrete setup resembles the DNAT case below: since the compute node already has a FIP, SNAT traffic also leaves from the compute node, so it has to be steered from the qrouter-xxx namespace to the fip-xxx namespace through the rfp-xxx/fpr-xxx pair of peer devices, using routing table 16.

$ sudo ip netns list

qrouter-9a3594b0-52d1-4f29-a799-2576682e275b

fip-57282976-a9b2-4c3f-9772-6710507c5c4e

$ sudo ip netns exec qrouter-9a3594b0-52d1-4f29-a799-2576682e275b ip addr show

4: rfp-9a3594b0-5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1458 qdisc noqueue state UP group default qlen 1000

link/ether 3a:63:a4:11:4d:c4 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 169.254.109.46/31 scope global rfp-9a3594b0-5

valid_lft forever preferred_lft forever

inet 10.5.150.1/32 brd 10.5.150.1 scope global rfp-9a3594b0-5

valid_lft forever preferred_lft forever

19: qr-48e90ad1-5f: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1458 qdisc noqueue state UNKNOWN group default qlen 1

link/ether fa:16:3e:f8:fe:97 brd ff:ff:ff:ff:ff:ff

inet 192.168.21.1/24 brd 192.168.21.255 scope global qr-48e90ad1-5f

valid_lft forever preferred_lft forever

$ sudo ip netns exec qrouter-9a3594b0-52d1-4f29-a799-2576682e275b ip rule list

0: from all lookup local

32766: from all lookup main

32767: from all lookup default

57481: from 192.168.21.5 lookup 16

3232240897: from 192.168.21.1/24 lookup 3232240897

$ sudo ip netns exec qrouter-9a3594b0-52d1-4f29-a799-2576682e275b ip route list table main

169.254.109.46/31 dev rfp-9a3594b0-5 proto kernel scope link src 169.254.109.46

192.168.21.0/24 dev qr-48e90ad1-5f proto kernel scope link src 192.168.21.1

$ sudo ip netns exec qrouter-9a3594b0-52d1-4f29-a799-2576682e275b ip route list table 16

default via 169.254.106.115 dev rfp-9a3594b0-5

$ sudo ip netns exec qrouter-9a3594b0-52d1-4f29-a799-2576682e275b iptables-save |grep SNAT

-A neutron-l3-agent-float-snat -s 192.168.21.5/32 -j SNAT --to-source 10.5.150.1

$ sudo ip netns exec fip-57282976-a9b2-4c3f-9772-6710507c5c4e ip addr show

5: fpr-9a3594b0-5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1458 qdisc noqueue state UP group default qlen 1000

link/ether fa:e7:f9:fd:5a:40 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 169.254.106.115/31 scope global fpr-9a3594b0-5

valid_lft forever preferred_lft forever

17: fg-d35ad06f-ae: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1458 qdisc noqueue state UNKNOWN group default qlen 1

link/ether fa:16:3e:b5:2d:2c brd ff:ff:ff:ff:ff:ff

inet 10.5.150.2/16 brd 10.5.255.255 scope global fg-d35ad06f-ae

valid_lft forever preferred_lft forever

$ sudo ip netns exec qrouter-9a3594b0-52d1-4f29-a799-2576682e275b route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

169.254.106.114 0.0.0.0 255.255.255.254 U 0 0 0 rfp-9a3594b0-5

192.168.21.0 0.0.0.0 255.255.255.0 U 0 0 0 qr-48e90ad1-5f

$ sudo ip netns exec fip-57282976-a9b2-4c3f-9772-6710507c5c4e route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 10.5.0.1 0.0.0.0 UG 0 0 0 fg-d35ad06f-ae

10.5.0.0 0.0.0.0 255.255.0.0 U 0 0 0 fg-d35ad06f-ae

10.5.150.1 169.254.106.114 255.255.255.255 UGH 0 0 0 fpr-9a3594b0-5

169.254.106.114 0.0.0.0 255.255.255.254 U 0 0 0 fpr-9a3594b0-5

3, DNAT traffic on the compute node (a compute node needs two external IPs)

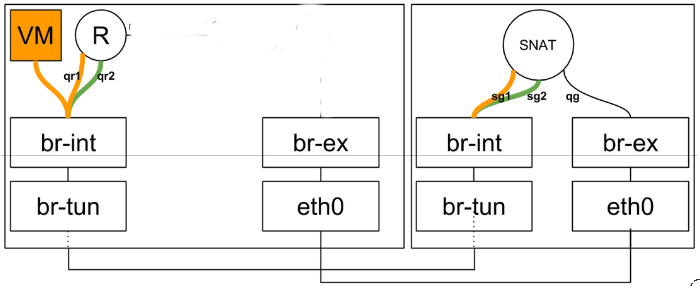

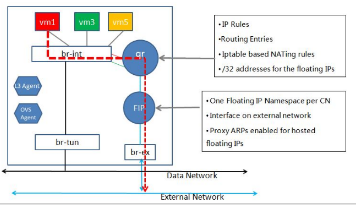

The namespaces on the network node are qrouter (which holds the subnet gateway) and snat (which has the qg- interface and the sg- interface used for SNAT, whose address differs from the subnet gateway).

The namespaces on the compute node are qrouter (which holds the subnet gateway; rfp: 169.254.31.29/24, with the FIP on the rfp interface) and fip (fg: external gateway; fpr: 169.254.31.28). The qrouter and fip namespaces are linked by the peer devices fpr and rfp, and traffic arriving at fg in fip is steered over the peer devices to the FIP in qrouter.

DVR deployment with FIP - DNAT first relies on proxy ARP, then goes from the FIP ns to the qr ns via the peer devices rfp and fpr:

- The FIP ns needs to proxy ARP for FIP_on_rfp on fg (fg is ultimately connected to br-ex) because rfp is in another ns

  ip netns exec fip sysctl net.ipv4.conf.fg-d35ad06f-ae.proxy_arp=1

- The floating IP traffic is steered from the FIP ns to the qr ns via the peer devices (rfp and fpr)

- The qr namespace on the compute node has the following DNAT rules

  ip netns exec qr iptables -A neutron-l3-agent-PREROUTING -d <FIP_on_rfp> -j DNAT --to-destination 192.168.21.5

  ip netns exec qr iptables -A neutron-l3-agent-POSTROUTING ! -i rfp ! -o rfp -m conntrack ! --ctstate DNAT -j ACCEPT

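Continuing, assign a floating IP (192.168.122.219) to the VM 13.0.0.6

a, besides its gateway 13.0.0.1, the qrouter namespace on the compute node now also holds the floating IP (192.168.122.219) and rfp-db5090df-8=169.254.31.28/31

b, it also has a DNAT rule (-A neutron-l3-agent-PREROUTING -d 192.168.122.219/32 -j DNAT --to-destination 13.0.0.6)

-A neutron-l3-agent-POSTROUTING ! -i rfp-db5090df-8 ! -o rfp-db5090df-8 -m conntrack ! --ctstate DNAT -j ACCEPT

c, besides the qrouter namespace, the compute node gains a fip namespace, which uses one more external IP (192.168.122.220), and fpr-db5090df-8=169.254.31.29/31

d, SNAT traffic is handled as before by routing table 218103809 (all commands below are run inside the namespace; I dropped the ip netns exec prefix for clarity)

ip route add 13.0.0.0/24 dev rfp-db5090df-8 src 13.0.0.1

ip rule add from 13.0.0.1 table 218103809

ip route add 13.0.0.0/24 dev rfp-db5090df-8 src 13.0.0.1 table 218103809

e, inside the qrouter namespace, routing table 16 is added for the floating IP traffic; it steers traffic leaving the VM 13.0.0.6 through rfp-db5090df-8 into the fip namespace

ip rule add from 13.0.0.6 table 16

ip route add default via 169.254.31.29 dev rfp-db5090df-8 table 16

f, the fip namespace handles the DNAT traffic with these routes: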

ip route add 169.254.31.28/31 dev fpr-db5090df-8

ip route add 192.168.122.219 dev fpr-db5090df-8

ip route add 192.168.122.0/24 dev fg-c56eb4c0-b0
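The floating IP NAT pair in the qrouter namespace boils down to two address rewrites: DNAT on the way in (destination FIP to fixed IP) and SNAT on the way out (fixed IP source to FIP). The Python sketch below models only that mapping with plain dictionaries; the function names and the packet representation are hypothetical, and conntrack, marks and chains are deliberately left out.

```python
def dnat(packet, fip_to_fixed):
    """PREROUTING step: rewrite the destination when it is a known floating IP."""
    dst = fip_to_fixed.get(packet["dst"], packet["dst"])
    return dict(packet, dst=dst)


def snat(packet, fip_to_fixed):
    """float-snat step: rewrite the source when it is a fixed IP that owns a FIP."""
    fixed_to_fip = {fixed: fip for fip, fixed in fip_to_fixed.items()}
    src = fixed_to_fip.get(packet["src"], packet["src"])
    return dict(packet, src=src)


# Addresses taken from the example above.
FIPS = {"192.168.122.219": "13.0.0.6"}

inbound = dnat({"src": "192.168.122.1", "dst": "192.168.122.219"}, FIPS)
outbound = snat({"src": "13.0.0.6", "dst": "192.168.122.1"}, FIPS)
print(inbound)
print(outbound)
```

Because both rewrites key on the address alone, the FIP does not have to be configured on any interface for the translation to work.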

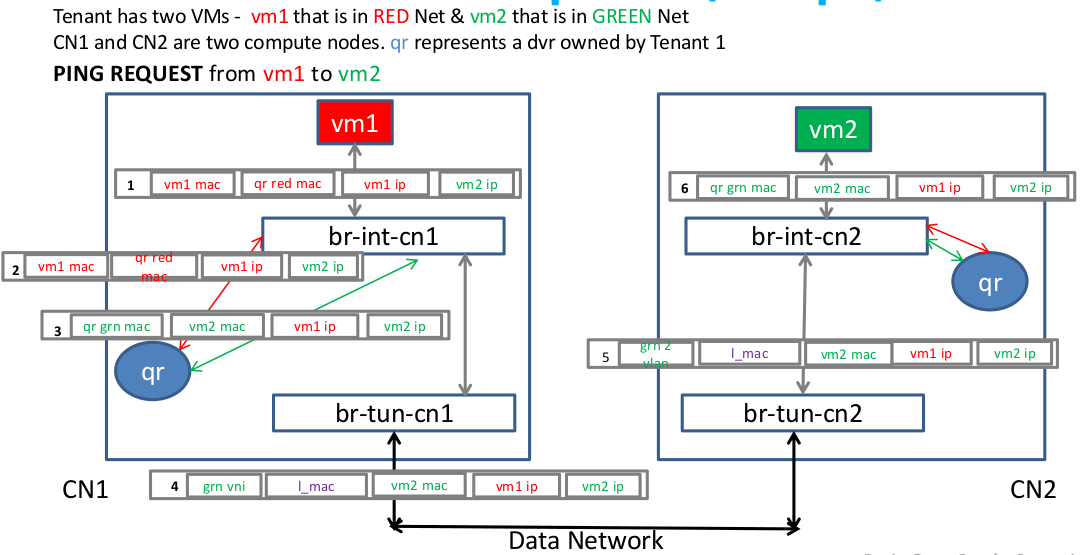

4, East-west traffic (different subnets under the same tenant)

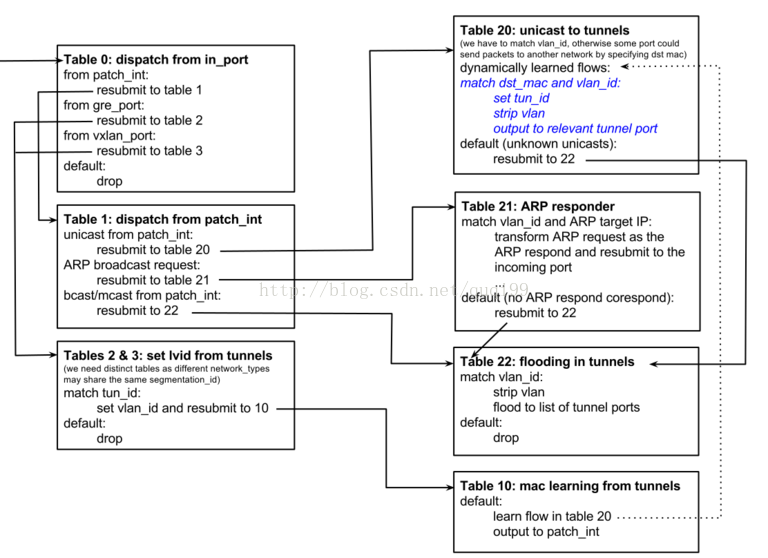

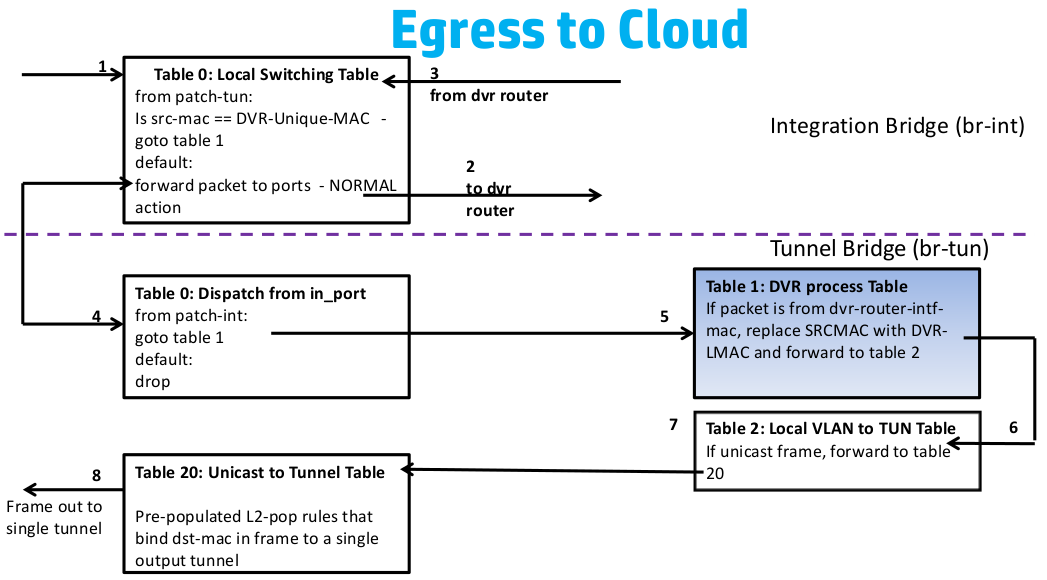

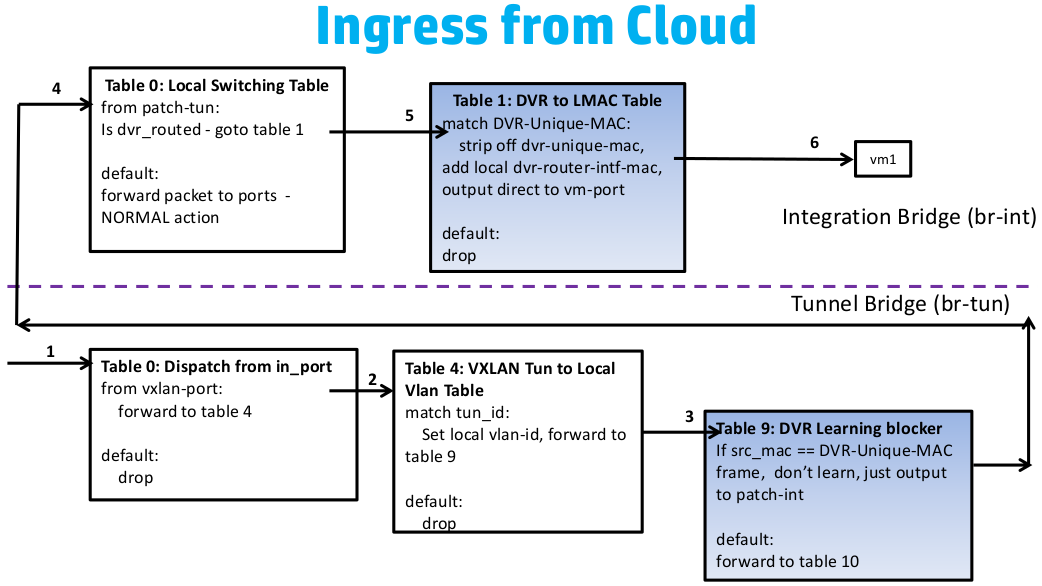

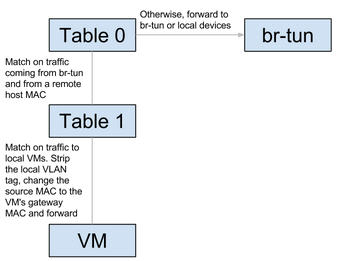

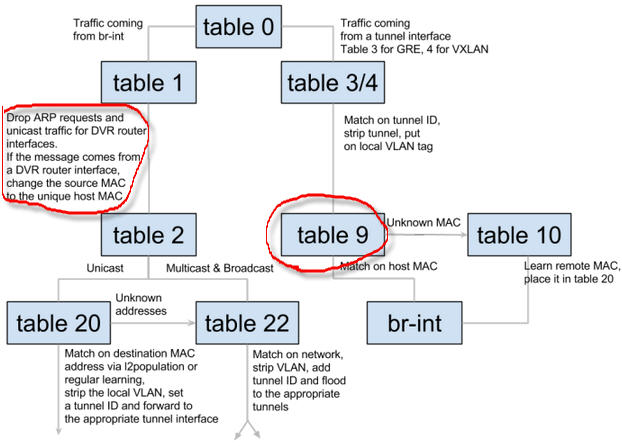

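- Ingress traffic - traffic entering br-tun from the gre_port or vxlan_port first goes to table 2 & 3, where the tunnel id is removed and the local VLAN id (lvid) is added; then to table 10, which learns the remote tunnel address; and finally to patch-int, which takes the traffic from br-tun to br-int

- Egress traffic - traffic entering br-tun from patch-int first goes to table 1; unicast goes to table 20 (the table of learned VM MACs), broadcast or multicast goes to table 22, and ARP broadcast goes to table 21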
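The unicast versus broadcast/multicast split is decided by a single bit of the destination MAC: the least significant bit of the first octet (the I/G bit). In the br-tun dump later in this article this shows up as the masks dl_dst=00:00:00:00:00:00/01:00:00:00:00:00 and dl_dst=01:00:00:00:00:00/01:00:00:00:00:00. A minimal Python sketch of that test, with hypothetical helper names:

```python
def is_multicast(mac):
    """True when the least significant bit of the first octet (the I/G bit) is set."""
    first_octet = int(mac.split(":")[0], 16)
    return bool(first_octet & 0x01)


def egress_table(dl_dst):
    """Table the unicast/multicast split resubmits to: 20 for unicast, 22 otherwise."""
    return 22 if is_multicast(dl_dst) else 20


print(egress_table("fa:16:3e:55:1d:42"))
print(egress_table("ff:ff:ff:ff:ff:ff"))
```

Broadcast is simply the all-ones case of a set I/G bit, which is why one masked match covers both broadcast and multicast.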

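As the east-west traffic diagrams in this section show (different subnets of the same tenant), the crux of the design is that the gateway of one subnet necessarily exists on several compute nodes at once. The gateways of a subnet therefore use the same IP and MAC address on every compute node, combined with a unique DVR MAC address generated for each compute node. The flow tables then have to swap the gateway MAC for the DVR MAC when traffic leaves through br-tun, and recognise DVR MACs when traffic comes in.

The flows, summarised:

For egress traffic:

table=1, priority=4, dl_vlan=vlan1, dl_type=arp, arp_tpa=gw1 actions:drop # ARP traffic from any local subnet towards that subnet's gateway on other compute nodes

table=1, priority=4, dl_vlan=vlan1, dl_dst=gw1-mac actions:drop # traffic from any local subnet addressed to that gateway's MAC on other compute nodes

table=1, priority=1, dl_vlan=vlan1, dl_src=gw1-mac, actions:mod dl_src=dvr-cn1-mac,resubmit(,2) # all traffic leaving the compute node uses the DVR MAC

For ingress traffic, an extra table 9 is inserted between table 2 & 3 (which now jump to table 9 first) and table 10:

table=9, priority=1, dl_src=dvr-cn1-mac actions=output-> patch-int

table=9, priority=0, actions=resubmit(,10)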
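The summarised rules can be read as two small decision functions. The Python sketch below is a toy model of them, not OVS behaviour in full: frames are plain dictionaries, VLAN matching is omitted, and the function names are hypothetical. The MAC addresses are the gateway MAC and DVR MACs that appear in the flow dumps in this article.

```python
def egress_table1(frame, gw_ip, gw_mac, dvr_mac):
    """Model of the three table=1 rules; returns None when the frame is dropped."""
    if frame.get("arp_tpa") == gw_ip:
        return None  # ARP for the gateway must not leave the node
    if frame["dl_dst"] == gw_mac:
        return None  # frames addressed to the gateway MAC stay local
    if frame["dl_src"] == gw_mac:
        # Routed traffic leaves the node with the per-host DVR MAC as source.
        return dict(frame, dl_src=dvr_mac)
    return frame  # everything else continues unchanged


def ingress_table9(frame, known_dvr_macs):
    """Frames from a known DVR MAC go straight to patch-int; others go on to table 10."""
    return "patch-int" if frame["dl_src"] in known_dvr_macs else "table 10"


GW_IP, GW_MAC = "192.168.21.1", "fa:16:3e:f8:fe:97"
LOCAL_DVR_MAC = "fa:16:3f:6b:1f:bb"
REMOTE_DVR_MACS = {"fa:16:3f:02:1a:a7", "fa:16:3f:be:36:1f", "fa:16:3f:ef:44:e9"}

routed = {"dl_src": GW_MAC, "dl_dst": "fa:16:3e:55:1d:42"}
print(egress_table1(routed, GW_IP, GW_MAC, LOCAL_DVR_MAC))
print(ingress_table9({"dl_src": "fa:16:3f:be:36:1f"}, REMOTE_DVR_MACS))
```

Skipping table 10 for DVR-sourced frames keeps the learning table from associating a VM's traffic with a router MAC that exists on every node.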

The actual flow tables:

$ sudo ovs-ofctl dump-flows br-int

NXST_FLOW reply (xid=0x4):

cookie=0xb9f4f1698b73b733, duration=4086.225s, table=0, n_packets=0, n_bytes=0, idle_age=4086, priority=10,icmp6,in_port=8,icmp_type=136 actions=resubmit(,24)

cookie=0xb9f4f1698b73b733, duration=4085.852s, table=0, n_packets=5, n_bytes=210, idle_age=3963, priority=10,arp,in_port=8 actions=resubmit(,24)

cookie=0xb9f4f1698b73b733, duration=91031.974s, table=0, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=4,in_port=1,dl_src=fa:16:3f:02:1a:a7 actions=resubmit(,2)

cookie=0xb9f4f1698b73b733, duration=91031.667s, table=0, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=2,in_port=2,dl_src=fa:16:3f:02:1a:a7 actions=resubmit(,1)

cookie=0xb9f4f1698b73b733, duration=91031.178s, table=0, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=4,in_port=1,dl_src=fa:16:3f:be:36:1f actions=resubmit(,2)

cookie=0xb9f4f1698b73b733, duration=91030.686s, table=0, n_packets=87, n_bytes=6438, idle_age=475, hard_age=65534, priority=2,in_port=2,dl_src=fa:16:3f:be:36:1f actions=resubmit(,1)

cookie=0xb9f4f1698b73b733, duration=91030.203s, table=0, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=4,in_port=1,dl_src=fa:16:3f:ef:44:e9 actions=resubmit(,2)

cookie=0xb9f4f1698b73b733, duration=91029.710s, table=0, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=2,in_port=2,dl_src=fa:16:3f:ef:44:e9 actions=resubmit(,1)

cookie=0xb9f4f1698b73b733, duration=91033.507s, table=0, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=2,in_port=1 actions=drop

cookie=0xb9f4f1698b73b733, duration=91039.210s, table=0, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=0 actions=NORMAL

cookie=0xb9f4f1698b73b733, duration=91033.676s, table=0, n_packets=535, n_bytes=51701, idle_age=3963, hard_age=65534, priority=1 actions=NORMAL

cookie=0xb9f4f1698b73b733, duration=4090.101s, table=1, n_packets=2, n_bytes=196, idle_age=3965, priority=4,dl_vlan=4,dl_dst=fa:16:3e:26:85:02 actions=strip_vlan,mod_dl_src:fa:16:3e:6d:f9:9c,output:8

cookie=0xb9f4f1698b73b733, duration=91033.992s, table=1, n_packets=84, n_bytes=6144, idle_age=475, hard_age=65534, priority=1 actions=drop

cookie=0xb9f4f1698b73b733, duration=91033.835s, table=2, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=1 actions=drop

cookie=0xb9f4f1698b73b733, duration=91034.151s, table=23, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=0 actions=drop

cookie=0xb9f4f1698b73b733, duration=4086.430s, table=24, n_packets=0, n_bytes=0, idle_age=4086, priority=2,icmp6,in_port=8,icmp_type=136,nd_target=fe80::f816:3eff:fe26:8502 actions=NORMAL

cookie=0xb9f4f1698b73b733, duration=4086.043s, table=24, n_packets=5, n_bytes=210, idle_age=3963, priority=2,arp,in_port=8,arp_spa=192.168.22.5 actions=NORMAL

cookie=0xb9f4f1698b73b733, duration=91038.874s, table=24, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=0 actions=drop

$ sudo ovs-ofctl dump-flows br-tun

NXST_FLOW reply (xid=0x4):

cookie=0x8502c5292d4bca36, duration=91092.422s, table=0, n_packets=165, n_bytes=14534, idle_age=4114, hard_age=65534, priority=1,in_port=1 actions=resubmit(,1)

cookie=0x8502c5292d4bca36, duration=4158.903s, table=0, n_packets=86, n_bytes=6340, idle_age=534, priority=1,in_port=4 actions=resubmit(,3)

cookie=0x8502c5292d4bca36, duration=4156.707s, table=0, n_packets=104, n_bytes=9459, idle_age=4114, priority=1,in_port=5 actions=resubmit(,3)

cookie=0x8502c5292d4bca36, duration=91093.596s, table=0, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=0 actions=drop

cookie=0x8502c5292d4bca36, duration=4165.402s, table=1, n_packets=0, n_bytes=0, idle_age=4165, priority=3,arp,dl_vlan=3,arp_tpa=192.168.21.1 actions=drop

cookie=0x8502c5292d4bca36, duration=4154.134s, table=1, n_packets=1, n_bytes=42, idle_age=4123, priority=3,arp,dl_vlan=4,arp_tpa=192.168.22.1 actions=drop

cookie=0x8502c5292d4bca36, duration=4165.079s, table=1, n_packets=0, n_bytes=0, idle_age=4165, priority=2,dl_vlan=3,dl_dst=fa:16:3e:f8:fe:97 actions=drop

cookie=0x8502c5292d4bca36, duration=4153.942s, table=1, n_packets=0, n_bytes=0, idle_age=4153, priority=2,dl_vlan=4,dl_dst=fa:16:3e:6d:f9:9c actions=drop

cookie=0x8502c5292d4bca36, duration=4164.583s, table=1, n_packets=0, n_bytes=0, idle_age=4164, priority=1,dl_vlan=3,dl_src=fa:16:3e:f8:fe:97 actions=mod_dl_src:fa:16:3f:6b:1f:bb,resubmit(,2)

cookie=0x8502c5292d4bca36, duration=4153.760s, table=1, n_packets=0, n_bytes=0, idle_age=4153, priority=1,dl_vlan=4,dl_src=fa:16:3e:6d:f9:9c actions=mod_dl_src:fa:16:3f:6b:1f:bb,resubmit(,2)

cookie=0x8502c5292d4bca36, duration=91092.087s, table=1, n_packets=161, n_bytes=14198, idle_age=4114, hard_age=65534, priority=0 actions=resubmit(,2)

cookie=0x8502c5292d4bca36, duration=91093.596s, table=2, n_packets=104, n_bytes=8952, idle_age=4114, hard_age=65534, priority=0,dl_dst=00:00:00:00:00:00/01:00:00:00:00:00 actions=resubmit(,20)

cookie=0x8502c5292d4bca36, duration=91093.596s, table=2, n_packets=60, n_bytes=5540, idle_age=4115, hard_age=65534, priority=0,dl_dst=01:00:00:00:00:00/01:00:00:00:00:00 actions=resubmit(,22)

cookie=0x8502c5292d4bca36, duration=4166.465s, table=3, n_packets=81, n_bytes=6018, idle_age=534, priority=1,tun_id=0x5 actions=mod_vlan_vid:3,resubmit(,9)

cookie=0x8502c5292d4bca36, duration=4155.086s, table=3, n_packets=109, n_bytes=9781, idle_age=4024, priority=1,tun_id=0x3f4 actions=mod_vlan_vid:4,resubmit(,9)

cookie=0x8502c5292d4bca36, duration=91093.595s, table=3, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=0 actions=drop

cookie=0x8502c5292d4bca36, duration=91093.594s, table=4, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=0 actions=drop

cookie=0x8502c5292d4bca36, duration=91093.593s, table=6, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=0 actions=drop

cookie=0x8502c5292d4bca36, duration=91090.524s, table=9, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=1,dl_src=fa:16:3f:02:1a:a7 actions=output:1

cookie=0x8502c5292d4bca36, duration=91089.541s, table=9, n_packets=87, n_bytes=6438, idle_age=534, hard_age=65534, priority=1,dl_src=fa:16:3f:be:36:1f actions=output:1

cookie=0x8502c5292d4bca36, duration=91088.584s, table=9, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=1,dl_src=fa:16:3f:ef:44:e9 actions=output:1

cookie=0x8502c5292d4bca36, duration=91092.260s, table=9, n_packets=104, n_bytes=9459, idle_age=4114, hard_age=65534, priority=0 actions=resubmit(,10)

cookie=0x8502c5292d4bca36, duration=91093.593s, table=10, n_packets=104, n_bytes=9459, idle_age=4114, hard_age=65534, priority=1 actions=learn(table=20,hard_timeout=300,priority=1,cookie=0x8502c5292d4bca36,NXM_OF_VLAN_TCI[0..11],NXM_OF_ETH_DST[]=NXM_OF_ETH_SRC[],load:0->NXM_OF_VLAN_TCI[],load:NXM_NX_TUN_ID[]->NXM_NX_TUN_ID[],output:NXM_OF_IN_PORT[]),output:1

cookie=0x8502c5292d4bca36, duration=4158.170s, table=20, n_packets=0, n_bytes=0, idle_age=4158, priority=2,dl_vlan=3,dl_dst=fa:16:3e:4a:60:12 actions=strip_vlan,set_tunnel:0x5,output:4

cookie=0x8502c5292d4bca36, duration=4155.621s, table=20, n_packets=0, n_bytes=0, idle_age=4155, priority=2,dl_vlan=3,dl_dst=fa:16:3e:f9:88:90 actions=strip_vlan,set_tunnel:0x5,output:5

cookie=0x8502c5292d4bca36, duration=4155.219s, table=20, n_packets=0, n_bytes=0, idle_age=4155, priority=2,dl_vlan=3,dl_dst=fa:16:3e:62:d3:26 actions=strip_vlan,set_tunnel:0x5,output:5

cookie=0x8502c5292d4bca36, duration=4150.520s, table=20, n_packets=101, n_bytes=8658, idle_age=4114, priority=2,dl_vlan=4,dl_dst=fa:16:3e:55:1d:42 actions=strip_vlan,set_tunnel:0x3f4,output:5

cookie=0x8502c5292d4bca36, duration=4150.330s, table=20, n_packets=0, n_bytes=0, idle_age=4150, priority=2,dl_vlan=4,dl_dst=fa:16:3e:3b:59:ef actions=strip_vlan,set_tunnel:0x3f4,output:5

cookie=0x8502c5292d4bca36, duration=91093.592s, table=20, n_packets=2, n_bytes=196, idle_age=4567, hard_age=65534, priority=0 actions=resubmit(,22)

cookie=0x8502c5292d4bca36, duration=4158.469s, table=22, n_packets=0, n_bytes=0, idle_age=4158, hard_age=4156, dl_vlan=3 actions=strip_vlan,set_tunnel:0x5,output:4,output:5

cookie=0x8502c5292d4bca36, duration=4155.574s, table=22, n_packets=13, n_bytes=1554, idle_age=4115, hard_age=4150, dl_vlan=4 actions=strip_vlan,set_tunnel:0x3f4,output:4,output:5

cookie=0x8502c5292d4bca36, duration=91093.416s, table=22, n_packets=47, n_bytes=3986, idle_age=4148, hard_age=65534, priority=0 actions=drop

Feature history

- DVR and VRRP are only supported since Juno

- DVR only supports the use of the vxlan overlay network for Juno

- l2-population must be disabled with VRRP before Newton

- l2-population must be enabled with DVR for all releases

- VRRP must be disabled with DVR before Newton

- DVR supports augmentation using VRRP since Newton, i.e. VRRP HA can be added to the centralized SNAT l3-agent - https://review.openstack.org/#/c/143169/

- Impact of DVR on VPNaaS. The l3-agent has three modes: legacy, dvr and dvr_snat. dvr is used only on compute nodes; legacy and dvr_snat are used only on network nodes. In legacy mode there is only the qrouter-xxx namespace (SNAT etc. is configured there); only dvr_snat mode has snat-xxx (SNAT is configured there). VPNaaS by its nature can only be used centrally (neutron router-create vpn-router --distributed=False --ha=True), so for a DVR router the VPNaaS service should be set up only in the centralized snat-xxx namespace, with VPN traffic steered from the compute nodes to the network node. See: https://review.openstack.org/#/c/143203/

- DVR has no direct impact on LBaaS, apart from the unbound VIP port issue described earlier
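The release constraints in this list can be turned into a small sanity check. The Python sketch below encodes only the rules stated above; the function name, the parameters and the release list are my own illustration, not a Neutron or Juju API.

```python
RELEASES = ["juno", "kilo", "liberty", "mitaka", "newton", "ocata"]


def check_l3_config(release, dvr=False, vrrp=False, l2_population=False):
    """Return the violated constraints from the list above (empty list means OK)."""
    before_newton = RELEASES.index(release) < RELEASES.index("newton")
    problems = []
    if dvr and not l2_population:
        problems.append("l2-population must be enabled with DVR")
    if vrrp and l2_population and before_newton:
        problems.append("l2-population must be disabled with VRRP before Newton")
    if dvr and vrrp and before_newton:
        problems.append("VRRP must be disabled with DVR before Newton")
    return problems


print(check_l3_config("mitaka", dvr=True, vrrp=True, l2_population=True))
print(check_l3_config("newton", dvr=True, vrrp=True, l2_population=True))
```

Before Newton the first two rules cannot both be satisfied when DVR and VRRP are combined, which is consistent with the third rule forbidding that combination.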

Juju DVR

juju config neutron-api enable-dvr=True

juju config neutron-api neutron-external-network=ext_net

juju config neutron-gateway bridge-mappings=physnet1:br-ex

juju config neutron-openvswitch bridge-mappings=physnet1:br-ex #dvr need this

juju config neutron-openvswitch ext-port='ens7' #dvr need this

juju config neutron-gateway ext-port='ens7'

#juju config neutron-openvswitch data-port='br-ex:ens7'

#juju config neutron-gateway data-port='br-ex:ens7'

juju config neutron-openvswitch enable-local-dhcp-and-metadata=True #enable dhcp-agent in every nova-compute

juju config neutron-api dhcp-agents-per-network=3

juju config neutron-openvswitch dns-servers=8.8.8.8

1, br-int and br-ex are connected through fg- and phy-br-ex; fg- sits on br-int, and br-ex has the external NIC ens7

Bridge br-ex

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port "ens7"

Interface "ens7"

Port br-ex

Interface br-ex

type: internal

Port phy-br-ex

Interface phy-br-ex

type: patch

options: {peer=int-br-ex}

Bridge br-int

Port "fg-8e1bdebc-b0"

tag: 2

Interface "fg-8e1bdebc-b0"

type: internal

What if there is no spare external NIC such as ens7, and the only NIC has already been put into a Linux bridge? Then you can do the following:

on each compute node

# create veth pair between br-bond0 and veth-tenant

ip l add name veth-br-bond0 type veth peer name veth-tenant

# set mtu if needed on veth interfaces and turn up

#ip l set dev veth-br-bond0 mtu 9000

#ip l set dev veth-tenant mtu 9000

ip l set dev veth-br-bond0 up

ip l set dev veth-tenant up

# add br-bond0 as master for veth-br-bond0

ip l set veth-br-bond0 master br-bond0

juju set neutron-openvswitch data-port="br-ex:veth-tenant"

2, proxy ARP is enabled in fip-xxx

$ sudo ip netns exec fip-01471212-b65f-4735-9c7e-45bf9ec5eee8 sysctl net.ipv4.conf.fg-8e1bdebc-b0.proxy_arp

net.ipv4.conf.fg-8e1bdebc-b0.proxy_arp = 1

3, qrouter-xxx holds the NAT rules; the FIP does not need to be configured on any interface, it only has to appear in the SNAT/DNAT rules.

$ sudo ip netns exec qrouter-909c6b55-9bc6-476f-9d28-c32d031c41d7 iptables-save |grep NAT

-A neutron-l3-agent-POSTROUTING ! -i rfp-909c6b55-9 ! -o rfp-909c6b55-9 -m conntrack ! --ctstate DNAT -j ACCEPT

-A neutron-l3-agent-PREROUTING -d 10.5.150.9/32 -i rfp-909c6b55-9 -j DNAT --to-destination 192.168.21.10

-A neutron-l3-agent-float-snat -s 192.168.21.10/32 -j SNAT --to-source 10.5.150.9

-A neutron-postrouting-bottom -m comment --comment "Perform source NAT on outgoing traffic." -j neutron-l3-agent-snat

$ sudo ip netns exec qrouter-909c6b55-9bc6-476f-9d28-c32d031c41d7 ip addr show |grep 10.5.150.9

References

https://docs.openstack.org/newton/networking-guide/deploy-ovs-ha-dvr.html#deploy-ovs-ha-dvr
