Building a Java Project with Maven and Operating HBase Through the Java API

About this post

On Windows, build a Java project with Maven and operate HBase through the Java API.

Prerequisites

- A Hadoop big-data platform is already set up (my Hadoop setup has 3 hosts, named master, slave1, and slave2).

hbase-site.xml is configured as follows:

    <configuration>
      <property>
        <name>hbase.rootdir</name>
        <value>hdfs://mycluster/hbase</value>
      </property>
      <property>
        <name>hbase.master</name>
        <value>master</value>
      </property>
      <property>
        <name>hbase.cluster.distributed</name>
        <value>true</value>
      </property>
      <property>
        <name>hbase.zookeeper.property.clientPort</name>
        <value>2181</value>
      </property>
      <property>
        <name>hbase.zookeeper.quorum</name>
        <value>master,slave1,slave2</value>
      </property>
      <property>
        <name>zookeeper.session.timeout</name>
        <value>60000000</value>
      </property>
      <property>
        <name>dfs.support.append</name>
        <value>true</value>
      </property>
    </configuration>
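Of the settings above, the client code later in this post only sets hbase.zookeeper.quorum and hbase.zookeeper.property.clientPort. As a rough illustration of how those two values combine, here is a minimal sketch that reads Hadoop-style property entries with the JDK's XML parser and joins each quorum host with the client port. The class name HBaseSiteReader and both method names are made up for this example; this is not how the HBase client itself loads its configuration.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper, for illustration only.
final class HBaseSiteReader {

    /** Parses <property><name/><value/></property> entries into an ordered map. */
    static Map<String, String> parse(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            Map<String, String> props = new LinkedHashMap<>();
            NodeList nodes = doc.getElementsByTagName("property");
            for (int i = 0; i < nodes.getLength(); i++) {
                Element p = (Element) nodes.item(i);
                String name = p.getElementsByTagName("name").item(0).getTextContent().trim();
                String value = p.getElementsByTagName("value").item(0).getTextContent().trim();
                props.put(name, value);
            }
            return props;
        } catch (Exception e) {
            throw new IllegalArgumentException("could not parse configuration XML", e);
        }
    }

    /** Joins every quorum host with the client port, separated by commas. */
    static String connectString(Map<String, String> props) {
        String port = props.get("hbase.zookeeper.property.clientPort");
        StringBuilder sb = new StringBuilder();
        for (String host : props.get("hbase.zookeeper.quorum").split(",")) {
            if (sb.length() > 0) {
                sb.append(',');
            }
            sb.append(host.trim()).append(':').append(port);
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        String xml = "<configuration>"
                + "<property><name>hbase.zookeeper.property.clientPort</name><value>2181</value></property>"
                + "<property><name>hbase.zookeeper.quorum</name><value>master,slave1,slave2</value></property>"
                + "</configuration>";
        System.out.println(connectString(parse(xml)));
    }
}
```

The host names in the result must be resolvable from the Windows machine, which is what the hosts file change below is for.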

- Install the JDK on Windows

Omitted.

- Install Maven on Windows

Omitted.

- Edit the hosts file on Windows

    /*
    The file C:\Windows\System32\drivers\etc\hosts should be modified.
    Example:
    10.0.0.3 master
    10.0.0.2 slave1
    10.0.0.4 slave2
    */

Project setup

(In this post, all file and folder creation, compilation, and execution were done in cmder; the built-in Windows cmd works as well.)

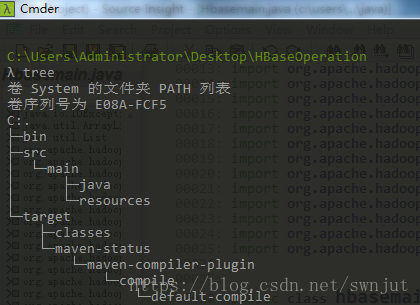

- Create a folder: HBaseOperation

- In HBaseOperation, create pom.xml

- In HBaseOperation, create src/main/java and src/main/resources

- In src/main/java, create hbasemain.java and hbaseoperation.java

- In src/main/resources, create log4j.properties

Directory structure

File contents

pom.xml

    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
      <modelVersion>4.0.0</modelVersion>
      <groupId>hbasedemo</groupId>
      <artifactId>hbasedemo01</artifactId>
      <version>0.0.1-SNAPSHOT</version>
      <packaging>jar</packaging>
      <name>hbasedemo01</name>
      <url>http://maven.apache.org</url>
      <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
      </properties>
      <repositories>
        <repository>
          <id>cloudera</id>
          <url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>
        </repository>
      </repositories>
      <dependencies>
        <dependency>
          <groupId>org.apache.hbase</groupId>
          <artifactId>hbase-client</artifactId>
          <version>1.3.1</version>
        </dependency>
        <dependency>
          <groupId>org.apache.hbase</groupId>
          <artifactId>hbase-server</artifactId>
          <version>1.3.1</version>
        </dependency>
        <dependency>
          <groupId>org.apache.hbase</groupId>
          <artifactId>hbase-common</artifactId>
          <version>1.3.1</version>
        </dependency>
        <dependency>
          <groupId>commons-logging</groupId>
          <artifactId>commons-logging</artifactId>
          <version>1.2</version>
        </dependency>
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-hdfs</artifactId>
          <version>2.5.1</version>
        </dependency>
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-client</artifactId>
          <version>2.5.1</version>
        </dependency>
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-common</artifactId>
          <version>2.5.1</version>
        </dependency>
        <dependency>
          <groupId>log4j</groupId>
          <artifactId>log4j</artifactId>
          <version>1.2.17</version>
        </dependency>
        <dependency>
          <groupId>org.slf4j</groupId>
          <artifactId>slf4j-log4j12</artifactId>
          <version>1.7.12</version>
        </dependency>
        <dependency>
          <groupId>junit</groupId>
          <artifactId>junit</artifactId>
          <version>3.8.1</version>
          <scope>test</scope>
        </dependency>
      </dependencies>
    </project>

log4j.properties

    ### Settings ###
    log4j.rootLogger = error,stdout,D,E

    ### Output to the console ###
    log4j.appender.stdout = org.apache.log4j.ConsoleAppender
    log4j.appender.stdout.Target = System.out
    log4j.appender.stdout.layout = org.apache.log4j.PatternLayout
    log4j.appender.stdout.layout.ConversionPattern = [%-5p] %d{yyyy-MM-dd HH:mm:ss,SSS} method:%l%n%m%n

    ### Write logs at ERROR level and above to D://logs/log.log ###
    log4j.appender.D = org.apache.log4j.DailyRollingFileAppender
    log4j.appender.D.File = D://logs/log.log
    log4j.appender.D.Append = true
    log4j.appender.D.Threshold = ERROR
    log4j.appender.D.layout = org.apache.log4j.PatternLayout
    log4j.appender.D.layout.ConversionPattern = %-d{yyyy-MM-dd HH:mm:ss} [ %t:%r ] - [ %p ] %m%n

    ### Write logs at ERROR level and above to D://logs/error.log ###
    log4j.appender.E = org.apache.log4j.DailyRollingFileAppender
    log4j.appender.E.File = D://logs/error.log
    log4j.appender.E.Append = true
    log4j.appender.E.Threshold = ERROR
    log4j.appender.E.layout = org.apache.log4j.PatternLayout
    log4j.appender.E.layout.ConversionPattern = %-d{yyyy-MM-dd HH:mm:ss} [ %t:%r ] - [ %p ] %m%n

hbasemain.java

    // No imports are needed here: this class only uses hbaseoperation,
    // which lives in the same (default) package.
    public class hbasemain
    {
        public static void main(String[] args) throws Exception
        {
            hbaseoperation baseOperation = new hbaseoperation();
            baseOperation.initconnection();

            // Uncomment one operation at a time to try it.
            baseOperation.createTable();
            //baseOperation.insert();
            //baseOperation.queryTable();
            //baseOperation.queryTableByRowKey("row1");
            //baseOperation.queryTableByCondition("Kitty");
            //baseOperation.deleteColumnFamily("columnfamily_1");
            //baseOperation.deleteByRowKey("row1");
            //baseOperation.truncateTable();
            //baseOperation.deleteTable();
        }
    }

hbaseoperation.java

    import java.io.File;
    import java.io.IOException;
    import java.util.ArrayList;
    import java.util.List;

    import org.apache.hadoop.conf.Configuration;
    // The wildcard covers Cell, CellUtil, HBaseConfiguration, HColumnDescriptor,
    // HTableDescriptor and TableName.
    import org.apache.hadoop.hbase.*;
    import org.apache.hadoop.hbase.client.Admin;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.ConnectionFactory;
    import org.apache.hadoop.hbase.client.Delete;
    import org.apache.hadoop.hbase.client.Get;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.Result;
    import org.apache.hadoop.hbase.client.ResultScanner;
    import org.apache.hadoop.hbase.client.Scan;
    import org.apache.hadoop.hbase.client.Table;
    import org.apache.hadoop.hbase.filter.CompareFilter.CompareOp;
    import org.apache.hadoop.hbase.filter.Filter;
    import org.apache.hadoop.hbase.filter.SingleColumnValueFilter;
    import org.apache.hadoop.hbase.util.Bytes;

    public class hbaseoperation
    {
        public Connection connection; // connection to the cluster
        public Admin admin;           // administrative operations (create, delete, truncate)

        public void initconnection() throws Exception
        {
            // Workaround for running the Hadoop client on Windows, which looks for
            // bin\winutils.exe under hadoop.home.dir: point hadoop.home.dir at the
            // current directory and create an empty placeholder file there.
            File workaround = new File(".");
            System.setProperty("hadoop.home.dir", workaround.getAbsolutePath());
            new File("./bin").mkdirs();
            try
            {
                new File("./bin/winutils.exe").createNewFile();
            }
            catch (IOException e)
            {
                // Ignored on purpose: the placeholder is best-effort.
            }

            Configuration conf = HBaseConfiguration.create();
            /*
            The file C:\Windows\System32\drivers\etc\hosts should be modified.
            Example:
            10.0.0.3 master
            10.0.0.2 slave1
            10.0.0.4 slave2
            */
            conf.set("hbase.zookeeper.quorum", "master,slave1,slave2");
            conf.set("hbase.zookeeper.property.clientPort", "2181");
            connection = ConnectionFactory.createConnection(conf);
            admin = connection.getAdmin();
        }

        public void createTable() throws IOException
        {
            System.out.println("[hbaseoperation] start createtable...");
            String tableNameString = "table_book";
            TableName tableName = TableName.valueOf(tableNameString);
            if (admin.tableExists(tableName))
            {
                System.out.println("[INFO] table exist");
            }
            else
            {
                // One table with three column families.
                HTableDescriptor hTableDescriptor = new HTableDescriptor(tableName);
                hTableDescriptor.addFamily(new HColumnDescriptor("columnfamily_1"));
                hTableDescriptor.addFamily(new HColumnDescriptor("columnfamily_2"));
                hTableDescriptor.addFamily(new HColumnDescriptor("columnfamily_3"));
                admin.createTable(hTableDescriptor);
            }
            System.out.println("[hbaseoperation] end createtable...");
        }

        public void insert() throws IOException
        {
            System.out.println("[hbaseoperation] start insert...");
            // try-with-resources closes the Table handle once the batch is written.
            try (Table table = connection.getTable(TableName.valueOf("table_book")))
            {
                List<Put> putList = new ArrayList<Put>();

                Put put1 = new Put(Bytes.toBytes("row1"));
                put1.addColumn(Bytes.toBytes("columnfamily_1"), Bytes.toBytes("name"), Bytes.toBytes("<<Java In Action>>"));
                put1.addColumn(Bytes.toBytes("columnfamily_1"), Bytes.toBytes("price"), Bytes.toBytes("98.50"));
                put1.addColumn(Bytes.toBytes("columnfamily_2"), Bytes.toBytes("author"), Bytes.toBytes("Tom"));
                put1.addColumn(Bytes.toBytes("columnfamily_2"), Bytes.toBytes("version"), Bytes.toBytes("3 thrd"));
                put1.addColumn(Bytes.toBytes("columnfamily_3"), Bytes.toBytes("discount"), Bytes.toBytes("5%"));

                Put put2 = new Put(Bytes.toBytes("row2"));
                put2.addColumn(Bytes.toBytes("columnfamily_1"), Bytes.toBytes("name"), Bytes.toBytes("<<C++ Prime>>"));
                put2.addColumn(Bytes.toBytes("columnfamily_1"), Bytes.toBytes("price"), Bytes.toBytes("68.88"));
                put2.addColumn(Bytes.toBytes("columnfamily_2"), Bytes.toBytes("author"), Bytes.toBytes("Jimmy"));
                put2.addColumn(Bytes.toBytes("columnfamily_2"), Bytes.toBytes("version"), Bytes.toBytes("5 thrd"));
                put2.addColumn(Bytes.toBytes("columnfamily_3"), Bytes.toBytes("discount"), Bytes.toBytes("15%"));

                Put put3 = new Put(Bytes.toBytes("row3"));
                put3.addColumn(Bytes.toBytes("columnfamily_1"), Bytes.toBytes("name"), Bytes.toBytes("<<Hadoop in Action>>"));
                put3.addColumn(Bytes.toBytes("columnfamily_1"), Bytes.toBytes("price"), Bytes.toBytes("78.92"));
                put3.addColumn(Bytes.toBytes("columnfamily_2"), Bytes.toBytes("author"), Bytes.toBytes("Kitty"));
                put3.addColumn(Bytes.toBytes("columnfamily_2"), Bytes.toBytes("version"), Bytes.toBytes("2 thrd"));
                put3.addColumn(Bytes.toBytes("columnfamily_3"), Bytes.toBytes("discount"), Bytes.toBytes("20%"));

                putList.add(put1);
                putList.add(put2);
                putList.add(put3);
                table.put(putList);
            }
            System.out.println("[hbaseoperation] end insert...");
        }

        public void queryTable() throws IOException
        {
            System.out.println("[hbaseoperation] start queryTable...");
            // Table and ResultScanner both hold resources, so close them when done.
            try (Table table = connection.getTable(TableName.valueOf("table_book"));
                 ResultScanner scanner = table.getScanner(new Scan()))
            {
                for (Result result : scanner)
                {
                    byte[] row = result.getRow();
                    System.out.println("row key is:" + Bytes.toString(row));
                    List<Cell> listCells = result.listCells();
                    for (Cell cell : listCells)
                    {
                        // Each component is read from the cell's backing array by offset and length.
                        System.out.print("family:" + Bytes.toString(cell.getFamilyArray(), cell.getFamilyOffset(), cell.getFamilyLength()));
                        System.out.print("qualifier:" + Bytes.toString(cell.getQualifierArray(), cell.getQualifierOffset(), cell.getQualifierLength()));
                        System.out.print("value:" + Bytes.toString(cell.getValueArray(), cell.getValueOffset(), cell.getValueLength()));
                        System.out.println("Timestamp:" + cell.getTimestamp());
                    }
                }
            }
            System.out.println("[hbaseoperation] end queryTable...");
        }

        public void queryTableByRowKey(String rowkey) throws IOException
        {
            System.out.println("[hbaseoperation] start queryTableByRowKey...");
            try (Table table = connection.getTable(TableName.valueOf("table_book")))
            {
                // Bytes.toBytes encodes as UTF-8; String.getBytes() would use the platform default charset.
                Get get = new Get(Bytes.toBytes(rowkey));
                Result result = table.get(get);
                if (result.isEmpty())
                {
                    // listCells() returns null for a row that does not exist, so stop here.
                    System.out.println("[INFO] no row found for rowkey " + rowkey);
                }
                else
                {
                    for (Cell cell : result.listCells())
                    {
                        String rowKey = Bytes.toString(CellUtil.cloneRow(cell));
                        long timestamp = cell.getTimestamp();
                        String family = Bytes.toString(CellUtil.cloneFamily(cell));
                        String qualifier = Bytes.toString(CellUtil.cloneQualifier(cell));
                        String value = Bytes.toString(CellUtil.cloneValue(cell));
                        System.out.println(" ===> rowKey : " + rowKey + ", timestamp : " + timestamp + ", family : " + family + ", qualifier : " + qualifier + ", value : " + value);
                    }
                }
            }
            System.out.println("[hbaseoperation] end queryTableByRowKey...");
        }

        public void queryTableByCondition(String authorName) throws IOException
        {
            System.out.println("[hbaseoperation] start queryTableByCondition...");
            // Keep rows whose columnfamily_2:author value equals authorName.
            Filter filter = new SingleColumnValueFilter(Bytes.toBytes("columnfamily_2"), Bytes.toBytes("author"), CompareOp.EQUAL, Bytes.toBytes(authorName));
            Scan scan = new Scan();
            scan.setFilter(filter);
            try (Table table = connection.getTable(TableName.valueOf("table_book"));
                 ResultScanner scanner = table.getScanner(scan))
            {
                for (Result result : scanner)
                {
                    List<Cell> listCells = result.listCells();
                    for (Cell cell : listCells)
                    {
                        String rowKey = Bytes.toString(CellUtil.cloneRow(cell));
                        long timestamp = cell.getTimestamp();
                        String family = Bytes.toString(CellUtil.cloneFamily(cell));
                        String qualifier = Bytes.toString(CellUtil.cloneQualifier(cell));
                        String value = Bytes.toString(CellUtil.cloneValue(cell));
                        System.out.println(" ===> rowKey : " + rowKey + ", timestamp : " + timestamp + ", family : " + family + ", qualifier : " + qualifier + ", value : " + value);
                    }
                }
            }
            System.out.println("[hbaseoperation] end queryTableByCondition...");
        }

        public void deleteColumnFamily(String cf) throws IOException
        {
            TableName tableName = TableName.valueOf("table_book");
            admin.deleteColumn(tableName, Bytes.toBytes(cf));
        }

        public void deleteByRowKey(String rowKey) throws IOException
        {
            try (Table table = connection.getTable(TableName.valueOf("table_book")))
            {
                Delete delete = new Delete(Bytes.toBytes(rowKey));
                table.delete(delete);
            }
            // Print the remaining rows to confirm the delete.
            queryTable();
        }

        public void truncateTable() throws IOException
        {
            TableName tableName = TableName.valueOf("table_book");
            // The table must be disabled before it can be truncated.
            admin.disableTable(tableName);
            admin.truncateTable(tableName, true);
        }

        public void deleteTable() throws IOException
        {
            // The table must be disabled before it can be deleted.
            admin.disableTable(TableName.valueOf("table_book"));
            admin.deleteTable(TableName.valueOf("table_book"));
        }
    }

mvn clean

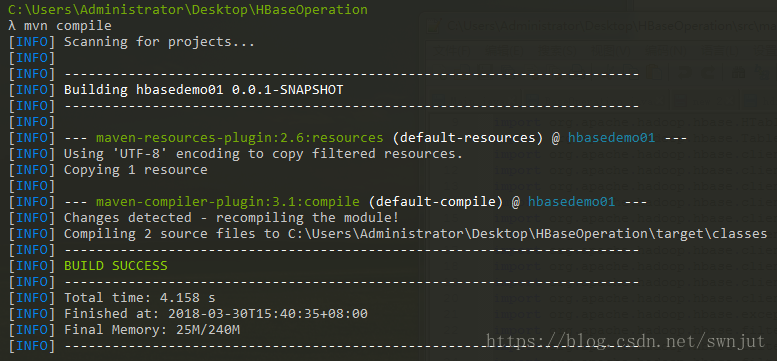

mvn compile

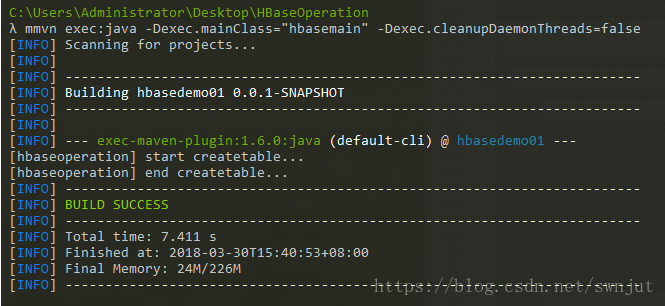

mvn exec:java -Dexec.mainClass="hbasemain" -Dexec.cleanupDaemonThreads=false

Code walkthrough

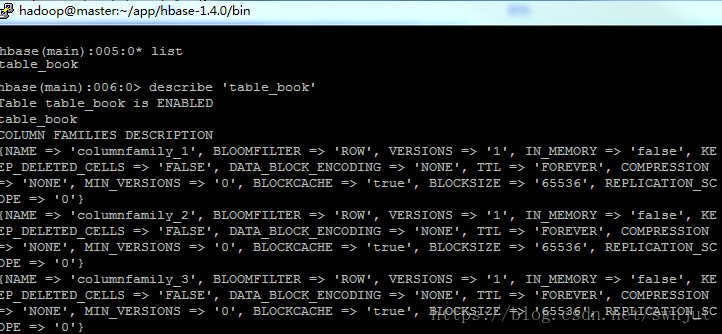

baseOperation.createTable();

Creates the table table_book in HBase and adds three column families to it: columnfamily_1, columnfamily_2, and columnfamily_3.

Checking in the hbase shell confirms that the table was created.

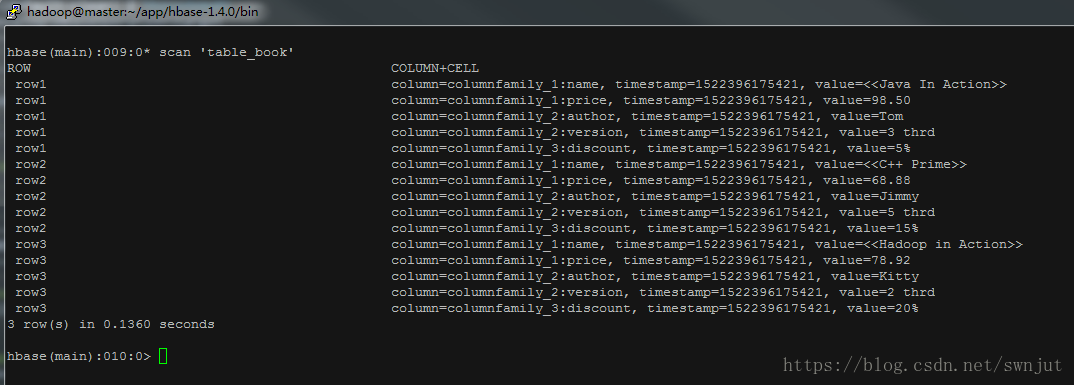

baseOperation.insert();

Inserts data into the table; the table contents can then be viewed in the hbase shell.
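Everything insert() writes (row keys, column names, and values) goes in as a byte array, and Bytes.toBytes(String) encodes text as UTF-8. That means a value such as the price "98.50" is stored as five text bytes rather than as a number. The sketch below mimics that round trip using only the JDK; ByteEncodingSketch, encode, and decode are names invented for this example and stand in for the HBase Bytes helper.

```java
import java.math.BigDecimal;
import java.nio.charset.StandardCharsets;

// Hypothetical stand-in for the string handling of HBase's Bytes helper.
final class ByteEncodingSketch {

    /** Encodes text as UTF-8 bytes, independent of the platform default charset. */
    static byte[] encode(String s) {
        return s.getBytes(StandardCharsets.UTF_8);
    }

    /** Decodes UTF-8 bytes back into text. */
    static String decode(byte[] b) {
        return new String(b, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] stored = encode("98.50");
        // The stored form is text, so it has to be decoded and parsed before doing arithmetic.
        BigDecimal price = new BigDecimal(decode(stored));
        System.out.println(stored.length + " bytes, price = " + price);
    }
}
```

Because the stored form is text, any code that wants to compare or sum prices has to decode and parse them first.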

baseOperation.queryTable();

Queries all the contents of the table.
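queryTable() reads each part of a cell with an array, an offset, and a length (for example getFamilyArray, getFamilyOffset, getFamilyLength), because the array a cell hands back can hold more than the one component being read. The sketch below shows the same idea with a plain byte array; the packed layout used here is invented for illustration and is not HBase's actual cell format, and CellSliceSketch is a made-up name.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical illustration of reading a component out of a shared backing array.
final class CellSliceSketch {

    /** Decodes len bytes starting at off, without first copying them into a new array. */
    static String slice(byte[] backing, int off, int len) {
        return new String(backing, off, len, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // Family, qualifier and value packed back to back (invented layout).
        byte[] backing = "columnfamily_2authorKitty".getBytes(StandardCharsets.UTF_8);
        int familyLen = "columnfamily_2".length();
        int qualifierLen = "author".length();
        int valueOff = familyLen + qualifierLen;

        System.out.println("family:" + slice(backing, 0, familyLen));
        System.out.println("qualifier:" + slice(backing, familyLen, qualifierLen));
        System.out.println("value:" + slice(backing, valueOff, backing.length - valueOff));
    }
}
```

The CellUtil.cloneFamily, cloneQualifier, and cloneValue calls used in the other query methods do the copy for you, at the cost of one extra array per component.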

baseOperation.queryTableByRowKey("row1");

Queries by rowkey.

baseOperation.queryTableByCondition("Kitty");

Conditional query; the code queries by author name.
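The filter in queryTableByCondition is given the author name as bytes, so the comparison is made on raw bytes rather than on text or numbers. The sketch below imitates that with a lexicographic comparison of unsigned bytes written against the JDK only; ByteCompareSketch and its methods are invented names, and the code is a simplified model of the comparison, not the filter's real implementation.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical model of a byte-wise comparison, for illustration only.
final class ByteCompareSketch {

    static byte[] utf8(String s) {
        return s.getBytes(StandardCharsets.UTF_8);
    }

    /** Lexicographic comparison of unsigned bytes: negative, zero, or positive. */
    static int compare(byte[] a, byte[] b) {
        int n = Math.min(a.length, b.length);
        for (int i = 0; i < n; i++) {
            int x = a[i] & 0xff;
            int y = b[i] & 0xff;
            if (x != y) {
                return x - y;
            }
        }
        return a.length - b.length;
    }

    /** True when the stored value and the wanted value are byte-for-byte identical. */
    static boolean matchesEqual(String stored, String wanted) {
        return compare(utf8(stored), utf8(wanted)) == 0;
    }

    public static void main(String[] args) {
        System.out.println(matchesEqual("Kitty", "Kitty"));
        System.out.println(matchesEqual("Kitty", "kitty"));
        // As text bytes, "100.00" sorts before "98.50" because '1' is less than '9'.
        System.out.println(compare(utf8("100.00"), utf8("98.50")) < 0);
    }
}
```

Two practical consequences follow: the match is case-sensitive, and range comparisons on the string-encoded price column would order values as text. Also note that SingleColumnValueFilter by default lets through rows that do not have the tested column at all; calling setFilterIfMissing(true) on the filter excludes them.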

baseOperation.deleteColumnFamily("columnfamily_1");

Deletes a column family.

baseOperation.deleteByRowKey("row1");

Deletes by rowkey value.

baseOperation.truncateTable();

Truncates (empties) the table.

baseOperation.deleteTable();

Deletes the table.
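One thing the walkthrough does not cover is cleanup: Connection, Admin, and Table are all closeable, and hbasemain never closes the connection it opens. A common way to handle this in Java is try-with-resources, which closes resources in the reverse of the order they were opened. The sketch below demonstrates that ordering with a stand-in Handle class so it runs without a cluster; Handle and CloseOrderSketch are invented for this example and are not HBase types.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical demonstration of try-with-resources close order.
final class CloseOrderSketch {

    /** Stand-in for a closeable handle such as a connection or a table. */
    static final class Handle implements AutoCloseable {
        private final String name;
        private final List<String> log;

        Handle(String name, List<String> log) {
            this.name = name;
            this.log = log;
        }

        @Override
        public void close() {
            log.add("close " + name);
        }
    }

    /** Opens two handles, uses them, and returns the recorded sequence of events. */
    static List<String> run() {
        List<String> log = new ArrayList<>();
        try (Handle connection = new Handle("connection", log);
             Handle table = new Handle("table", log)) {
            log.add("use table");
        }
        return log;
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}
```

Applied to this project, the same pattern would open the connection first and the table second, so the table is released before the connection that created it.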