Reading Redis Data with Spark (Interactive, Scala Single-Machine Version, Java Single-Machine Version)

阿新 • Published: 2019-01-23

- Interactive

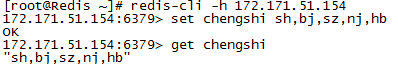

Step 1: Add data to Redis

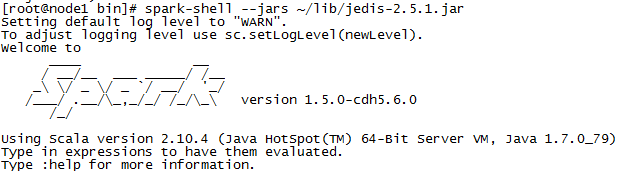

Step 2: Place the Jedis jar in the ~/lib directory and start the Spark service

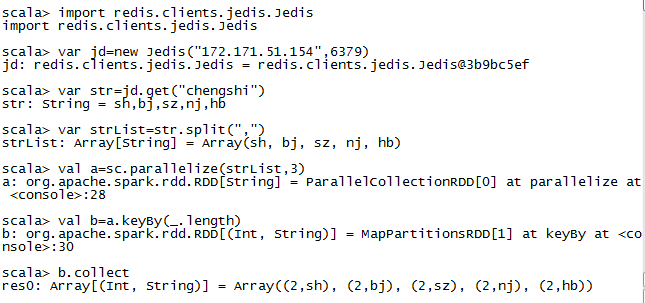

Step 3: Read the Redis data through spark-shell and process it as needed
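The session itself is only shown as a screenshot, so here is a minimal sketch of what reading and processing Redis data from spark-shell might look like. It assumes Redis is listening on localhost:6379, that the Jedis jar from the previous step is on the shell's classpath (for instance via spark-shell's `--jars` option), and that the data is a Redis list stored under the hypothetical key `words`. The key name and the counting step are illustrative and are not taken from the original.

```scala
// Paste into spark-shell; `sc` is the SparkContext that the shell provides.
import redis.clients.jedis.Jedis
import scala.collection.JavaConverters._

// Assumption: Redis on localhost:6379 with a list under the hypothetical key "words".
val jedis = new Jedis("localhost", 6379)
// Read the whole list on the driver, then release the connection.
val values = try jedis.lrange("words", 0, -1).asScala.toList finally jedis.close()

// Distribute the values as an RDD and count how often each one occurs.
val counts = sc.parallelize(values).map(v => (v, 1)).reduceByKey(_ + _)
counts.collect().foreach { case (word, n) => println(s"$word: $n") }
```

Reading on the driver and calling `parallelize` keeps the sketch short and is reasonable for small data sets; the Jedis connection is never shipped to the executors, which avoids serialization problems.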

- Scala single-machine version

package com.test

import org.apache.spark.SparkConf

import org.apache.spark.SparkContext

import redis.clients.jedis.Jedis

object RedisClient {

def main(args: Array[String]) {

val conf = new SparkConf()

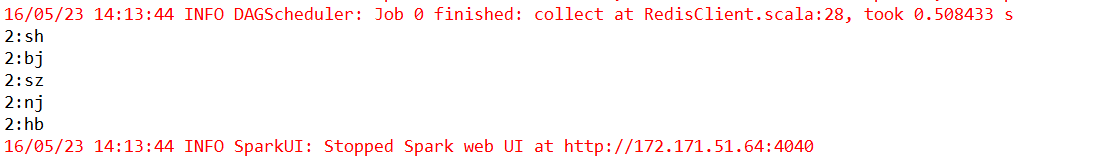

conf.setAppName…

Output
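Only the opening lines of the program appear above, so the following is a minimal sketch of how a complete single-machine program of this shape might look, not the original listing. The package and object names follow the fragment. The `local[*]` master, the Redis address localhost:6379, the hypothetical hash key `scores`, the helper `passing`, and the threshold of 60 are all assumptions made for illustration.

```scala
package com.test

import org.apache.spark.{SparkConf, SparkContext}
import redis.clients.jedis.Jedis
import scala.collection.JavaConverters._

object RedisClient {

  // Hypothetical processing step, kept apart from Redis access:
  // parse each value as an integer and keep entries scoring at least 60.
  def passing(sc: SparkContext, entries: Seq[(String, String)]): Map[String, Int] =
    sc.parallelize(entries)
      .map { case (name, score) => (name, score.toInt) }
      .filter { case (_, score) => score >= 60 }
      .collect()
      .toMap

  def main(args: Array[String]): Unit = {
    // "local[*]" runs Spark inside this JVM on all cores, so no cluster is needed.
    val conf = new SparkConf().setAppName("RedisClient").setMaster("local[*]")
    val sc = new SparkContext(conf)
    try {
      // Assumption: a Redis hash under the hypothetical key "scores"
      // (field = name, value = integer stored as text).
      val jedis = new Jedis("localhost", 6379)
      val entries =
        try jedis.hgetAll("scores").asScala.toSeq
        finally jedis.close()

      passing(sc, entries).foreach { case (name, score) => println(s"$name\t$score") }
    } finally {
      sc.stop()
    }
  }
}
```

Keeping the Spark step in its own method means it can be tried with an in-memory `Seq` before a Redis instance is involved.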

- Java single-machine version

package com.dt.spark.SparkApps.cores;

import java.io.FileNotFoundException;

import java.io.IOException;

import java.util.Arrays;

import org.apache.spark.SparkConf;

import org.apache.spark.api.java.JavaPairRDD;

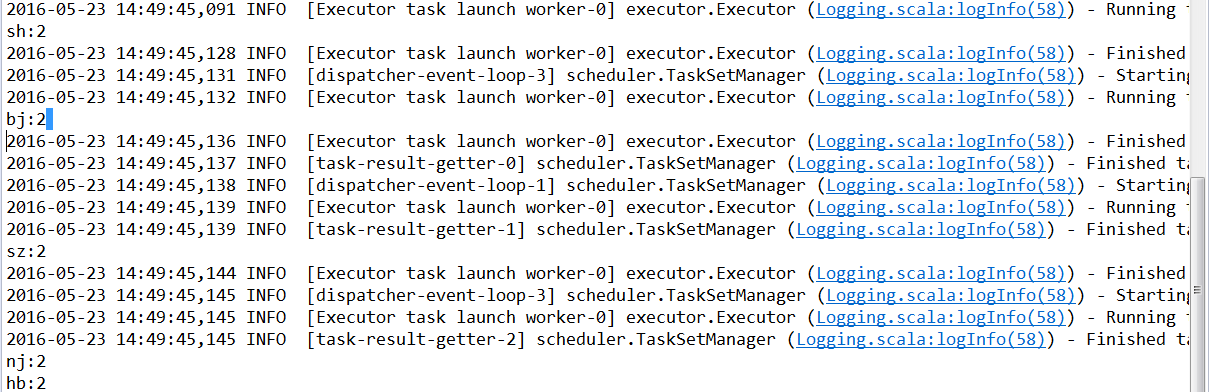

import org.apache.spark.api.java…

Output