Importing data into HBase, part 3: reading data from HDFS or a local file with MapReduce and writing it to HBase (adding a Reduce stage for batched inserts)

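Previously we covered:

To make inserts more efficient, this post adds a reduce stage to the earlier map-only approach. The idea is to let the MapReduce shuffle route all records with the same rowkey to the same reduce call, and then build a single Put object in that call so that the columns of one row are inserted as a batch.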
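The grouping step can be illustrated without a cluster. The following is a minimal sketch in plain Java (no Hadoop or HBase classes) that imitates what the shuffle does: it collects every value emitted under the same rowkey so that one reduce call sees them all. The class name `ShuffleSketch`, its method, and the sample keys are hypothetical and exist only for illustration; in the real job the MapReduce framework performs this step.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Illustrative only: imitates the shuffle phase with an in-memory sorted map. */
final class ShuffleSketch {

    /** Groups (rowkey, value) pairs by rowkey; a TreeMap keeps the keys sorted, as the shuffle does. */
    static Map<String, List<String>> groupByRowkey(List<String[]> pairs) {
        Map<String, List<String>> grouped = new TreeMap<>();
        for (String[] pair : pairs) {
            // pair[0] is the rowkey emitted by the mapper, pair[1] the raw record
            grouped.computeIfAbsent(pair[0], k -> new ArrayList<>()).add(pair[1]);
        }
        return grouped;
    }

    public static void main(String[] args) {
        // Hypothetical mapper output: two records share a rowkey
        List<String[]> mapOutput = new ArrayList<>();
        mapOutput.add(new String[] {"userA-2017-12-31", "record 1"});
        mapOutput.add(new String[] {"userB-2017-12-31", "record 2"});
        mapOutput.add(new String[] {"userA-2017-12-31", "record 3"});

        // Each map entry corresponds to one reduce() call, i.e. one Put in the real job
        groupByRowkey(mapOutput).forEach((rowkey, values) ->
                System.out.println(rowkey + " -> " + values));
    }
}
```

With the sample input, `userA-2017-12-31` ends up with two values and `userB-2017-12-31` with one, which mirrors how the reducer receives one iterable of records per rowkey.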

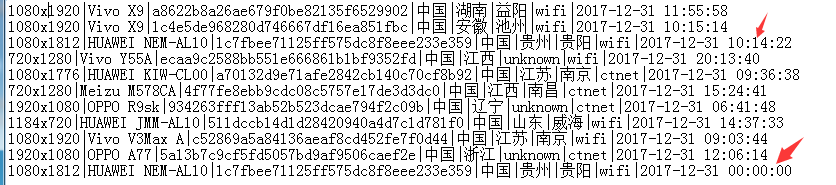

The test data is as follows:

Note that two of the records are similar.
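To make the record layout concrete, here is a small sketch of how one line is turned into a rowkey and a column qualifier, following the same splitting rules as the job's mapper and reducer. The sample line is the one quoted in the job's code comments, with the place names romanized here as an assumption to keep the snippet ASCII-only. The class `RecordKeySketch` and its method names are hypothetical helpers, not part of the original program.

```java
/** Illustrative only: derives the HBase rowkey and column qualifier for one record. */
final class RecordKeySketch {

    /** Returns userID-yyyy-MM-dd, or null when the line has fewer than 8 fields. */
    static String rowkey(String line) {
        String[] fields = line.split("\\|");
        if (fields.length < 8) {
            return null; // malformed record, same filter as in the job
        }
        String day = fields[7].split(" ")[0]; // date part of "2017-12-31 11:55:58"
        return fields[2] + "-" + day;          // field 2 is the user ID
    }

    /** Returns HH:mm:ss:000, or null when the line has fewer than 8 fields. */
    static String qualifier(String line) {
        String[] fields = line.split("\\|");
        if (fields.length < 8) {
            return null;
        }
        return fields[7].split(" ")[1] + ":000"; // time part plus a fixed suffix
    }

    public static void main(String[] args) {
        String line = "1080x1920|Vivo X9|a8622b8a26ae679f0be82135f6529902|"
                + "China|Hunan|Yiyang|wifi|2017-12-31 11:55:58";
        System.out.println(rowkey(line));    // a8622b8a26ae679f0be82135f6529902-2017-12-31
        System.out.println(qualifier(line)); // 11:55:58:000
    }
}
```

Records from the same user on the same day therefore share a rowkey and differ only in the qualifier, which is why they land in the same reduce call.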

    package cn.edu.shu.ces.chenjie.tianyi.hadoop;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;
    import org.apache.hadoop.hbase.mapreduce.TableReducer;
    import org.apache.hadoop.hbase.util.Bytes;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.NullWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

    /**
     * Imports data into HBase with MapReduce.
     *
     * @author chenjie
     */
    public class HadoopConnectTest4 {

        public static class HBaseHFileMapper extends Mapper<LongWritable, Text, Text, Text> {
            @Override
            protected void map(LongWritable key, Text value, Context context)
                    throws IOException, InterruptedException {
                // Convert the Text value to a String, e.g.
                // 1080x1920|Vivo X9|a8622b8a26ae679f0be82135f6529902|中國|湖南|益陽|wifi|2017-12-31 11:55:58
                String valueStr = value.toString();
                System.out.println("----map----" + valueStr);
                String[] values = valueStr.split("\\|"); // split on |
                if (values.length < 8) {
                    return; // skip records that do not have enough fields
                }
                String userID = values[2];        // user: a8622b8a26ae679f0be82135f6529902
                String time = values[7];          // timestamp: 2017-12-31 11:55:58
                String ymd = time.split(" ")[0];  // date: 2017-12-31
                String rowkey = userID + "-" + ymd; // userID-date is the HBase rowkey
                // Let failures propagate instead of swallowing them with printStackTrace()
                context.write(new Text(rowkey), new Text(valueStr));
            }
        }

        /**
         * Note that this is a TableReducer.
         */
        public static class HBaseHFileReducer2 extends TableReducer<Text, Text, NullWritable> {
            @Override
            protected void reduce(Text key, Iterable<Text> texts, Context context)
                    throws IOException, InterruptedException {
                String rowkey = key.toString();
                System.out.println("--reduce:" + rowkey);
                Put p1 = new Put(Bytes.toBytes(rowkey)); // one Put per rowkey
                for (Text value : texts) {
                    String valueStr = value.toString();
                    System.out.println("\t" + valueStr);
                    String[] values = valueStr.split("\\|"); // split on |
                    if (values.length < 8) {
                        continue; // skip only the bad record; "return" would drop the whole row
                    }
                    String time = values[7];                  // timestamp: 2017-12-31 11:55:58
                    String hms = time.split(" ")[1] + ":000"; // qualifier: 11:55:58:000
                    // Add one column to the Put: family d, qualifier = time of day, value = raw line.
                    // Bytes.toBytes encodes as UTF-8 regardless of the platform default charset.
                    p1.addColumn(Bytes.toBytes("d"), Bytes.toBytes(hms), Bytes.toBytes(valueStr));
                }
                if (!p1.isEmpty()) { // an empty Put is rejected by HBase
                    context.write(NullWritable.get(), p1);
                }
            }
        }

        private static final String HDFS = "hdfs://192.168.1.112:9000";        // HDFS address
        private static final String INPATH = HDFS + "/tmp/clientdata10_3.txt"; // input file path

        public int run() throws IOException, ClassNotFoundException, InterruptedException {
            // The Configuration object holds the settings for the job
            Configuration conf = HBaseConfiguration.create();
            conf.set("hbase.zookeeper.quorum", "pc2:2181,pc3:2181,pc4:2181"); // ZooKeeper quorum
            conf.set("hbase.rootdir", "hdfs://pc2:9000/hbase");               // HBase root directory
            conf.set("zookeeper.znode.parent", "/hbase");

            Job job = Job.getInstance(conf, "HFile bulk load test"); // create the job
            job.setJarByClass(HadoopConnectTest4.class);             // driver class

            // Mapper class; the map output key and value are both Text
            job.setMapperClass(HBaseHFileMapper.class);
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(Text.class);

            // TableMapReduceUtil is a helper provided by HBase that configures the job to
            // write to an HBase table: target table clientdata_test5, reducer HBaseHFileReducer2.
            TableMapReduceUtil.initTableReducerJob("clientdata_test5", HBaseHFileReducer2.class, job);
            job.setNumReduceTasks(1); // use a single reduce task

            FileInputFormat.addInputPath(job, new Path(INPATH)); // input file
            return job.waitForCompletion(true) ? 0 : -1;
        }

        // Entry point added for convenience; the original listing only defined run().
        public static void main(String[] args) throws Exception {
            System.exit(new HadoopConnectTest4().run());
        }
    }

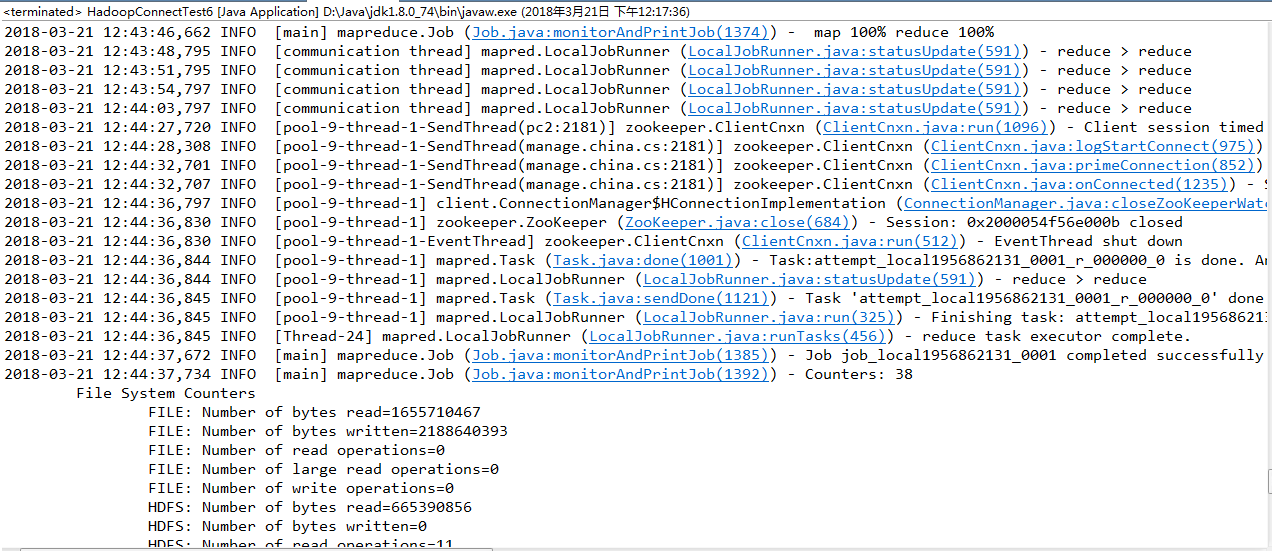

Output:

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/D:/SourceCode/MyEclipse2014Workspace/HDFS2HBaseByHadoopMR/hadooplibs/slf4j-log4j12-1.7.7.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/D:/SourceCode/MyEclipse2014Workspace/HDFS2HBaseByHadoopMR/hbaselibs/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

2018-03-16 22:43:42,360 INFO [main] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(1129)) - session.id is deprecated. Instead, use dfs.metrics.session-id

2018-03-16 22:43:42,366 INFO [main] jvm.JvmMetrics (JvmMetrics.java:init(76)) - Initializing JVM Metrics with processName=JobTracker, sessionId=

2018-03-16 22:43:42,437 INFO [main] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(1129)) - io.bytes.per.checksum is deprecated. Instead, use dfs.bytes-per-checksum

2018-03-16 22:43:42,717 WARN [main] mapreduce.JobResourceUploader (JobResourceUploader.java:uploadFiles(64)) - Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

2018-03-16 22:43:42,729 WARN [main] mapreduce.JobResourceUploader (JobResourceUploader.java:uploadFiles(171)) - No job jar file set. User classes may not be found. See Job or Job#setJar(String).

2018-03-16 22:43:44,094 INFO [main] input.FileInputFormat (FileInputFormat.java:listStatus(281)) - Total input paths to process : 1

2018-03-16 22:43:44,181 INFO [main] mapreduce.JobSubmitter (JobSubmitter.java:submitJobInternal(199)) - number of splits:1

2018-03-16 22:43:44,192 INFO [main] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(1129)) - io.bytes.per.checksum is deprecated. Instead, use dfs.bytes-per-checksum

2018-03-16 22:43:44,355 INFO [main] mapreduce.JobSubmitter (JobSubmitter.java:printTokens(288)) - Submitting tokens for job: job_local597129759_0001

2018-03-16 22:43:46,410 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:setup(165)) - Localized file:/D:/SourceCode/MyEclipse2014Workspace/HDFS2HBaseByHadoopMR/hbaselibs/zookeeper-3.4.6.jar as file:/tmp/hadoop-Administrator/mapred/local/1521211424485/zookeeper-3.4.6.jar

2018-03-16 22:43:46,411 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:setup(165)) - Localized file:/D:/SourceCode/MyEclipse2014Workspace/HDFS2HBaseByHadoopMR/hadooplibs/hadoop-mapreduce-client-core-2.6.5.jar as file:/tmp/hadoop-Administrator/mapred/local/1521211424486/hadoop-mapreduce-client-core-2.6.5.jar

2018-03-16 22:43:46,412 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:setup(165)) - Localized file:/D:/SourceCode/MyEclipse2014Workspace/HDFS2HBaseByHadoopMR/hbaselibs/hbase-client-1.2.6.jar as file:/tmp/hadoop-Administrator/mapred/local/1521211424487/hbase-client-1.2.6.jar

2018-03-16 22:43:46,413 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:setup(165)) - Localized file:/D:/SourceCode/MyEclipse2014Workspace/HDFS2HBaseByHadoopMR/hadooplibs/protobuf-java-2.5.0.jar as file:/tmp/hadoop-Administrator/mapred/local/1521211424488/protobuf-java-2.5.0.jar

2018-03-16 22:43:46,416 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:setup(165)) - Localized file:/D:/SourceCode/MyEclipse2014Workspace/HDFS2HBaseByHadoopMR/hbaselibs/htrace-core-3.1.0-incubating.jar as file:/tmp/hadoop-Administrator/mapred/local/1521211424489/htrace-core-3.1.0-incubating.jar

2018-03-16 22:43:46,518 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:setup(165)) - Localized file:/D:/SourceCode/MyEclipse2014Workspace/HDFS2HBaseByHadoopMR/hbaselibs/metrics-core-2.2.0.jar as file:/tmp/hadoop-Administrator/mapred/local/1521211424490/metrics-core-2.2.0.jar

2018-03-16 22:43:46,687 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:setup(165)) - Localized file:/D:/SourceCode/MyEclipse2014Workspace/HDFS2HBaseByHadoopMR/hadooplibs/hadoop-common-2.6.5.jar as file:/tmp/hadoop-Administrator/mapred/local/1521211424491/hadoop-common-2.6.5.jar

2018-03-16 22:43:46,691 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:setup(165)) - Localized file:/D:/SourceCode/MyEclipse2014Workspace/HDFS2HBaseByHadoopMR/hbaselibs/hbase-common-1.2.6.jar as file:/tmp/hadoop-Administrator/mapred/local/1521211424492/hbase-common-1.2.6.jar

2018-03-16 22:43:46,692 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:setup(165)) - Localized file:/D:/SourceCode/MyEclipse2014Workspace/HDFS2HBaseByHadoopMR/hbaselibs/hbase-hadoop-compat-1.2.6.jar as file:/tmp/hadoop-Administrator/mapred/local/1521211424493/hbase-hadoop-compat-1.2.6.jar

2018-03-16 22:43:46,694 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:setup(165)) - Localized file:/D:/SourceCode/MyEclipse2014Workspace/HDFS2HBaseByHadoopMR/hbaselibs/netty-all-4.0.23.Final.jar as file:/tmp/hadoop-Administrator/mapred/local/1521211424494/netty-all-4.0.23.Final.jar

2018-03-16 22:43:46,696 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:setup(165)) - Localized file:/D:/SourceCode/MyEclipse2014Workspace/HDFS2HBaseByHadoopMR/hbaselibs/hbase-prefix-tree-1.2.6.jar as file:/tmp/hadoop-Administrator/mapred/local/1521211424495/hbase-prefix-tree-1.2.6.jar

2018-03-16 22:43:46,698 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:setup(165)) - Localized file:/D:/SourceCode/MyEclipse2014Workspace/HDFS2HBaseByHadoopMR/hbaselibs/hbase-protocol-1.2.6.jar as file:/tmp/hadoop-Administrator/mapred/local/1521211424496/hbase-protocol-1.2.6.jar

2018-03-16 22:43:46,707 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:setup(165)) - Localized file:/D:/SourceCode/MyEclipse2014Workspace/HDFS2HBaseByHadoopMR/hbaselibs/hbase-server-1.2.6.jar as file:/tmp/hadoop-Administrator/mapred/local/1521211424497/hbase-server-1.2.6.jar

2018-03-16 22:43:46,708 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:setup(165)) - Localized file:/D:/SourceCode/MyEclipse2014Workspace/HDFS2HBaseByHadoopMR/hadooplibs/guava-11.0.2.jar as file:/tmp/hadoop-Administrator/mapred/local/1521211424498/guava-11.0.2.jar

2018-03-16 22:43:46,795 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:makeClassLoader(234)) - file:/D:/tmp/hadoop-Administrator/mapred/local/1521211424485/zookeeper-3.4.6.jar

2018-03-16 22:43:46,796 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:makeClassLoader(234)) - file:/D:/tmp/hadoop-Administrator/mapred/local/1521211424486/hadoop-mapreduce-client-core-2.6.5.jar

2018-03-16 22:43:46,796 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:makeClassLoader(234)) - file:/D:/tmp/hadoop-Administrator/mapred/local/1521211424487/hbase-client-1.2.6.jar

2018-03-16 22:43:46,796 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:makeClassLoader(234)) - file:/D:/tmp/hadoop-Administrator/mapred/local/1521211424488/protobuf-java-2.5.0.jar

2018-03-16 22:43:46,796 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:makeClassLoader(234)) - file:/D:/tmp/hadoop-Administrator/mapred/local/1521211424489/htrace-core-3.1.0-incubating.jar

2018-03-16 22:43:46,796 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:makeClassLoader(234)) - file:/D:/tmp/hadoop-Administrator/mapred/local/1521211424490/metrics-core-2.2.0.jar

2018-03-16 22:43:46,797 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:makeClassLoader(234)) - file:/D:/tmp/hadoop-Administrator/mapred/local/1521211424491/hadoop-common-2.6.5.jar

2018-03-16 22:43:46,797 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:makeClassLoader(234)) - file:/D:/tmp/hadoop-Administrator/mapred/local/1521211424492/hbase-common-1.2.6.jar

2018-03-16 22:43:46,797 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:makeClassLoader(234)) - file:/D:/tmp/hadoop-Administrator/mapred/local/1521211424493/hbase-hadoop-compat-1.2.6.jar

2018-03-16 22:43:46,799 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:makeClassLoader(234)) - file:/D:/tmp/hadoop-Administrator/mapred/local/1521211424494/netty-all-4.0.23.Final.jar

2018-03-16 22:43:46,799 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:makeClassLoader(234)) - file:/D:/tmp/hadoop-Administrator/mapred/local/1521211424495/hbase-prefix-tree-1.2.6.jar

2018-03-16 22:43:46,799 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:makeClassLoader(234)) - file:/D:/tmp/hadoop-Administrator/mapred/local/1521211424496/hbase-protocol-1.2.6.jar

2018-03-16 22:43:46,799 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:makeClassLoader(234)) - file:/D:/tmp/hadoop-Administrator/mapred/local/1521211424497/hbase-server-1.2.6.jar

2018-03-16 22:43:46,800 INFO [main] mapred.LocalDistributedCacheManager (LocalDistributedCacheManager.java:makeClassLoader(234)) - file:/D:/tmp/hadoop-Administrator/mapred/local/1521211424498/guava-11.0.2.jar

2018-03-16 22:43:46,807 INFO [main] mapreduce.Job (Job.java:submit(1301)) - The url to track the job: http://localhost:8080/

2018-03-16 22:43:46,808 INFO [main] mapreduce.Job (Job.java:monitorAndPrintJob(1346)) - Running job: job_local597129759_0001

2018-03-16 22:43:46,818 INFO [Thread-24] mapred.LocalJobRunner (LocalJobRunner.java:createOutputCommitter(471)) - OutputCommitter set in config null

2018-03-16 22:43:46,896 INFO [Thread-24] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(1129)) - io.bytes.per.checksum is deprecated. Instead, use dfs.bytes-per-checksum

2018-03-16 22:43:46,898 INFO [Thread-24] mapred.LocalJobRunner (LocalJobRunner.java:createOutputCommitter(489)) - OutputCommitter is org.apache.hadoop.hbase.mapreduce.TableOutputCommitter

2018-03-16 22:43:46,984 INFO [Thread-24] mapred.LocalJobRunner (LocalJobRunner.java:runTasks(448)) - Waiting for map tasks

2018-03-16 22:43:46,987 INFO [LocalJobRunner Map Task Executor #0] mapred.LocalJobRunner (LocalJobRunner.java:run(224)) - Starting task: attempt_local597129759_0001_m_000000_0

2018-03-16 22:43:47,079 INFO [LocalJobRunner Map Task Executor #0] util.ProcfsBasedProcessTree (ProcfsBasedProcessTree.java:isAvailable(181)) - ProcfsBasedProcessTree currently is supported only on Linux.

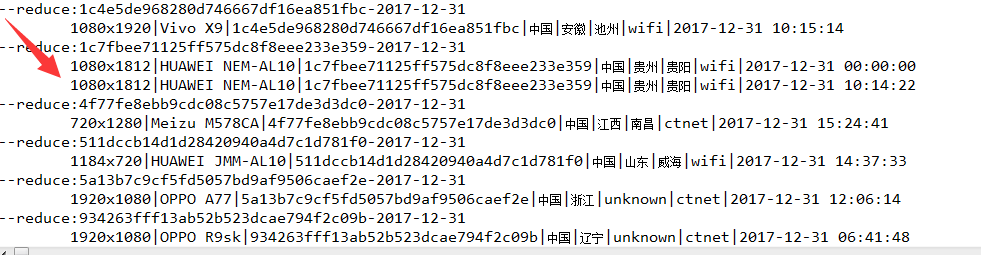

2018-03-16 22:43:47,172 INFO [LocalJobRunner Map Task Executor #0] mapred.Task (Task.java:initialize(587)) - Using ResourceCalculatorProcessTree :

Note that records with the same rowkey were grouped into the same reduce call.

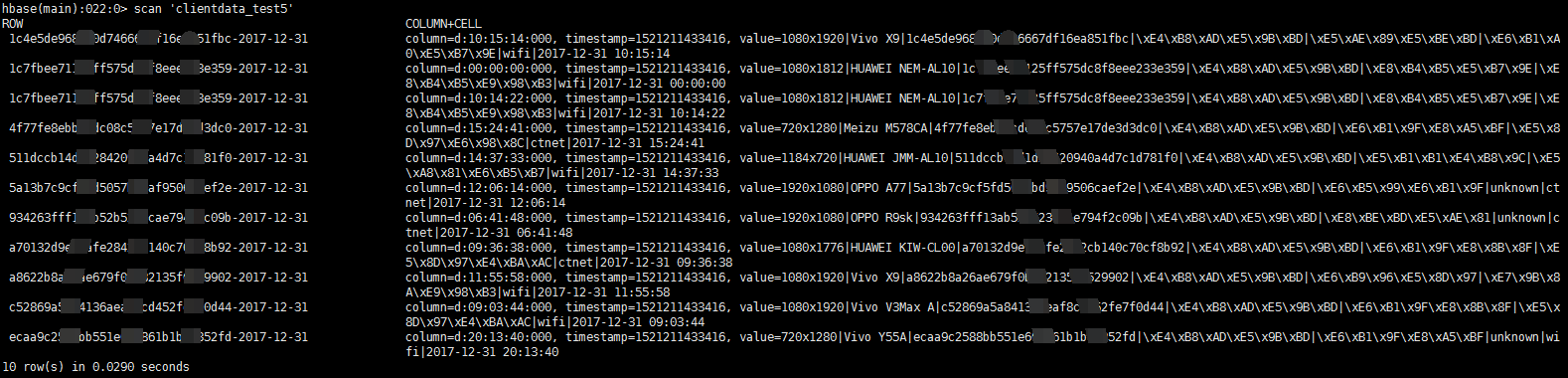

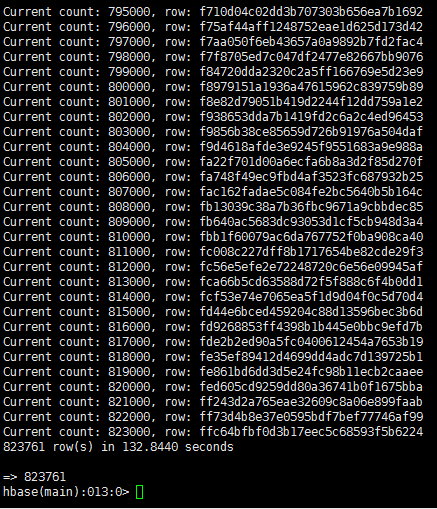

Test with a large amount of data:
