NLP--gensim中doc2vec句向量例項

阿新 • • 發佈:2019-01-24

Doc2vec又叫Paragraph Vector是Tomas Mikolov基於word2vec模型提出的,其具有一些優點,比如不用固定句子長度,接受不同長度的句子做訓練樣本,Doc2vec是一個無監督學習演算法,可以用於生成句向量,段落向量和文件向量。生成的向量可以用於文字分類和語義分析.

下面是一個生成句向量,並檢視效果的程式(適用小資料量):

#coding:utf-8

import jieba

import sys

import gensim

import sklearn

import numpy as np

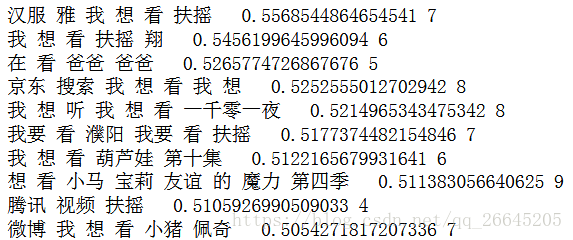

from gensim.models.doc2vec import 扶搖相似度為:

如果需要大資料量的資料進行訓練,則訓練過程中只需要TaggedLineDocument(inp)

具體例子如下:

import multiprocessing

from gensim.models.doc2vec import Doc2Vec

from gensim.models.doc2vec import TaggedLineDocument

import numpy as np

inp='corpusSegDone.txt'

sents = TaggedLineDocument(inp) #直接對文字標號 (大資料適用,但是這樣後續不能檢視對應的內容,需要其他方法查詢)

model = Doc2Vec(sents, size = 200, window = 8, alpha = 0.015)

outp1='docmodel'

model.save(outp1)

model=Doc2Vec.load(outp1)

sims = model.docvecs.most_similar(0)#0代表第一個句子或段落

#如果有兩個句子

doc_words1=['驗證','失敗','驗證碼','未','收到']

doc_words2=['今天','獎勵','有','哪些','呢']

#轉換為向量:

invec1 = model.infer_vector(doc_words1, alpha=0.1, min_alpha=0.0001, steps=5)

invec2 = model.infer_vector(doc_words2, alpha=0.1, min_alpha=0.0001, steps=5)

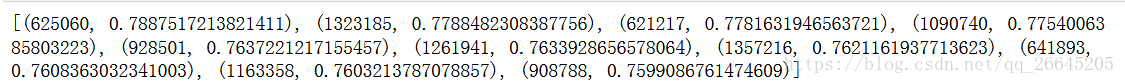

sims = model.docvecs.most_similar([invec1])#計算訓練模型中與句子1相似的內容

print (sims)

print(model.docvecs.similarity(0,1086620))#計算句子的相似度(0和1086620為句子的標號)

#列印結果相似度位: 0.9385169567251749

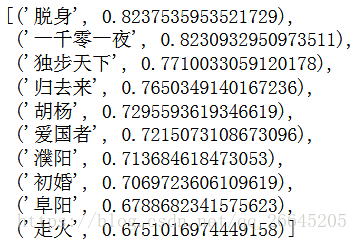

sims列印結果: