Hadoop error: exitCode: 1 due to: Exception from container-launch.

阿新 • Published: 2019-01-26

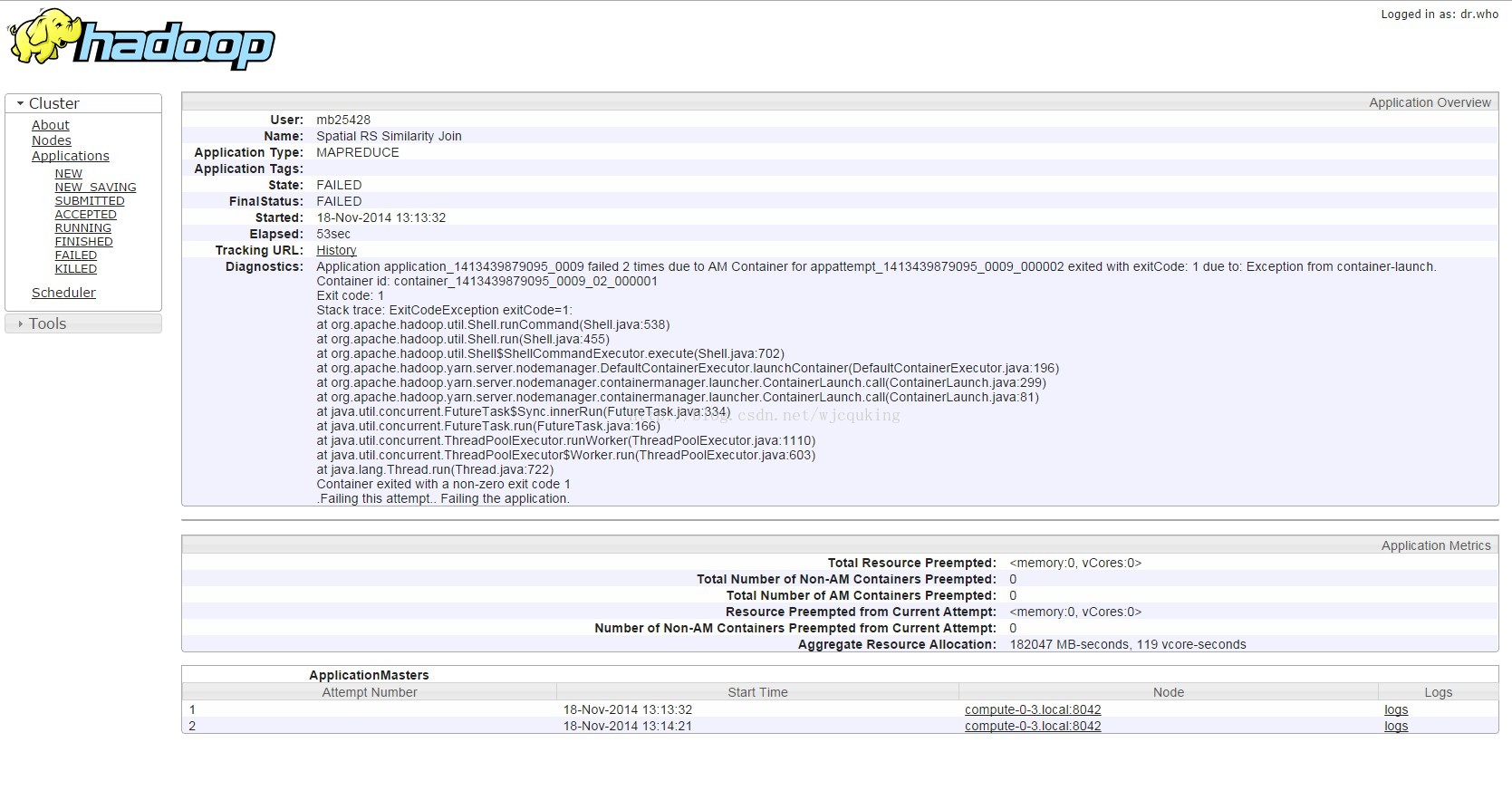

1. Running a Hadoop program on the cluster fails.

2. The error output is:

The Start time is 1416232445259

14/11/17 21:54:06 INFO client.RMProxy: Connecting to ResourceManager at fireslate.cis.umac.mo/10.119.176.10:8032

14/11/17 21:54:06 WARN mapreduce.JobSubmitter: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

14/11/17 21:54:07 INFO input.FileInputFormat: Total input paths to process : 2

14/11/17 21:54:07 INFO mapreduce.JobSubmitter: number of splits:2

14/11/17 21:54:07 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1413439879095_0006

14/11/17 21:54:08 INFO impl.YarnClientImpl: Submitted application application_1413439879095_0006

14/11/17 21:54:08 INFO mapreduce.Job: The url to track the job: http://fireslate.cis.umac.mo:8088/proxy/application_1413439879095_0006/

14/11/17 21:54:08 INFO mapreduce.Job: Running job: job_1413439879095_0006

14/11/17 21:54:15 INFO mapreduce.Job: Job job_1413439879095_0006 running in uber mode : false

14/11/17 21:54:15 INFO mapreduce.Job: map 0% reduce 0%

14/11/17 21:54:23 INFO mapreduce.Job: map 50% reduce 0%

14/11/17 21:54:27 INFO mapreduce.Job: map 76% reduce 0%

14/11/17 21:54:29 INFO mapreduce.Job: map 100% reduce 0%

14/11/17 21:54:32 INFO mapreduce.Job: map 100% reduce 17%

14/11/17 21:54:33 INFO mapreduce.Job: map 100% reduce 33%

14/11/17 21:54:34 INFO mapreduce.Job: map 100% reduce 67%

14/11/17 21:54:35 INFO mapreduce.Job: map 100% reduce 83%

14/11/17 21:54:36 INFO mapreduce.Job: map 100% reduce 100%

14/11/17 21:55:01 INFO ipc.Client: Retrying connect to server: compute-0-4.local/10.1.10.250:39872. Already tried 0 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=3, sleepTime=1000 MILLISECONDS)

14/11/17 21:55:02 INFO ipc.Client: Retrying connect to server: compute-0-4.local/10.1.10.250:39872. Already tried 1 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=3, sleepTime=1000 MILLISECONDS)

14/11/17 21:55:03 INFO ipc.Client: Retrying connect to server: compute-0-4.local/10.1.10.250:39872. Already tried 2 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=3, sleepTime=1000 MILLISECONDS)

14/11/17 21:55:07 INFO mapreduce.Job: map 0% reduce 0%

14/11/17 21:55:07 INFO mapreduce.Job: Job job_1413439879095_0006 failed with state FAILED due to: Application application_1413439879095_0006 failed 2 times due to AM Container for appattempt_1413439879095_0006_000002 exited with exitCode: 1 due to: Exception from container-launch.

Container id: container_1413439879095_0006_02_000001

Exit code: 1

Stack trace: ExitCodeException exitCode=1:

at org.apache.hadoop.util.Shell.runCommand(Shell.java:538)

at org.apache.hadoop.util.Shell.run(Shell.java:455)

at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:702)

at org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor.launchContainer(DefaultContainerExecutor.java:196)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:299)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:81)

at java.util.concurrent.FutureTask$Sync.innerRun(FutureTask.java:334)

at java.util.concurrent.FutureTask.run(FutureTask.java:166)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1110)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:603)

at java.lang.Thread.run(Thread.java:722)

Container exited with a non-zero exit code 1

.Failing this attempt.. Failing the application.

14/11/17 21:55:07 INFO mapreduce.Job: Counters: 0

Following the advice in http://blog.csdn.net/xichenguan/article/details/38796541, I commented out the last entry in yarn-site.xml, yarn.application.classpath. After that, the error output changed to:

The Start time is 1416273967065

14/11/18 09:26:08 INFO client.RMProxy: Connecting to ResourceManager at fireslate.cis.umac.mo/10.119.176.10:8032

14/11/18 09:26:08 WARN mapreduce.JobSubmitter: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

14/11/18 09:26:09 INFO input.FileInputFormat: Total input paths to process : 2

14/11/18 09:26:09 INFO mapreduce.JobSubmitter: number of splits:2

14/11/18 09:26:09 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1413439879095_0007

14/11/18 09:26:10 INFO impl.YarnClientImpl: Submitted application application_1413439879095_0007

14/11/18 09:26:10 INFO mapreduce.Job: The url to track the job: http://fireslate.cis.umac.mo:8088/proxy/application_1413439879095_0007/

14/11/18 09:26:10 INFO mapreduce.Job: Running job: job_1413439879095_0007

14/11/18 09:26:13 INFO mapreduce.Job: Job job_1413439879095_0007 running in uber mode : false

14/11/18 09:26:13 INFO mapreduce.Job: map 0% reduce 0%

14/11/18 09:26:13 INFO mapreduce.Job: Job job_1413439879095_0007 failed with state FAILED due to: Application application_1413439879095_0007 failed 2 times due to AM Container for appattempt_1413439879095_0007_000002 exited with exitCode: 1 due to: Exception from container-launch.

Container id: container_1413439879095_0007_02_000001

Exit code: 1

Stack trace: ExitCodeException exitCode=1:

at org.apache.hadoop.util.Shell.runCommand(Shell.java:538)

at org.apache.hadoop.util.Shell.run(Shell.java:455)

at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:702)

at org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor.launchContainer(DefaultContainerExecutor.java:196)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:299)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:81)

at java.util.concurrent.FutureTask$Sync.innerRun(FutureTask.java:334)

at java.util.concurrent.FutureTask.run(FutureTask.java:166)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1110)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:603)

at java.lang.Thread.run(Thread.java:722)

Container exited with a non-zero exit code 1

.Failing this attempt.. Failing the application.

14/11/18 09:26:13 INFO mapreduce.Job: Counters: 0

So that was not the cause at all; this fix does not apply to this problem.
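One way to see that the two runs fail at different stages, despite ending with the same message, is to compare how far each one got. The following is a minimal sketch that assumes the client output has been saved as text; `max_progress` is a hypothetical helper written for this post, not part of Hadoop, and the sample strings are excerpts of the two outputs above.

```python
import re

# Matches client progress lines such as
# "14/11/17 21:54:32 INFO mapreduce.Job: map 100% reduce 17%".
PROGRESS = re.compile(r"mapreduce\.Job:\s+map (\d+)% reduce (\d+)%")


def max_progress(client_output):
    """Return the highest (map %, reduce %) pair reported in the client output."""
    best = (0, 0)
    for match in PROGRESS.finditer(client_output):
        best = max(best, (int(match.group(1)), int(match.group(2))))
    return best


# Excerpts of the two failing runs shown in this post.
first_run = """\
14/11/17 21:54:15 INFO mapreduce.Job: map 0% reduce 0%
14/11/17 21:54:29 INFO mapreduce.Job: map 100% reduce 0%
14/11/17 21:54:36 INFO mapreduce.Job: map 100% reduce 100%
14/11/17 21:55:07 INFO mapreduce.Job: map 0% reduce 0%
"""
second_run = """\
14/11/18 09:26:13 INFO mapreduce.Job: map 0% reduce 0%
"""

print(max_progress(first_run))   # (100, 100)
print(max_progress(second_run))  # (0, 0)
```

The first run completed all map and reduce work before the application failed, while the run with yarn.application.classpath commented out never made any progress.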

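Open the tracking URL printed in the output.

I wanted to read the log files to find out what was wrong. Error messages like this one look alike, but the underlying causes differ from case to case, and only the logs show where things actually went wrong.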
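When the web page is not reachable, the same logs can often be fetched on a cluster node with the `yarn logs -applicationId` command. A minimal sketch, assuming log aggregation is enabled on the cluster (this post does not say whether it was): the helper names `application_id` and `yarn_logs_command` are hypothetical, and the code only builds the command without running it.

```python
import re

# YARN application IDs look like application_<cluster timestamp>_<sequence>.
APP_ID = re.compile(r"application_\d+_\d+")


def application_id(client_output):
    """Return the first YARN application ID found in the client output, or None."""
    match = APP_ID.search(client_output)
    return match.group(0) if match else None


def yarn_logs_command(app_id):
    """Build the argument list for fetching aggregated logs of one application."""
    return ["yarn", "logs", "-applicationId", app_id]


# One line from the failing run shown in this post.
sample = (
    "14/11/17 21:54:08 INFO impl.YarnClientImpl: "
    "Submitted application application_1413439879095_0006"
)

app_id = application_id(sample)
print(" ".join(yarn_logs_command(app_id)))
```

On a machine with the Hadoop client installed, the returned list can be passed to `subprocess.run` to capture the container logs.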

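The page could not be opened because every address in it is relative to the NameNode, that is, to the server. Those addresses cannot be reached from a local machine, and replacing them with the IP of the corresponding machine does not help either. The workaround is to install Xming locally and pair it with SSH Secure Shell, so that a browser running on the server can be displayed locally.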

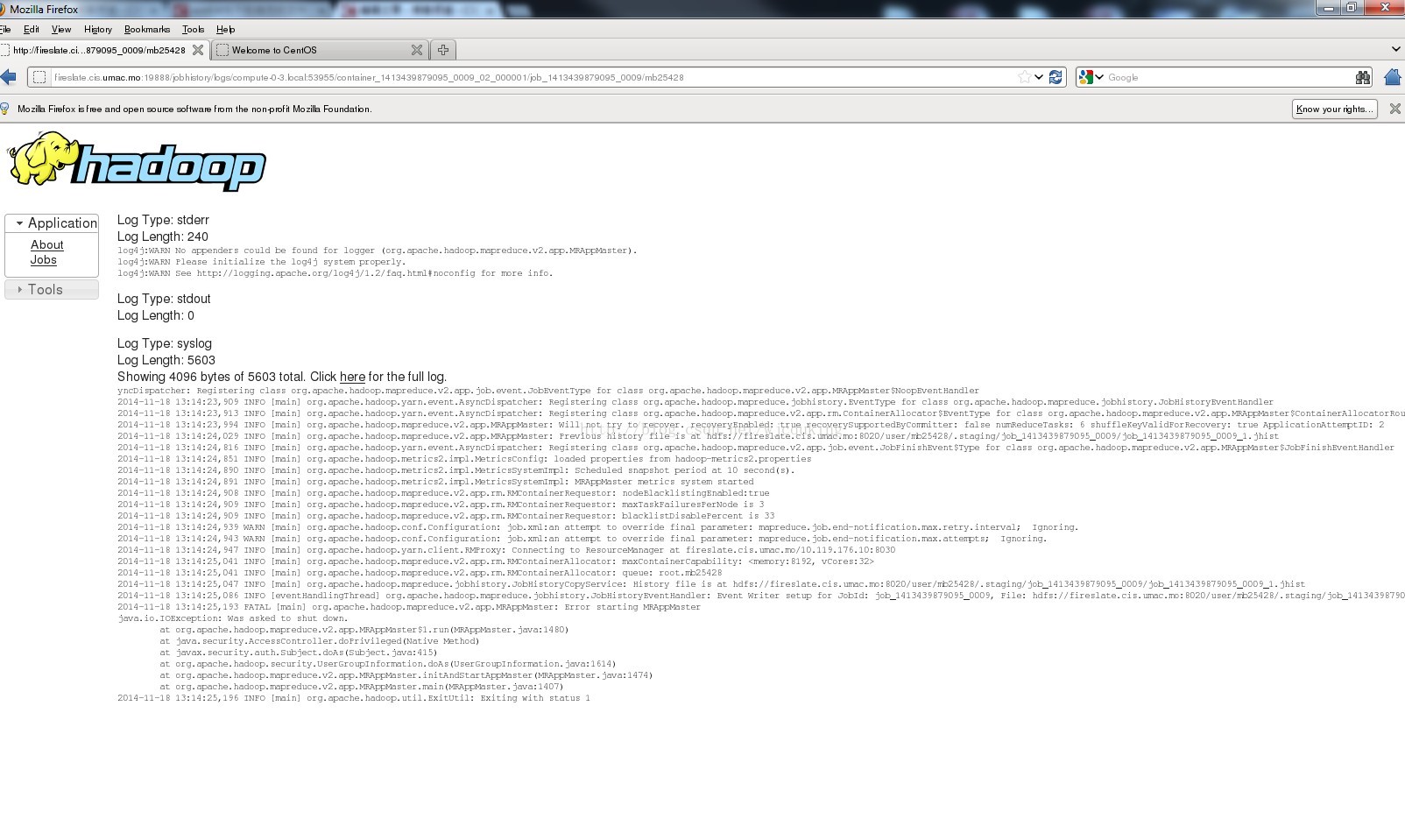
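Before setting up a remote browser, it can help to confirm from the local machine which addresses are actually unreachable. A minimal sketch using only Python's standard `socket` module; `can_connect` is a hypothetical helper, and the host and port in the usage lines are taken from the log output above.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and names that do not resolve.
        return False


if __name__ == "__main__":
    # ResourceManager web UI from the tracking URL in the log output.
    print(can_connect("fireslate.cis.umac.mo", 8088))
    # Cluster-internal node the client kept retrying in the first failing run.
    print(can_connect("compute-0-4.local", 39872))
```

A `False` result for a cluster-internal name such as compute-0-4.local would be consistent with the situation described here, where only machines inside the cluster can resolve and reach those addresses.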

Type firefox in the shell to open a browser that runs on the remote machine.

The error log can now be viewed from there.

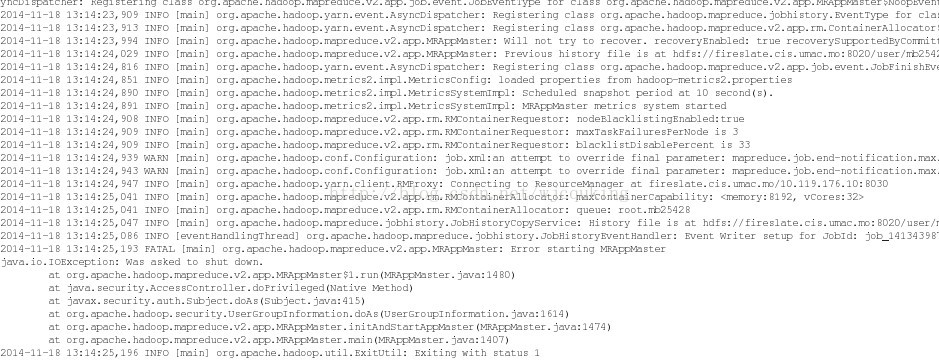

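The error content was as follows.

A senior labmate found that the problem was in the JobHistory server and was an I/O issue. The program had run fine in the original virtual machine but no longer worked afterwards, so it looked like a permission or configuration problem. It later turned out that the JobHistory address was wrong.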

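Reference: https://issues.apache.org/jira/browse/MAPREDUCE-5721

Both mapreduce.jobhistory.address and mapreduce.jobhistory.webapp.address were still set to the CDH default values. They need to be changed to the hostname; the original addresses pointing to the wrong place is probably what caused the failure. The configuration after the change: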

<?xml version="1.0"?>

<!--

Licensed to the Apache Software Foundation (ASF) under one or more

contributor license agreements. See the NOTICE file distributed with

this work for additional information regarding copyright ownership.

The ASF licenses this file to You under the Apache License, Version 2.0

(the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>fireslate.cis.umac.mo:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>fireslate.cis.umac.mo:19888</value>

</property>

<property>

<name>yarn.app.mapreduce.am.staging-dir</name>

<value>/user</value>

</property>

</configuration>
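A quick way to catch this kind of misconfiguration is to read the two properties back and flag any that are missing or not bound to a real hostname. This is a minimal sketch with hypothetical helper names. It assumes that the problematic values are a missing entry or the wildcard host 0.0.0.0, which is what Apache Hadoop's mapred-default.xml uses; this post only says "CDH default values" without listing them.

```python
import xml.etree.ElementTree as ET

JOBHISTORY_KEYS = (
    "mapreduce.jobhistory.address",
    "mapreduce.jobhistory.webapp.address",
)


def read_properties(xml_text):
    """Parse a Hadoop *-site.xml document into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}


def unresolved_jobhistory_addresses(props):
    """Return the JobHistory keys that are missing or use the wildcard host."""
    problems = []
    for key in JOBHISTORY_KEYS:
        value = props.get(key)
        # Assumption: an absent value or a 0.0.0.0 host means "still on defaults".
        if not value or value.split(":")[0] in ("", "0.0.0.0"):
            problems.append(key)
    return problems


# Trimmed version of the corrected configuration shown above.
fixed = """
<configuration>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>fireslate.cis.umac.mo:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>fireslate.cis.umac.mo:19888</value>
  </property>
</configuration>
"""

print(unresolved_jobhistory_addresses(read_properties(fixed)))  # []
```

For a file on disk, `ET.parse(path).getroot()` can replace `ET.fromstring`; the check itself stays the same.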

With my labmate's help, the error was finally fixed.

Output of the successful run:

The Start time is 1416289956560

14/11/18 13:52:37 INFO client.RMProxy: Connecting to ResourceManager at fireslate.cis.umac.mo/10.119.176.10:8032

14/11/18 13:52:38 WARN mapreduce.JobSubmitter: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

14/11/18 13:52:40 INFO input.FileInputFormat: Total input paths to process : 2

14/11/18 13:52:40 INFO mapreduce.JobSubmitter: number of splits:2

14/11/18 13:52:40 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1413439879095_0010

14/11/18 13:52:41 INFO impl.YarnClientImpl: Submitted application application_1413439879095_0010

14/11/18 13:52:41 INFO mapreduce.Job: The url to track the job: http://fireslate.cis.umac.mo:8088/proxy/application_1413439879095_0010/

14/11/18 13:52:41 INFO mapreduce.Job: Running job: job_1413439879095_0010

14/11/18 13:52:47 INFO mapreduce.Job: Job job_1413439879095_0010 running in uber mode : false

14/11/18 13:52:47 INFO mapreduce.Job: map 0% reduce 0%

14/11/18 13:52:54 INFO mapreduce.Job: map 50% reduce 0%

14/11/18 13:52:59 INFO mapreduce.Job: map 83% reduce 0%

14/11/18 13:53:00 INFO mapreduce.Job: map 100% reduce 0%

14/11/18 13:53:04 INFO mapreduce.Job: map 100% reduce 50%

14/11/18 13:53:05 INFO mapreduce.Job: map 100% reduce 96%

14/11/18 13:53:07 INFO mapreduce.Job: map 100% reduce 100%

14/11/18 13:53:08 INFO mapreduce.Job: Job job_1413439879095_0010 completed successfully

14/11/18 13:53:08 INFO mapreduce.Job: Counters: 50

File System Counters

FILE: Number of bytes read=71684520

FILE: Number of bytes written=144186954

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=1741779

HDFS: Number of bytes written=206411

HDFS: Number of read operations=24

HDFS: Number of large read operations=0

HDFS: Number of write operations=12

Job Counters

Killed reduce tasks=1

Launched map tasks=2

Launched reduce tasks=7

Data-local map tasks=2

Total time spent by all maps in occupied slots (ms)=15424

Total time spent by all reduces in occupied slots (ms)=55705

Total time spent by all map tasks (ms)=15424

Total time spent by all reduce tasks (ms)=55705

Total vcore-seconds taken by all map tasks=15424

Total vcore-seconds taken by all reduce tasks=55705

Total megabyte-seconds taken by all map tasks=15794176

Total megabyte-seconds taken by all reduce tasks=57041920

Map-Reduce Framework

Map input records=20000

Map output records=764114

Map output bytes=70060932

Map output materialized bytes=71684556

Input split bytes=265

Combine input records=0

Combine output records=0

Reduce input groups=60

Reduce shuffle bytes=71684556

Reduce input records=764114

Reduce output records=9240

Spilled Records=1528228

Shuffled Maps =12

Failed Shuffles=0

Merged Map outputs=12

GC time elapsed (ms)=1958

CPU time spent (ms)=65700

Physical memory (bytes) snapshot=1731551232

Virtual memory (bytes) snapshot=5733769216

Total committed heap usage (bytes)=1616838656

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=1741514

File Output Format Counters

Bytes Written=206411

End at 1416289988693

Phase One cost32.134 seconds.