Alibaba phone interview question: given 1 million URLs, how do you find the 100 that occur most often?

阿新 • Published: 2019-01-26

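This is a question the interviewer gave me during a phone interview at Alibaba (I had applied through an internal referral):

The first solution I thought of was to put the URLs into an STL map, sort by occurrence count (the second field of each pair), and return the results in sorted order:

Talk is cheap, so show your code. In the discussion thread, though, an industry veteran's SQL solution technically crushed mine. It is a lesson in what engineering is and how an engineer thinks: don't bury yourself in algorithms alone.

Discussion thread:

Discussion thread on scraping Baidu search results with Python:

Test data, scraped from Baidu with Python: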

    # -*- coding: utf-8 -*-
    # Python 2 script (uses urllib2 and the print statement).
    import urllib2
    import re

    # Connect to a URL.
    # One page of search results contains roughly 200 URLs.
    file_url = open('url.txt', 'ab+')
    # The search query; this could be set to a number so that each search returns different results.
    search = '123'
    url = "http://www.baidu.com/s?wd=" + search

    def setUrlToFile():
        website = urllib2.urlopen(url)
        # Read the HTML code.
        html = website.read()
        # Use re.findall to get all the links.
        links = re.findall('"((http|ftp)s?://.*?)"', html)
        for s in links:
            print s[0]
            # Keep only URLs shorter than 256 characters.
            if len(s[0]) < 256:
                file_url.write(s[0] + '\r\n')

    # Collect the test data.
    for i in range(0, 50):
        setUrlToFile()
    file_url.close()

    # Reopen the file and read it back to count the lines.
    file_url = open('url.txt', 'r')
    file_lines = len(file_url.readlines())
    print "there are %d url in %s" % (file_lines, file_url.name)
    file_url.close()

Method 1:

A C++ program reads url.txt and puts the URLs into a map.

The map<string, int> is then sorted by value to get the top 100.

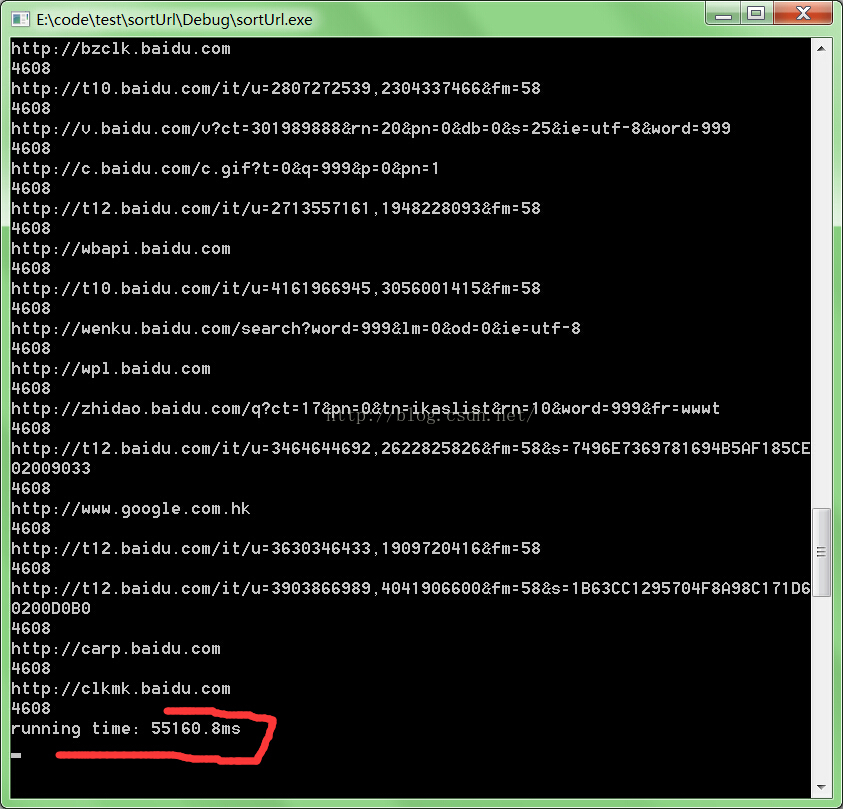

It runs in only about 55 s, which is still fairly quick. URL length was limited to fewer than 256 characters.

    // ComputeTime.h
    #pragma once
    // Class for timing a section of code; results are in milliseconds.
    #ifndef ComputeTime_h
    #define ComputeTime_h

    class ComputeTime
    {
    private:
        int Initialized;
        __int64 Frequency;
        __int64 BeginTime;

    public:
        bool Avaliable();
        double End();
        bool Begin();
        ComputeTime();
        virtual ~ComputeTime();
    };

    #endif

    // Implementation of ComputeTime
    #include "stdafx.h"
    #include "ComputeTime.h"
    #include <Windows.h>

    ComputeTime::ComputeTime()
    {
        Initialized = QueryPerformanceFrequency((LARGE_INTEGER *)&Frequency);
    }

    ComputeTime::~ComputeTime()
    {
    }

    bool ComputeTime::Begin()
    {
        if (!Initialized)
            return false;
        return QueryPerformanceCounter((LARGE_INTEGER *)&BeginTime) != 0;
    }

    double ComputeTime::End()
    {
        if (!Initialized)
            return 0;
        __int64 endtime;
        QueryPerformanceCounter((LARGE_INTEGER *)&endtime);
        __int64 elapsed = endtime - BeginTime;
        return ((double)elapsed / (double)Frequency) * 1000.0; // milliseconds
    }

    bool ComputeTime::Avaliable()
    {
        return Initialized != 0;
    }

    // sortUrl.cpp : defines the entry point for the console application.
    #include "stdafx.h"
    #include <cstdio>
    #include <cstdlib>
    #include <vector>
    #include <map>
    #include <fstream>
    #include <iostream>
    #include <string>
    #include <algorithm>
    #include "ComputeTime.h"

    using namespace std;

    map<string, int> urlfrequency;

    typedef pair<string, int> PAIR;

    struct CmpByValue
    {
        bool operator()(const PAIR& lhs, const PAIR& rhs) const
        {
            return lhs.second > rhs.second;
        }
    };

    void find_largeTH(const map<string, int>& urlfrequency)
    {
        // Copy the map's elements into a vector and sort by value, descending.
        vector<PAIR> url_quency_vec(urlfrequency.begin(), urlfrequency.end());
        sort(url_quency_vec.begin(), url_quency_vec.end(), CmpByValue());
        // Print at most 100 entries; there may be fewer distinct URLs.
        for (size_t i = 0; i < url_quency_vec.size() && i < 100; ++i)
        {
            cout << url_quency_vec[i].first << endl;
            cout << url_quency_vec[i].second << endl;
        }
    }

    // Build the frequency table: insert a new URL with count 1,
    // or increment the count if the URL is already present.
    void insertUrl(const string& url)
    {
        pair<map<string, int>::iterator, bool> Insert_Pair;
        Insert_Pair = urlfrequency.insert(map<string, int>::value_type(url, 1));
        if (Insert_Pair.second == false)
        {
            (Insert_Pair.first->second++);
        }
    }

    int _tmain(int argc, _TCHAR* argv[])
    {
        fstream URLfile;
        char buffer[1024];
        URLfile.open("url.txt", ios::in | ios::out | ios::binary);
        if (!URLfile.is_open())
        {
            cout << "Error opening file";
            exit(1);
        }
        else
        {
            cout << "open file success!" << endl;
        }
        ComputeTime cp;
        cp.Begin();
        // Test getline itself rather than eof(), so a failed read is not counted as a URL.
        while (URLfile.getline(buffer, 1024))
        {
            string temp(buffer);
            insertUrl(temp);
        }
        find_largeTH(urlfrequency);
        cout << "running time: " << cp.End() << "ms" << endl;
        getchar();
        return 0;
    }

Result: 55 s is not too bad and is acceptable; after all, this was the first solution that came to mind.
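Method 1 sorts every distinct URL even though only the top 100 are printed. As a rough sketch of one possible refinement (not something measured in this post), `std::partial_sort` can order just the first k positions. The helper name `topKByPartialSort` and its signature are made up for illustration and do not appear in the original code.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical helper (not from the original post): count each URL and
// return the k most frequent (url, count) pairs, highest count first.
std::vector<std::pair<std::string, int>> topKByPartialSort(
    const std::vector<std::string>& urls, std::size_t k) {
    std::map<std::string, int> freq;
    for (const std::string& url : urls) {
        ++freq[url];  // operator[] value-initializes a missing count to 0
    }

    std::vector<std::pair<std::string, int>> items(freq.begin(), freq.end());
    k = std::min(k, items.size());  // there may be fewer than k distinct URLs
    assert(k <= items.size());

    // Order only the first k positions by descending count;
    // the remaining elements are left in unspecified order.
    std::partial_sort(items.begin(), items.begin() + k, items.end(),
                      [](const std::pair<std::string, int>& a,
                         const std::pair<std::string, int>& b) {
                          return a.second > b.second;
                      });
    items.resize(k);
    return items;
}
```

Calling `topKByPartialSort(urls, 100)` on the lines read from url.txt would give the same kind of output as `find_largeTH`, and clamping k also covers inputs with fewer than 100 distinct URLs.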

Method 2:

A hash-code version. The only problem is that I did not know how to associate each hash with its URL:

    // urlFind.cpp : defines the entry point for the console application.
    #include "stdafx.h"
    #include <cstdio>
    #include <cstdlib>
    #include <vector>
    #include <map>
    #include <fstream>
    #include <iostream>
    #include <string>
    #include <algorithm>
    #include "ComputeTime.h"

    using namespace std;

    // Maps the BKDR hash of a URL to the number of times it was seen.
    map<unsigned int, int> urlhash;

    typedef pair<unsigned int, int> PAIR;

    struct CmpByValue
    {
        bool operator()(const PAIR& lhs, const PAIR& rhs) const
        {
            return lhs.second > rhs.second;
        }
    };

    void find_largeTH(const map<unsigned int, int>& urlhash)
    {
        // Copy the map's elements into a vector and sort by value, descending.
        vector<PAIR> url_quency_vec(urlhash.begin(), urlhash.end());
        sort(url_quency_vec.begin(), url_quency_vec.end(), CmpByValue());
        // Print at most 100 entries; there may be fewer distinct hashes.
        for (size_t i = 0; i < url_quency_vec.size() && i < 100; ++i)
        {
            cout << url_quency_vec[i].first << endl;
            cout << url_quency_vec[i].second << endl;
        }
    }

    // BKDR hash function
    unsigned int BKDRHash(const char *str)
    {
        unsigned int seed = 131; // 31 131 1313 13131 131313 etc.
        unsigned int hash = 0;
        while (*str)
        {
            hash = hash * seed + (*str++);
        }
        return (hash & 0x7FFFFFFF);
    }

    // Count a URL under its hash value.
    void insertUrl(const string& url)
    {
        unsigned int hashvalue = BKDRHash(url.c_str());
        pair<map<unsigned int, int>::iterator, bool> Insert_Pair;
        Insert_Pair = urlhash.insert(map<unsigned int, int>::value_type(hashvalue, 1));
        if (Insert_Pair.second == false)
        {
            (Insert_Pair.first->second++);
        }
    }

    int _tmain(int argc, _TCHAR* argv[])
    {
        fstream URLfile;
        char buffer[1024];
        URLfile.open("url.txt", ios::in | ios::out | ios::binary);
        if (!URLfile.is_open())
        {
            cout << "Error opening file";
            exit(1);
        }
        else
        {
            cout << "open file success!" << endl;
        }
        ComputeTime cp;
        cp.Begin();
        // Test getline itself rather than eof(), so a failed read is not counted as a URL.
        while (URLfile.getline(buffer, 1024))
        {
            string temp(buffer);
            insertUrl(temp);
        }
        find_largeTH(urlhash);
        cout << "running time: " << cp.End() << "ms" << endl;
        getchar();
        return 0;
    }

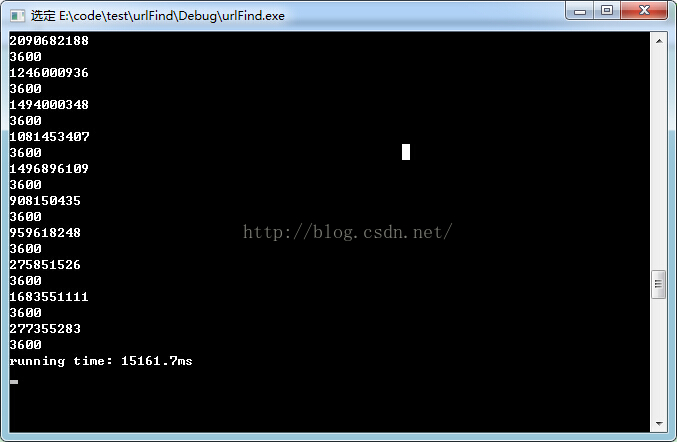

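Performance: about 15 seconds. The drawback is that the hash codes are not associated with their URLs, so the output shows hash values rather than URLs. Even so, the processing speed is already quite impressive.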
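One way the missing link between hash and URL might be restored is to store the URL next to the count under each hash key. The sketch below is only an illustration under that assumption: `UrlCount` and `insertUrlByHash` are hypothetical names not found in the original code, and the running time of this variant was not measured. It also makes visible a limitation of counting by hash alone: two different URLs with the same hash share one counter.

```cpp
#include <cassert>
#include <map>
#include <string>

// Same BKDR hash as in the Method 2 listing, taking a const pointer.
unsigned int BKDRHash(const char* str) {
    assert(str != nullptr);
    unsigned int seed = 131;
    unsigned int hash = 0;
    while (*str) {
        hash = hash * seed + (*str++);
    }
    return hash & 0x7FFFFFFF;
}

// Hypothetical record (not in the original post): keeps one URL next to
// the count stored under its hash.
struct UrlCount {
    std::string url;  // first URL seen with this hash
    int cnt;
};

// Hypothetical helper: count `url` in `table`, keyed by its BKDR hash.
// Returns false when a different URL already occupies the same hash
// (a collision); the count is still incremented, as in Method 2.
bool insertUrlByHash(std::map<unsigned int, UrlCount>& table,
                     const std::string& url) {
    unsigned int h = BKDRHash(url.c_str());
    auto result = table.emplace(h, UrlCount{url, 1});
    if (result.second) {
        return true;  // new hash: stored with count 1
    }
    ++result.first->second.cnt;
    return result.first->second.url == url;
}
```

With this layout, the sorting step could print `url` instead of the raw hash value. The trade-off is that every distinct URL string is kept in memory again, which gives up part of what the hash-only version saves.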

Method 3:

Next, the problem is solved with the STL hash container unordered_map and a priority queue (that is, a heap).

    // urlFind.cpp : defines the entry point for the console application.
    #include "stdafx.h"
    #include <cstdio>
    #include <cstdlib>
    #include <fstream>
    #include <iostream>
    #include <string>
    #include <unordered_map>
    #include <queue>
    #include "ComputeTime.h"

    using namespace std;

    struct info
    {
        string url;
        int cnt;
        // Order by count so that priority_queue keeps the most frequent URL on top.
        bool operator<(const info &r) const
        {
            return cnt < r.cnt;
        }
    };

    unordered_map<string, int> hash_url;
    priority_queue<info> pq;

    void find_largeTH(const unordered_map<string, int>& urlhash)
    {
        // Push every (url, count) entry into the max-heap.
        unordered_map<string, int>::const_iterator iter = urlhash.begin();
        info temp;
        for (; iter != urlhash.end(); ++iter)
        {
            temp.url = iter->first;
            temp.cnt = iter->second;
            pq.push(temp);
        }
        // Pop at most 100 entries; stop early if the heap runs out.
        for (int i = 0; i != 100 && !pq.empty(); ++i)
        {
            cout << pq.top().url << endl;
            cout << pq.top().cnt << endl;
            pq.pop();
        }
    }

    // Insert a new URL with count 1, or increment the count if it is already present.
    void insertUrl(const string& url)
    {
        pair<unordered_map<string, int>::iterator, bool> Insert_Pair;
        Insert_Pair = hash_url.insert(unordered_map<string, int>::value_type(url, 1));
        if (Insert_Pair.second == false)
        {
            (Insert_Pair.first->second++);
        }
    }

    int _tmain(int argc, _TCHAR* argv[])
    {
        fstream URLfile;
        char buffer[1024];
        URLfile.open("url.txt", ios::in | ios::out | ios::binary);
        if (!URLfile.is_open())
        {
            cout << "Error opening file";
            exit(1);
        }
        else
        {
            cout << "open file success!" << endl;
        }
        ComputeTime cp;
        cp.Begin();
        // Test getline itself rather than eof(), so a failed read is not counted as a URL.
        while (URLfile.getline(buffer, 1024))
        {
            string temp(buffer);
            insertUrl(temp);
        }
        find_largeTH(hash_url);
        cout << "running time: " << cp.End() << "ms" << endl;
        getchar();
        return 0;
    }

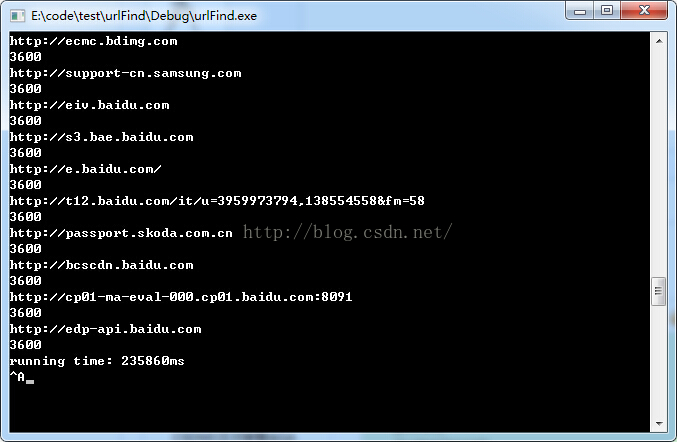

Among the algorithmic approaches, this is basically one of the better solutions. An interviewer would probably be pleased to hear it.
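The Method 3 listing pushes every distinct URL into the heap before popping the top 100. A common variation, sketched here as an assumption rather than as something tested in this post, keeps a min-heap of at most k entries, so the heap never grows beyond k elements. The names `Entry` and `topKWithBoundedHeap` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <queue>
#include <string>
#include <unordered_map>
#include <utility>
#include <vector>

// (count, url), so that std::greater compares by count first.
using Entry = std::pair<int, std::string>;

// Hypothetical helper (not from the original post): return the k most
// frequent entries of `freq`, highest count first, while holding at most
// k entries in the heap after each step.
std::vector<Entry> topKWithBoundedHeap(
    const std::unordered_map<std::string, int>& freq, std::size_t k) {
    // std::greater turns priority_queue into a min-heap: top() is the
    // smallest of the candidates kept so far.
    std::priority_queue<Entry, std::vector<Entry>, std::greater<Entry>> heap;
    for (const auto& kv : freq) {
        heap.emplace(kv.second, kv.first);
        if (heap.size() > k) {
            heap.pop();  // evict the current minimum
        }
        assert(heap.size() <= k);
    }

    // Popping yields ascending counts, so fill the result from the back.
    std::vector<Entry> result(heap.size());
    for (std::size_t i = result.size(); i-- > 0;) {
        result[i] = heap.top();
        heap.pop();
    }
    return result;
}
```

For U distinct URLs this does O(U log k) heap work with O(k) extra memory, compared with a heap that holds all U entries. With k = 100 and the `hash_url` table from the listing, the call would be `topKWithBoundedHeap(hash_url, 100)`.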

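Method 4: the running time was not measured, but technically it crushes the solutions above. A word of advice to young engineers: don't reinvent the wheel! Haha.

Using a database and SQL: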

    load data infile "d:/bigdata.txt" into table tb_url(url);

    SELECT
        url,
        count(url) AS show_count
    FROM
        tb_url
    GROUP BY url
    ORDER BY show_count DESC
    LIMIT 100;