FFmpeg4Android: Audio Decoding and Playback

阿新 • Published: 2019-01-27

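4.3 Audio Decoding

Audio decoding here means extracting the audio track from a video file, decoding it to a PCM file, and playing the PCM data with Android's AudioTrack class.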
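A raw PCM file carries no header, so its size and duration follow directly from the sample rate, the channel count, and the sample width. The sketch below illustrates that arithmetic; the function names are hypothetical, and the 44100 Hz, stereo, 16-bit values in the usage note are only illustrative assumptions, not properties of any particular input file.

```c
#include <stdint.h>

/* Bytes of raw, interleaved PCM produced per second of audio. */
int64_t pcm_bytes_per_second(int sample_rate, int channels, int bytes_per_sample) {
    return (int64_t)sample_rate * channels * bytes_per_sample;
}

/* Duration in milliseconds of a headerless PCM file of the given size.
 * Returns 0 when the format parameters are not usable. */
int64_t pcm_duration_ms(int64_t file_bytes, int sample_rate, int channels, int bytes_per_sample) {
    int64_t bytes_per_second = pcm_bytes_per_second(sample_rate, channels, bytes_per_sample);
    if (bytes_per_second <= 0) {
        return 0;
    }
    return file_bytes * 1000 / bytes_per_second;
}
```

For example, `pcm_bytes_per_second(44100, 2, 2)` gives 176400 bytes per second, so one minute of such audio takes roughly 10 MB on disk. This is worth keeping in mind when choosing where to write the output file.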

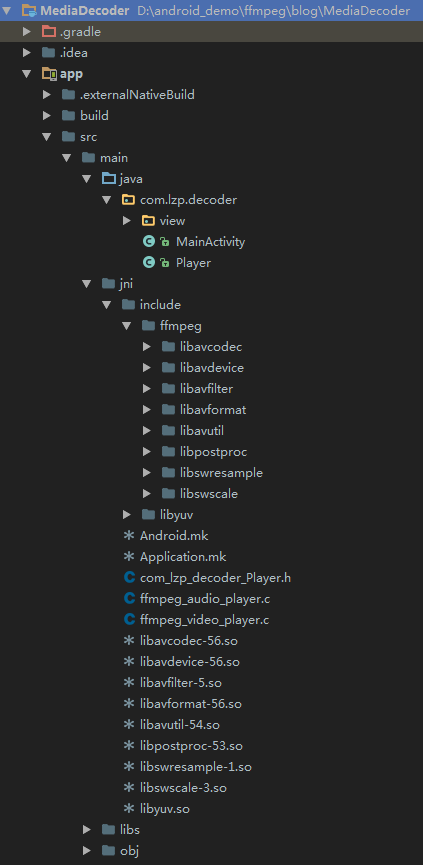

Create a new project, MedioPlayer, with the following directory structure:

Java-side code, MainActivity.java:

    package com.lzp.decoder;

    import java.io.File;

    import com.lzp.decoder.view.VideoView;

    import android.app.Activity;
    import android.os.Bundle;
    import android.os.Environment;

Java-side code, Player.java:

    package com.lzp.decoder;

    import android.media.AudioFormat;
    import android.media.AudioManager;
    import android.media.AudioTrack;
    import android.util.Log;
    import android.view.Surface;

    /**
     * Playback controller.
     */
    public class Player {

        // Decode video
        public native void render(String input, Surface surface);

        // Decode audio
        public native void sound(String input, String output);

        /**
         * Creates an AudioTrack object for playback. Called from native code.
         *
         * @param sampleRateInHz sample rate of the PCM data, in Hz
         * @param nb_channels number of output channels
         * @return an AudioTrack in streaming mode; the caller starts playback
         */
        public AudioTrack createAudioTrack(int sampleRateInHz, int nb_channels) {
            // Fixed output format: 16-bit PCM
            int audioFormat = AudioFormat.ENCODING_PCM_16BIT;
            Log.i("jason", "nb_channels:" + nb_channels);

            // Channel layout: mono for one channel, stereo for anything else
            int channelConfig;
            if (nb_channels == 1) {
                channelConfig = AudioFormat.CHANNEL_OUT_MONO;
            } else {
                channelConfig = AudioFormat.CHANNEL_OUT_STEREO;
            }

            int bufferSizeInBytes = AudioTrack.getMinBufferSize(sampleRateInHz, channelConfig, audioFormat);

            AudioTrack audioTrack = new AudioTrack(
                    AudioManager.STREAM_MUSIC,
                    sampleRateInHz, channelConfig,
                    audioFormat,
                    bufferSizeInBytes, AudioTrack.MODE_STREAM);

            // Playback is started and PCM is written from the native side:
            // audioTrack.play();
            // audioTrack.write(audioData, offsetInBytes, sizeInBytes);
            return audioTrack;
        }

        static {
            System.loadLibrary("avutil-54");
            System.loadLibrary("swresample-1");
            System.loadLibrary("avcodec-56");
            System.loadLibrary("avformat-56");
            System.loadLibrary("swscale-3");
            System.loadLibrary("postproc-53");
            System.loadLibrary("avfilter-5");
            System.loadLibrary("avdevice-56");
            System.loadLibrary("myffmpeg");
        }
    }

C-side decoding code, ffmpeg_audio_player.c:

    #include "com_lzp_decoder_Player.h"
    #include <stdio.h>
    #include <stdlib.h>
    #include <string.h>
    #include <unistd.h>
    #include <android/log.h>
    // Container formats (demuxing)
    #include "libavformat/avformat.h"
    // Decoding
    #include "libavcodec/avcodec.h"
    // Scaling
    #include "libswscale/swscale.h"
    // Resampling
    #include "libswresample/swresample.h"

    #define LOGI(FORMAT, ...) __android_log_print(ANDROID_LOG_INFO, "ffmpeg", FORMAT, ##__VA_ARGS__)
    #define LOGE(FORMAT, ...) __android_log_print(ANDROID_LOG_ERROR, "ffmpeg", FORMAT, ##__VA_ARGS__)

    // Size of the output buffer in bytes; parenthesized so it expands safely inside expressions
    #define MAX_AUDIO_FRME_SIZE (48000 * 4)

    JNIEXPORT void JNICALL Java_com_lzp_decoder_Player_sound
    (JNIEnv *env, jobject jthiz, jstring input_jstr, jstring output_jstr) {
        const char *input_cstr = (*env)->GetStringUTFChars(env, input_jstr, NULL);
        const char *output_cstr = (*env)->GetStringUTFChars(env, output_jstr, NULL);
        LOGI("%s", "sound");

        // Register all formats and codecs
        av_register_all();
        AVFormatContext *pFormatCtx = avformat_alloc_context();

        // Open the audio file
        if (avformat_open_input(&pFormatCtx, input_cstr, NULL, NULL) != 0) {
            LOGE("Cannot open audio file: %s\n", input_cstr);
            return;
        }

        // Read the stream information of the input file
        if (avformat_find_stream_info(pFormatCtx, NULL) < 0) {
            LOGE("%s", "Cannot read input stream information");
            return;
        }

        // Find the index of the first audio stream
        int i = 0, audio_stream_idx = -1;
        for (; i < pFormatCtx->nb_streams; i++) {
            if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_AUDIO) {
                audio_stream_idx = i;
                break;
            }
        }
        // Without this check, a file with no audio stream would index streams[-1]
        if (audio_stream_idx == -1) {
            LOGE("%s", "No audio stream found");
            return;
        }

        // Find the decoder
        AVCodecContext *codecCtx = pFormatCtx->streams[audio_stream_idx]->codec;
        AVCodec *codec = avcodec_find_decoder(codecCtx->codec_id);
        if (codec == NULL) {
            LOGE("%s", "Cannot find a decoder");
            return;
        }

        // Open the decoder
        if (avcodec_open2(codecCtx, codec, NULL) < 0) {
            LOGE("%s", "Cannot open the decoder");
            return;
        }

        // Compressed data
        AVPacket *packet = (AVPacket *)av_malloc(sizeof(AVPacket));
        // Decoded data
        AVFrame *frame = av_frame_alloc();

        // Resample every frame to one uniform format: 16-bit PCM at the input sample rate
        SwrContext *swrCtx = swr_alloc();

        // Resampling parameters ------------- start
        // Input sample format
        enum AVSampleFormat in_sample_fmt = codecCtx->sample_fmt;
        // Output sample format: 16-bit PCM
        enum AVSampleFormat out_sample_fmt = AV_SAMPLE_FMT_S16;
        // Input sample rate
        int in_sample_rate = codecCtx->sample_rate;
        // Output sample rate (kept equal to the input rate)
        int out_sample_rate = in_sample_rate;
        // Input channel layout. When the decoder reports none, fall back to the
        // default layout for the channel count (2 channels default to stereo).
        uint64_t in_ch_layout = codecCtx->channel_layout;
        if (in_ch_layout == 0) {
            in_ch_layout = av_get_default_channel_layout(codecCtx->channels);
        }
        // Output channel layout (stereo)
        uint64_t out_ch_layout = AV_CH_LAYOUT_STEREO;

        swr_alloc_set_opts(swrCtx,
                out_ch_layout, out_sample_fmt, out_sample_rate,
                in_ch_layout, in_sample_fmt, in_sample_rate,
                0, NULL);
        if (swr_init(swrCtx) < 0) {
            LOGE("%s", "Cannot initialize the resampler");
            return;
        }

        // Number of output channels
        int out_channel_nb = av_get_channel_layout_nb_channels(out_ch_layout);
        // Resampling parameters ------------- end

        // JNI begin ------------------
        // Class of the calling Player object
        jclass player_class = (*env)->GetObjectClass(env, jthiz);

        // Create the AudioTrack object through Player.createAudioTrack
        jmethodID create_audio_track_mid = (*env)->GetMethodID(env, player_class, "createAudioTrack", "(II)Landroid/media/AudioTrack;");
        jobject audio_track = (*env)->CallObjectMethod(env, jthiz, create_audio_track_mid, out_sample_rate, out_channel_nb);

        // Call AudioTrack.play
        jclass audio_track_class = (*env)->GetObjectClass(env, audio_track);
        jmethodID audio_track_play_mid = (*env)->GetMethodID(env, audio_track_class, "play", "()V");
        (*env)->CallVoidMethod(env, audio_track, audio_track_play_mid);

        // Look up AudioTrack.write(byte[], int, int)
        jmethodID audio_track_write_mid = (*env)->GetMethodID(env, audio_track_class, "write", "([BII)I");
        // JNI end ------------------

        // The decoded PCM is also written to the output file
        FILE *fp_pcm = fopen(output_cstr, "wb");
        if (fp_pcm == NULL) {
            LOGE("Cannot open output file: %s", output_cstr);
        }

        // Buffer for the resampled 16-bit PCM data
        uint8_t *out_buffer = (uint8_t *)av_malloc(MAX_AUDIO_FRME_SIZE);
        // swr_convert takes the output capacity in samples per channel, not in bytes
        int out_capacity = (MAX_AUDIO_FRME_SIZE) / (out_channel_nb * av_get_bytes_per_sample(out_sample_fmt));

        int got_frame = 0, index = 0, ret;

        // Keep reading compressed packets
        while (av_read_frame(pFormatCtx, packet) >= 0) {
            // Decode only packets that belong to the audio stream
            if (packet->stream_index == audio_stream_idx) {
                ret = avcodec_decode_audio4(codecCtx, frame, &got_frame, packet);
                if (ret < 0) {
                    LOGE("%s", "Failed to decode packet");
                }

                // A frame was decoded successfully
                if (got_frame > 0) {
                    LOGI("Decoded frame: %d", index++);
                    int out_samples = swr_convert(swrCtx, &out_buffer, out_capacity,
                            (const uint8_t **)frame->data, frame->nb_samples);
                    if (out_samples > 0) {
                        // Size in bytes of the samples actually produced
                        int out_buffer_size = av_samples_get_buffer_size(NULL, out_channel_nb,
                                out_samples, out_sample_fmt, 1);
                        if (fp_pcm != NULL) {
                            fwrite(out_buffer, 1, out_buffer_size, fp_pcm);
                        }

                        // Copy the contents of out_buffer into a Java byte array
                        jbyteArray audio_sample_array = (*env)->NewByteArray(env, out_buffer_size);
                        (*env)->SetByteArrayRegion(env, audio_sample_array, 0, out_buffer_size,
                                (const jbyte *)out_buffer);

                        // Write the PCM data with AudioTrack.write
                        (*env)->CallIntMethod(env, audio_track, audio_track_write_mid,
                                audio_sample_array, 0, out_buffer_size);

                        // Release the local reference
                        (*env)->DeleteLocalRef(env, audio_sample_array);
                        usleep(1000 * 16);
                    }
                }
            }
            av_free_packet(packet);
        }

        // Release resources
        if (fp_pcm != NULL) {
            fclose(fp_pcm);
        }
        av_free(packet);
        av_frame_free(&frame);
        av_free(out_buffer);
        swr_free(&swrCtx);
        avcodec_close(codecCtx);
        avformat_close_input(&pFormatCtx);

        (*env)->ReleaseStringUTFChars(env, input_jstr, input_cstr);
        (*env)->ReleaseStringUTFChars(env, output_jstr, output_cstr);
    }
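The output buffer in the listing is allocated as a byte count (48000 * 4), whereas `swr_convert` takes its output capacity as a number of samples per channel. The sketch below shows the conversion between the two units for interleaved PCM. It deliberately avoids FFmpeg so it can be compiled on its own; `OUT_BUFFER_BYTES` and both function names are hypothetical, introduced only for illustration.

```c
#include <stddef.h>

/* Parenthesized so the constant expands safely inside larger expressions. */
#define OUT_BUFFER_BYTES (48000 * 4)

/* Capacity of an interleaved PCM buffer, expressed in samples per channel.
 * Returns 0 when the format parameters are not usable. */
int buffer_capacity_in_samples(size_t buffer_bytes, int channels, int bytes_per_sample) {
    if (channels <= 0 || bytes_per_sample <= 0) {
        return 0;
    }
    return (int)(buffer_bytes / ((size_t)channels * (size_t)bytes_per_sample));
}

/* Bytes occupied by nb_samples samples per channel of interleaved PCM. */
size_t interleaved_bytes(int nb_samples, int channels, int bytes_per_sample) {
    if (nb_samples <= 0 || channels <= 0 || bytes_per_sample <= 0) {
        return 0;
    }
    return (size_t)nb_samples * (size_t)channels * (size_t)bytes_per_sample;
}
```

With 16-bit stereo output, a 192000-byte buffer holds 48000 samples per channel, which is one second of audio at 48 kHz. In the real decoder, the byte width would come from `av_get_bytes_per_sample(out_sample_fmt)` rather than a hard-coded 2.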

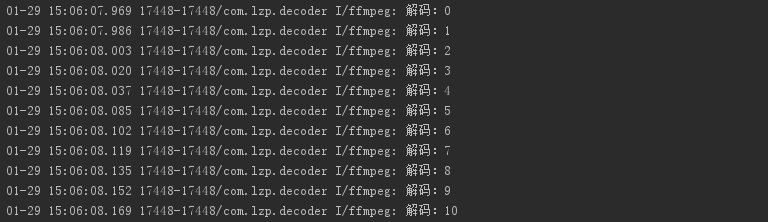

Log output from the run: