Pushing AVC Data with librtmp

I. Introduction

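This article describes how to push AVC (H.264) data to a streaming media server. My approach is: (1) capture camera frames with Android's built-in API and encode them to H.264; (2) use the NDK to send the encoded data through librtmp. For how to build the librtmp shared library and how to encode video with the system API, see my earlier articles.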

II. Approach

1. Capture video with the camera, encode it, and obtain H.264 data. (This is not the focus here; it was covered in earlier articles.)

2. Define the JNI methods. I defined four:

public static final native int init(String url, int timeOut);

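public static final native int sendSpsAndPps(byte[] sps, int spsLen, byte[] pps, int ppsLen, long time);

public static final native int sendVideoFrame(byte[] frame, int len, long time);

public static final native int stop();

Their purposes are: (1) initialize, connect to the URL, and perform the handshake; (2) send the SPS and PPS frames; (3) send video data; (4) release resources.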

3. Implement these JNI methods.

4. Inspect each H.264 frame obtained (the main types are SPS, PPS, IDR, and non-key frames) and call the corresponding method.

III. H.264 Data Format Analysis

1. In H.264 (Annex B) data, every frame is preceded by a start code, either 00 00 00 01 or 00 00 01. SPS and PPS frames, however, always use the 4-byte start code 00 00 00 01.
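Because a start code can be 3 or 4 bytes long, the sender has to find out which one it is before it can locate the NAL unit. A minimal sketch, assuming the buffer begins with a start code; `start_code_len` is a hypothetical helper, not a librtmp function. Unlike the article's `send_rtmp_video`, which only looks at `buf[2]`, it checks every byte of the pattern:

```c
#include <stddef.h>

/* Return the length of the Annex B start code at the beginning of buf:
 * 4 for 00 00 00 01, 3 for 00 00 01, or 0 if buf does not start with one.
 * Hypothetical helper for illustration. */
static size_t start_code_len(const unsigned char *buf, size_t len)
{
    if (len >= 4 && buf[0] == 0x00 && buf[1] == 0x00 &&
        buf[2] == 0x00 && buf[3] == 0x01)
        return 4;
    if (len >= 3 && buf[0] == 0x00 && buf[1] == 0x00 && buf[2] == 0x01)
        return 3;
    return 0;
}
```

After `n = start_code_len(buf, len)`, the NAL unit itself starts at `buf + n` and is `len - n` bytes long.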

2. The frame (NAL unit) types are:

NAL_SLICE = 1

NAL_SLICE_DPA = 2

NAL_SLICE_DPB = 3

NAL_SLICE_DPC = 4

NAL_SLICE_IDR = 5

NAL_SEI = 6

NAL_SPS = 7

NAL_PPS = 8

NAL_AUD = 9

NAL_FILLER = 12

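When sending RTMP data we only need to distinguish four frame types; I classify every other type as a non-key frame. They are:

NAL_SPS(7): SPS frame

NAL_PPS(8): PPS frame

NAL_SLICE_IDR(5): key frame

NAL_SLICE(1): non-key frame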
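On the RTMP side, the key/non-key distinction ends up in the first byte of the FLV video tag body, which the code in section IV writes as 0x17 or 0x27. A sketch of that mapping; `flv_frame_byte` is a hypothetical helper, and the NAL_* values are the ones listed above:

```c
/* H.264 nal_unit_type values used in this article. */
enum {
    NAL_SLICE = 1,
    NAL_SLICE_IDR = 5,
    NAL_SPS = 7,
    NAL_PPS = 8
};

/* First byte of an FLV/RTMP video tag body:
 * high nibble = FrameType (1 = key frame, 2 = inter frame),
 * low nibble  = CodecID (7 = AVC).
 * SPS/PPS are sent in the AVC sequence header, which is also marked as a key frame. */
static unsigned char flv_frame_byte(int nal_type)
{
    switch (nal_type) {
    case NAL_SPS:
    case NAL_PPS:
    case NAL_SLICE_IDR:
        return 0x17; /* key frame, AVC */
    default:
        return 0x27; /* every other type is treated as a non-key frame */
    }
}
```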

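The frame type is given by the low 5 bits of the first byte after the start code (byte & 0x1F). For example:

First frame: 0x67 & 0x1F = 7, an SPS frame

Second frame: 0x68 & 0x1F = 8, a PPS frame

Third frame: 0x06 & 0x1F = 6, an SEI frame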
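The check is a single mask, because `nal_unit_type` occupies the low 5 bits of the NAL header byte (the upper 3 bits are `forbidden_zero_bit` and `nal_ref_idc`). A minimal sketch reproducing the three examples; `nal_type` and `print_examples` are hypothetical helper names:

```c
#include <stdio.h>

/* nal_unit_type is the low 5 bits of the first byte after the start code. */
static int nal_type(unsigned char nal_header)
{
    return nal_header & 0x1F;
}

/* Print the type of each example header byte from the text:
 * 0x67 & 0x1F = 7, 0x68 & 0x1F = 8, 0x06 & 0x1F = 6 */
static void print_examples(void)
{
    const unsigned char headers[] = {0x67, 0x68, 0x06};
    for (size_t i = 0; i < sizeof headers; i++)
        printf("0x%02X & 0x1F = %d\n", headers[i], nal_type(headers[i]));
}
```

The same mask applied to 0x65, a common header byte for an IDR slice, gives 5.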

IV. Code

1. Initialize and connect to the URL

JNIEXPORT jint JNICALL

Java_com_blueberry_hellortmp_Rtmp_init(JNIEnv *env, jclass type, jstring url_, jint timeOut) {

const char *url = (*env)->GetStringUTFChars(env, url_, 0);

int ret;

RTMP_LogSetLevel(RTMP_LOGDEBUG);

rtmp = RTMP_Alloc(); // allocate the RTMP object

RTMP_Init(rtmp);

rtmp->Link.timeout = timeOut; // timeout in seconds

RTMP_SetupURL(rtmp, url);

RTMP_EnableWrite(rtmp);

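// connect to the server and perform the RTMP handshake

if ((ret = RTMP_Connect(rtmp, NULL)) <= 0) {

LOGD("rtmp connect error");

(*env)->ReleaseStringUTFChars(env, url_, url);

return -1; // init returns jint, so report failure as -1

}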

if ((ret = RTMP_ConnectStream(rtmp, 0)) <= 0) {

LOGD("rtmp connect stream error");

}

(*env)->ReleaseStringUTFChars(env, url_, url);

return ret;

}

2. Send the SPS and PPS frames

/**

* The H.264 codec configuration sent to the RTMP server is called the AVC sequence header.

* The server can only parse the H.264 frames that follow after it has received the SPS and PPS in the AVC sequence header.

*/

int send_video_sps_pps(unsigned char *sps, int sps_len, unsigned char *pps, int pps_len) {

int i;

packet = (RTMPPacket *) malloc(RTMP_HEAD_SIZE + 16 + sps_len + pps_len); //16 bytes of fixed fields + SPS + PPS

if (packet == NULL) {

return -1;

}

memset(packet, 0, RTMP_HEAD_SIZE);

packet->m_body = (char *) packet + RTMP_HEAD_SIZE;

body = (unsigned char *) packet->m_body;

i = 0;

body[i++] = 0x17; //1:keyframe 7:AVC

body[i++] = 0x00; // AVC sequence header

body[i++] = 0x00;

body[i++] = 0x00;

body[i++] = 0x00; //composition time: 3 bytes, all 0

/*AVCDecoderConfigurationRecord*/

body[i++] = 0x01;

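body[i++] = sps[1]; //AVCProfileIndication

body[i++] = sps[2]; //profile_compatibility

body[i++] = sps[3]; //AVCLevelIndication

body[i++] = 0xff; //6 reserved bits (all 1) + lengthSizeMinusOne = 3, i.e. 4-byte NALU lengths

/*SPS*/

body[i++] = 0xe1; //3 reserved bits (all 1) + numOfSequenceParameterSets = 1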

body[i++] = (sps_len >> 8) & 0xff;

body[i++] = sps_len & 0xff;

/*sps data*/

memcpy(&body[i], sps, sps_len);

i += sps_len;

/*PPS*/

body[i++] = 0x01; //numOfPictureParameterSets = 1

/*pps data length*/

body[i++] = (pps_len >> 8) & 0xff;

body[i++] = pps_len & 0xff;

memcpy(&body[i], pps, pps_len);

i += pps_len;

packet->m_packetType = RTMP_PACKET_TYPE_VIDEO;

packet->m_nBodySize = i;

packet->m_nChannel = 0x04;

packet->m_nTimeStamp = 0;

packet->m_hasAbsTimestamp = 0;

packet->m_headerType = RTMP_PACKET_SIZE_MEDIUM;

packet->m_nInfoField2 = rtmp->m_stream_id;

/*傳送*/

if (RTMP_IsConnected(rtmp)) {

RTMP_SendPacket(rtmp, packet, TRUE);

}

free(packet);

return 0;

}
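The body that `send_video_sps_pps` fills can be checked without a server. The sketch below writes the same bytes into a caller-supplied buffer and returns the length, or -1 if the buffer is too small; `build_avc_sequence_header` is a hypothetical name, and `sps`/`pps` are assumed to be passed without start codes, as in the article. Sizing the buffer as 16 + SPS + PPS bytes follows from the fixed fields it writes.

```c
#include <stddef.h>
#include <string.h>

/* Write an FLV/RTMP "AVC sequence header" video tag body into out:
 * 5-byte tag header + AVCDecoderConfigurationRecord with one SPS and one PPS.
 * Returns the number of bytes written, or -1 if the input or out is unsuitable.
 * sps/pps must not include the 00 00 00 01 start code. */
static int build_avc_sequence_header(unsigned char *out, size_t cap,
                                     const unsigned char *sps, size_t sps_len,
                                     const unsigned char *pps, size_t pps_len)
{
    size_t need = 16 + sps_len + pps_len;
    size_t i = 0;
    if (sps_len < 4 || sps_len > 0xFFFF || pps_len > 0xFFFF || cap < need)
        return -1;

    out[i++] = 0x17;   /* FrameType 1 (key frame) + CodecID 7 (AVC) */
    out[i++] = 0x00;   /* AVCPacketType 0: sequence header */
    out[i++] = 0x00;   /* CompositionTime: 3 bytes, 0 */
    out[i++] = 0x00;
    out[i++] = 0x00;

    out[i++] = 0x01;   /* configurationVersion */
    out[i++] = sps[1]; /* AVCProfileIndication (profile_idc) */
    out[i++] = sps[2]; /* profile_compatibility (constraint flags) */
    out[i++] = sps[3]; /* AVCLevelIndication (level_idc) */
    out[i++] = 0xFF;   /* 6 reserved bits + lengthSizeMinusOne = 3 */

    out[i++] = 0xE1;   /* 3 reserved bits + numOfSequenceParameterSets = 1 */
    out[i++] = (unsigned char)((sps_len >> 8) & 0xFF);
    out[i++] = (unsigned char)(sps_len & 0xFF);
    memcpy(out + i, sps, sps_len);
    i += sps_len;

    out[i++] = 0x01;   /* numOfPictureParameterSets = 1 */
    out[i++] = (unsigned char)((pps_len >> 8) & 0xFF);
    out[i++] = (unsigned char)(pps_len & 0xFF);
    memcpy(out + i, pps, pps_len);
    i += pps_len;

    return (int)i;
}
```

With the 13-byte SPS and 4-byte PPS from the dump printed in `onEncodedh264Frame` (profile_idc 66 = Baseline, level_idc 13 = level 1.3), this produces a 33-byte body.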

3. Send video data

//The start code of SPS and PPS is always 00 00 00 01; other frames may use 00 00 00 01 or 00 00 01

int send_rtmp_video(unsigned char *buf, int len, long time) {

int type;

long timeOffset;

timeOffset = time - start_time;/*start_time is the timestamp at which the stream started*/

/*strip the start code*/

if (buf[2] == 0x00) {/*00 00 00 01*/

buf += 4;

len -= 4;

} else if (buf[2] == 0x01) {

buf += 3;

len -= 3;

}

type = buf[0] & 0x1f;

packet = (RTMPPacket *) malloc(RTMP_HEAD_SIZE + len + 9);

memset(packet, 0, RTMP_HEAD_SIZE);

packet->m_body = (char *) packet + RTMP_HEAD_SIZE;

packet->m_nBodySize = len + 9;

/* send video packet*/

body = (unsigned char *) packet->m_body;

memset(body, 0, len + 9);

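/*frame type + codec id: 0x27 = inter frame, AVC*/

body[0] = 0x27;

if (type == NAL_SLICE_IDR) {

body[0] = 0x17; //0x17 = key frame, AVC

}

body[1] = 0x01;/*AVCPacketType 1: NAL unit; body[2..4] is the composition time (0)*/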

body[2] = 0x00;

body[3] = 0x00;

body[4] = 0x00;

body[5] = (len >> 24) & 0xff;

body[6] = (len >> 16) & 0xff;

body[7] = (len >> 8) & 0xff;

body[8] = (len) & 0xff;

/*copy data*/

memcpy(&body[9], buf, len);

packet->m_hasAbsTimestamp = 0;

packet->m_packetType = RTMP_PACKET_TYPE_VIDEO;

packet->m_nInfoField2 = rtmp->m_stream_id;

packet->m_nChannel = 0x04;

packet->m_headerType = RTMP_PACKET_SIZE_LARGE;

packet->m_nTimeStamp = timeOffset;

if (RTMP_IsConnected(rtmp)) {

RTMP_SendPacket(rtmp, packet, TRUE);

}

free(packet);

return 0;

}

4. Release resources

int stop() {

if (rtmp != NULL) {

RTMP_Close(rtmp);

RTMP_Free(rtmp);

rtmp = NULL;

}

return 0;

}
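For ordinary frames, `send_rtmp_video` (step 3) strips the start code and wraps the NAL unit in a 9-byte header: the frame-type byte, AVCPacketType 1, a zero composition time, and the NAL unit length as a 4-byte big-endian integer, which matches lengthSizeMinusOne = 3 in the sequence header. A standalone sketch of just that wrapping step; `build_avc_nalu_body` is a hypothetical name:

```c
#include <stddef.h>
#include <string.h>

/* Wrap one NAL unit (start code already removed) in an FLV/RTMP video tag body.
 * Returns the body length (9 + nalu_len), or -1 if out is too small. */
static int build_avc_nalu_body(unsigned char *out, size_t cap,
                               const unsigned char *nalu, size_t nalu_len)
{
    if (nalu_len == 0 || cap < 9 + nalu_len)
        return -1;

    int type = nalu[0] & 0x1F;                         /* nal_unit_type */
    out[0] = (type == 5) ? 0x17 : 0x27;                /* IDR -> key frame, else inter frame */
    out[1] = 0x01;                                     /* AVCPacketType 1: NAL units */
    out[2] = out[3] = out[4] = 0x00;                   /* CompositionTime = 0 */
    out[5] = (unsigned char)((nalu_len >> 24) & 0xFF); /* 4-byte big-endian NALU length */
    out[6] = (unsigned char)((nalu_len >> 16) & 0xFF);
    out[7] = (unsigned char)((nalu_len >> 8) & 0xFF);
    out[8] = (unsigned char)(nalu_len & 0xFF);
    memcpy(out + 9, nalu, nalu_len);                   /* NAL unit payload */
    return (int)(9 + nalu_len);
}
```

This sketch, like `send_rtmp_video`, assumes one NAL unit per buffer. If an encoder emits several in one buffer (for example an SEI followed by an IDR slice), each NAL unit would need its own 4-byte length prefix, and the key-frame flag would have to come from the slice rather than from the first NAL unit.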

Complete code:

MainActivity.java

package com.blueberry.hellortmp;

import android.app.Activity;

import android.graphics.ImageFormat;

import android.hardware.Camera;

import android.media.MediaCodec;

import android.media.MediaCodecInfo;

import android.media.MediaCodecList;

import android.media.MediaFormat;

import android.os.Bundle;

import android.support.v7.app.AppCompatActivity;

import android.util.Log;

import android.view.Surface;

import android.view.SurfaceHolder;

import android.view.SurfaceView;

import android.view.View;

import android.widget.Button;

import java.io.IOException;

import java.nio.ByteBuffer;

import java.util.Arrays;

import java.util.Date;

import java.util.List;

import static android.hardware.Camera.Parameters.FOCUS_MODE_AUTO;

import static android.hardware.Camera.Parameters.PREVIEW_FPS_MAX_INDEX;

import static android.hardware.Camera.Parameters.PREVIEW_FPS_MIN_INDEX;

import static android.media.MediaCodec.CONFIGURE_FLAG_ENCODE;

import static android.media.MediaFormat.KEY_BIT_RATE;

import static android.media.MediaFormat.KEY_COLOR_FORMAT;

import static android.media.MediaFormat.KEY_FRAME_RATE;

import static android.media.MediaFormat.KEY_I_FRAME_INTERVAL;

public class MainActivity extends AppCompatActivity implements SurfaceHolder.Callback2 {

static {

System.loadLibrary("hellortmp");

}

static final int NAL_SLICE = 1;

static final int NAL_SLICE_DPA = 2;

static final int NAL_SLICE_DPB = 3;

static final int NAL_SLICE_DPC = 4;

static final int NAL_SLICE_IDR = 5;

static final int NAL_SEI = 6;

static final int NAL_SPS = 7;

static final int NAL_PPS = 8;

static final int NAL_AUD = 9;

static final int NAL_FILLER = 12;

private static final String VCODEC_MIME = "video/avc";

private Button btnStart;

private SurfaceView mSurfaceView;

private SurfaceHolder mSurfaceHolder;

private Camera mCamera;

private boolean isStarted;

private int colorFormat;

private long presentationTimeUs;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

btnStart = (Button) findViewById(R.id.btn_start);

mSurfaceView = (SurfaceView) findViewById(R.id.surface_view);

btnStart.setOnClickListener(new View.OnClickListener() {

@Override

public void onClick(View v) {

togglePublish();

}

});

mSurfaceHolder = mSurfaceView.getHolder();

mSurfaceHolder.addCallback(this);

}

private void togglePublish() {

if (isStarted) {

stop();

} else {

start();

}

btnStart.setText(isStarted ? "Stop" : "Start");

}

private void start() {

isStarted = true;

//

initVideoEncoder();

presentationTimeUs = new Date().getTime() * 1000;

Rtmp.init("rtmp://192.168.155.1:1935/live/test", 5);

}

private MediaCodec vencoder;

private void initVideoEncoder() {

MediaCodecInfo mediaCodecInfo = selectCodec(VCODEC_MIME);

colorFormat = getColorFormat(mediaCodecInfo);

try {

vencoder = MediaCodec.createByCodecName(mediaCodecInfo.getName());

Log.d(TAG, "Encoder " + mediaCodecInfo.getName() + " created");

} catch (IOException e) {

e.printStackTrace();

throw new RuntimeException("Failed to initialize the video encoder", e);

}

MediaFormat mediaFormat = MediaFormat

.createVideoFormat(MediaFormat.MIMETYPE_VIDEO_AVC,

previewSize.width, previewSize.height);

mediaFormat.setInteger(MediaFormat.KEY_MAX_INPUT_SIZE, 0);

mediaFormat.setInteger(KEY_BIT_RATE, 300 * 1000); // bit rate: 300 kbps

mediaFormat.setInteger(KEY_COLOR_FORMAT, colorFormat);

mediaFormat.setInteger(KEY_FRAME_RATE, 20);

mediaFormat.setInteger(KEY_I_FRAME_INTERVAL, 5);

vencoder.configure(mediaFormat, null, null, CONFIGURE_FLAG_ENCODE);

vencoder.start();

}

private static MediaCodecInfo selectCodec(String mimeType) {

int numCodecs = MediaCodecList.getCodecCount();

for (int i = 0; i < numCodecs; i++) {

MediaCodecInfo codecInfo = MediaCodecList.getCodecInfoAt(i);

if (!codecInfo.isEncoder()) {

continue;

}

String[] types = codecInfo.getSupportedTypes();

for (int j = 0; j < types.length; j++) {

if (types[j].equalsIgnoreCase(mimeType)) {

return codecInfo;

}

}

}

return null;

}

private int getColorFormat(MediaCodecInfo mediaCodecInfo) {

int matchedFormat = 0;

MediaCodecInfo.CodecCapabilities codecCapabilities =

mediaCodecInfo.getCapabilitiesForType(VCODEC_MIME);

for (int i = 0; i < codecCapabilities.colorFormats.length; i++) {

int format = codecCapabilities.colorFormats[i];

if (format >= codecCapabilities.COLOR_FormatYUV420Planar &&

format <= codecCapabilities.COLOR_FormatYUV420PackedSemiPlanar) {

if (format >= matchedFormat) {

matchedFormat = format;

break;

}

}

}

return matchedFormat;

}

private void stop() {

isStarted = false;

vencoder.stop();

vencoder.release();

Rtmp.stop();

}

@Override

protected void onResume() {

super.onResume();

initCamera();

}

@Override

protected void onPause() {

super.onPause();

releaseCamera();

}

private void releaseCamera() {

if (mCamera != null) {

mCamera.release();

}

mCamera = null;

}

private void initCamera() {

try {

if (mCamera == null) {

mCamera = Camera.open();

}

} catch (Exception e) {

throw new RuntimeException("open camera fail", e);

}

setParameters();

setCameraDisplayOrientation(this, Camera.CameraInfo.CAMERA_FACING_BACK, mCamera);

try {

mCamera.setPreviewDisplay(mSurfaceHolder);

} catch (IOException e) {

e.printStackTrace();

}

mCamera.addCallbackBuffer(new byte[calculateFrameSize(ImageFormat.NV21)]);

mCamera.setPreviewCallbackWithBuffer(getPreviewCallback());

mCamera.startPreview();

}

public static void setCameraDisplayOrientation(Activity activity,

int cameraId, android.hardware.Camera camera) {

android.hardware.Camera.CameraInfo info =

new android.hardware.Camera.CameraInfo();

android.hardware.Camera.getCameraInfo(cameraId, info);

int rotation = activity.getWindowManager().getDefaultDisplay()

.getRotation();

int degrees = 0;

switch (rotation) {

case Surface.ROTATION_0:

degrees = 0;

break;

case Surface.ROTATION_90:

degrees = 90;

break;

case Surface.ROTATION_180:

degrees = 180;

break;

case Surface.ROTATION_270:

degrees = 270;

break;

}

int result;

if (info.facing == Camera.CameraInfo.CAMERA_FACING_FRONT) {

result = (info.orientation + degrees) % 360;

result = (360 - result) % 360; // compensate the mirror

} else { // back-facing

result = (info.orientation - degrees + 360) % 360;

}

camera.setDisplayOrientation(result);

}

private void setParameters() {

Camera.Parameters parameters = mCamera.getParameters();

List<Camera.Size> supportedPreviewSizes = parameters.getSupportedPreviewSizes();

for (Camera.Size size : supportedPreviewSizes

) {

if (size.width <= 360 && size.width >= 180) {

previewSize = size;

Log.d(TAG, "select size width=" + size.width + ",height=" + size.height);

break;

}

}

List<int[]> supportedPreviewFpsRange = parameters.getSupportedPreviewFpsRange();

int[] destRange = {30 * 1000, 30 * 1000};

for (int[] range : supportedPreviewFpsRange

) {

if (range[PREVIEW_FPS_MIN_INDEX] >= 30 * 1000 && range[PREVIEW_FPS_MAX_INDEX] <= 100 * 1000) {

destRange = range;

break;

}

}

parameters.setPreviewSize(previewSize.width, previewSize.height);

parameters.setPreviewFpsRange(destRange[PREVIEW_FPS_MIN_INDEX],

destRange[PREVIEW_FPS_MAX_INDEX]);

parameters.setPreviewFormat(ImageFormat.NV21);

parameters.setFocusMode(FOCUS_MODE_AUTO);

mCamera.setParameters(parameters);

}

private static final String TAG = "MainActivity";

private Camera.Size previewSize;

@Override

public void surfaceRedrawNeeded(SurfaceHolder holder) {

}

@Override

public void surfaceCreated(SurfaceHolder holder) {

}

@Override

public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {

initCamera();

}

@Override

public void surfaceDestroyed(SurfaceHolder holder) {

}

public Camera.PreviewCallback getPreviewCallback() {

return new Camera.PreviewCallback() {

byte[] dstByte = new byte[calculateFrameSize(ImageFormat.NV21)];

@Override

public void onPreviewFrame(byte[] data, Camera camera) {

if (data == null) {

mCamera.addCallbackBuffer(new byte[calculateFrameSize(ImageFormat.NV21)]);

} else {

if (isStarted) {

// data is NV21

if (colorFormat == MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420SemiPlanar) {

Yuv420Util.Nv21ToYuv420SP(data, dstByte, previewSize.width, previewSize.height);

} else if (colorFormat == MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420Planar) {

Yuv420Util.Nv21ToI420(data, dstByte, previewSize.width, previewSize.height);

} else if (colorFormat == MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420PackedPlanar) {

// YUV420PackedPlanar is similar to YUV420SP.

// The difference: with width = 4, y1, y2, y3, y4 share u1v1,

// whereas in YUV420SP y1, y2, y5, y6 share u1v1.

// http://blog.csdn.net/jumper511/article/details/21719313

// Handling it this way may cause some color distortion.

Yuv420Util.Nv21ToYuv420SP(data, dstByte, previewSize.width, previewSize.height);

} else {

System.arraycopy(data, 0, dstByte, 0, data.length);

}

onGetVideoFrame(dstByte);

}

mCamera.addCallbackBuffer(data);

}

}

};

}

private MediaCodec.BufferInfo vBufferInfo = new MediaCodec.BufferInfo();

private void onGetVideoFrame(byte[] i420) {

// MediaCodec

ByteBuffer[] inputBuffers = vencoder.getInputBuffers();

ByteBuffer[] outputBuffers = vencoder.getOutputBuffers();

int inputBufferId = vencoder.dequeueInputBuffer(-1);

if (inputBufferId >= 0) {

// fill inputBuffers[inputBufferId] with valid data

ByteBuffer bb = inputBuffers[inputBufferId];

bb.clear();

bb.put(i420, 0, i420.length);

long pts = new Date().getTime() * 1000 - presentationTimeUs;

vencoder.queueInputBuffer(inputBufferId, 0, i420.length, pts, 0);

}

for (; ; ) {

int outputBufferId = vencoder.dequeueOutputBuffer(vBufferInfo, 0);

if (outputBufferId >= 0) {

// outputBuffers[outputBufferId] is ready to be processed or rendered.

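ByteBuffer bb = outputBuffers[outputBufferId];

// restrict the buffer to the valid encoded bytes described by vBufferInfo

bb.position(vBufferInfo.offset);

bb.limit(vBufferInfo.offset + vBufferInfo.size);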

onEncodedh264Frame(bb, vBufferInfo);

vencoder.releaseOutputBuffer(outputBufferId, false);

}

if (outputBufferId < 0) {

break;

}

}

}

private void onEncodedh264Frame(ByteBuffer bb, MediaCodec.BufferInfo vBufferInfo) {

int offset = 4;

// determine the frame type

if (bb.get(2) == 0x01) {

offset = 3;

}

int type = bb.get(offset) & 0x1f;

switch (type) {

case NAL_SLICE:

Log.d(TAG, "type=NAL_SLICE");

break;

case NAL_SLICE_DPA:

Log.d(TAG, "type=NAL_SLICE_DPA");

break;

case NAL_SLICE_DPB:

Log.d(TAG, "type=NAL_SLICE_DPB");

break;

case NAL_SLICE_DPC:

Log.d(TAG, "type=NAL_SLICE_DPC");

break;

case NAL_SLICE_IDR: // key frame

Log.d(TAG, "type=NAL_SLICE_IDR");

break;

case NAL_SEI:

Log.d(TAG, "type=NAL_SEI");

break;

case NAL_SPS: // sps

Log.d(TAG, "type=NAL_SPS");

//[0, 0, 0, 1, 103, 66, -64, 13, -38, 5, -126, 90, 1, -31, 16, -115, 64, 0, 0, 0, 1, 104, -50, 6, -30]

// Printing shows that the SPS and PPS frames arrive together in one buffer:

// the SPS occupies bytes [4, size - 8)

// and the PPS is the last 4 bytes,

// so:

byte[] pps = new byte[4];

byte[] sps = new byte[vBufferInfo.size - 12];

bb.getInt(); // skip the 0,0,0,1 start code

bb.get(sps, 0, sps.length);

bb.getInt();

bb.get(pps, 0, pps.length);

Log.d(TAG, "Parsed SPS: " + Arrays.toString(sps) + ", PPS: " + Arrays.toString(pps));

Rtmp.sendSpsAndPps(sps, sps.length, pps, pps.length, vBufferInfo.presentationTimeUs / 1000);

return;

case NAL_PPS: // pps

Log.d(TAG, "type=NAL_PPS");

break;

case NAL_AUD:

Log.d(TAG, "type=NAL_AUD");

break;

case NAL_FILLER:

Log.d(TAG, "type=NAL_FILLER");

break;

}

byte[] bytes = new byte[vBufferInfo.size];

bb.get(bytes);

Rtmp.sendVideoFrame(bytes, bytes.length, vBufferInfo.presentationTimeUs / 1000);

}

private int calculateFrameSize(int format) {

return previewSize.width * previewSize.height * ImageFormat.getBitsPerPixel(format) / 8;

}

}
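`onEncodedh264Frame` assumes the codec-config buffer is exactly `00 00 00 01 SPS 00 00 00 01 PPS` with a 4-byte PPS. That holds for the dump above but is not guaranteed on other encoders. A more general approach is to scan for start codes. The sketch below is written in C to match the native side (a Java version would be analogous); `next_nal_start` and `split_sps_pps` are hypothetical helpers, and it assumes the buffer holds exactly one SPS followed by one PPS.

```c
#include <stddef.h>

/* Return the index just past the next 00 00 01 pattern at or after pos,
 * i.e. the first byte of the following NAL unit, or len if there is none. */
static size_t next_nal_start(const unsigned char *buf, size_t len, size_t pos)
{
    for (size_t i = pos; i + 3 <= len; i++) {
        if (buf[i] == 0x00 && buf[i + 1] == 0x00 && buf[i + 2] == 0x01)
            return i + 3;
    }
    return len;
}

/* Locate one SPS followed by one PPS in an Annex B codec-config buffer.
 * On success, store their offsets and lengths (start codes excluded) and return 0;
 * otherwise return -1. */
static int split_sps_pps(const unsigned char *buf, size_t len,
                         size_t *sps_off, size_t *sps_len,
                         size_t *pps_off, size_t *pps_len)
{
    size_t first = next_nal_start(buf, len, 0);
    if (first >= len)
        return -1;
    size_t second = next_nal_start(buf, len, first);
    if (second >= len)
        return -1;

    /* The SPS ends where the second start code begins: 3 bytes before `second`,
     * or 4 if that start code has the extra leading zero byte. */
    size_t end = second - 3;
    if (end > first && buf[end - 1] == 0x00)
        end--;

    if ((buf[first] & 0x1F) != 7 || (buf[second] & 0x1F) != 8)
        return -1; /* not an SPS followed by a PPS */

    *sps_off = first;
    *sps_len = end - first;
    *pps_off = second;
    *pps_len = len - second; /* assume the PPS runs to the end of the buffer */
    return 0;
}
```

On the 25-byte dump from `onEncodedh264Frame`, this finds the SPS at offset 4 (13 bytes) and the PPS at offset 21 (4 bytes), the same split the Java code hard-codes, and it also works when 3-byte start codes are used.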

Yuv420Util.java

package com.blueberry.hellortmp;

/**

* Created by blueberry on 1/13/2017.

*/

public class Yuv420Util {

public static void Nv21ToI420(byte[] data, byte[] dstData, int w, int h) {

int size = w * h;

// Y

System.arraycopy(data, 0, dstData, 0, size);

for (int i = 0; i < size / 4; i++) {

dstData[size + i] = data[size + i * 2 + 1]; //U

dstData[size + size / 4 + i] = data[size + i * 2]; //V

}

}

public static void Nv21ToYuv420SP(byte[] data, byte[] dstData, int w, int h) {

int size = w * h;

// Y

System.arraycopy(data, 0, dstData, 0, size);

for (int i = 0; i < size / 4; i++) {

dstData[size + i * 2] = data[size + i * 2 + 1]; //U

dstData[size + i * 2 + 1] = data[size + i * 2]; //V

}

}

}
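The chroma reordering in `Nv21ToI420` is easier to see on a tiny frame. NV21 stores a Y plane followed by interleaved V,U pairs; I420 stores the Y plane, then a full U plane, then a full V plane, for w*h*3/2 bytes in both cases (the same size `calculateFrameSize` computes from 12 bits per pixel). Below is a C port of the same loop, with the hypothetical name `nv21_to_i420`, assuming even width and height:

```c
#include <stddef.h>
#include <string.h>

/* Convert NV21 (Y plane + interleaved VU) to I420 (Y plane + U plane + V plane).
 * Both buffers hold w*h*3/2 bytes; w and h are assumed to be even. */
static void nv21_to_i420(const unsigned char *src, unsigned char *dst, int w, int h)
{
    size_t size = (size_t)w * h;  /* number of luma samples */
    size_t quarter = size / 4;    /* samples per chroma plane */
    memcpy(dst, src, size);       /* Y is copied unchanged */
    for (size_t i = 0; i < quarter; i++) {
        dst[size + i] = src[size + 2 * i + 1];       /* U is the second byte of each VU pair */
        dst[size + quarter + i] = src[size + 2 * i]; /* V is the first byte */
    }
}
```

For a 4×2 frame whose chroma bytes are V0 U0 V1 U1, the output chroma becomes U0 U1 V0 V1. `Nv21ToYuv420SP` keeps the chroma interleaved and only swaps each pair to U,V order (NV12).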

public.c

#include <jni.h>

#include "rtmp.h"

#include "rtmp_sys.h"

#include "log.h"

#include "android/log.h"

#include <time.h>

#include <stdlib.h> /* malloc, free */

#include <string.h> /* memcpy, memset */

#define TAG "RTMP"

#define RTMP_HEAD_SIZE (sizeof(RTMPPacket)+RTMP_MAX_HEADER_SIZE)

#define NAL_SLICE 1

#define NAL_SLICE_DPA 2

#define NAL_SLICE_DPB 3

#define NAL_SLICE_DPC 4

#define NAL_SLICE_IDR 5

#define NAL_SEI 6

#define NAL_SPS 7

#define NAL_PPS 8

#define NAL_AUD 9

#define NAL_FILLER 12

#define LOGD(fmt, ...) __android_log_print(ANDROID_LOG_DEBUG, TAG, fmt, ##__VA_ARGS__)

RTMP *rtmp;

RTMPPacket *packet = NULL;

unsigned char *body;

long start_time;

//int send(const char *buf, int buflen, int type, unsigned int timestamp);

int send_video_sps_pps(unsigned char *sps, int sps_len, unsigned char *pps, int pps_len);

int send_rtmp_video(unsigned char *buf, int len, long time);

int stop();

JNIEXPORT jint JNICALL

Java_com_blueberry_hellortmp_Rtmp_init(JNIEnv *env, jclass type, jstring url_, jint timeOut) {

const char *url = (*env)->GetStringUTFChars(env, url_, 0);

int ret;

RTMP_LogSetLevel(RTMP_LOGDEBUG);

rtmp = RTMP_Alloc(); // allocate the RTMP object

RTMP_Init(rtmp);

rtmp->Link.timeout = timeOut; // timeout in seconds

RTMP_SetupURL(rtmp, url);

RTMP_EnableWrite(rtmp);

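// connect to the server and perform the RTMP handshake

if ((ret = RTMP_Connect(rtmp, NULL)) <= 0) {

LOGD("rtmp connect error");

(*env)->ReleaseStringUTFChars(env, url_, url);

return -1; // init returns jint, so report failure as -1

}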

if ((ret = RTMP_ConnectStream(rtmp, 0)) <= 0) {

LOGD("rtmp connect stream error");

}

(*env)->ReleaseStringUTFChars(env, url_, url);

return ret;

}

JNIEXPORT jint JNICALL

Java_com_blueberry_hellortmp_Rtmp_sendSpsAndPps(JNIEnv *env, jclass type, jbyteArray sps_,

jint spsLen, jbyteArray pps_, jint ppsLen,

jlong time) {

jbyte *sps = (*env)->GetByteArrayElements(env, sps_, NULL);

jbyte *pps = (*env)->GetByteArrayElements(env, pps_, NULL);

int ret = send_video_sps_pps((unsigned char *) sps, spsLen, (unsigned char *) pps, ppsLen);

start_time = time;

(*env)->ReleaseByteArrayElements(env, sps_, sps, 0);

(*env)->ReleaseByteArrayElements(env, pps_, pps, 0);

return ret;

}

JNIEXPORT jint JNICALL

Java_com_blueberry_hellortmp_Rtmp_sendVideoFrame(JNIEnv *env, jclass type, jbyteArray frame_,

jint len, jlong time) {

jbyte *frame = (*env)->GetByteArrayElements(env, frame_, NULL);

int ret = send_rtmp_video((unsigned char *) frame, len, time);

(*env)->ReleaseByteArrayElements(env, frame_, frame, 0);

return ret;

}

int stop() {

if (rtmp != NULL) {

RTMP_Close(rtmp);

RTMP_Free(rtmp);

rtmp = NULL;

}

return 0;

}

/**

* The H.264 codec configuration sent to the RTMP server is called the AVC sequence header.

* The server can only parse the H.264 frames that follow after it has received the SPS and PPS in the AVC sequence header.

*/

int send_video_sps_pps(unsigned char *sps, int sps_len, unsigned char *pps, int pps_len) {

int i;

packet = (RTMPPacket *) malloc(RTMP_HEAD_SIZE + 16 + sps_len + pps_len); //16 bytes of fixed fields + SPS + PPS

if (packet == NULL) {

return -1;

}

memset(packet, 0, RTMP_HEAD_SIZE);

packet->m_body = (char *) packet + RTMP_HEAD_SIZE;

body = (unsigned char *) packet->m_body;

i = 0;

body[i++] = 0x17; //1:keyframe 7:AVC

body[i++] = 0x00; // AVC sequence header

body[i++] = 0x00;

body[i++] = 0x00;

body[i++] = 0x00; //composition time: 3 bytes, all 0

/*AVCDecoderConfigurationRecord*/

body[i++] = 0x01;

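body[i++] = sps[1]; //AVCProfileIndication

body[i++] = sps[2]; //profile_compatibility

body[i++] = sps[3]; //AVCLevelIndication

body[i++] = 0xff; //6 reserved bits (all 1) + lengthSizeMinusOne = 3, i.e. 4-byte NALU lengths

/*SPS*/

body[i++] = 0xe1; //3 reserved bits (all 1) + numOfSequenceParameterSets = 1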

body[i++] = (sps_len >> 8) & 0xff;

body[i++] = sps_len & 0xff;

/*sps data*/

memcpy(&body[i], sps, sps_len);

i += sps_len;

/*PPS*/

body[i++] = 0x01; //numOfPictureParameterSets = 1

/*pps data length*/

body[i++] = (pps_len >> 8) & 0xff;

body[i++] = pps_len & 0xff;

memcpy(&body[i], pps, pps_len);

i += pps_len;

packet->m_packetType = RTMP_PACKET_TYPE_VIDEO;

packet->m_nBodySize = i;

packet->m_nChannel = 0x04;

packet->m_nTimeStamp = 0;

packet->m_hasAbsTimestamp = 0;

packet->m_headerType = RTMP_PACKET_SIZE_MEDIUM;

packet->m_nInfoField2 = rtmp->m_stream_id;

/*傳送*/

if (RTMP_IsConnected(rtmp)) {

RTMP_SendPacket(rtmp, packet, TRUE);

}

free(packet);

return 0;

}

//The start code of SPS and PPS is always 00 00 00 01; other frames may use 00 00 00 01 or 00 00 01

int send_rtmp_video(unsigned char *buf, int len, long time) {

int type;

long timeOffset;

timeOffset = time - start_time;/*start_time is the timestamp at which the stream started*/

/*strip the start code*/

if (buf[2] == 0x00) {/*00 00 00 01*/

buf += 4;

len -= 4;

} else if (buf[2] == 0x01) {

buf += 3;

len -= 3;

}

type = buf[0] & 0x1f;

packet = (RTMPPacket *) malloc(RTMP_HEAD_SIZE + len + 9);

memset(packet, 0, RTMP_HEAD_SIZE);

packet->m_body = (char *) packet + RTMP_HEAD_SIZE;

packet->m_nBodySize = len + 9;

/* send video packet*/

body = (unsigned char *) packet->m_body;

memset(body, 0, len + 9);

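/*frame type + codec id: 0x27 = inter frame, AVC*/

body[0] = 0x27;

if (type == NAL_SLICE_IDR) {

body[0] = 0x17; //0x17 = key frame, AVC

}

body[1] = 0x01;/*AVCPacketType 1: NAL unit; body[2..4] is the composition time (0)*/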

body[2] = 0x00;

body[3] = 0x00;

body[4] = 0x00;

body[5] = (len >> 24) & 0xff;

body[6] = (len >> 16) & 0xff;

body[7] = (len >> 8) & 0xff;

body[8] = (len) & 0xff;

/*copy data*/

memcpy(&body[9], buf, len);

packet->m_hasAbsTimestamp = 0;

packet->m_packetType = RTMP_PACKET_TYPE_VIDEO;

packet->m_nInfoField2 = rtmp->m_stream_id;

packet->m_nChannel = 0x04;

packet->m_headerType = RTMP_PACKET_SIZE_LARGE;

packet->m_nTimeStamp = timeOffset;

if (RTMP_IsConnected(rtmp)) {

RTMP_SendPacket(rtmp, packet, TRUE);

}

free(packet);

return 0;

}

JNIEXPORT jint JNICALL

Java_com_blueberry_hellortmp_Rtmp_stop(JNIEnv *env, jclass type) {

stop();

return 0;

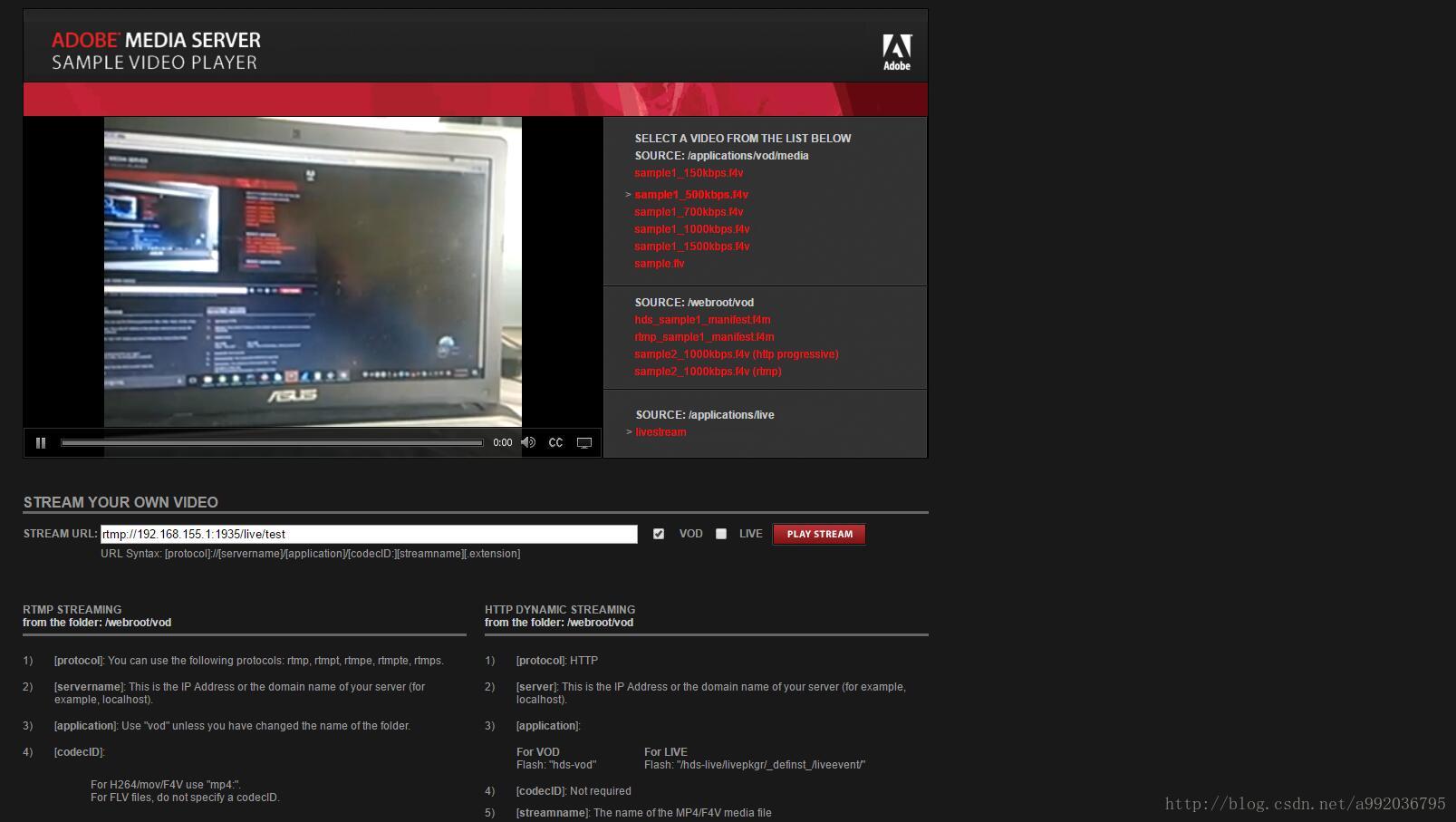

}可以安裝Adobe Media Server進行觀看