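Using and Calling TensorFlow Trained Models from Android and Java

Environment: Windows 7

When we start learning to program, the first thing we usually do is print "Hello World". Just as programming has Hello World as its starting point, machine learning has MNIST.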

MNIST是一個入門級的計算機視覺資料集,它包含各種手寫數字圖片:

它也包含每一張圖片對應的標籤,告訴我們這個是數字幾。比如,上面這四張圖片的標籤分別是5,0,4,1。

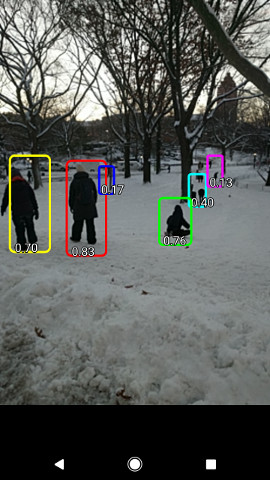

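In this post we take a model that has already been trained with TensorFlow and call it from Android.

TensorFlow models are usually trained with the Python API. The trained model is saved as a binary pb file (a GraphDef protocol buffer) that carries the trained model data.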

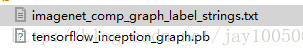

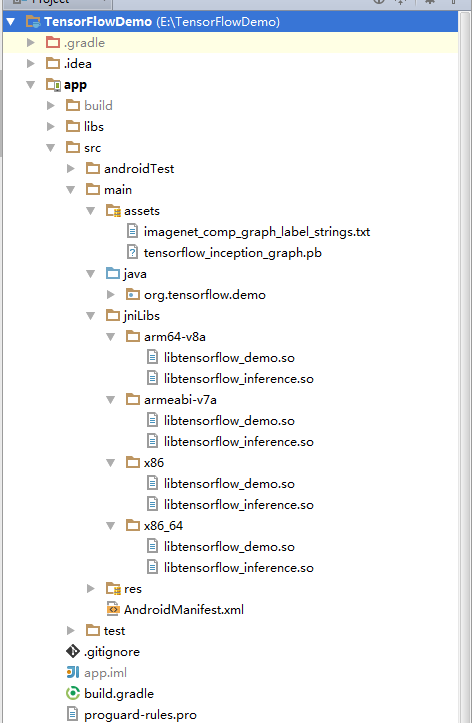

It contains two files:

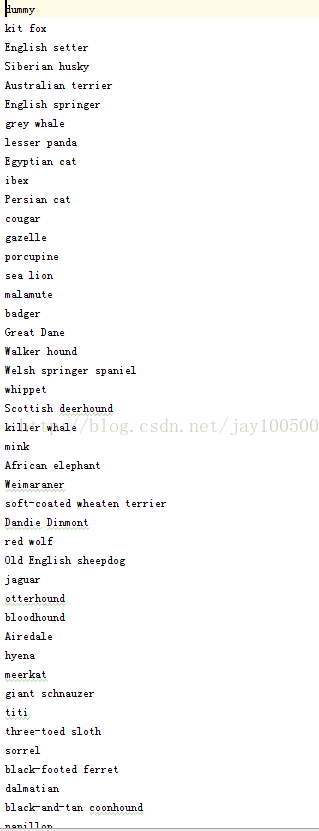

The first file, a txt file, lists the things this trained pb model can recognize.

The second file, the pb file, is the trained model itself, about 51.3 MB in size.
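To make the role of the txt file concrete: the demo classifier shown later reads it line by line and uses line i as the name of output class i. The sketch below reproduces only that step in plain Java. The class name `LabelFileSketch`, the method `readLabels`, and the sample labels are assumptions made for this example and are not part of the demo.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: reads a label file with one class name per line. */
public class LabelFileSketch {

  /** Returns the lines of the source in order; line i is the label of class i. */
  public static List<String> readLabels(Reader source) throws IOException {
    List<String> labels = new ArrayList<>();
    // try-with-resources closes the reader even if readLine() throws.
    try (BufferedReader br = new BufferedReader(source)) {
      String line;
      while ((line = br.readLine()) != null) {
        labels.add(line);
      }
    }
    return labels;
  }

  public static void main(String[] args) throws IOException {
    // Made-up labels standing in for the contents of the txt file.
    List<String> labels = readLabels(new StringReader("daisy\nrose\ntulip\n"));
    System.out.println(labels.size() + " labels, class 1 = " + labels.get(1));
  }
}
```

On Android the `Reader` would typically wrap the stream returned by `AssetManager.open(...)`, as the demo does; a `StringReader` is used here only so the sketch runs anywhere.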

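Next, we call the API from Android or Java to use this trained model for image recognition.

To use it on Android, a native .so library has to be compiled, because the Java code calls into native TensorFlow code.

The JNI code is also included in the official demo.

The AAR library that exposes the TensorFlow API to Android can be referenced in Gradle: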

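compile 'org.tensorflow:tensorflow-android:+'

Basic structure:

A basic API call that loads a trained model looks roughly like this:

TensorFlowInferenceInterface tfi = new TensorFlowInferenceInterface("F:/tf_mode/output_graph.pb"

The main classes are TensorFlowInferenceInterface and Operation.

Next, here is the class from the official demo that makes these calls: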

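This class reads the trained model from the Android assets directory, as the following line shows:

c.inferenceInterface = new TensorFlowInferenceInterface(assetManager, modelFilename);

You can change this reference to match where your own trained pb file is actually stored.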
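As a side note on model locations: the demo marks asset files with the prefix `file:///android_asset/` and strips it from the label file name with `split(...)[1]`, which fails if the prefix is missing. If your files may live either in assets or elsewhere on storage, one possible approach is to check for the prefix first. This is only a sketch; `ModelPathSketch`, its methods, and the example paths are hypothetical and not part of the TensorFlow library.

```java
/** Hypothetical helper that separates asset references from ordinary file paths. */
public class ModelPathSketch {

  // Prefix the demo uses to mark files that live in the app's assets directory.
  static final String ASSET_PREFIX = "file:///android_asset/";

  /** True if the path points into the Android assets directory. */
  public static boolean isAsset(String path) {
    return path.startsWith(ASSET_PREFIX);
  }

  /** Strips the asset prefix if present; other paths are returned unchanged. */
  public static String stripAssetPrefix(String path) {
    return isAsset(path) ? path.substring(ASSET_PREFIX.length()) : path;
  }

  public static void main(String[] args) {
    // Example paths; the file names are made up for illustration.
    String[] paths = {"file:///android_asset/my_model.pb", "/sdcard/models/my_model.pb"};
    for (String p : paths) {
      System.out.println((isAsset(p) ? "asset: " : "file:  ") + stripAssetPrefix(p));
    }
  }
}
```

With a check like this, the caller can decide whether to open the name through the asset manager or as a regular file.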

/* Copyright 2016 The TensorFlow Authors. All Rights Reserved.

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

==============================================================================*/

package org.tensorflow.demo;

import android.content.res.AssetManager;

import android.graphics.Bitmap;

import android.os.Trace;

import android.util.Log;

import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStreamReader;

import java.util.ArrayList;

import java.util.Comparator;

import java.util.List;

import java.util.PriorityQueue;

import java.util.Vector;

import org.tensorflow.Operation;

import org.tensorflow.contrib.android.TensorFlowInferenceInterface;

/** A classifier specialized to label images using TensorFlow. */

public class TensorFlowImageClassifier implements Classifier {

private static final String TAG = "TensorFlowImageClassifier";

// Only return this many results with at least this confidence.

private static final int MAX_RESULTS = 3;

private static final float THRESHOLD = 0.1f;

// Config values.

private String inputName;

private String outputName;

private int inputSize;

private int imageMean;

private float imageStd;

// Pre-allocated buffers.

private Vector<String> labels = new Vector<String>();

private int[] intValues;

private float[] floatValues;

private float[] outputs;

private String[] outputNames;

private boolean logStats = false;

private TensorFlowInferenceInterface inferenceInterface;

private TensorFlowImageClassifier() {}

/**

* Initializes a native TensorFlow session for classifying images.

*

* @param assetManager The asset manager to be used to load assets.

* @param modelFilename The filepath of the model GraphDef protocol buffer.

* @param labelFilename The filepath of label file for classes.

* @param inputSize The input size. A square image of inputSize x inputSize is assumed.

* @param imageMean The assumed mean of the image values.

* @param imageStd The assumed std of the image values.

* @param inputName The label of the image input node.

* @param outputName The label of the output node.

* @throws IOException

*/

public static Classifier create(

AssetManager assetManager,

String modelFilename,

String labelFilename,

int inputSize,

int imageMean,

float imageStd,

String inputName,

String outputName) {

TensorFlowImageClassifier c = new TensorFlowImageClassifier();

c.inputName = inputName;

c.outputName = outputName;

// Read the label names into memory.

// TODO(andrewharp): make this handle non-assets.

String actualFilename = labelFilename.split("file:///android_asset/")[1];

Log.i(TAG, "Reading labels from: " + actualFilename);

BufferedReader br = null;

try {

br = new BufferedReader(new InputStreamReader(assetManager.open(actualFilename)));

String line;

while ((line = br.readLine()) != null) {

c.labels.add(line);

}

br.close();

} catch (IOException e) {

throw new RuntimeException("Problem reading label file!" , e);

}

c.inferenceInterface = new TensorFlowInferenceInterface(assetManager, modelFilename);

// The shape of the output is [N, NUM_CLASSES], where N is the batch size.

final Operation operation = c.inferenceInterface.graphOperation(outputName);

final int numClasses = (int) operation.output(0).shape().size(1);

Log.i(TAG, "Read " + c.labels.size() + " labels, output layer size is " + numClasses);

// Ideally, inputSize could have been retrieved from the shape of the input operation. Alas,

// the placeholder node for input in the graphdef typically used does not specify a shape, so it

// must be passed in as a parameter.

c.inputSize = inputSize;

c.imageMean = imageMean;

c.imageStd = imageStd;

// Pre-allocate buffers.

c.outputNames = new String[] {outputName};

c.intValues = new int[inputSize * inputSize];

c.floatValues = new float[inputSize * inputSize * 3];

c.outputs = new float[numClasses];

return c;

}

@Override

public List<Recognition> recognizeImage(final Bitmap bitmap) {

// Log this method so that it can be analyzed with systrace.

Trace.beginSection("recognizeImage");

Trace.beginSection("preprocessBitmap");

// Preprocess the image data from 0-255 int to normalized float based

// on the provided parameters.

bitmap.getPixels(intValues, 0, bitmap.getWidth(), 0, 0, bitmap.getWidth(), bitmap.getHeight());

for (int i = 0; i < intValues.length; ++i) {

final int val = intValues[i];

floatValues[i * 3 + 0] = (((val >> 16) & 0xFF) - imageMean) / imageStd;

floatValues[i * 3 + 1] = (((val >> 8) & 0xFF) - imageMean) / imageStd;

floatValues[i * 3 + 2] = ((val & 0xFF) - imageMean) / imageStd;

}

Trace.endSection();

// Copy the input data into TensorFlow.

Trace.beginSection("feed");

inferenceInterface.feed(inputName, floatValues, 1, inputSize, inputSize, 3);

Trace.endSection();

// Run the inference call.

Trace.beginSection("run");

inferenceInterface.run(outputNames, logStats);

Trace.endSection();

// Copy the output Tensor back into the output array.

Trace.beginSection("fetch");

inferenceInterface.fetch(outputName, outputs);

Trace.endSection();

// Find the best classifications.

PriorityQueue<Recognition> pq =

new PriorityQueue<Recognition>(

3,

new Comparator<Recognition>() {

@Override

public int compare(Recognition lhs, Recognition rhs) {

// Intentionally reversed to put high confidence at the head of the queue.

return Float.compare(rhs.getConfidence(), lhs.getConfidence());

}

});

for (int i = 0; i < outputs.length; ++i) {

if (outputs[i] > THRESHOLD) {

pq.add(

new Recognition(

"" + i, labels.size() > i ? labels.get(i) : "unknown", outputs[i], null));

}

}

final ArrayList<Recognition> recognitions = new ArrayList<Recognition>();

int recognitionsSize = Math.min(pq.size(), MAX_RESULTS);

for (int i = 0; i < recognitionsSize; ++i) {

recognitions.add(pq.poll());

}

Trace.endSection(); // "recognizeImage"

return recognitions;

}

@Override

public void enableStatLogging(boolean logStats) {

this.logStats = logStats;

}

@Override

public String getStatString() {

return inferenceInterface.getStatString();

}

@Override

public void close() {

inferenceInterface.close();

}

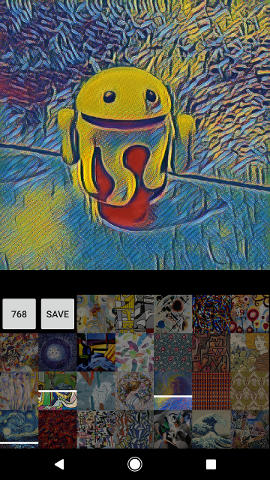

}新版本的api改了下,那我給出舊版本的Android Studio版本的Demo。

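This is an older demo from an overseas developer who precompiled the .so library. You can use it as a reference; the usage is much the same as in the newer version.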
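Returning to `recognizeImage` in the classifier listing: two of its steps, converting packed ARGB pixels to normalized floats and picking the best results with a priority queue, are plain Java and can be tried without TensorFlow or Android. The following is a minimal sketch under that assumption. The class name `ClassifierStepsSketch`, the method names, and the sample values are made up for illustration, and it returns class indices rather than the demo's `Recognition` objects.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.PriorityQueue;

/** Sketch of the two pure-Java steps around inference: normalization and top-K selection. */
public class ClassifierStepsSketch {

  /** Unpacks ARGB pixels into interleaved R, G, B floats, each scaled as (v - mean) / std. */
  public static float[] normalize(int[] argbPixels, int mean, float std) {
    float[] out = new float[argbPixels.length * 3];
    for (int i = 0; i < argbPixels.length; ++i) {
      int val = argbPixels[i];
      out[i * 3] = (((val >> 16) & 0xFF) - mean) / std;     // red channel
      out[i * 3 + 1] = (((val >> 8) & 0xFF) - mean) / std;  // green channel
      out[i * 3 + 2] = ((val & 0xFF) - mean) / std;         // blue channel
    }
    return out;
  }

  /** Returns indices of up to maxResults scores above threshold, highest score first. */
  public static List<Integer> topK(float[] scores, int maxResults, float threshold) {
    // The comparator is reversed so the highest score sits at the head of the queue.
    PriorityQueue<Integer> pq =
        new PriorityQueue<>(Math.max(1, maxResults), (a, b) -> Float.compare(scores[b], scores[a]));
    for (int i = 0; i < scores.length; ++i) {
      if (scores[i] > threshold) {
        pq.add(i);
      }
    }
    List<Integer> best = new ArrayList<>();
    int n = Math.min(pq.size(), maxResults);
    for (int i = 0; i < n; ++i) {
      best.add(pq.poll());
    }
    return best;
  }

  public static void main(String[] args) {
    // One opaque pixel with R=255, G=128, B=0; mean and std of 128 are example values.
    float[] rgb = normalize(new int[] {0xFFFF8000}, 128, 128f);
    System.out.println(rgb[0] + " " + rgb[1] + " " + rgb[2]);
    // Made-up scores for four classes.
    System.out.println(topK(new float[] {0.05f, 0.6f, 0.2f, 0.15f}, 3, 0.1f));
  }
}
```

The mean and std must match whatever preprocessing the model was trained with, which is why the demo takes them as parameters instead of hard-coding them.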