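Kafka Study Notes, Part 2: Working with Kafka from Python

1. Preparation

The most commonly used library for working with Kafka from Python is currently kafka-python. Installing it depends on the setuptools and six libraries, so each of these packages is downloaded in turn below.

1. Download setuptools

Opening the setuptools download URL brings up a download dialog. Choose Save File and click OK to download setuptools-0.6c11-py2.6.egg.

2. Download kafka-python

Open http://pypi.python.org, type kafka-python into the search box, and click [search] to reach the package page. The page lists the supported Python versions, but in testing this release also ran correctly under Python 2.6.6.

Click Download to open the download page.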


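Select kafka-python-1.3.5.tar.gz (md5) to start the download.

3. Download six

Open http://pypi.python.org, type six into the search box, and click [search] to reach the results page.

Open six 1.11.0.

Click the link marked with the red box to download six-1.11.0.tar.gz.

2. Install the Python libraries

The packages were downloaded in the previous step; now install them. First create the directory /opt/package/python_lib and upload the package files there.

1. Install setuptools

Run sh setuptools-0.6c11-py2.6.egg

The command's output reports that setuptools was installed successfully.

2. Install six

1) Unpack

Run tar -zxvf six-1.11.0.tar.gz

This creates the six-1.11.0 directory.

2) Install

cd six-1.11.0

ll

Then run python setup.py install

3. Install kafka-python

Run tar -zxvf kafka-python-1.3.4.tar.gz to unpack the archive. This creates the kafka-python-1.3.4 directory; change into it.

Run python setup.py install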

[kafka-python-1.3.4]# python setup.py install

running install

running bdist_egg

running egg_info

creating kafka_python.egg-info

writing kafka_python.egg-info/PKG-INFO

writing top-level names to kafka_python.egg-info/top_level.txt

writing dependency_links to kafka_python.egg-info/dependency_links.txt

writing manifest file 'kafka_python.egg-info/SOURCES.txt'

reading manifest file 'kafka_python.egg-info/SOURCES.txt'

reading manifest template 'MANIFEST.in'

writing manifest file 'kafka_python.egg-info/SOURCES.txt'

installing library code to build/bdist.linux-x86_64/egg

running install_lib

running build_py

creating build

creating build/lib

creating build/lib/kafka

copying kafka/future.py -> build/lib/kafka

copying kafka/client_async.py -> build/lib/kafka

copying kafka/errors.py -> build/lib/kafka

copying kafka/__init__.py -> build/lib/kafka

copying kafka/structs.py -> build/lib/kafka

copying kafka/context.py -> build/lib/kafka

copying kafka/cluster.py -> build/lib/kafka

copying kafka/conn.py -> build/lib/kafka

copying kafka/version.py -> build/lib/kafka

copying kafka/client.py -> build/lib/kafka

copying kafka/codec.py -> build/lib/kafka

copying kafka/util.py -> build/lib/kafka

copying kafka/common.py -> build/lib/kafka

creating build/lib/kafka/serializer

copying kafka/serializer/__init__.py -> build/lib/kafka/serializer

copying kafka/serializer/abstract.py -> build/lib/kafka/serializer

creating build/lib/kafka/partitioner

copying kafka/partitioner/hashed.py -> build/lib/kafka/partitioner

copying kafka/partitioner/roundrobin.py -> build/lib/kafka/partitioner

copying kafka/partitioner/__init__.py -> build/lib/kafka/partitioner

copying kafka/partitioner/base.py -> build/lib/kafka/partitioner

copying kafka/partitioner/default.py -> build/lib/kafka/partitioner

creating build/lib/kafka/consumer

copying kafka/consumer/__init__.py -> build/lib/kafka/consumer

copying kafka/consumer/base.py -> build/lib/kafka/consumer

copying kafka/consumer/group.py -> build/lib/kafka/consumer

copying kafka/consumer/simple.py -> build/lib/kafka/consumer

copying kafka/consumer/subscription_state.py -> build/lib/kafka/consumer

copying kafka/consumer/fetcher.py -> build/lib/kafka/consumer

copying kafka/consumer/multiprocess.py -> build/lib/kafka/consumer

creating build/lib/kafka/producer

copying kafka/producer/future.py -> build/lib/kafka/producer

copying kafka/producer/__init__.py -> build/lib/kafka/producer

copying kafka/producer/buffer.py -> build/lib/kafka/producer

copying kafka/producer/base.py -> build/lib/kafka/producer

copying kafka/producer/record_accumulator.py -> build/lib/kafka/producer

copying kafka/producer/simple.py -> build/lib/kafka/producer

copying kafka/producer/kafka.py -> build/lib/kafka/producer

copying kafka/producer/sender.py -> build/lib/kafka/producer

copying kafka/producer/keyed.py -> build/lib/kafka/producer

creating build/lib/kafka/vendor

copying kafka/vendor/socketpair.py -> build/lib/kafka/vendor

copying kafka/vendor/__init__.py -> build/lib/kafka/vendor

copying kafka/vendor/six.py -> build/lib/kafka/vendor

copying kafka/vendor/selectors34.py -> build/lib/kafka/vendor

creating build/lib/kafka/protocol

copying kafka/protocol/legacy.py -> build/lib/kafka/protocol

copying kafka/protocol/pickle.py -> build/lib/kafka/protocol

copying kafka/protocol/admin.py -> build/lib/kafka/protocol

copying kafka/protocol/struct.py -> build/lib/kafka/protocol

copying kafka/protocol/message.py -> build/lib/kafka/protocol

copying kafka/protocol/__init__.py -> build/lib/kafka/protocol

copying kafka/protocol/offset.py -> build/lib/kafka/protocol

copying kafka/protocol/metadata.py -> build/lib/kafka/protocol

copying kafka/protocol/fetch.py -> build/lib/kafka/protocol

copying kafka/protocol/commit.py -> build/lib/kafka/protocol

copying kafka/protocol/group.py -> build/lib/kafka/protocol

copying kafka/protocol/abstract.py -> build/lib/kafka/protocol

copying kafka/protocol/produce.py -> build/lib/kafka/protocol

copying kafka/protocol/api.py -> build/lib/kafka/protocol

copying kafka/protocol/types.py -> build/lib/kafka/protocol

creating build/lib/kafka/metrics

copying kafka/metrics/quota.py -> build/lib/kafka/metrics

copying kafka/metrics/kafka_metric.py -> build/lib/kafka/metrics

copying kafka/metrics/measurable.py -> build/lib/kafka/metrics

copying kafka/metrics/__init__.py -> build/lib/kafka/metrics

copying kafka/metrics/metric_name.py -> build/lib/kafka/metrics

copying kafka/metrics/measurable_stat.py -> build/lib/kafka/metrics

copying kafka/metrics/dict_reporter.py -> build/lib/kafka/metrics

copying kafka/metrics/stat.py -> build/lib/kafka/metrics

copying kafka/metrics/compound_stat.py -> build/lib/kafka/metrics

copying kafka/metrics/metrics.py -> build/lib/kafka/metrics

copying kafka/metrics/metric_config.py -> build/lib/kafka/metrics

copying kafka/metrics/metrics_reporter.py -> build/lib/kafka/metrics

creating build/lib/kafka/coordinator

copying kafka/coordinator/__init__.py -> build/lib/kafka/coordinator

copying kafka/coordinator/base.py -> build/lib/kafka/coordinator

copying kafka/coordinator/protocol.py -> build/lib/kafka/coordinator

copying kafka/coordinator/heartbeat.py -> build/lib/kafka/coordinator

copying kafka/coordinator/consumer.py -> build/lib/kafka/coordinator

creating build/lib/kafka/metrics/stats

copying kafka/metrics/stats/rate.py -> build/lib/kafka/metrics/stats

copying kafka/metrics/stats/percentile.py -> build/lib/kafka/metrics/stats

copying kafka/metrics/stats/min_stat.py -> build/lib/kafka/metrics/stats

copying kafka/metrics/stats/sampled_stat.py -> build/lib/kafka/metrics/stats

copying kafka/metrics/stats/__init__.py -> build/lib/kafka/metrics/stats

copying kafka/metrics/stats/count.py -> build/lib/kafka/metrics/stats

copying kafka/metrics/stats/histogram.py -> build/lib/kafka/metrics/stats

copying kafka/metrics/stats/max_stat.py -> build/lib/kafka/metrics/stats

copying kafka/metrics/stats/sensor.py -> build/lib/kafka/metrics/stats

copying kafka/metrics/stats/total.py -> build/lib/kafka/metrics/stats

copying kafka/metrics/stats/percentiles.py -> build/lib/kafka/metrics/stats

copying kafka/metrics/stats/avg.py -> build/lib/kafka/metrics/stats

creating build/lib/kafka/coordinator/assignors

copying kafka/coordinator/assignors/roundrobin.py -> build/lib/kafka/coordinator/assignors

copying kafka/coordinator/assignors/__init__.py -> build/lib/kafka/coordinator/assignors

copying kafka/coordinator/assignors/abstract.py -> build/lib/kafka/coordinator/assignors

copying kafka/coordinator/assignors/range.py -> build/lib/kafka/coordinator/assignors

creating build/bdist.linux-x86_64

creating build/bdist.linux-x86_64/egg

creating build/bdist.linux-x86_64/egg/kafka

creating build/bdist.linux-x86_64/egg/kafka/serializer

copying build/lib/kafka/serializer/__init__.py -> build/bdist.linux-x86_64/egg/kafka/serializer

copying build/lib/kafka/serializer/abstract.py -> build/bdist.linux-x86_64/egg/kafka/serializer

creating build/bdist.linux-x86_64/egg/kafka/partitioner

copying build/lib/kafka/partitioner/hashed.py -> build/bdist.linux-x86_64/egg/kafka/partitioner

copying build/lib/kafka/partitioner/roundrobin.py -> build/bdist.linux-x86_64/egg/kafka/partitioner

copying build/lib/kafka/partitioner/__init__.py -> build/bdist.linux-x86_64/egg/kafka/partitioner

copying build/lib/kafka/partitioner/base.py -> build/bdist.linux-x86_64/egg/kafka/partitioner

copying build/lib/kafka/partitioner/default.py -> build/bdist.linux-x86_64/egg/kafka/partitioner

copying build/lib/kafka/future.py -> build/bdist.linux-x86_64/egg/kafka

creating build/bdist.linux-x86_64/egg/kafka/consumer

copying build/lib/kafka/consumer/__init__.py -> build/bdist.linux-x86_64/egg/kafka/consumer

copying build/lib/kafka/consumer/base.py -> build/bdist.linux-x86_64/egg/kafka/consumer

copying build/lib/kafka/consumer/group.py -> build/bdist.linux-x86_64/egg/kafka/consumer

copying build/lib/kafka/consumer/simple.py -> build/bdist.linux-x86_64/egg/kafka/consumer

copying build/lib/kafka/consumer/subscription_state.py -> build/bdist.linux-x86_64/egg/kafka/consumer

copying build/lib/kafka/consumer/fetcher.py -> build/bdist.linux-x86_64/egg/kafka/consumer

copying build/lib/kafka/consumer/multiprocess.py -> build/bdist.linux-x86_64/egg/kafka/consumer

creating build/bdist.linux-x86_64/egg/kafka/producer

copying build/lib/kafka/producer/future.py -> build/bdist.linux-x86_64/egg/kafka/producer

copying build/lib/kafka/producer/__init__.py -> build/bdist.linux-x86_64/egg/kafka/producer

copying build/lib/kafka/producer/buffer.py -> build/bdist.linux-x86_64/egg/kafka/producer

copying build/lib/kafka/producer/base.py -> build/bdist.linux-x86_64/egg/kafka/producer

copying build/lib/kafka/producer/record_accumulator.py -> build/bdist.linux-x86_64/egg/kafka/producer

copying build/lib/kafka/producer/simple.py -> build/bdist.linux-x86_64/egg/kafka/producer

copying build/lib/kafka/producer/kafka.py -> build/bdist.linux-x86_64/egg/kafka/producer

copying build/lib/kafka/producer/sender.py -> build/bdist.linux-x86_64/egg/kafka/producer

copying build/lib/kafka/producer/keyed.py -> build/bdist.linux-x86_64/egg/kafka/producer

copying build/lib/kafka/client_async.py -> build/bdist.linux-x86_64/egg/kafka

copying build/lib/kafka/errors.py -> build/bdist.linux-x86_64/egg/kafka

copying build/lib/kafka/__init__.py -> build/bdist.linux-x86_64/egg/kafka

creating build/bdist.linux-x86_64/egg/kafka/vendor

copying build/lib/kafka/vendor/socketpair.py -> build/bdist.linux-x86_64/egg/kafka/vendor

copying build/lib/kafka/vendor/__init__.py -> build/bdist.linux-x86_64/egg/kafka/vendor

copying build/lib/kafka/vendor/six.py -> build/bdist.linux-x86_64/egg/kafka/vendor

copying build/lib/kafka/vendor/selectors34.py -> build/bdist.linux-x86_64/egg/kafka/vendor

copying build/lib/kafka/structs.py -> build/bdist.linux-x86_64/egg/kafka

creating build/bdist.linux-x86_64/egg/kafka/protocol

copying build/lib/kafka/protocol/legacy.py -> build/bdist.linux-x86_64/egg/kafka/protocol

copying build/lib/kafka/protocol/pickle.py -> build/bdist.linux-x86_64/egg/kafka/protocol

copying build/lib/kafka/protocol/admin.py -> build/bdist.linux-x86_64/egg/kafka/protocol

copying build/lib/kafka/protocol/struct.py -> build/bdist.linux-x86_64/egg/kafka/protocol

copying build/lib/kafka/protocol/message.py -> build/bdist.linux-x86_64/egg/kafka/protocol

copying build/lib/kafka/protocol/__init__.py -> build/bdist.linux-x86_64/egg/kafka/protocol

copying build/lib/kafka/protocol/offset.py -> build/bdist.linux-x86_64/egg/kafka/protocol

copying build/lib/kafka/protocol/metadata.py -> build/bdist.linux-x86_64/egg/kafka/protocol

copying build/lib/kafka/protocol/fetch.py -> build/bdist.linux-x86_64/egg/kafka/protocol

copying build/lib/kafka/protocol/commit.py -> build/bdist.linux-x86_64/egg/kafka/protocol

copying build/lib/kafka/protocol/group.py -> build/bdist.linux-x86_64/egg/kafka/protocol

copying build/lib/kafka/protocol/abstract.py -> build/bdist.linux-x86_64/egg/kafka/protocol

copying build/lib/kafka/protocol/produce.py -> build/bdist.linux-x86_64/egg/kafka/protocol

copying build/lib/kafka/protocol/api.py -> build/bdist.linux-x86_64/egg/kafka/protocol

copying build/lib/kafka/protocol/types.py -> build/bdist.linux-x86_64/egg/kafka/protocol

copying build/lib/kafka/context.py -> build/bdist.linux-x86_64/egg/kafka

copying build/lib/kafka/cluster.py -> build/bdist.linux-x86_64/egg/kafka

copying build/lib/kafka/conn.py -> build/bdist.linux-x86_64/egg/kafka

creating build/bdist.linux-x86_64/egg/kafka/metrics

copying build/lib/kafka/metrics/quota.py -> build/bdist.linux-x86_64/egg/kafka/metrics

copying build/lib/kafka/metrics/kafka_metric.py -> build/bdist.linux-x86_64/egg/kafka/metrics

copying build/lib/kafka/metrics/measurable.py -> build/bdist.linux-x86_64/egg/kafka/metrics

copying build/lib/kafka/metrics/__init__.py -> build/bdist.linux-x86_64/egg/kafka/metrics

copying build/lib/kafka/metrics/metric_name.py -> build/bdist.linux-x86_64/egg/kafka/metrics

creating build/bdist.linux-x86_64/egg/kafka/metrics/stats

copying build/lib/kafka/metrics/stats/rate.py -> build/bdist.linux-x86_64/egg/kafka/metrics/stats

copying build/lib/kafka/metrics/stats/percentile.py -> build/bdist.linux-x86_64/egg/kafka/metrics/stats

copying build/lib/kafka/metrics/stats/min_stat.py -> build/bdist.linux-x86_64/egg/kafka/metrics/stats

copying build/lib/kafka/metrics/stats/sampled_stat.py -> build/bdist.linux-x86_64/egg/kafka/metrics/stats

copying build/lib/kafka/metrics/stats/__init__.py -> build/bdist.linux-x86_64/egg/kafka/metrics/stats

copying build/lib/kafka/metrics/stats/count.py -> build/bdist.linux-x86_64/egg/kafka/metrics/stats

copying build/lib/kafka/metrics/stats/histogram.py -> build/bdist.linux-x86_64/egg/kafka/metrics/stats

copying build/lib/kafka/metrics/stats/max_stat.py -> build/bdist.linux-x86_64/egg/kafka/metrics/stats

copying build/lib/kafka/metrics/stats/sensor.py -> build/bdist.linux-x86_64/egg/kafka/metrics/stats

copying build/lib/kafka/metrics/stats/total.py -> build/bdist.linux-x86_64/egg/kafka/metrics/stats

copying build/lib/kafka/metrics/stats/percentiles.py -> build/bdist.linux-x86_64/egg/kafka/metrics/stats

copying build/lib/kafka/metrics/stats/avg.py -> build/bdist.linux-x86_64/egg/kafka/metrics/stats

copying build/lib/kafka/metrics/measurable_stat.py -> build/bdist.linux-x86_64/egg/kafka/metrics

copying build/lib/kafka/metrics/dict_reporter.py -> build/bdist.linux-x86_64/egg/kafka/metrics

copying build/lib/kafka/metrics/stat.py -> build/bdist.linux-x86_64/egg/kafka/metrics

copying build/lib/kafka/metrics/compound_stat.py -> build/bdist.linux-x86_64/egg/kafka/metrics

copying build/lib/kafka/metrics/metrics.py -> build/bdist.linux-x86_64/egg/kafka/metrics

copying build/lib/kafka/metrics/metric_config.py -> build/bdist.linux-x86_64/egg/kafka/metrics

copying build/lib/kafka/metrics/metrics_reporter.py -> build/bdist.linux-x86_64/egg/kafka/metrics

copying build/lib/kafka/version.py -> build/bdist.linux-x86_64/egg/kafka

copying build/lib/kafka/client.py -> build/bdist.linux-x86_64/egg/kafka

copying build/lib/kafka/codec.py -> build/bdist.linux-x86_64/egg/kafka

copying build/lib/kafka/util.py -> build/bdist.linux-x86_64/egg/kafka

creating build/bdist.linux-x86_64/egg/kafka/coordinator

copying build/lib/kafka/coordinator/__init__.py -> build/bdist.linux-x86_64/egg/kafka/coordinator

copying build/lib/kafka/coordinator/base.py -> build/bdist.linux-x86_64/egg/kafka/coordinator

copying build/lib/kafka/coordinator/protocol.py -> build/bdist.linux-x86_64/egg/kafka/coordinator

creating build/bdist.linux-x86_64/egg/kafka/coordinator/assignors

copying build/lib/kafka/coordinator/assignors/roundrobin.py -> build/bdist.linux-x86_64/egg/kafka/coordinator/assignors

copying build/lib/kafka/coordinator/assignors/__init__.py -> build/bdist.linux-x86_64/egg/kafka/coordinator/assignors

copying build/lib/kafka/coordinator/assignors/abstract.py -> build/bdist.linux-x86_64/egg/kafka/coordinator/assignors

copying build/lib/kafka/coordinator/assignors/range.py -> build/bdist.linux-x86_64/egg/kafka/coordinator/assignors

copying build/lib/kafka/coordinator/heartbeat.py -> build/bdist.linux-x86_64/egg/kafka/coordinator

copying build/lib/kafka/coordinator/consumer.py -> build/bdist.linux-x86_64/egg/kafka/coordinator

copying build/lib/kafka/common.py -> build/bdist.linux-x86_64/egg/kafka

byte-compiling build/bdist.linux-x86_64/egg/kafka/serializer/__init__.py to __init__.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/serializer/abstract.py to abstract.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/partitioner/hashed.py to hashed.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/partitioner/roundrobin.py to roundrobin.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/partitioner/__init__.py to __init__.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/partitioner/base.py to base.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/partitioner/default.py to default.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/future.py to future.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/consumer/__init__.py to __init__.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/consumer/base.py to base.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/consumer/group.py to group.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/consumer/simple.py to simple.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/consumer/subscription_state.py to subscription_state.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/consumer/fetcher.py to fetcher.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/consumer/multiprocess.py to multiprocess.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/producer/future.py to future.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/producer/__init__.py to __init__.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/producer/buffer.py to buffer.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/producer/base.py to base.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/producer/record_accumulator.py to record_accumulator.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/producer/simple.py to simple.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/producer/kafka.py to kafka.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/producer/sender.py to sender.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/producer/keyed.py to keyed.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/client_async.py to client_async.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/errors.py to errors.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/__init__.py to __init__.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/vendor/socketpair.py to socketpair.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/vendor/__init__.py to __init__.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/vendor/six.py to six.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/vendor/selectors34.py to selectors34.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/structs.py to structs.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/protocol/legacy.py to legacy.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/protocol/pickle.py to pickle.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/protocol/admin.py to admin.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/protocol/struct.py to struct.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/protocol/message.py to message.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/protocol/__init__.py to __init__.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/protocol/offset.py to offset.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/protocol/metadata.py to metadata.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/protocol/fetch.py to fetch.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/protocol/commit.py to commit.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/protocol/group.py to group.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/protocol/abstract.py to abstract.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/protocol/produce.py to produce.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/protocol/api.py to api.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/protocol/types.py to types.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/context.py to context.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/cluster.py to cluster.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/conn.py to conn.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/quota.py to quota.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/kafka_metric.py to kafka_metric.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/measurable.py to measurable.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/__init__.py to __init__.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/metric_name.py to metric_name.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/stats/rate.py to rate.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/stats/percentile.py to percentile.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/stats/min_stat.py to min_stat.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/stats/sampled_stat.py to sampled_stat.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/stats/__init__.py to __init__.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/stats/count.py to count.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/stats/histogram.py to histogram.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/stats/max_stat.py to max_stat.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/stats/sensor.py to sensor.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/stats/total.py to total.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/stats/percentiles.py to percentiles.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/stats/avg.py to avg.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/measurable_stat.py to measurable_stat.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/dict_reporter.py to dict_reporter.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/stat.py to stat.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/compound_stat.py to compound_stat.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/metrics.py to metrics.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/metric_config.py to metric_config.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/metrics/metrics_reporter.py to metrics_reporter.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/version.py to version.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/client.py to client.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/codec.py to codec.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/util.py to util.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/coordinator/__init__.py to __init__.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/coordinator/base.py to base.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/coordinator/protocol.py to protocol.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/coordinator/assignors/roundrobin.py to roundrobin.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/coordinator/assignors/__init__.py to __init__.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/coordinator/assignors/abstract.py to abstract.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/coordinator/assignors/range.py to range.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/coordinator/heartbeat.py to heartbeat.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/coordinator/consumer.py to consumer.pyc

byte-compiling build/bdist.linux-x86_64/egg/kafka/common.py to common.pyc

creating build/bdist.linux-x86_64/egg/EGG-INFO

copying kafka_python.egg-info/PKG-INFO -> build/bdist.linux-x86_64/egg/EGG-INFO

copying kafka_python.egg-info/SOURCES.txt -> build/bdist.linux-x86_64/egg/EGG-INFO

copying kafka_python.egg-info/dependency_links.txt -> build/bdist.linux-x86_64/egg/EGG-INFO

copying kafka_python.egg-info/top_level.txt -> build/bdist.linux-x86_64/egg/EGG-INFO

zip_safe flag not set; analyzing archive contents...

kafka.vendor.six: module references __path__

creating dist

creating 'dist/kafka_python-1.3.4-py2.6.egg' and adding 'build/bdist.linux-x86_64/egg' to it

removing 'build/bdist.linux-x86_64/egg' (and everything under it)

Processing kafka_python-1.3.4-py2.6.egg

creating /usr/lib/python2.6/site-packages/kafka_python-1.3.4-py2.6.egg

Extracting kafka_python-1.3.4-py2.6.egg to /usr/lib/python2.6/site-packages

Adding kafka-python 1.3.4 to easy-install.pth file

Installed /usr/lib/python2.6/site-packages/kafka_python-1.3.4-py2.6.egg

Processing dependencies for kafka-python==1.3.4

Finished processing dependencies for kafka-python==1.3.4


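Next, test the installation: start python and import KafkaProducer. If no error about a missing package appears, the installation succeeded.

No error is reported, so kafka-python is installed.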
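The same check can also be scripted. The following is a minimal sketch, assuming Python 2.7 or later (importlib is not available on the Python 2.6 used above); the helper name installed_version is hypothetical and not part of kafka-python.

```python
import importlib


def installed_version(module_name):
    """Return the module's __version__, "unknown" if it has none,
    or None if the module cannot be imported."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        return None
    return getattr(module, "__version__", "unknown")


if __name__ == "__main__":
    # kafka-python installs the top-level package named "kafka".
    version = installed_version("kafka")
    if version is None:
        print("kafka-python is not importable")
    else:
        print("kafka-python version: %s" % version)
```

A return value of None corresponds to the "package not found" case described above.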

3. Write test code

Whatever language is used to work with Kafka, the work essentially revolves around two roles: Producer and Consumer.

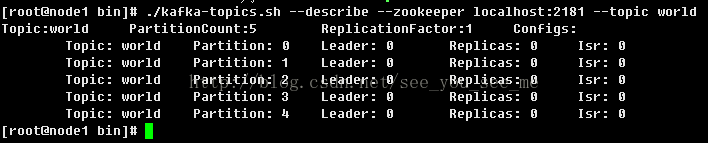

A Topic has already been created on the Kafka broker; the examples below use the Topic named world.

1. Create a Producer

1) Interactive session: plain send

[python_app]# python

Python 2.6.6 (r266:84292, Nov 22 2013, 12:16:22)

[GCC 4.4.7 20120313 (Red Hat 4.4.7-4)] on linux2

Type "help", "copyright", "credits" or "license" for more information.

>>> from kafka import KafkaProducer

>>> producer = KafkaProducer(bootstrap_servers='192.168.120.11:9092')

>>> for _ in range(100):

...     producer.send('world', b'some_message_bytes')

...

<kafka.producer.future.FutureRecordMetadata object at 0xddb5d0>

<kafka.producer.future.FutureRecordMetadata object at 0xddb750>

<kafka.producer.future.FutureRecordMetadata object at 0xddb790>

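The lines above do the following, in order:

Import KafkaProducer.

Create a Producer connected to the Broker at 192.168.120.11:9092.

Send 100 messages to the world Topic in a loop, each with the content some_message_bytes. This form of send specifies neither a Partition nor a key, so the messages are spread roughly evenly across the Topic's 5 Partitions.

For more detail, see https://kafka-python.readthedocs.io/en/master/index.html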
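Each send() call in the session returns a FutureRecordMetadata object rather than a result, because sending is asynchronous. A minimal sketch of waiting for the broker's acknowledgement follows; the helper name send_and_confirm is hypothetical, and it only assumes that the producer behaves like KafkaProducer (send() returns a future whose get(timeout=...) yields metadata with topic, partition and offset).

```python
def send_and_confirm(producer, topic, payloads, timeout=10):
    """Send each payload, then block until every send is acknowledged.

    Returns a list of (topic, partition, offset) tuples, one per payload.
    """
    futures = [producer.send(topic, payload) for payload in payloads]
    producer.flush()  # push any messages still waiting in the batch buffer
    confirmed = []
    for future in futures:
        metadata = future.get(timeout=timeout)  # raises if the send failed
        confirmed.append((metadata.topic, metadata.partition, metadata.offset))
    return confirmed


# Usage against a real broker (address taken from the session above):
#   from kafka import KafkaProducer
#   producer = KafkaProducer(bootstrap_servers='192.168.120.11:9092')
#   print(send_and_confirm(producer, 'world', [b'some_message_bytes'] * 3))
```

The returned tuples show which Partition each message landed in, which makes the distribution across Partitions directly visible.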

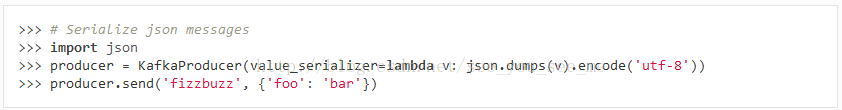

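2) Interactive session: sending a JSON string

JSON is a powerful text format in very wide use, and kafka-python supports sending JSON messages as well.

Copying the JSON example from the KafkaProducer section of https://kafka-python.readthedocs.io/en/master/index.html verbatim fails in this environment. This is not a bug in the library: the example does not pass bootstrap_servers, so the producer falls back to the default localhost:9092, and no broker is listening there.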

>>> import json

>>> producer = KafkaProducer(value_serializer=lambda v: json.dumps(v).encode('utf-8'))

Traceback (most recent call last):

  File "<stdin>", line 1, in <module>

  File "/usr/lib/python2.6/site-packages/kafka_python-1.3.4-py2.6.egg/kafka/producer/kafka.py", line 347, in __init__

    **self.config)

  File "/usr/lib/python2.6/site-packages/kafka_python-1.3.4-py2.6.egg/kafka/client_async.py", line 220, in __init__

    self.config['api_version'] = self.check_version(timeout=check_timeout)

  File "/usr/lib/python2.6/site-packages/kafka_python-1.3.4-py2.6.egg/kafka/client_async.py", line 841, in check_version

    raise Errors.NoBrokersAvailable()

kafka.errors.NoBrokersAvailable: NoBrokersAvailable

Passing bootstrap_servers explicitly avoids the error:

>>> producer = KafkaProducer(bootstrap_servers='192.168.120.11:9092',value_serializer=lambda v: json.dumps(v).encode('utf-8'))

>>> producer.send('world', {'key1': 'value1'})

<kafka.producer.future.FutureRecordMetadata object at 0x2a9ebd0>

>>>
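The serializer passed above is an ordinary function from a Python object to bytes, so it can be tried without a broker. The sketch below uses hypothetical helper names (to_json_bytes, from_json_bytes, make_json_producer); value_serializer is the KafkaProducer parameter already used above, and the matching KafkaConsumer parameter is value_deserializer.

```python
import json


def to_json_bytes(value):
    """Serialize a Python object to UTF-8 encoded JSON bytes."""
    return json.dumps(value).encode("utf-8")


def from_json_bytes(raw):
    """Decode UTF-8 encoded JSON bytes back into a Python object."""
    return json.loads(raw.decode("utf-8"))


def make_json_producer(bootstrap_servers):
    """Build a producer that JSON-encodes every value (needs a reachable broker)."""
    # Imported lazily so the two helpers above work without kafka-python.
    from kafka import KafkaProducer
    return KafkaProducer(bootstrap_servers=bootstrap_servers,
                         value_serializer=to_json_bytes)


if __name__ == "__main__":
    payload = {"key1": "value1"}
    wire = to_json_bytes(payload)
    print(wire)
    print(from_json_bytes(wire) == payload)
```

On the receiving side, passing value_deserializer=from_json_bytes to KafkaConsumer would turn each message value back into a dictionary.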

3) Interactive session: sending a plain string

>>> producer.send('world', key=b'foo', value=b'bar')

<kafka.producer.future.FutureRecordMetadata object at 0x29dcd90>
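When a key is supplied, as with key=b'foo' above, the partition is chosen by hashing the key, so messages with the same key end up in the same Partition as long as the number of Partitions does not change. The sketch below only illustrates that idea: toy_partition_for is a hypothetical function, and zlib.crc32 is a stand-in for the hash that kafka-python actually uses, so real partition numbers will differ.

```python
import zlib


def toy_partition_for(key, num_partitions):
    """Map a bytes key to a partition index with a stable hash.

    zlib.crc32 is a stand-in chosen for illustration only; it is not
    the hash used by kafka-python's own partitioner.
    """
    return (zlib.crc32(key) & 0xffffffff) % num_partitions


if __name__ == "__main__":
    # The two b"foo" keys always map to the same partition index.
    for key in (b"foo", b"foo", b"bar"):
        print(key, toy_partition_for(key, 5))
```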

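4) Interactive session: sending compressed messages

>>> producer = KafkaProducer(bootstrap_servers='192.168.120.11:9092',compression_type='gzip')

>>> producer.send('world', b'msg 1')

In testing, the content received on the consumer side is still a plain string. This is expected: compression applies to the messages as they are sent to and stored by the broker, and the consumer decompresses them transparently. It is not caused by a missing python-lz4 package, since gzip needs no extra package, as the documentation notes: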

kafka-python supports gzip compression/decompression natively. To produce or consume lz4 compressed messages, you should install python-lz4 (pip install lz4). To enable snappy, install python-snappy (also requires snappy library). See Installation for more information.
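Why the consumer still sees the original bytes can be illustrated with the standard library alone. This is a sketch of the principle, assuming Python 3 (gzip.compress is not available on Python 2); it does not reproduce kafka-python's internal batch format, and the function names are hypothetical.

```python
import gzip


def compress_batch(payload):
    """Compress bytes the way a producer might before sending."""
    return gzip.compress(payload)


def decompress_batch(blob):
    """Restore the original bytes, as a consumer does on receipt."""
    return gzip.decompress(blob)


if __name__ == "__main__":
    original = b"msg 1" * 200
    on_the_wire = compress_batch(original)
    # The compressed form is smaller, but the round trip is lossless.
    print(len(original), len(on_the_wire))
    print(decompress_batch(on_the_wire) == original)
```

The benefit of compression_type='gzip' therefore shows up in network and disk usage, not in what the consumer prints.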

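The commands above were each tested on their own. Next comes a complete script that sends the names of the files in a given directory to the world topic.

The file_monitor.py script:

# -*- coding: utf-8 -*-
# Sends the name of every entry in a directory to the Kafka topic 'world'.
from kafka import KafkaProducer
import os
import time
from sys import argv

producer = KafkaProducer(bootstrap_servers='192.168.120.11:9092')

def log(message):
    # Print the message prefixed with a local timestamp.
    t = time.strftime(r"%Y-%m-%d_%H-%M-%S", time.localtime())
    print("[%s]%s" % (t, message))

def list_file(path):
    # Send each file name as one message and flush after every send.
    dir_list = os.listdir(path)
    for f in dir_list:
        # On Python 2 a str is already bytes; on Python 3 send f.encode('utf-8').
        producer.send('world', f)
        producer.flush()
        log('send: %s' % (f))

list_file(argv[1])
producer.close()
log('done')

To scan the files under /opt/jdk1.8.0_91/lib/missioncontrol/features, for example, run it like this: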

python file_monitor.py /opt/jdk1.8.0_91/lib/missioncontrol/features

The output is:

[python_app]# python file_monitor.py /opt/jdk1.8.0_91/lib/missioncontrol/features

[2017-11-07_17-41-04]send: org.eclipse.ecf.filetransfer.ssl.feature_1.0.0.v20140827-1444

[2017-11-07_17-41-04]send: org.eclipse.emf.common_2.10.1.v20140901-1043

[2017-11-07_17-41-04]send: com.jrockit.mc.feature.rcp.ja_5.5.0.165303

[2017-11-07_17-41-04]send: com.jrockit.mc.feature.console_5.5.0.165303

[2017-11-07_17-41-04]send: org.eclipse.ecf.core.feature_1.1.0.v20140827-1444

[2017-11-07_17-41-04]send: org.eclipse.equinox.p2.core.feature_1.3.0.v20140523-0116

[2017-11-07_17-41-04]send: org.eclipse.ecf.filetransfer.httpclient4.ssl.feature_1.0.0.v20140827-1444

[2017-11-07_17-41-04]send: com.jrockit.mc.feature.rcp_5.5.0.165303

[2017-11-07_17-41-04]send: org.eclipse.babel.nls_eclipse_zh_4.4.0.v20140623020002

[2017-11-07_17-41-04]send: com.jrockit.mc.rcp.product_5.5.0.165303

[2017-11-07_17-41-04]send: org.eclipse.help_2.0.102.v20141007-2301

[2017-11-07_17-41-04]send: org.eclipse.ecf.core.ssl.feature_1.0.0.v20140827-1444

[2017-11-07_17-41-04]send: org.eclipse.ecf.filetransfer.httpclient4.feature_3.9.1.v20140827-1444

[2017-11-07_17-41-04]send: org.eclipse.e4.rcp_1.3.100.v20141007-2033

[2017-11-07_17-41-04]send: org.eclipse.babel.nls_eclipse_ja_4.4.0.v20140623020002

[2017-11-07_17-41-04]send: com.jrockit.mc.feature.flightrecorder_5.5.0.165303

[2017-11-07_17-41-04]send: org.eclipse.emf.ecore_2.10.1.v20140901-1043

[2017-11-07_17-41-04]send: org.eclipse.equinox.p2.rcp.feature_1.2.0.v20140523-0116

[2017-11-07_17-41-04]send: org.eclipse.ecf.filetransfer.feature_3.9.0.v20140827-1444

[2017-11-07_17-41-04]send: com.jrockit.mc.feature.core_5.5.0.165303

[2017-11-07_17-41-04]send: org.eclipse.rcp_4.4.0.v20141007-2301

[2017-11-07_17-41-04]send: com.jrockit.mc.feature.rcp.zh_CN_5.5.0.165303

[2017-11-07_17-41-04]done
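The script above was written for Python 2, where file names are already byte strings. A possible Python 3 variant of its core loop is sketched below; send_file_names is a hypothetical name, and sorting the names and flushing once after the loop are choices made for this sketch, not something the original script does.

```python
import os


def send_file_names(producer, topic, path):
    """Send the name of every entry in `path` to `topic`.

    `producer` is expected to offer send(topic, value) and flush(),
    as kafka.KafkaProducer does. Returns the list of names sent.
    """
    names = sorted(os.listdir(path))
    for name in names:
        # Without a value_serializer, message values must be bytes on Python 3.
        producer.send(topic, name.encode("utf-8"))
    producer.flush()  # one flush after the loop instead of one per message
    return names


# Usage against a real broker (address taken from the script above):
#   from kafka import KafkaProducer
#   producer = KafkaProducer(bootstrap_servers='192.168.120.11:9092')
#   send_file_names(producer, 'world', '/opt/jdk1.8.0_91/lib/missioncontrol/features')
#   producer.close()
```

Taking the producer as a parameter also makes the function easy to exercise with a stand-in object when no broker is available.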

The consumer sees the following:

world:4:93: key=None value=org.eclipse.ecf.filetransfer.ssl.feature_1.0.0.v20140827-1444

world:1:112: key=None value=org.eclipse.emf.common_2.10.1.v20140901-1043

world:3:119: key=None value=com.jrockit.mc.feature.console_5.5.0.165303

world:1:113: key=None value=com.jrockit.mc.feature.rcp.ja_5.5.0.165303

world:0:86: key=None value=org.eclipse.ecf.core.feature_1.1.0.v20140827-1444

world:1:114: key=None value=org.eclipse.equinox.p2.core.feature_1.3.0.v20140523-0116

world:4:94: key=None value=org.eclipse.ecf.filetransfer.httpclient4.ssl.feature_1.0.0.v20140827-1444

world:0:87: key=None value=com.jrockit.mc.feature.rcp_5.5.0.165303

world:4:95: key=None value=org.eclipse.babel.nls_eclipse_zh_4.4.0.v20140623020002

world:2:66: key=None value=com.jrockit.mc.rcp.product_5.5.0.165303

world:4:96: key=None value=org.eclipse.ecf.core.ssl.feature_1.0.0.v20140827-1444

world:2:67: key=None value=org.eclipse.help_2.0.102.v20141007-2301

world:1:115: key=None value=org.eclipse.ecf.filetransfer.httpclient4.feature_3.9.1.v20140827-1444

world:4:97: key=None value=org.eclipse.e4.rcp_1.3.100.v20141007-2033

world:0:88: key=None value=org.eclipse.babel.nls_eclipse_ja_4.4.0.v20140623020002

world:4:98: key=None value=com.jrockit.mc.feature.flightrecorder_5.5.0.165303

world:3:120: key=None value=org.eclipse.emf.ecore_2.10.1.v20140901-1043

world:1:116: key=None value=org.eclipse.equinox.p2.rcp.feature_1.2.0.v20140523-0116

world:4:99: key=None value=org.eclipse.ecf.filetransfer.feature_3.9.0.v20140827-1444

world:2:68: key=None value=com.jrockit.mc.feature.core_5.5.0.165303

world:4:100: key=None value=com.jrockit.mc.feature.rcp.zh_CN_5.5.0.165303

world:2:69: key=None value=org.eclipse.rcp_4.4.0.v20141007-2301

2. Creating the Consumer

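A Kafka deployment usually has several Topics, each split into multiple Partitions. A Consumer therefore typically connects to a specific Broker and subscribes to a specific Topic to consume its messages.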
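As a rough illustration of "a specific Broker, a specific Topic", the sketch below also shows how a consumer could be pinned to a single Partition. It is a minimal sketch, not part of the original script: the helper names `consumer_kwargs` and `consume_partition` are hypothetical, and the `auto_offset_reset` and `consumer_timeout_ms` values are assumptions chosen for the example. `KafkaConsumer`, `TopicPartition` and `assign()` are part of kafka-python.

```python
def consumer_kwargs(brokers, group_id):
    """Build keyword arguments for kafka-python's KafkaConsumer.

    The option values below are illustrative assumptions, not settings
    taken from the original article.
    """
    return {
        "bootstrap_servers": list(brokers),  # brokers used for the initial connection
        "group_id": group_id,                # consumer group used for offset commits
        "auto_offset_reset": "earliest",     # where to start when no offset is committed
        "consumer_timeout_ms": 10000,        # stop iterating after 10 s without messages
    }


def consume_partition(topic, partition, brokers, group_id):
    """Read one partition of one topic and print each record."""
    # Imported here so consumer_kwargs() can be used without kafka-python installed.
    from kafka import KafkaConsumer, TopicPartition

    consumer = KafkaConsumer(**consumer_kwargs(brokers, group_id))
    # assign() pins the consumer to explicit partitions instead of
    # subscribing to the whole topic.
    consumer.assign([TopicPartition(topic, partition)])
    try:
        for msg in consumer:
            print("%s:%d:%d: key=%s value=%s"
                  % (msg.topic, msg.partition, msg.offset, msg.key, msg.value))
    finally:
        consumer.close()
```

On a host that can reach the broker, `consume_partition('world', 0, ['192.168.120.11:9092'], 'consumer-20171017')` would read only partition 0 of the world Topic.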

The complete Python script, consumer.py:

# -*- coding: utf-8 -*-
from kafka import KafkaConsumer
import time


def log(text):
    # Prefix every message with a local timestamp.
    t = time.strftime(r"%Y-%m-%d_%H-%M-%S", time.localtime())
    print("[%s]%s" % (t, text))


log('start consumer')

# Consume the Topic "world" on 192.168.120.11:9092,
# using the consumer group "consumer-20171017".
consumer = KafkaConsumer('world',
                         group_id='consumer-20171017',
                         bootstrap_servers=['192.168.120.11:9092'])

# Iterating over the consumer blocks and yields records as they arrive.
for msg in consumer:
    recv = "%s:%d:%d: key=%s value=%s" % (msg.topic, msg.partition, msg.offset, msg.key, msg.value)
    log(recv)

Restart the file_monitor.py script:

[[email protected] python_app]# python file_monitor.py /opt/jdk1.8.0_91/lib/missioncontrol/features

[2017-11-07_18-00-31]send: org.eclipse.ecf.filetransfer.ssl.feature_1.0.0.v20140827-1444

[2017-11-07_18-00-31]send: org.eclipse.emf.common_2.10.1.v20140901-1043

[2017-11-07_18-00-31]send: com.jrockit.mc.feature.rcp.ja_5.5.0.165303

[2017-11-07_18-00-31]send: com.jrockit.mc.feature.console_5.5.0.165303

[2017-11-07_18-00-31]send: org.eclipse.ecf.core.feature_1.1.0.v20140827-1444

[2017-11-07_18-00-31]send: org.eclipse.equinox.p2.core.feature_1.3.0.v20140523-0116

[2017-11-07_18-00-31]send: org.eclipse.ecf.filetransfer.httpclient4.ssl.feature_1.0.0.v20140827-1444

[2017-11-07_18-00-31]send: com.jrockit.mc.feature.rcp_5.5.0.165303

[2017-11-07_18-00-31]send: org.eclipse.babel.nls_eclipse_zh_4.4.0.v20140623020002

[2017-11-07_18-00-31]send: com.jrockit.mc.rcp.product_5.5.0.165303

[2017-11-07_18-00-31]send: org.eclipse.help_2.0.102.v20141007-2301

[2017-11-07_18-00-31]send: org.eclipse.ecf.core.ssl.feature_1.0.0.v20140827-1444

[2017-11-07_18-00-31]send: org.eclipse.ecf.filetransfer.httpclient4.feature_3.9.1.v20140827-1444

[2017-11-07_18-00-31]send: org.eclipse.e4.rcp_1.3.100.v20141007-2033

[2017-11-07_18-00-31]send: org.eclipse.babel.nls_eclipse_ja_4.4.0.v20140623020002

[2017-11-07_18-00-31]send: com.jrockit.mc.feature.flightrecorder_5.5.0.165303

[2017-11-07_18-00-31]send: org.eclipse.emf.ecore_2.10.1.v20140901-1043

[2017-11-07_18-00-31]send: org.eclipse.equinox.p2.rcp.feature_1.2.0.v20140523-0116

[2017-11-07_18-00-31]send: org.eclipse.ecf.filetransfer.feature_3.9.0.v20140827-1444

[2017-11-07_18-00-31]send: com.jrockit.mc.feature.core_5.5.0.165303

[2017-11-07_18-00-31]send: org.eclipse.rcp_4.4.0.v20141007-2301

[2017-11-07_18-00-31]send: com.jrockit.mc.feature.rcp.zh_CN_5.5.0.165303

[2017-11-07_18-00-31]done

Then start the consumer.py script:

[[email protected] python_app]# python consumer.py

[2017-09-23_11-34-00]start consumer

[2017-09-23_11-34-10]world:3:121: key=None value=org.eclipse.ecf.filetransfer.ssl.feature_1.0.0.v20140827-1444

[2017-09-23_11-34-10]world:2:70: key=None value=org.eclipse.emf.common_2.10.1.v20140901-1043

[2017-09-23_11-34-10]world:3:122: key=None value=com.jrockit.mc.feature.rcp.ja_5.5.0.165303

[2017-09-23_11-34-10]world:2:71: key=None value=com.jrockit.mc.feature.console_5.5.0.165303

[2017-09-23_11-34-10]world:0:89: key=None value=org.eclipse.ecf.core.feature_1.1.0.v20140827-1444

[2017-09-23_11-34-10]world:4:101: key=None value=org.eclipse.equinox.p2.core.feature_1.3.0.v20140523-0116

[2017-09-23_11-34-10]world:1:117: key=None value=org.eclipse.ecf.filetransfer.httpclient4.ssl.feature_1.0.0.v20140827-1444

[2017-09-23_11-34-10]world:2:72: key=None value=com.jrockit.mc.feature.rcp_5.5.0.165303

[2017-09-23_11-34-10]world:4:102: key=None value=org.eclipse.babel.nls_eclipse_zh_4.4.0.v20140623020002

[2017-09-23_11-34-10]world:2:73: key=None value=com.jrockit.mc.rcp.product_5.5.0.165303

[2017-09-23_11-34-10]world:3:123: key=None value=org.eclipse.help_2.0.102.v20141007-2301

[2017-09-23_11-34-10]world:3:124: key=None value=org.eclipse.ecf.core.ssl.feature_1.0.0.v20140827-1444

[2017-09-23_11-34-10]world:0:90: key=None value=com.jrockit.mc.feature.flightrecorder_5.5.0.165303

[2017-09-23_11-34-10]world:3:125: key=None value=org.eclipse.ecf.filetransfer.httpclient4.feature_3.9.1.v20140827-1444

[2017-09-23_11-34-10]world:3:126: key=None value=org.eclipse.e4.rcp_1.3.100.v20141007-2033

[2017-09-23_11-34-10]world:3:127: key=None value=org.eclipse.babel.nls_eclipse_ja_4.4.0.v20140623020002

[2017-09-23_11-34-10]world:2:74: key=None value=org.eclipse.emf.ecore_2.10.1.v20140901-1043

[2017-09-23_11-34-10]world:3:128: key=None value=org.eclipse.equinox.p2.rcp.feature_1.2.0.v20140523-0116

[2017-09-23_11-34-10]world:0:91: key=None value=com.jrockit.mc.feature.core_5.5.0.165303

[2017-09-23_11-34-10]world:3:129: key=None value=org.eclipse.ecf.filetransfer.feature_3.9.0.v20140827-1444

[2017-09-23_11-34-11]world:3:130: key=None value=org.eclipse.rcp_4.4.0.v20141007-2301

[2017-09-23_11-34-11]world:3:131: key=None value=com.jrockit.mc.feature.rcp.zh_CN_5.5.0.165303
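In the output above, each line has the form topic:partition:offset, and the records are spread over partitions 0 to 4. Offsets are counted per partition, and Kafka only guarantees ordering within a single partition, which is why the consumer does not see the values in exactly the order they were sent. The following sketch tallies records per partition from such log lines. The names `parse_line` and `count_by_partition` are hypothetical helpers, and the regular expression assumes the exact line format printed by consumer.py.

```python
import re

# Matches lines such as
# "[2017-09-23_11-34-10]world:3:121: key=None value=..."
# The leading "[timestamp]" is optional.
LINE_RE = re.compile(
    r"^(?:\[[^\]]*\])?"
    r"(?P<topic>[^:\s]+):(?P<partition>\d+):(?P<offset>\d+): "
    r"key=(?P<key>\S*) value=(?P<value>.*)$"
)


def parse_line(line):
    """Return (topic, partition, offset, value), or None for other lines."""
    m = LINE_RE.match(line.strip())
    if m is None:
        return None
    return (m.group("topic"), int(m.group("partition")),
            int(m.group("offset")), m.group("value"))


def count_by_partition(lines):
    """Count how many records were received from each partition."""
    counts = {}
    for line in lines:
        rec = parse_line(line)
        if rec is not None:
            partition = rec[1]
            counts[partition] = counts.get(partition, 0) + 1
    return counts


sample = [
    "[2017-09-23_11-34-00]start consumer",
    "[2017-09-23_11-34-10]world:3:121: key=None value=org.eclipse.ecf.filetransfer.ssl.feature_1.0.0.v20140827-1444",
    "[2017-09-23_11-34-10]world:2:70: key=None value=org.eclipse.emf.common_2.10.1.v20140901-1043",
    "[2017-09-23_11-34-10]world:3:122: key=None value=com.jrockit.mc.feature.rcp.ja_5.5.0.165303",
]
print(sorted(count_by_partition(sample).items()))  # prints [(2, 1), (3, 2)]
```

Lines that are not records, such as "start consumer", are skipped rather than counted.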
