Crawler Learning 14: Multiprocess Scraping of Jianshu (簡書) Social Hot Topics into MongoDB

阿新 • Published: 2019-02-11

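This code scrapes 10,000 pages of data from Jianshu's social hot topics column, using multiple processes. Looking at the Jianshu page, you can see that it loads its content asynchronously, so the page number is not visible in the address bar and can only be inferred from the responses; once you know it, you can construct the URLs yourself. Here is the code: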

    import time
    from multiprocessing import Pool

    import pymongo
    import requests
    from lxml import etree

    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 '
                      '(KHTML, like Gecko) Chrome/49.0.2623.112 Safari/537.36'
    }

    # Connect to the local MongoDB instance and select the target collection.
    client = pymongo.MongoClient('localhost', 27017)
    mydb = client['mydb']
    jianshu = mydb['jianshu_2']

    # Counter of stored records. Each worker process keeps its own copy,
    # so the printed numbers are per-process, not a global total.
    num = 0


    def get_jianshu_info(url):
        """Fetch one listing page, parse each article entry, and store it in MongoDB."""
        global num
        html = requests.get(url, headers=headers)
        selector = etree.HTML(html.text)
        infos = selector.xpath('//ul[@class="note-list"]/li')
        for info in infos:
            try:
                author = info.xpath('div/div/a[1]/text()')[0]
                title = info.xpath('div/a/text()')[0]
                abstract = info.xpath('div/p/text()')[0]
                comment = info.xpath('div/div/a[2]/text()')[1].strip()
                like = info.xpath('div/div/span/text()')[0].strip()
                data = {
                    'author': author,
                    'title': title,
                    'abstract': abstract,
                    'comment': comment,
                    'like': like
                }
                jianshu.insert_one(data)
                num = num + 1
                print("Scraped item {}".format(num))
            except IndexError:
                # Skip entries that are missing any of the expected fields.
                pass


    if __name__ == '__main__':
        # One URL per page; range() excludes its stop value, so 10001 covers pages 1 to 10000.
        urls = ['https://www.jianshu.com/c/20f7f4031550?utm_medium=index-collections&utm_source=desktop&page={}'.format(i) for i in range(1, 10001)]
        pool = Pool(processes=8)
        start_time = time.time()
        pool.map(get_jianshu_info, urls)
        end_time = time.time()
        print("Time taken by the 8-process crawler:", end_time - start_time)
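
The URL construction described above can be pulled out into a small helper, which makes the page range easier to get right. The following is only a sketch: it assumes the `page` query parameter behaves as it does in the script, and `build_page_urls` is a hypothetical name, not part of the original code. Python's `range` excludes its upper bound, so covering pages 1 to N needs `range(1, N + 1)`.

```python
# Listing URL taken from the script; `page` selects which page to load.
BASE_URL = (
    "https://www.jianshu.com/c/20f7f4031550"
    "?utm_medium=index-collections&utm_source=desktop&page={}"
)


def build_page_urls(first_page, last_page):
    """Return listing URLs for first_page..last_page, both ends included.

    build_page_urls is a hypothetical helper, not part of the original script.
    """
    if first_page < 1 or last_page < first_page:
        raise ValueError("expected 1 <= first_page <= last_page")
    # range() excludes its stop value, hence the + 1.
    return [BASE_URL.format(page) for page in range(first_page, last_page + 1)]


if __name__ == "__main__":
    for url in build_page_urls(1, 3):
        print(url)
```

The resulting list can be passed to `pool.map` in place of an inline list comprehension.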

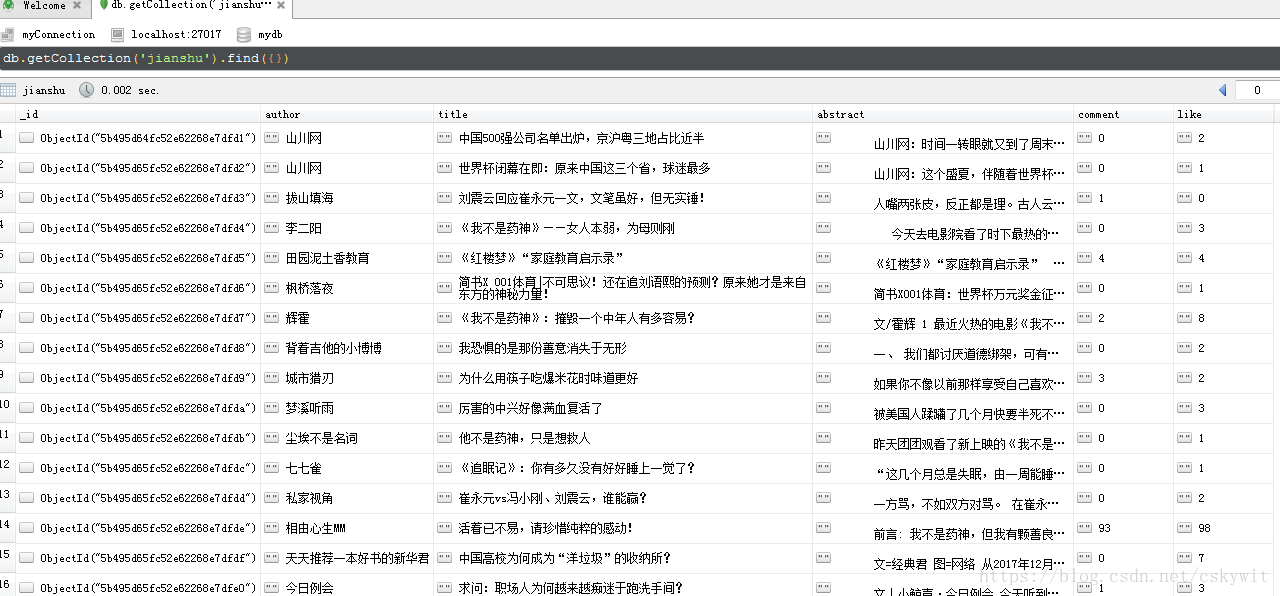
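
One thing to keep in mind when reading the counter output: each worker process in a `Pool` has its own copy of module-level variables, so the `num` counter in the script counts per process rather than across the whole crawl. One possible way to get a total, sketched here under assumptions, is to have each worker return how many records it stored and sum the results in the parent. `save_page` and `crawl_total` are hypothetical stand-ins for illustration; they do no network or database work.

```python
from multiprocessing import Pool


def save_page(items):
    """Hypothetical stand-in for get_jianshu_info: 'store' one page, return the count."""
    saved = 0
    for _item in items:
        # The real worker would call jianshu.insert_one(...) here.
        saved += 1
    return saved


def crawl_total(pages, processes=2):
    """Run save_page over all pages in a pool and sum the per-page counts in the parent."""
    with Pool(processes=processes) as pool:
        return sum(pool.map(save_page, pages))


if __name__ == "__main__":
    # Fake pages standing in for parsed listing pages.
    fake_pages = [[{"title": "a"}, {"title": "b"}], [{"title": "c"}], []]
    print("total saved:", crawl_total(fake_pages))
```

Because `pool.map` returns the workers' return values in order, the parent process can total them without any shared state.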

As you can see, the scraped data has been saved to MongoDB: