Viewing the JVM Configuration and Memory Usage of a Spark Process

阿新 • Published: 2019-02-11

Checking the JVM configuration and the per-generation memory usage of a running Spark process is a common way to monitor jobs in production:

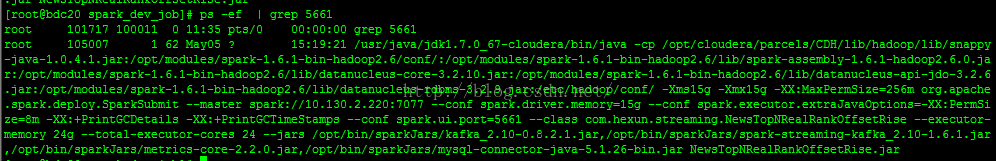

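1. Find the PID with the ps command

`ps -ef | grep 5661` locates the PID by matching a distinctive string in the launch command. Here 5661 is the value of spark.ui.port, which appears on the job's command line.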
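A bare number such as 5661 can also match unrelated lines (another process's PID, or the grep process itself). The following is a minimal sketch of a stricter filter, assuming the standard `ps -ef` column layout (PID in field 2, command starting at field 8). The helper name `pid_from_ps` is hypothetical, not a standard tool.

```shell
# Print the PID column (field 2 of `ps -ef` output) for every line on stdin
# that contains the given fixed string. Lines whose command is grep or awk
# are skipped, because their own command line also contains the pattern.
# pid_from_ps is a hypothetical helper name used only for this sketch.
pid_from_ps() {
  awk -v pat="$1" 'index($0, pat) && $8 != "grep" && $8 != "awk" { print $2 }'
}

# Usage (not run here): ps -ef | pid_from_ps 'spark.ui.port=5661'
```

Matching on the full `spark.ui.port=5661` string rather than the port number alone keeps the result specific to the job that was started with that setting.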

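2. Use the jinfo command to query the process's JVM parameter settings

`jinfo 105007` (105007 being the PID found in step 1) prints the detailed JVM configuration: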

    Attaching to process ID 105007, please wait...
    Debugger attached successfully.
    Server compiler detected.
    JVM version is 24.65-b04
    Java System Properties:

    spark.local.dir = /diskb/sparktmp,/diskc/sparktmp,/diskd/sparktmp,/diske/sparktmp,/diskf/sparktmp,/diskg/sparktmp
    java.runtime.name = Java(TM) SE Runtime Environment
    java.vm.version = 24.65-b04
    sun.boot.library.path = /usr/java/jdk1.7.0_67-cloudera/jre/lib/amd64
    java.vendor.url = http://java.oracle.com/
    java.vm.vendor = Oracle Corporation
    path.separator = :
    file.encoding.pkg = sun.io
    java.vm.name = Java HotSpot(TM) 64-Bit Server VM
    sun.os.patch.level = unknown
    sun.java.launcher = SUN_STANDARD
    user.country = CN
    user.dir = /opt/bin/spark_dev_job
    java.vm.specification.name = Java Virtual Machine Specification
    java.runtime.version = 1.7.0_67-b01
    java.awt.graphicsenv = sun.awt.X11GraphicsEnvironment
    SPARK_SUBMIT = true
    os.arch = amd64
    java.endorsed.dirs = /usr/java/jdk1.7.0_67-cloudera/jre/lib/endorsed
    spark.executor.memory = 24g
    line.separator =
    java.io.tmpdir = /tmp
    java.vm.specification.vendor = Oracle Corporation
    os.name = Linux
    spark.driver.memory = 15g
    spark.master = spark://10.130.2.220:7077
    sun.jnu.encoding = UTF-8
    java.library.path = :/opt/cloudera/parcels/CDH/lib/hadoop/lib/native:/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib
    sun.nio.ch.bugLevel =
    java.class.version = 51.0
    java.specification.name = Java Platform API Specification
    sun.management.compiler = HotSpot 64-Bit Tiered Compilers
    spark.submit.deployMode = client
    spark.executor.extraJavaOptions = -XX:PermSize=8m -XX:+PrintGCDetails -XX:+PrintGCTimeStamps
    os.version = 2.6.32-573.8.1.el6.x86_64
    user.home = /root
    user.timezone = PRC
    java.awt.printerjob = sun.print.PSPrinterJob
    file.encoding = UTF-8
    java.specification.version = 1.7
    spark.app.name = com.hexun.streaming.NewsTopNRealRankOffsetRise
    spark.eventLog.enabled = true
    user.name = root
    java.class.path = /opt/cloudera/parcels/CDH/lib/hadoop/lib/snappy-java-1.0.4.1.jar:/opt/modules/spark-1.6.1-bin-hadoop2.6/conf/:/opt/modules/spark-1.6.1-bin-hadoop2.6/lib/spark-assembly-1.6.1-hadoop2.6.0.jar:/opt/modules/spark-1.6.1-bin-hadoop2.6/lib/datanucleus-core-3.2.10.jar:/opt/modules/spark-1.6.1-bin-hadoop2.6/lib/datanucleus-api-jdo-3.2.6.jar:/opt/modules/spark-1.6.1-bin-hadoop2.6/lib/datanucleus-rdbms-3.2.9.jar:/etc/hadoop/conf/
    java.vm.specification.version = 1.7
    sun.arch.data.model = 64
    sun.java.command = org.apache.spark.deploy.SparkSubmit --master spark://10.130.2.220:7077 --conf spark.driver.memory=15g --conf spark.executor.extraJavaOptions=-XX:PermSize=8m -XX:+PrintGCDetails -XX:+PrintGCTimeStamps --conf spark.ui.port=5661 --class com.hexun.streaming.NewsTopNRealRankOffsetRise --executor-memory 24g --total-executor-cores 24 --jars /opt/bin/sparkJars/kafka_2.10-0.8.2.1.jar,/opt/bin/sparkJars/spark-streaming-kafka_2.10-1.6.1.jar,/opt/bin/sparkJars/metrics-core-2.2.0.jar,/opt/bin/sparkJars/mysql-connector-java-5.1.26-bin.jar NewsTopNRealRankOffsetRise.jar
    java.home = /usr/java/jdk1.7.0_67-cloudera/jre
    user.language = zh
    java.specification.vendor = Oracle Corporation
    awt.toolkit = sun.awt.X11.XToolkit
    spark.ui.port = 5661
    java.vm.info = mixed mode
    java.version = 1.7.0_67
    java.ext.dirs = /usr/java/jdk1.7.0_67-cloudera/jre/lib/ext:/usr/java/packages/lib/ext
    sun.boot.class.path = /usr/java/jdk1.7.0_67-cloudera/jre/lib/resources.jar:/usr/java/jdk1.7.0_67-cloudera/jre/lib/rt.jar:/usr/java/jdk1.7.0_67-cloudera/jre/lib/sunrsasign.jar:/usr/java/jdk1.7.0_67-cloudera/jre/lib/jsse.jar:/usr/java/jdk1.7.0_67-cloudera/jre/lib/jce.jar:/usr/java/jdk1.7.0_67-cloudera/jre/lib/charsets.jar:/usr/java/jdk1.7.0_67-cloudera/jre/lib/jfr.jar:/usr/java/jdk1.7.0_67-cloudera/jre/classes
    java.vendor = Oracle Corporation
    file.separator = /
    spark.cores.max = 24
    spark.eventLog.dir = hdfs://nameservice1/spark-log
    java.vendor.url.bug = http://bugreport.sun.com/bugreport/
    sun.io.unicode.encoding = UnicodeLittle
    sun.cpu.endian = little
    spark.jars = file:/opt/bin/sparkJars/kafka_2.10-0.8.2.1.jar,file:/opt/bin/sparkJars/spark-streaming-kafka_2.10-1.6.1.jar,file:/opt/bin/sparkJars/metrics-core-2.2.0.jar,file:/opt/bin/sparkJars/mysql-connector-java-5.1.26-bin.jar,file:/opt/bin/spark_dev_job/NewsTopNRealRankOffsetRise.jar
    sun.cpu.isalist =

    VM Flags:

    -Xms15g -Xmx15g -XX:MaxPermSize=256m
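Most of this output is generic JVM detail. To pull out only the Spark settings, one option is a small filter like the sketch below. It assumes the `key = value` layout shown above, and the helper name `spark_props` is hypothetical.

```shell
# Keep only the Spark-related system properties from `jinfo <pid>` output
# read on stdin: keys starting with "spark." plus the SPARK_SUBMIT marker.
# spark_props is a hypothetical helper name used only for this sketch.
spark_props() {
  grep -E '^(spark\.[A-Za-z0-9_.]+|SPARK_SUBMIT) = '
}

# Usage (not run here): jinfo 105007 | spark_props
```

Read together with the VM Flags line, the filtered properties explain what this JVM is. spark.submit.deployMode is client and the main class is org.apache.spark.deploy.SparkSubmit, so process 105007 is the driver, and its -Xms15g -Xmx15g flags match spark.driver.memory = 15g. The spark.executor.memory = 24g setting applies to the executor JVMs, not to this process.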

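3. Use jmap to view per-generation memory usage in the process

`jmap -heap 105007` prints the detailed memory usage of the Java process, including how much of the young and old generations is in use: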

    Attaching to process ID 105007, please wait...
    Debugger attached successfully.
    Server compiler detected.
    JVM version is 24.65-b04

    using thread-local object allocation.
    Parallel GC with 18 thread(s)

    Heap Configuration:
       MinHeapFreeRatio = 0
       MaxHeapFreeRatio = 100
       MaxHeapSize      = 16106127360 (15360.0MB)
       NewSize          = 1310720 (1.25MB)
       MaxNewSize       = 17592186044415 MB
       OldSize          = 5439488 (5.1875MB)
       NewRatio         = 2
       SurvivorRatio    = 8
       PermSize         = 21757952 (20.75MB)
       MaxPermSize      = 268435456 (256.0MB)
       G1HeapRegionSize = 0 (0.0MB)

    Heap Usage:
    PS Young Generation
    Eden Space:
       capacity = 4945084416 (4716.0MB)
       used     = 2674205152 (2550.320770263672MB)
       free     = 2270879264 (2165.679229736328MB)
       54.07804856369109% used
    From Space:
       capacity = 217579520 (207.5MB)
       used     = 37486624 (35.750030517578125MB)
       free     = 180092896 (171.74996948242188MB)
       17.22893036991717% used
    To Space:
       capacity = 206045184 (196.5MB)
       used     = 0 (0.0MB)
       free     = 206045184 (196.5MB)
       0.0% used
    PS Old Generation
       capacity = 10737418240 (10240.0MB)
       used     = 7431666880 (7087.389831542969MB)
       free     = 3305751360 (3152.6101684570312MB)
       69.2127913236618% used
    PS Perm Generation
       capacity = 268435456 (256.0MB)
       used     = 128212824 (122.27327728271484MB)
       free     = 140222632 (133.72672271728516MB)
       47.762998938560486% used
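For routine monitoring, the per-region percentages are usually the part worth watching. The sketch below condenses the Heap Usage section to one line per region. It assumes the layout `jmap -heap` prints on this JDK 7 build, with region names starting in the first column and the figures indented beneath them. The helper name `heap_usage` is hypothetical.

```shell
# Summarize the "Heap Usage" section of `jmap -heap <pid>` output read on
# stdin as "<region>: <percent used>", one line per region.
# heap_usage is a hypothetical helper name used only for this sketch.
heap_usage() {
  awk '
    /^Heap Usage:/          { in_usage = 1; next }
    # A non-indented line names the region that the following figures describe.
    in_usage && /^[A-Za-z]/ { label = $0; sub(/:$/, "", label); next }
    # An indented "<number>% used" line closes the figures for that region.
    in_usage && /% used/    { printf "%s: %.1f%%\n", label, $1 + 0 }
  '
}

# Usage (not run here): jmap -heap 105007 | heap_usage
```

The capacities in the output above are also consistent with the configuration. With NewRatio = 2 the old generation is twice the size of the young generation: the old generation is 10240.0MB, the young generation is 4716.0 + 207.5 + 196.5 = 5120.0MB, and together they make up the 15360.0MB MaxHeapSize set by -Xmx15g.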