LibSVM Study (Part 1): A Complete Chinese-English Parallel User Manual and Understanding the README File

This post covers:

(1) A brief introduction to LibSVM

(2) A close reading and translation of the LibSVM README file

1. What is LibSVM?

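LIBSVM is a simple, easy-to-use, fast and effective software package for SVM pattern recognition and regression, developed by Professor Lin Chih-Jen (林智仁) and colleagues at National Taiwan University. It provides compiled executables for Windows and also the source code, which makes it easy to improve, modify, and use on other operating systems. It requires relatively few SVM parameters to be tuned and provides many default parameters that are enough to solve many problems. It also offers cross-validation. It can solve C-SVM, ν-SVM, ε-SVR and ν-SVR problems, including multi-class pattern recognition based on the one-against-one approach.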

(Source: Baidu Baike)

2. Translating and understanding the LibSVM README

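The authors surely put what they most want readers to see into the README. So if you want to understand a project in depth, the first step is to understand its README thoroughly.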

Libsvm is a simple, easy-to-use, and efficient software for SVM classification and regression. It solves C-SVM classification, nu-SVM classification, one-class-SVM, epsilon-SVM regression, and nu-SVM regression. It also provides an automatic model selection tool for C-SVM classification. This document explains the use of libsvm.

Libsvm is available at

http://www.csie.ntu.edu.tw/~cjlin/libsvm

Please read the COPYRIGHT file before using libsvm.

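Libsvm is a simple, easy-to-use and efficient piece of software for SVM classification and regression. It solves C-SVM classification, nu-SVM classification, one-class-SVM, epsilon-SVM regression and nu-SVM regression. It also provides an automatic model selection tool for C-SVM. This document explains how to use libsvm.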

Libsvm can be obtained from http://www.csie.ntu.edu.tw/~cjlin/libsvm

Read the COPYRIGHT file before using libsvm.

Table of Contents

- Quick Start

- Installation and Data Format

- `svm-train’ Usage

- `svm-predict’ Usage

- `svm-scale’ Usage

- Tips on Practical Use

- Examples

- Precomputed Kernels

- Library Usage

- Java Version

- Building Windows Binaries

- Additional Tools: Sub-sampling, Parameter Selection, Format checking, etc.

- MATLAB/OCTAVE Interface

- Python Interface

- Additional Information

Contents

- Quick Start

- Installation and Data Format

- “svm-train” Usage

- “svm-predict” Usage

- “svm-scale” Usage

- Tips on Practical Use

- Examples

Quick Start

If you are new to SVM and if the data is not large, please go to the `tools` directory and use easy.py after installation. It does everything automatically, from data scaling to parameter selection.

Usage: easy.py training_file [testing_file]

More information about parameter selection can be found in `tools/README`.

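Quick Start

If you are new to SVM and your data is not large, open the tools directory after installation and use easy.py. It automates everything, from data scaling to parameter selection.

Usage: easy.py training_file [testing_file]

You can find more information about parameter selection in “tools/README”.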

Installation and Data Format

On Unix systems, type `make` to build the `svm-train` and `svm-predict` programs. Run them without arguments to show the usages of them.

On other systems, consult `Makefile` to build them (e.g., see 'Building Windows binaries' in this file) or use the pre-built binaries (Windows binaries are in the directory `windows`).

The format of training and testing data file is:

label index1:value1 index2:value2 …

.

.

.

Each line contains an instance and is ended by a '\n' character. For classification, label is an integer indicating the class label (multi-class is supported). For regression, label is the target value, which can be any real number. For one-class SVM, it's not used, so it can be any number. The pair index:value gives a feature (attribute) value: index is an integer starting from 1 and value is a real number. The only exception is the precomputed kernel, where index starts from 0; see the section on precomputed kernels. Indices must be in ASCENDING order. Labels in the testing file are only used to calculate accuracy or errors. If they are unknown, just fill the first column with any numbers.
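To make the format concrete, here is a minimal Python sketch that reads and writes one line of this sparse format. It is my own illustration, not part of LIBSVM, and the function names `parse_libsvm_line` and `format_libsvm_line` are hypothetical. It only covers ordinary data, not the precomputed-kernel variant where index 0 holds an ID. It assumes "ascending" means strictly ascending, so a repeated index is also rejected.

```python
def parse_libsvm_line(line):
    """Parse 'label index1:value1 index2:value2 ...' into (label, {index: value}).

    Assumes ordinary (non-precomputed) data: indices start at 1 and must be
    strictly ascending; indices that are omitted are implicitly zero.
    """
    tokens = line.split()  # also strips the trailing '\n'
    label = float(tokens[0])
    features = {}
    prev_index = 0
    for token in tokens[1:]:
        index_str, value_str = token.split(":", 1)
        index = int(index_str)
        if index < 1:
            raise ValueError(f"index {index} must start from 1")
        if index <= prev_index:
            raise ValueError(f"indices must be ascending, got {index} after {prev_index}")
        features[index] = float(value_str)
        prev_index = index
    return label, features


def format_libsvm_line(label, features):
    """Inverse of parse_libsvm_line; sorts indices so the output is valid.

    Note: ':g' keeps at most six significant digits.
    """
    pairs = " ".join(f"{i}:{features[i]:g}" for i in sorted(features))
    return f"{label:g} {pairs}".rstrip()


print(parse_libsvm_line("+1 1:0.5 3:-1\n"))  # (1.0, {1: 0.5, 3: -1.0})
print(format_libsvm_line(1.0, {3: -1.0, 1: 0.5}))  # 1 1:0.5 3:-1
```

For checking real files, the README's own recommendation, `tools/checkdata.py`, is the tool to use.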

A sample classification data included in this package is `heart_scale`. To check if your data is in a correct form, use `tools/checkdata.py` (details in `tools/README`).

Type `svm-train heart_scale`, and the program will read the training data and output the model file `heart_scale.model`. If you have a test set called heart_scale.t, then type `svm-predict heart_scale.t heart_scale.model output` to see the prediction accuracy. The `output` file contains the predicted class labels.

For classification, if training data are in only one class (i.e., all labels are the same), then `svm-train` issues a warning message: `Warning: training data in only one class. See README for details,` which means the training data is very unbalanced. The label in the training data is directly returned when testing.

There are some other useful programs in this package.

svm-scale:

This is a tool for scaling input data file.

svm-toy:

This is a simple graphical interface which shows how SVM separates data in a plane. You can click in the window to draw data points. Use the "change" button to choose class 1, 2 or 3 (i.e., up to three classes are supported), the "load" button to load data from a file, the "save" button to save data to a file, the "run" button to obtain an SVM model, and the "clear" button to clear the window.

You can enter options at the bottom of the window; the syntax of options is the same as `svm-train`.

Note that "load" and "save" consider dense data format both in the classification and the regression cases. For classification, each data point has one label (the color) that must be 1, 2, or 3 and two attributes (x-axis and y-axis values) in [0,1). For regression, each data point has one target value (y-axis) and one attribute (x-axis value) in [0,1).

Type `make' in respective directories to build them.

You need Qt library to build the Qt version.

(available from http://www.trolltech.com)

You need GTK+ library to build the GTK version.

(available from http://www.gtk.org)

The pre-built Windows binaries are in the `windows` directory. We use Visual C++ on a 32-bit machine, so the maximal cache size is 2GB.

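Installation and Data Format

On Unix systems, type make to build the “svm-train” and “svm-predict” programs. Run them without arguments to display their usage.

On other systems, consult “Makefile” to build them (for example, see “Building Windows binaries” in this document), or use the pre-built binaries (the Windows binaries are in the “windows” directory).

The format of the training and testing data files is: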

label index1:value1 index2:value2 …

.

.

.

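Each line contains one instance and ends with a '\n' (newline) character. For classification, label is an integer indicating the class label (multi-class is supported). For regression, label is the target value, which can be any real number. For one-class SVM it is not used, so it can be any number. Except when a precomputed kernel is used (introduced in a later section), each index:value pair gives a feature (attribute) value: index is an integer starting from 1 and value is a real number. Indices must be in ascending order. Labels in the testing file are only used to compute accuracy or errors. If they are unknown, just fill the first column with any values.

A sample classification data set included in this package is “heart_scale”. Use “tools/checkdata.py” to check whether your data is in the correct format (see “tools/README” for details).

Type “svm-train heart_scale”, and the program will read the training data and output the model file “heart_scale.model”. If you have a test set called “heart_scale.t”, type “svm-predict heart_scale.t heart_scale.model output” to see the prediction accuracy. The “output” file contains the predicted class labels.

There are some other useful programs in this package: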

svm-scale:

Scales your input data file.

svm-toy:

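This is a simple graphical interface that shows how an SVM separates data in a plane. You can click in the window to draw data points. Use the “change” button to choose class 1, 2 or 3 (i.e., up to three classes are supported), the “load” button to load data from a file, the “save” button to save data to a file, the “run” button to obtain an SVM model, and the “clear” button to clear the window.

You can enter options at the bottom of the window; the option syntax is the same as for “svm-train”.

Note that “load” and “save” use the dense data format in both the classification and the regression cases. For classification, each data point has one label (the color), which must be 1, 2 or 3, and two attributes (x-axis and y-axis values) in [0,1). For regression, each data point has one target value (y-axis) and one attribute (x-axis value) in [0,1).

Type make in the respective directories to build them.

You need the Qt library to build the Qt version (available from http://www.trolltech.com).

You need the GTK+ library to build the GTK version (available from http://www.gtk.org).

The pre-built Windows binaries are in the “windows” directory. We use Visual C++ on a 32-bit machine, so the maximal cache size is 2GB.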

`svm-train’ Usage

Usage: svm-train [options] training_set_file [model_file]

options:

-s svm_type : set type of SVM (default 0)

0 – C-SVC (multi-class classification)

1 – nu-SVC (multi-class classification)

2 – one-class SVM

3 – epsilon-SVR (regression)

4 – nu-SVR (regression)

-t kernel_type : set type of kernel function (default 2)

0 – linear: u’*v

1 – polynomial: (gamma*u’*v + coef0)^degree

2 – radial basis function: exp(-gamma*|u-v|^2)

3 – sigmoid: tanh(gamma*u’*v + coef0)

4 – precomputed kernel (kernel values in training_set_file)

-d degree : set degree in kernel function (default 3)

-g gamma : set gamma in kernel function (default 1/num_features)

-r coef0 : set coef0 in kernel function (default 0)

-c cost : set the parameter C of C-SVC, epsilon-SVR, and nu-SVR (default 1)

-n nu : set the parameter nu of nu-SVC, one-class SVM, and nu-SVR (default 0.5)

-p epsilon : set the epsilon in loss function of epsilon-SVR (default 0.1)

-m cachesize : set cache memory size in MB (default 100)

-e epsilon : set tolerance of termination criterion (default 0.001)

-h shrinking : whether to use the shrinking heuristics, 0 or 1 (default 1)

-b probability_estimates : whether to train an SVC or SVR model for probability estimates, 0 or 1 (default 0)

-wi weight : set the parameter C of class i to weight*C, for C-SVC (default 1)

-v n: n-fold cross validation mode

-q : quiet mode (no outputs)

The num_features in the -g option means the number of attributes in the input data.

Option -v randomly splits the data into n parts and calculates cross validation accuracy/mean squared error on them.

See libsvm FAQ for the meaning of outputs.
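The four built-in kernel formulas listed under `-t` can be written out directly. The following Python sketch is my own, not LIBSVM's internal code, and the function name `kernel` is hypothetical. It evaluates each formula on dense Python lists. Its defaults mirror the option list: gamma = 1/num_features (taken here as the vector length), coef0 = 0 and degree = 3.

```python
import math


def dot(u, v):
    """Inner product u'*v of two equal-length dense vectors."""
    return sum(a * b for a, b in zip(u, v))


def kernel(u, v, kernel_type=2, gamma=None, coef0=0.0, degree=3):
    """Evaluate the -t kernel_type formulas 0-3; defaults mirror -g, -r and -d."""
    if gamma is None:
        gamma = 1.0 / len(u)  # -g default: 1/num_features (dense vectors assumed)
    if kernel_type == 0:  # linear: u'*v
        return dot(u, v)
    if kernel_type == 1:  # polynomial: (gamma*u'*v + coef0)^degree
        return (gamma * dot(u, v) + coef0) ** degree
    if kernel_type == 2:  # radial basis function: exp(-gamma*|u-v|^2)
        return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(u, v)))
    if kernel_type == 3:  # sigmoid: tanh(gamma*u'*v + coef0)
        return math.tanh(gamma * dot(u, v) + coef0)
    raise ValueError("type 4 (precomputed) has no formula; values come from the file")


u, v = [1.0, 1.0, 1.0, 1.0], [0.0, 3.0, 0.0, 3.0]
print(kernel(u, v, kernel_type=0))  # 6.0
print(kernel(u, v, kernel_type=1))  # 3.375, i.e. (0.25*6 + 0)^3 with gamma = 1/4
```

With four features the default gamma is 0.25. This is why the `-g` default changes whenever the number of attributes in your data changes.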

“svm-train” Usage

svm-train trains on the training data set and produces an SVM model.

Usage: svm-train [options] training_set_file [model_file]

options:

-s svm_type : set type of SVM (default 0)

0 — C-SVC

1 — nu-SVC

2 — one-class SVM

3 — epsilon-SVR

4 — nu-SVR

-t kernel_type : set type of kernel function (default 2)

0 — linear: u’*v

1 — polynomial: (gamma*u’*v + coef0)^degree

2 — radial basis function: exp(-gamma*|u-v|^2)

3 — sigmoid: tanh(gamma*u’*v + coef0)

4 — precomputed kernel (kernel values in training_set_file)

-d degree : set degree in kernel function (default 3)

-g gamma : set gamma in kernel function (default 1/k)

-r coef0 : set coef0 in kernel function (default 0)

-c cost : set the parameter C of C-SVC, epsilon-SVR, and nu-SVR (default 1)

-n nu : set the parameter nu of nu-SVC, one-class SVM, and nu-SVR (default 0.5)

-p epsilon : set the epsilon in loss function of epsilon-SVR (default 0.1)

-m cachesize : set cache memory size in MB (default 100)

-e epsilon : set tolerance of termination criterion (default 0.001)

-h shrinking: whether to use the shrinking heuristics, 0 or 1 (default 1)

-b probability_estimates: whether to train an SVC or SVR model for probability estimates, 0 or 1 (default 0)

-wi weight: set the parameter C of class i to weight*C in C-SVC (default 1)

-v n: n-fold cross validation mode

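The k in -g is the number of attributes in the input data.

The -v option randomly splits the data into n parts and computes the cross-validation accuracy/mean squared error on them.

See the libsvm FAQ for the meaning of the outputs.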

`svm-predict’ Usage

Usage: svm-predict [options] test_file model_file output_file

options:

-b probability_estimates: whether to predict probability estimates, 0 or 1 (default 0); for one-class SVM only 0 is supported

model_file is the model file generated by svm-train.

test_file is the test data you want to predict.

svm-predict will produce output in the output_file.

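svm-predict uses the model obtained from training to make predictions on a data set.

Usage: svm-predict [options] test_file model_file output_file

options:

-b probability_estimates: whether to predict probability estimates, 0 or 1 (default 0); for one-class SVM only 0 is supported

model_file is the model file generated by svm-train.

test_file is the data you want to predict.

svm-predict writes the results to output_file.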
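Since the labels in the test file are only used for scoring, you can reproduce the basic comparison yourself by reading the first column of both files. This minimal Python sketch uses hypothetical helper names. It assumes output_file was produced without `-b 1`, so each line holds just one predicted label; with `-b 1` the file begins with a header line of labels and each line also carries probabilities. It does not claim to reproduce every statistic svm-predict prints.

```python
def first_column(lines):
    """Return the first whitespace-separated field of each non-empty line as a float."""
    return [float(line.split()[0]) for line in lines if line.strip()]


def accuracy(true_labels, predicted):
    """Classification: percentage of predictions equal to the true label."""
    if len(true_labels) != len(predicted):
        raise ValueError("test file and output file have different lengths")
    correct = sum(1 for t, p in zip(true_labels, predicted) if t == p)
    return 100.0 * correct / len(true_labels)


def mean_squared_error(true_values, predicted):
    """Regression: average squared difference between targets and predictions."""
    if len(true_values) != len(predicted):
        raise ValueError("test file and output file have different lengths")
    return sum((t - p) ** 2 for t, p in zip(true_values, predicted)) / len(true_values)


test_lines = ["1 1:0.5", "-1 2:1", "1 3:0.2", "-1 1:1"]  # made-up test file
output_lines = ["1", "-1", "-1", "-1"]  # made-up output_file
print(accuracy(first_column(test_lines), first_column(output_lines)))  # 75.0
```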

`svm-scale’ Usage

Usage: svm-scale [options] data_filename

options:

-l lower : x scaling lower limit (default -1)

-u upper : x scaling upper limit (default +1)

-y y_lower y_upper : y scaling limits (default: no y scaling)

-s save_filename : save scaling parameters to save_filename

-r restore_filename : restore scaling parameters from restore_filename

See ‘Examples’ in this file for examples.

“svm-scale” Usage

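svm-scale scales the raw samples. You can choose the range yourself; it is usually [0,1] or [-1,1]. Scaling serves two main purposes:

1) It keeps any single feature from being so large or so small that its influence during training becomes unbalanced.

2) It helps computation: kernel evaluation involves inner products or exp, and unbalanced data can make these computations numerically difficult.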
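Here is a tiny numeric illustration of both points. It uses made-up two-feature data, with feature 1 roughly in [0,1000] and feature 2 in [0,1], and the RBF kernel exp(-gamma*|u-v|^2) with gamma = 0.5. This is my own Python example, not LIBSVM code. The scaling step is plain min-max scaling to [0,1], with the feature ranges simply assumed to be known.

```python
import math


def squared_distance(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))


def rbf(u, v, gamma):
    return math.exp(-gamma * squared_distance(u, v))


def min_max(x, ranges):
    """Map each feature to [0,1] given its (min, max) range."""
    return [(xi - lo) / (hi - lo) for xi, (lo, hi) in zip(x, ranges)]


a, b = [200.0, 0.0], [300.0, 1.0]  # hypothetical raw instances
ranges = [(0.0, 1000.0), (0.0, 1.0)]  # assumed feature ranges

# Unscaled: feature 1 contributes 10000 of the 10001 squared distance,
# and the kernel value underflows to 0.0 in double precision.
print(squared_distance(a, b), rbf(a, b, gamma=0.5))

# Scaled to [0,1]: both features now contribute on comparable scales,
# giving a squared distance of about 1.01 and a kernel value of about exp(-0.505).
a_s, b_s = min_max(a, ranges), min_max(b, ranges)
print(squared_distance(a_s, b_s), rbf(a_s, b_s, gamma=0.5))
```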

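Usage: svm-scale [options] data_filename

options:

-l lower : lower limit of the scaled x values (default -1)

-u upper : upper limit of the scaled x values (default +1)

-y y_lower y_upper : y scaling limits (default: no y scaling)

-s save_filename : save scaling parameters to save_filename

-r restore_filename : restore scaling parameters from restore_filename

See ‘Examples’ in this document for examples.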

Tips on Practical Use

- Scale your data. For example, scale each attribute to [0,1] or [-1,+1].

- For C-SVC, consider using the model selection tool in the tools directory.

- nu in nu-SVC/one-class-SVM/nu-SVR approximates the fraction of training errors and support vectors.

- If data for classification are unbalanced (e.g. many positive and few negative), try different penalty parameters C by -wi (see examples below).

- Specify larger cache size (i.e., larger -m) for huge problems.

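Tips on Practical Use

- Scale your data. For example, scale each attribute to [0,1] or [-1,+1].

- For C-SVC, consider using the model selection tool in the tools directory.

- nu in nu-SVC/one-class-SVM/nu-SVR approximates the fraction of training errors and support vectors. (I haven't figured out yet what this means.)

- If the classification data are unbalanced (e.g., many positive and very few negative examples), try different penalty parameters C with -wi.

- Specify a larger cache size (i.e., a larger -m) for huge problems.

Examples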

svm-scale -l -1 -u 1 -s range train > train.scale

svm-scale -r range test > test.scale

Scale each feature of the training data to be in [-1,1]. Scaling factors are stored in the file range and then used for scaling the test data.

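In the first command:

-l -1 -u 1 means scale the training data to the interval [-1,1];

-s range means save these scaling rules to the file range;

train > train.scale means scale the training data in train and save the scaled data to train.scale, without modifying the data in train.

In the second command:

the scaling rules already saved in range are reused to scale test, and the new data is saved to the file test.scale.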
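The point of `-s range` and `-r range` is that the test data must be scaled with the min/max values found on the training data, not with its own. Below is a minimal Python sketch of that workflow on dense rows. The helper names are hypothetical. The text layout of the range "file" is only my assumption of what `svm-scale -s` writes: an `x` line, then a `lower upper` line, then one `index min max` line per feature. Check it against a real range file before relying on it. Unlike svm-scale, this sketch ignores the sparse format.

```python
def fit_ranges(rows):
    """Per-feature (min, max), computed on the TRAINING rows only."""
    return [(min(col), max(col)) for col in zip(*rows)]


def save_range(ranges, lower=-1.0, upper=1.0):
    """Serialize scaling parameters (assumed svm-scale-like layout)."""
    lines = ["x", f"{lower:.17g} {upper:.17g}"]
    lines += [f"{i} {lo:.17g} {hi:.17g}" for i, (lo, hi) in enumerate(ranges, start=1)]
    return "\n".join(lines) + "\n"


def load_range(text):
    """Parse the text written by save_range back into (lower, upper, ranges)."""
    lines = text.strip().splitlines()
    if lines[0] != "x":
        raise ValueError("expected an 'x' header line")
    lower, upper = (float(t) for t in lines[1].split())
    ranges = []
    for line in lines[2:]:
        _, lo, hi = line.split()
        ranges.append((float(lo), float(hi)))
    return lower, upper, ranges


def apply_range(rows, lower, upper, ranges):
    """Min-max scale each feature into [lower, upper] using the saved ranges."""
    scaled = []
    for row in rows:
        new_row = []
        for v, (lo, hi) in zip(row, ranges):
            if hi == lo:
                new_row.append(v)  # constant feature: left unchanged in this sketch
            else:
                new_row.append(lower + (upper - lower) * (v - lo) / (hi - lo))
        scaled.append(new_row)
    return scaled


train = [[0.0, 10.0], [10.0, 30.0]]  # made-up training rows
test = [[5.0, 40.0]]  # made-up test row

range_text = save_range(fit_ranges(train), -1.0, 1.0)  # like: svm-scale -l -1 -u 1 -s range train
lower, upper, ranges = load_range(range_text)  # like: svm-scale -r range test
print(apply_range(test, lower, upper, ranges))  # [[0.0, 2.0]]
```

The test value 40 lies outside the training range [10,30], so its scaled value 2.0 falls outside [-1,1]. That is expected when the test data is scaled with the training parameters.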

Typing svm-scale in cmd prints the usage of all options:

G:\windows>svm-scale

Usage: svm-scale [options] data_filename

options:

-l lower : x scaling lower limit (default -1)

-u upper : x scaling upper limit (default +1)

-y y_lower y_upper : y scaling limits (default: no y scaling)

-s save_filename : save scaling parameters to save_filename

-r restore_filename : restore scaling parameters from restore_filename

The remaining commands can be read the same way: type the program name in cmd to see what each option means, so I won't translate them further.

svm-train -s 0 -c 5 -t 2 -g 0.5 -e 0.1 data_file

Train a classifier with RBF kernel exp(-0.5|u-v|^2), C=5, and stopping tolerance 0.1.

svm-train -s 3 -p 0.1 -t 0 data_file

Solve SVM regression with linear kernel u'v and epsilon=0.1 in the loss function.

svm-train -c 10 -w1 1 -w-2 5 -w4 2 data_file

Train a classifier with penalty 10 = 1 * 10 for class 1, penalty 50 = 5 * 10 for class -2, and penalty 20 = 2 * 10 for class 4.
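The arithmetic above (each class's penalty is weight × C, and classes without `-wi` keep weight 1) can be checked with a small Python sketch. The parser here is a hypothetical toy that only picks out `-c` and `-wi`; it is not LIBSVM's real option parser. Class 3 is a made-up label with no `-wi`, included to show the default weight.

```python
def parse_c_and_weights(args):
    """Pick out -c and -wi from an svm-train-style argument list (toy parser)."""
    c, weights = 1.0, {}  # -c default is 1
    i = 0
    while i < len(args):
        flag = args[i]
        if flag == "-c":
            c = float(args[i + 1])
            i += 2
        elif flag.startswith("-w"):
            weights[int(flag[2:])] = float(args[i + 1])  # "-w-2" -> class -2
            i += 2
        else:
            i += 1  # ignore everything else (e.g. the data file name)
    return c, weights


def effective_penalty(c, weights, label):
    """Penalty for a class: weight * C, with weight 1 if no -wi was given."""
    return weights.get(label, 1.0) * c


c, weights = parse_c_and_weights("-c 10 -w1 1 -w-2 5 -w4 2 data_file".split())
for label in (1, -2, 4, 3):
    print(label, effective_penalty(c, weights, label))
```

This is the mechanism behind the tip about unbalanced data: giving the rare class a larger weight makes its misclassifications cost more.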

svm-train -s 0 -c 100 -g 0.1 -v 5 data_file

Do five-fold cross validation for the classifier using the parameters C = 100 and gamma = 0.1.
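With `-v 5`, the data is split into five parts. Each part is held out once while a model is trained on the other four, and the cross-validation accuracy is computed on the held-out parts. The splitting step can be sketched in Python as below. This uses plain random splitting, and the function names are hypothetical. LIBSVM's own implementation may differ in details, for example in how it keeps class proportions balanced across folds.

```python
import random


def n_fold_indices(num_instances, n, seed=0):
    """Randomly assign instance indices 0..num_instances-1 to n nearly equal folds."""
    indices = list(range(num_instances))
    random.Random(seed).shuffle(indices)
    return [indices[k::n] for k in range(n)]


def cross_validation_rounds(num_instances, n, seed=0):
    """Yield (train_indices, held_out_indices) for each of the n rounds."""
    folds = n_fold_indices(num_instances, n, seed)
    for k in range(n):
        train = [i for j, fold in enumerate(folds) if j != k for i in fold]
        yield train, folds[k]


for train, held_out in cross_validation_rounds(10, 5):
    print(len(train), sorted(held_out))
```

Because every instance is held out exactly once, each one receives exactly one out-of-sample prediction. Those predictions are what a single cross-validation accuracy or mean squared error would be computed from.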

svm-train -s 0 -b 1 data_file

svm-predict -b 1 test_file data_file.model output_file

Obtain a model with probability information and predict test data with probability estimates

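Precomputed Kernels

(I didn't fully understand this part...)

Users may precompute kernel values and input them as training and testing files. Then libsvm does not need the original training/testing sets.

Users can compute the kernel values in advance and feed them to the program; libsvm then no longer needs the original training/testing sets.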

Assume there are L training instances x1, …, xL. Let K(x, y) be the kernel value of two instances x and y. The input formats are:

New training instance for xi:

label 0:i 1:K(xi,x1) … L:K(xi,xL)

New testing instance for any x:

label 0:? 1:K(x,x1) … L:K(x,xL)

That is, in the training file the first column must be the “ID” of xi. In testing, ? can be any value.

All kernel values including ZEROs must be explicitly provided. Any permutation or random subsets of the training/testing files are also valid (see examples below).

Note: the format is slightly different from the precomputed kernel package released in libsvmtools earlier.

Examples:

Assume the original training data has three four-feature instances and testing data has one instance:

15 1:1 2:1 3:1 4:1

45 2:3 4:3

25 3:1

15 1:1 3:1

If the linear kernel is used, we have the following new training/testing sets:

15 0:1 1:4 2:6 3:1

45 0:2 1:6 2:18 3:0

25 0:3 1:1 2:0 3:1

15 0:? 1:2 2:0 3:1

? can be any value.
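The new training/testing lines above can be generated mechanically. This Python sketch is my own, and its helper names are hypothetical. It takes the original sparse instances as {index: value} dicts and uses the linear kernel. It writes the instance ID as index 0 and writes every kernel value explicitly, including zeros. It reproduces the four lines of the example.

```python
def dot_sparse(u, v):
    """Linear kernel u'*v for sparse {index: value} instances."""
    return sum(value * v[index] for index, value in u.items() if index in v)


def precomputed_lines(labels, instances, kernel=dot_sparse):
    """Training lines 'label 0:ID 1:K(xi,x1) ... L:K(xi,xL)', with IDs starting at 1."""
    lines = []
    for row_id, (label, xi) in enumerate(zip(labels, instances), start=1):
        values = " ".join(f"{j}:{kernel(xi, xj):g}" for j, xj in enumerate(instances, start=1))
        lines.append(f"{label:g} 0:{row_id} {values}")
    return lines


def precomputed_test_line(label, x, train_instances, kernel=dot_sparse):
    """Testing line; the ID column is written as '?' because its value is not used."""
    values = " ".join(f"{j}:{kernel(x, xj):g}" for j, xj in enumerate(train_instances, start=1))
    return f"{label:g} 0:? {values}"


# The example above: three training instances and one test instance.
train_labels = [15, 45, 25]
train = [{1: 1, 2: 1, 3: 1, 4: 1}, {2: 3, 4: 3}, {3: 1}]
test_x = {1: 1, 3: 1}

for line in precomputed_lines(train_labels, train):
    print(line)
print(precomputed_test_line(15, test_x, train))
```

For real-valued kernels such as RBF, you would want more digits than `:g` gives (it keeps six significant digits).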

Any subset of the above training file is also valid. For example,

25 0:3 1:1 2:0 3:1

45 0:2 1:6 2:18 3:0

implies that the kernel matrix is

$$\begin{bmatrix} K(2,2) & K(2,3) \\ K(3,2) & K(3,3) \end{bmatrix} = \begin{bmatrix} 18 & 0 \\ 0 & 1 \end{bmatrix}$$

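Library Usage

This part mainly covers the functions in the LibSVM library, including variable definitions, struct layouts and so on. There is a lot of material, so I may write a separate post to describe it in detail.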

These functions and structures are declared in the header file `svm.h`. You need to #include "svm.h" in your C/C++ source files and link your program with `svm.cpp`. You can see `svm-train.c` and `svm-predict.c` for examples showing how to use them.

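All of the following functions and structures are declared in the header file “svm.h”. You need to include “svm.h” in your C/C++ source files and link your program with “svm.cpp”.

You can study svm-train.c and svm-predict.c as examples of how to use them.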

We define LIBSVM_VERSION and declare `extern int libsvm_version;` in svm.h, so you can check the version number.

We define the LIBSVM version, so you can check the version number.

Before you classify test data, you need to construct an SVM model (`svm_model`) using training data. A model can also be saved in a file for later use. Once an SVM model is available, you can use it to classify new data.

Before you classify data, you need to construct an SVM model.

I don't like writing overly long posts, so this will be continued in the next one.

---------------- End of post ----------------

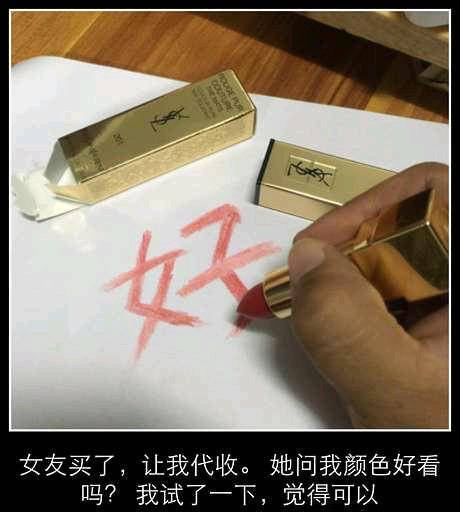

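Behave, or the person in the picture gets beaten:

I've recently been reading 《追影子的人》 (literally "The One Who Chases Shadows"), and one exchange really struck a chord with me, so I'll share it:

“It's all over. My one true love has fallen for someone else.”

“Seeing her with 馬格, does it hurt?”

“What do you think?”

“Maybe it should be put this way: your ‘one true love’ is the person who will make you happy, right?”

“·····”

“So maybe your ‘one true love’ isn't her.”

---------------- This really is the end of the post ----------------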