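ELK Log Analysis Platform: Logstash

Logstash

Logstash is a tool that receives, processes, and forwards logs. It supports system logs, web server logs, error logs, and application logs; in short, any kind of log that can be emitted. In a typical ELK setup, Elasticsearch is the back-end data store and Kibana provides the front-end reports. Logstash acts as the carrier between them: it builds a pipeline that connects data storage, report queries, and log parsing. Logstash ships with a wide range of input, filter, codec, and output plugins, so users can assemble fairly powerful pipelines with little effort.

(All hosts in this article are on the 172.25.17 network, and hostnames match the IP addresses. For example, 172.25.17.3 is server3.)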

1. Installing and testing the service

Install Logstash on server4:

[root@server4 ~]# ls

elasticsearch-2.3.3.rpm jdk-8u121-linux-x64.rpm

elasticsearch-head-master.zip logstash-2.3.3-1.noarch.rpm

[root@server4 ~]# rpm -ivh logstash-2.3.3-1.noarch.rpm

Preparing... ########################################### [100%]

Test the service, printing events in the default stdout format:

[root@server4 opt]# /opt/logstash/bin/logstash -e 'input { stdin {} } output { stdout {} }'

Settings: Default pipeline workers: 1

Pipeline main started

hello

2018-08-25T02:38:13.829Z server4 hello

world

2018-08-25

A second, more detailed output format:

[root@server4 opt]# /opt/logstash/bin/logstash -e 'input { stdin {} } output { stdout { codec => rubydebug} }'

Settings: Default pipeline workers: 1

Pipeline main started

westos

{

"message" => "westos",

"@version" => "1",

"@timestamp" => "2018-08-25T02:39:30.353Z",

"host" => "server4"

}

linux

{

"message" => "linux",

"@version" => "1",

"@timestamp" => "2018-08-25T02:39:33.807Z",

"host" => "server4"

}
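The rubydebug output above shows the fields Logstash attaches to every line read from stdin. As a rough illustration only (this is not Logstash's own code, and `make_event` is a hypothetical helper name), the event structure can be sketched in Python like this:

```python
from datetime import datetime, timezone
import socket


def make_event(line, host=""):
    """Wrap one input line in the fields shown by the rubydebug codec."""
    now = datetime.now(timezone.utc)
    # Millisecond-precision UTC timestamp, e.g. 2018-08-25T02:39:30.353Z
    stamp = now.strftime("%Y-%m-%dT%H:%M:%S.") + f"{now.microsecond // 1000:03d}Z"
    return {
        "message": line.rstrip("\n"),
        "@version": "1",
        "@timestamp": stamp,
        # Assumption: fall back to the local hostname when none is given.
        "host": host or socket.gethostname(),
    }


event = make_event("westos\n", host="server4")
print(event["message"], event["host"])
```

Note that the timestamp is in UTC, which is why the values in the output differ from the local time on server4.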

Send data typed in the terminal to Elasticsearch:

[root@server4 opt]# /opt/logstash/bin/logstash -e 'input { stdin {} } output { elasticsearch {hosts => ["172.25.17.4"] index => "logstash-%{+YYYY.MM.dd}"} stdout { codec => rubydebug } }'

Settings: Default pipeline workers: 1

Pipeline main started

test

{

"message" => "test",

"@version" => "1",

"@timestamp" => "2018-08-25T02:44:41.249Z",

"host" => "server4"

}

hello

{

"message" => "hello",

"@version" => "1",

"@timestamp" => "2018-08-25T02:44:48.346Z",

"host" => "server4"

}

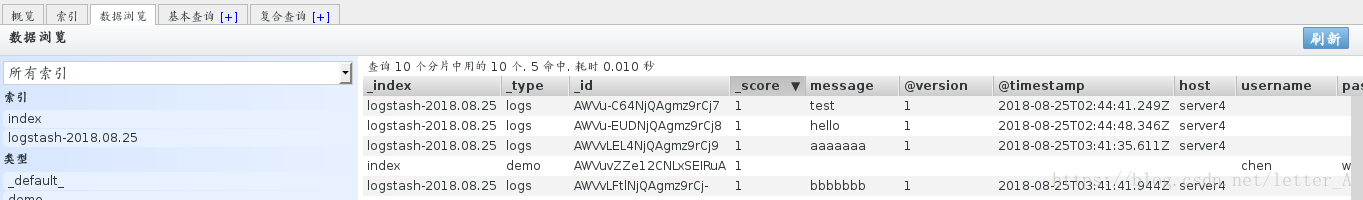

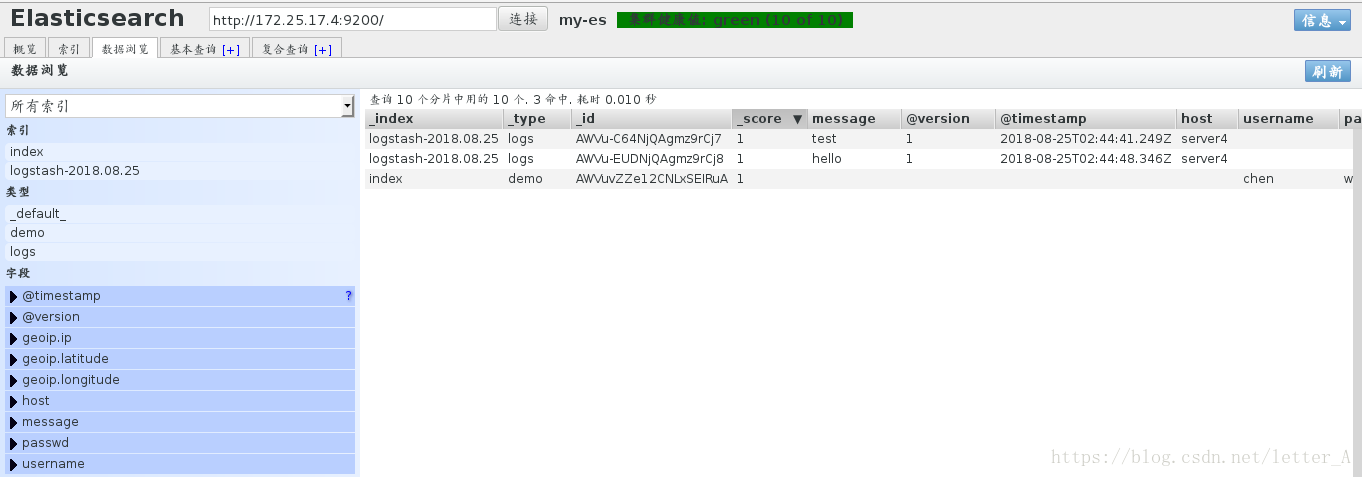

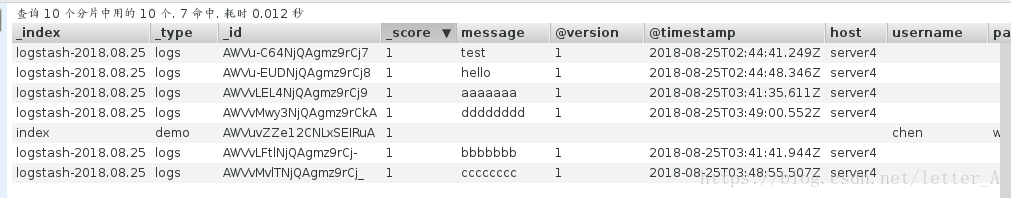

The received data can then be viewed in the browser.
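The `index => "logstash-%{+YYYY.MM.dd}"` setting used in the command above is expanded from each event's `@timestamp` (in UTC), so events end up in one index per day. A minimal Python sketch of that naming rule, with `index_name` as a hypothetical helper and not part of Logstash:

```python
from datetime import datetime, timezone


def index_name(prefix, timestamp):
    """Build a daily index name from an ISO-8601 UTC @timestamp string."""
    # Parse the form printed by rubydebug, e.g. 2018-08-25T02:44:41.249Z
    ts = datetime.strptime(timestamp, "%Y-%m-%dT%H:%M:%S.%fZ")
    ts = ts.replace(tzinfo=timezone.utc)
    # YYYY.MM.dd in the Logstash pattern corresponds to %Y.%m.%d here.
    return f"{prefix}-{ts:%Y.%m.%d}"


print(index_name("logstash", "2018-08-25T02:44:41.249Z"))  # logstash-2018.08.25
```

With the sample events in this article, the index to look for in the browser would therefore be logstash-2018.08.25.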

2. Creating a config file and using output plugins

Create the file on server4:

[root@server4 opt]# cd /etc/logstash/conf.d/

[root@server4 conf.d]# vim es.conf

File contents:

input {

stdin {}

}

output {

elasticsearch {

hosts => ["172.25.17.4"]

index => "logstash-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

}

Run Logstash with the config file path and type some input:

[root@server4 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/es.conf

Settings: Default pipeline workers: 1

Pipeline main started

aaaaaaa

{

"message" => "aaaaaaa",

"@version" => "1",

"@timestamp" => "2018-08-25T03:41:35.611Z",

"host" => "server4"

}

bbbbbbb

{

"message" => "bbbbbbb",

"@version" => "1",

"@timestamp" => "2018-08-25T03:41:41.944Z",

"host" => "server4"

}

The input can likewise be seen in the browser.

3. Saving the input data to a specified file

[root@server4 conf.d]# vim es.conf

Add the file output plugin so that the input is also saved to /tmp/testfile:

input {

stdin {}

}

output {

elasticsearch {

hosts => ["172.25.17.4"]

index => "logstash-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

file {

path => "/tmp/testfile"

codec => line { format => "custom format: %{message}" }

}

}

Run it and type some input:

[root@server4 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/es.conf

Settings: Default pipeline workers: 1

Pipeline main started

cccccccc

{

"message" => "cccccccc",

"@version" => "1",

"@timestamp" => "2018-08-25T03:48:55.507Z",

"host" => "server4"

}

dddddddd

{

"message" => "dddddddd",

"@version" => "1",

"@timestamp" => "2018-08-25T03:49:00.552Z",

"host" => "server4"

}

The data is visible in the browser.

The input also appears in the specified file:

[root@server4 conf.d]# cat /tmp/testfile

custom format: cccccccc

custom format: dddddddd
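The `format => "custom format: %{message}"` string is a template: each `%{field}` reference is replaced by that field's value from the event. The following is a simplified Python sketch of that substitution, not Logstash's implementation; `render` is a hypothetical name, and leaving unknown fields untouched is an assumption of this sketch.

```python
import re


def render(template, event):
    """Replace each %{field} reference with the event's value for that field."""
    def lookup(match):
        field = match.group(1)
        # Assumption: a reference to a missing field is left as literal text.
        return str(event.get(field, match.group(0)))
    return re.sub(r"%\{(\w+)\}", lookup, template)


event = {"message": "cccccccc", "host": "server4"}
print(render("custom format: %{message}", event))  # custom format: cccccccc
```

Changing the template to something like `"%{host}: %{message}"` would write the host name in front of each line instead.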

4. Importing a log file into Logstash

Edit the file:

[root@server4 conf.d]# vim es.conf

Configure the log import:

input {

file {

path => "/var/log/messages"

start_position => "beginning"

}

}

output {

elasticsearch {

hosts => ["172.25.17.4"]

index => "message-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

}

Run:

[root@server4 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/es.conf

Settings: Default pipeline workers: 1

Pipeline main started

Open another terminal, ssh to server4, and write some log entries:

[root@server4 conf.d]# logger eeeeeeeeee

[root@server4 conf.d]# logger eeeeeeeeee

[root@server4 conf.d]# logger eeeeeeeeee

[root@server4 conf.d]# logger eeeeeeeeee

[root@server4 conf.d]# logger eeeeeeeeee

[root@server4 conf.d]# logger eeeeeeeeee

The new entries then show up automatically in the terminal running Logstash:

[root@server4 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/es.conf

Settings: Default pipeline workers: 1

Pipeline main started

{

"message" => "Aug 25 12:51:29 server1 root: ddddddddd",

"@version" => "1",

"@timestamp" => "2018-08-25T04:51:30.467Z",

"path" => "/var/log/messages",

"host" => "server4"

}

{

"message" => "Aug 25 12:51:33 server1 root: ddddddddd",

"@version" => "1",

"@timestamp" => "2018-08-25T04:51:34.481Z",

"path" => "/var/log/messages",

"host" => "server4"

}

{

"message" => "Aug 25 12:51:34 server1 root: ddddddddd",

"@version" => "1",

"@timestamp" => "2018-08-25T04:51:34.482Z",

"path" => "/var/log/messages",

"host" => "server4"

}

{

"message" => "Aug 25 12:51:34 server1 root: ddddddddd",

"@version" => "1",

"@timestamp" => "2018-08-25T04:51:35.484Z",

"path" => "/var/log/messages",

"host" => "server4"

}

{

"message" => "Aug 25 12:51:35 server1 root: ddddddddd",

"@version" => "1",

"@timestamp" => "2018-08-25T04:51:35.576Z",

"path" => "/var/log/messages",

"host" => "server4"

}

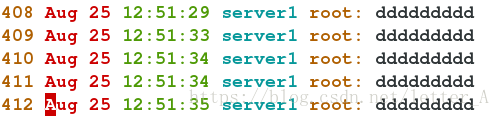

The entries are visible in the browser.

They are also recorded in the system log:

[root@server4 conf.d]# vim /var/log/messages

5. Receiving logs from server5 on port 514

On server4:

[root@server4 conf.d]# vim es.conf

input {

syslog {

port => 514

}

}

output {

elasticsearch {

hosts => ["172.25.17.4"]

index => "message-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

}

On server5, add a rule to /etc/rsyslog.conf that forwards all logs to server4 (@@ means TCP):

[root@server5 elasticsearch]# vim /etc/rsyslog.conf

# ### end of the forwarding rule ###

*.* @@172.25.17.4:514

Restart the service:

[root@server5 elasticsearch]# /etc/init.d/rsyslog restart

Shutting down system logger: [ OK ]

Starting system logger: [ OK ]

Run Logstash on server4:

[root@server4 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/es.conf

Settings: Default pipeline workers: 1

Pipeline main started

On server5, write some log entries:

[root@server5 elasticsearch]# logger hello world

[root@server5 elasticsearch]# logger hello world

[root@server5 elasticsearch]# logger hello world

[root@server5 elasticsearch]# logger hello world

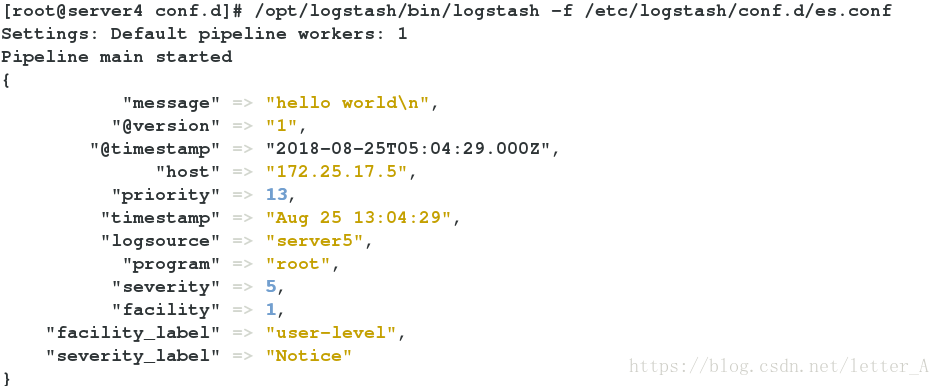

server4 receives the data:

[root@server4 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/es.conf

Settings: Default pipeline workers: 1

Pipeline main started

{

"message" => "hello world\n",

"@version" => "1",

"@timestamp" => "2018-08-25T05:04:29.000Z",

"host" => "172.25.17.5",

"priority" => 13,

"timestamp" => "Aug 25 13:04:29",

"logsource" => "server5",

"program" => "root",

"severity" => 5,

"facility" => 1,

"facility_label" => "user-level",

"severity_label" => "Notice"

}

{

"message" => "hello world\n",

"@version" => "1",

"@timestamp" => "2018-08-25T05:04:30.000Z",

"host" => "172.25.17.5",

"priority" => 13,

"timestamp" => "Aug 25 13:04:30",

"logsource" => "server5",

"program" => "root",

"severity" => 5,

"facility" => 1,

"facility_label" => "user-level",

"severity_label" => "Notice"

}

{

"message" => "hello world\n",

"@version" => "1",

"@timestamp" => "2018-08-25T05:04:30.000Z",

"host" => "172.25.17.5",

"priority" => 13,

"timestamp" => "Aug 25 13:04:30",

"logsource" => "server5",

"program" => "root",

"severity" => 5,

"facility" => 1,

"facility_label" => "user-level",

"severity_label" => "Notice"

}
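In the events above, `priority`, `facility`, and `severity` are related: a syslog priority value is the facility multiplied by 8 plus the severity. A short Python sketch of the decoding (the function name is hypothetical, and the severity names follow the standard syslog naming):

```python
SEVERITY_NAMES = [
    "Emergency", "Alert", "Critical", "Error",
    "Warning", "Notice", "Informational", "Debug",
]


def decode_priority(priority):
    """Split a syslog priority value into (facility, severity)."""
    facility = priority // 8   # upper bits select the facility
    severity = priority % 8    # lowest three bits select the severity
    return facility, severity


facility, severity = decode_priority(13)
print(facility, severity, SEVERITY_NAMES[severity])  # 1 5 Notice
```

This matches the output above: priority 13 decodes to facility 1 (user-level) and severity 5 (Notice), which is what `logger` uses by default.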

6. Using the multiline filter to merge log entries that start with [ into single events

Create a new file:

[root@server4 conf.d]# vim aaa.conf

File contents:

input {

file {

path => "/var/log/elasticsearch/my-es.log"

start_position => "beginning"

}

}

filter {

multiline {

# type => "type"

pattern => "^\["

negate => true

what => "previous"

}

}

output {

elasticsearch {

hosts => ["172.25.17.4"]

index => "es-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

}

Run:

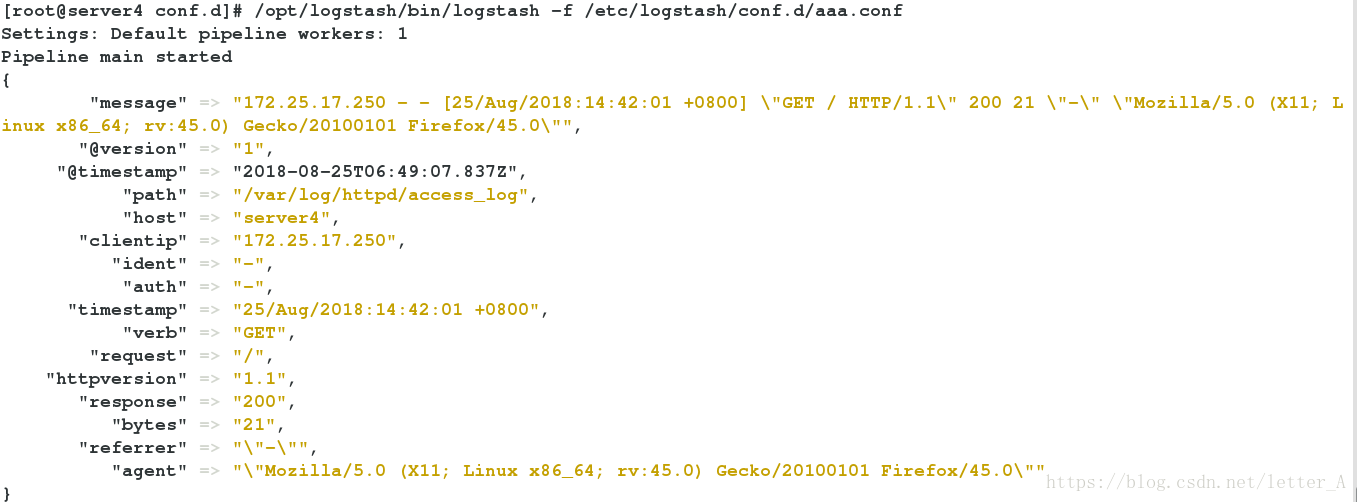

[root@server4 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/aaa.conf

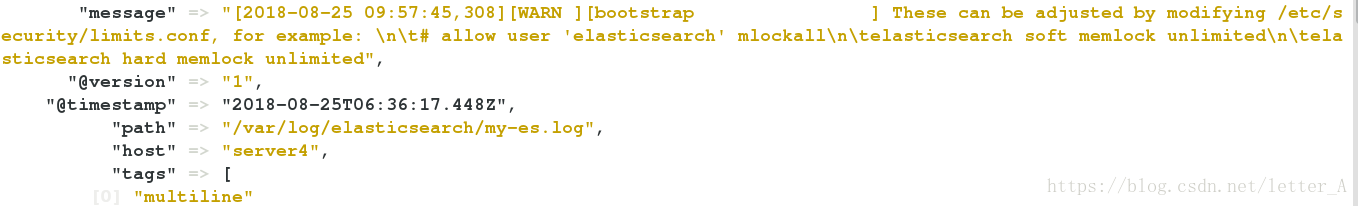

Content that previously spanned several lines is now merged into a single event.
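The three multiline settings work together: `pattern => "^\["` marks lines that begin a new entry, `negate => true` selects the lines that do not match, and `what => "previous"` attaches those lines to the entry before them. A minimal Python sketch of that rule, not the plugin's own code; the sample log lines are made up for illustration:

```python
import re


def merge_multiline(lines, pattern=r"^\[", negate=True):
    """Group lines into events the way what => "previous" does."""
    regex = re.compile(pattern)
    events = []
    for line in lines:
        matched = bool(regex.search(line))
        # With negate => true, a line that does NOT match is a continuation.
        continuation = (not matched) if negate else matched
        if continuation and events:
            events[-1] += "\n" + line
        else:
            events.append(line)
    return events


# Hypothetical Elasticsearch-style log lines with a Java stack trace.
sample = [
    "[2018-08-25 10:00:00,000][WARN ][transport] exception caught",
    "java.lang.IllegalStateException: example",
    "    at com.example.Foo.bar(Foo.java:1)",
    "[2018-08-25 10:00:01,000][INFO ][node] started",
]
events = merge_multiline(sample)
print(len(events))  # 2
```

The stack trace lines do not start with `[`, so they are folded into the WARN entry, and the next `[` line starts a new event.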

7. Processing Apache logs

Install Apache, create an index page, and start the service:

[root@server4 conf.d]# yum install httpd -y

[root@server4 conf.d]# cd /var/www/html/

[root@server4 html]# ls

[root@server4 html]# vim index.html

[root@server4 html]# /etc/init.d/httpd start

Starting httpd: httpd: Could not reliably determine the server's fully qualified domain name, using 172.25.17.4 for ServerName

[ OK ]

Edit the file:

[root@server4 conf.d]# vim aaa.conf

Contents:

input {

file {

path => ["/var/log/httpd/access_log","/var/log/httpd/error_log"]

start_position => "beginning"

}

}

filter {

grok {

match => { "message" => "%{COMBINEDAPACHELOG}"}

}

}

output {

elasticsearch {

hosts => ["172.25.17.4"]

index => "apache-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

}

Run Logstash with this configuration, as in the previous section.
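The grok filter splits each access log line into named fields. The sketch below approximates that with a plain Python regular expression. It is a simplification and not the real `COMBINEDAPACHELOG` definition: the field names are the ones I understand the pattern to emit in this Logstash generation, the sample request line is invented, and the real pattern keeps the surrounding quotes on the referrer and agent values.

```python
import re

# Simplified stand-in for the combined log format.
COMBINED = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>\S+) (?P<request>\S+) HTTP/(?P<httpversion>[\d.]+)" '
    r'(?P<response>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_combined(line):
    """Return a dict of fields, or None when the line is not in combined format."""
    match = COMBINED.match(line)
    return match.groupdict() if match else None


# Hypothetical access_log entry.
line = ('172.25.17.250 - - [25/Aug/2018:13:30:00 +0800] '
        '"GET /index.html HTTP/1.1" 200 12 "-" "curl/7.19.7"')
fields = parse_combined(line)
print(fields["clientip"], fields["verb"], fields["response"])
```

Lines from error_log do not follow the combined format, so the sketch returns None for them. In Logstash, grok tags such events with `_grokparsefailure` and leaves the original message unparsed.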
