Training an R-FCN + ResNet-50 Model on Your Own Dataset (Python Version)

阿新 • Published: 2019-02-16

Notes:

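This article assumes that you have already prepared your dataset in the same format as VOC2007, and that your Linux system already has the environment caffe requires (there are plenty of tutorials on blogs). The modifications needed for training are described below.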

py-R-FCN source code download:

There is also a Matlab version:

This article uses the Python version.

Preparation:

(1) Configure the caffe environment (see the tutorials online)

(2) Install cython, python-opencv, and easydict

pip install cython

pip install easydict

apt-get install python-opencv

After that, we can start configuring R-FCN.
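Before building anything, it can be worth confirming that these packages are importable. The following is a minimal sketch, assuming Python 3 and assuming the import names Cython, easydict and cv2 (the module that python-opencv provides); the helper name check_dependencies is hypothetical. py-R-FCN itself dates from the Python 2 era, so if your caffe build targets Python 2, run an equivalent check with that interpreter instead.

```python
import importlib.util

# pip/apt package name -> the module name it is imported as (assumed mapping)
REQUIRED_MODULES = {
    "cython": "Cython",
    "easydict": "easydict",
    "python-opencv": "cv2",
}


def check_dependencies(modules=REQUIRED_MODULES):
    """Return {package: True/False} depending on whether its module can be found."""
    return {
        package: importlib.util.find_spec(module) is not None
        for package, module in modules.items()
    }


if __name__ == "__main__":
    for package, ok in check_dependencies().items():
        print(f"{package}: {'OK' if ok else 'MISSING'}")
```

find_spec only locates the module without importing it, so a broken OpenCV install that fails at import time would still need a real `import cv2` to show up.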

1. Download py-R-FCN

git clone https://github.com/Orpine/py-R-FCN.git

Below, the path of your py-R-FCN checkout is referred to as RFCN_ROOT.

2. Download caffe

Note: this caffe is Microsoft's version.

cd $RFCN_ROOT

git clone https://github.com/Microsoft/caffe.git

3. Build Cython

cd $RFCN_ROOT/lib

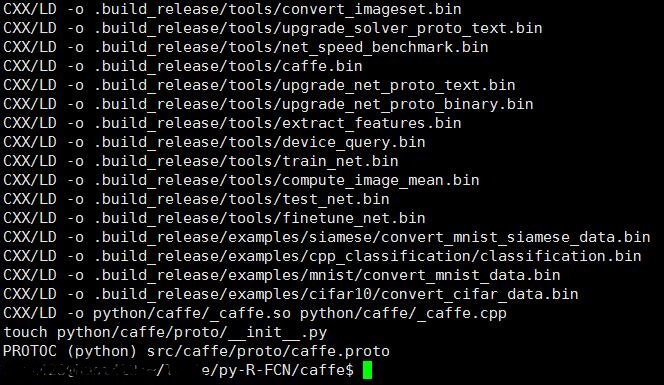

make

4. Build caffe and pycaffe

First copy the example config:

cd $RFCN_ROOT/caffe

cp Makefile.config.example Makefile.config

Then edit Makefile.config. caffe must support Python layers, so WITH_PYTHON_LAYER := 1 is required. For the other settings, refer to: Makefile.config

Next:

cd $RFCN_ROOT/caffe

make -j8 && make pycaffe
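If pycaffe builds but the Python layers used by R-FCN (such as RoIDataLayer) later fail to load, one thing worth checking is whether the WITH_PYTHON_LAYER line is really uncommented; in the BVLC example config it ships commented out. A minimal Python 3 sketch with hypothetical helper names:

```python
import re

# An active (uncommented) "WITH_PYTHON_LAYER := 1" line; "# WITH_PYTHON_LAYER := 1" does not match
PYTHON_LAYER_RE = re.compile(r"^\s*WITH_PYTHON_LAYER\s*:=\s*1\s*$")


def python_layer_enabled(config_text):
    """True if any uncommented line of the config sets WITH_PYTHON_LAYER := 1."""
    return any(PYTHON_LAYER_RE.match(line) for line in config_text.splitlines())


def makefile_has_python_layer(path):
    """Read a Makefile.config from disk and apply python_layer_enabled to it."""
    with open(path) as f:
        return python_layer_enabled(f.read())
```

For example, makefile_has_python_layer(os.path.join(os.environ["RFCN_ROOT"], "caffe", "Makefile.config")) should return True. If it does not, fix the line and rebuild caffe and pycaffe (running `make clean` first is the safe option).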

5. Test the demo

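After the steps above, we can test whether everything runs correctly. Place the R-FCN models in $RFCN_ROOT/data so that the layout looks like this:

$RFCN_ROOT/data/rfcn_models/resnet50_rfcn_final.caffemodel

$RFCN_ROOT/data/rfcn_models/resnet101_rfcn_final.caffemodel

Then run: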

cd $RFCN_ROOT

./tools/demo_rfcn.py --net ResNet-50

6. Train on our own dataset

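(1) Copy the dataset

Assuming the dataset is already prepared in the same format as VOC2007, copy it to $RFCN_ROOT/data. It should look like this (plus several other directories):

$RFCN_ROOT/data/VOCdevkit0712/ # development kit

$RFCN_ROOT/data/VOCdevkit0712/VOCcode/ # VOC utility code

$RFCN_ROOT/data/VOCdevkit0712/VOC0712/ # image sets, annotations, etc.

If your folders are not named VOCdevkit0712 and VOC0712, simply rename them to use 0712. (The original author trained on VOC2007 plus VOC2012, which is why the folder names contain 0712. You could change the code instead, but that is more work; renaming the folders is simpler.)

(2) Download the pretrained model

This article uses ResNet-50 as the example, so download ResNet-50-model.caffemodel. Download link: http://pan.baidu.com/s/1slRHD0L password: r3ki

Then put the caffemodel in $RFCN_ROOT/data/imagenet_models (create this folder under data if it does not exist).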
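Because the folder names matter, a quick check of the layout under $RFCN_ROOT/data can save a failed training start. This is a minimal sketch, assuming the standard VOC subfolders (Annotations, JPEGImages, ImageSets/Main) and assuming that the imdb names voc_0712_trainval and voc_0712_test used by the training script read ImageSets/Main/trainval.txt and ImageSets/Main/test.txt, as py-faster-rcnn-style loaders do; missing_paths is a hypothetical helper.

```python
import os

# Paths expected under $RFCN_ROOT/data, following the folder names used in this article.
# The VOC subfolders and the trainval/test split files are assumptions based on the
# standard VOC layout and the imdb names voc_0712_trainval / voc_0712_test.
EXPECTED_PATHS = [
    "VOCdevkit0712/VOC0712/Annotations",
    "VOCdevkit0712/VOC0712/JPEGImages",
    "VOCdevkit0712/VOC0712/ImageSets/Main/trainval.txt",
    "VOCdevkit0712/VOC0712/ImageSets/Main/test.txt",
    "imagenet_models/ResNet-50-model.caffemodel",
]


def missing_paths(data_dir, expected=EXPECTED_PATHS):
    """Return the expected entries (relative paths) that do not exist under data_dir."""
    return [rel for rel in expected if not os.path.exists(os.path.join(data_dir, rel))]
```

Usage: print(missing_paths(os.path.join(os.environ["RFCN_ROOT"], "data"))) should print an empty list once everything is in place.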

(3) Modify the network definition
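Every number changed in the prototxt files of this step follows from cls_num (the number of classes + 1 for background) and the 7×7 position-sensitive grid (group_size: 7 in the PSROIPooling layers). A minimal Python sketch, with hypothetical helper names, that computes the expected values and flags any line whose trailing comment tag (#cls_num, #4*cls_num, and so on, as used in this article) disagrees with its number:

```python
import re

GROUP_SIZE = 7  # group_size / score_maps_size of the PSROIPooling layers in this article


def rfcn_values(num_classes, group_size=GROUP_SIZE):
    """Expected field values, keyed by the comment tag used next to each field."""
    cls_num = num_classes + 1  # +1 for __background__
    area = group_size * group_size
    return {
        "#cls_num": cls_num,
        "#4*cls_num": 4 * cls_num,
        "#cls_num*(score_maps_size^2)": cls_num * area,
        "#4*cls_num*(score_maps_size^2)": 4 * cls_num * area,
    }


# A number, an optional closing quote (as in param_str), then a comment tag
TAGGED_VALUE_RE = re.compile(r"(\d+)\"?\s*(#[^\s#]+)")


def check_prototxt(text, num_classes, group_size=GROUP_SIZE):
    """Return (line_no, found, expected) for every tagged value that disagrees."""
    expected = rfcn_values(num_classes, group_size)
    problems = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        m = TAGGED_VALUE_RE.search(line)
        if m and m.group(2) in expected:
            found, want = int(m.group(1)), expected[m.group(2)]
            if found != want:
                problems.append((line_no, found, want))
    return problems
```

For 15 classes, rfcn_values(15) gives 16, 64, 784 and 3136, the same values used below. check_prototxt(open(path).read(), 15) returns an empty list when every tagged line agrees; untagged fields are not checked, so they still need a manual look.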

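Open $RFCN_ROOT/models/pascal_voc/ResNet-50/rfcn_end2end (using end2end as the example).

Note: cls_num below means the number of classes in your dataset + 1 (background). For example, I have 15 classes; adding 1 background class gives cls_num=16. In the comments, score_maps_size is the PSROIPooling group_size (7), so cls_num*(score_maps_size^2) = 16*49 = 784.

<1> Modify class-aware/train_ohem.prototxt

layer {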

name: 'input-data'

type: 'Python'

top: 'data'

top: 'im_info'

top: 'gt_boxes'

python_param {

module: 'roi_data_layer.layer'

layer: 'RoIDataLayer'

param_str: "'num_classes': 16" #cls_num

}

}

layer {

name: 'roi-data'

type: 'Python'

bottom: 'rpn_rois'

bottom: 'gt_boxes'

top: 'rois'

top: 'labels'

top: 'bbox_targets'

top: 'bbox_inside_weights'

top: 'bbox_outside_weights'

python_param {

module: 'rpn.proposal_target_layer'

layer: 'ProposalTargetLayer'

param_str: "'num_classes': 16" #cls_num

}

}

layer {

bottom: "conv_new_1"

top: "rfcn_cls"

name: "rfcn_cls"

type: "Convolution"

convolution_param {

num_output: 784 #cls_num*(score_maps_size^2)

kernel_size: 1

pad: 0

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

param {

lr_mult: 1.0

}

param {

lr_mult: 2.0

}

}

layer {

bottom: "conv_new_1"

top: "rfcn_bbox"

name: "rfcn_bbox"

type: "Convolution"

convolution_param {

num_output: 3136 #4*cls_num*(score_maps_size^2)

kernel_size: 1

pad: 0

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

param {

lr_mult: 1.0

}

param {

lr_mult: 2.0

}

}

layer {

bottom: "rfcn_cls"

bottom: "rois"

top: "psroipooled_cls_rois"

name: "psroipooled_cls_rois"

type: "PSROIPooling"

psroi_pooling_param {

spatial_scale: 0.0625

output_dim: 16 #cls_num

group_size: 7

}

}

layer {

bottom: "rfcn_bbox"

bottom: "rois"

top: "psroipooled_loc_rois"

name: "psroipooled_loc_rois"

type: "PSROIPooling"

psroi_pooling_param {

spatial_scale: 0.0625

output_dim: 64 #4*cls_num

group_size: 7

}

}

<2> Modify class-aware/test.prototxt

layer {

bottom: "conv_new_1"

top: "rfcn_cls"

name: "rfcn_cls"

type: "Convolution"

convolution_param {

num_output: 784 #cls_num*(score_maps_size^2)

kernel_size: 1

pad: 0

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

param {

lr_mult: 1.0

}

param {

lr_mult: 2.0

}

}

layer {

bottom: "conv_new_1"

top: "rfcn_bbox"

name: "rfcn_bbox"

type: "Convolution"

convolution_param {

num_output: 3136 #4*cls_num*(score_maps_size^2)

kernel_size: 1

pad: 0

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

param {

lr_mult: 1.0

}

param {

lr_mult: 2.0

}

}

layer {

bottom: "rfcn_cls"

bottom: "rois"

top: "psroipooled_cls_rois"

name: "psroipooled_cls_rois"

type: "PSROIPooling"

psroi_pooling_param {

spatial_scale: 0.0625

output_dim: 16 #cls_num

group_size: 7

}

}

layer {

bottom: "rfcn_bbox"

bottom: "rois"

top: "psroipooled_loc_rois"

name: "psroipooled_loc_rois"

type: "PSROIPooling"

psroi_pooling_param {

spatial_scale: 0.0625

output_dim: 64 #4*cls_num

group_size: 7

}

}

layer {

name: "cls_prob_reshape"

type: "Reshape"

bottom: "cls_prob_pre"

top: "cls_prob"

reshape_param {

shape {

dim: -1

dim: 16 #cls_num

}

}

}

layer {

name: "bbox_pred_reshape"

type: "Reshape"

bottom: "bbox_pred_pre"

top: "bbox_pred"

reshape_param {

shape {

dim: -1

dim: 64 #4*cls_num

}

}

}

<3> Modify train_agnostic.prototxt

layer {

name: 'input-data'

type: 'Python'

top: 'data'

top: 'im_info'

top: 'gt_boxes'

python_param {

module: 'roi_data_layer.layer'

layer: 'RoIDataLayer'

param_str: "'num_classes': 16" #cls_num

}

}

layer {

bottom: "conv_new_1"

top: "rfcn_cls"

name: "rfcn_cls"

type: "Convolution"

convolution_param {

num_output: 784 #cls_num*(score_maps_size^2) ###

kernel_size: 1

pad: 0

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

param {

lr_mult: 1.0

}

param {

lr_mult: 2.0

}

}

layer {

bottom: "rfcn_cls"

bottom: "rois"

top: "psroipooled_cls_rois"

name: "psroipooled_cls_rois"

type: "PSROIPooling"

psroi_pooling_param {

spatial_scale: 0.0625

output_dim: 16 #cls_num ###

group_size: 7

}

}

<4> Modify train_agnostic_ohem.prototxt

layer {

name: 'input-data'

type: 'Python'

top: 'data'

top: 'im_info'

top: 'gt_boxes'

python_param {

module: 'roi_data_layer.layer'

layer: 'RoIDataLayer'

param_str: "'num_classes': 16" #cls_num ###

}

}

layer {

bottom: "conv_new_1"

top: "rfcn_cls"

name: "rfcn_cls"

type: "Convolution"

convolution_param {

num_output: 784 #cls_num*(score_maps_size^2) ###

kernel_size: 1

pad: 0

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

param {

lr_mult: 1.0

}

param {

lr_mult: 2.0

}

}

layer {

bottom: "rfcn_cls"

bottom: "rois"

top: "psroipooled_cls_rois"

name: "psroipooled_cls_rois"

type: "PSROIPooling"

psroi_pooling_param {

spatial_scale: 0.0625

output_dim: 16 #cls_num ###

group_size: 7

}

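}

(The following layers, which include cls_prob_reshape, appear to come from the test-time definition test_agnostic.prototxt in the same directory rather than from train_agnostic_ohem.prototxt; apply the same cls_num changes there.)

layer {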

bottom: "conv_new_1"

top: "rfcn_cls"

name: "rfcn_cls"

type: "Convolution"

convolution_param {

num_output: 784 #cls_num*(score_maps_size^2) ###

kernel_size: 1

pad: 0

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

param {

lr_mult: 1.0

}

param {

lr_mult: 2.0

}

}

layer {

bottom: "rfcn_cls"

bottom: "rois"

top: "psroipooled_cls_rois"

name: "psroipooled_cls_rois"

type: "PSROIPooling"

psroi_pooling_param {

spatial_scale: 0.0625

output_dim: 16 #cls_num ###

group_size: 7

}

}

layer {

name: "cls_prob_reshape"

type: "Reshape"

bottom: "cls_prob_pre"

top: "cls_prob"

reshape_param {

shape {

dim: -1

dim: 16 #cls_num ###

}

}

}

(4) Modify the code

<1> $RFCN_ROOT/lib/datasets/pascal_voc.py

class pascal_voc(imdb):
    def __init__(self, image_set, year, devkit_path=None):
        imdb.__init__(self, 'voc_' + year + '_' + image_set)
        self._year = year
        self._image_set = image_set
        self._devkit_path = self._get_default_path() if devkit_path is None \
                            else devkit_path
        self._data_path = os.path.join(self._devkit_path, 'VOC' + self._year)
        self._classes = ('__background__', # always index 0
                         'your_label_1', 'your_label_2', 'your_label_3', 'your_label_4'
                         )

<2> $RFCN_ROOT/experiments/scripts/rfcn_end2end_ohem.sh

case $DATASET in
  pascal_voc)
    TRAIN_IMDB="voc_0712_trainval"
    TEST_IMDB="voc_0712_test"
    PT_DIR="pascal_voc"
    ITERS=110000

Just change ITERS to the number of iterations you want.
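To fill in the label tuple in pascal_voc.py and to double-check cls_num, you can read the class names straight from your annotation files. This is a minimal sketch, assuming VOC-style XML files with <object><name> entries in .../VOC0712/Annotations. It lowercases the names because the py-faster-rcnn-style annotation loader in pascal_voc.py appears to lowercase each name before looking it up (check your copy), which means the labels in self._classes should be lowercase too. The helper names are hypothetical.

```python
import glob
import os
import xml.etree.ElementTree as ET


def collect_class_names(annotation_dir):
    """Sorted, lowercased <object><name> values found in all *.xml files of annotation_dir."""
    names = set()
    for xml_path in glob.glob(os.path.join(annotation_dir, "*.xml")):
        root = ET.parse(xml_path).getroot()
        for obj in root.findall("object"):
            name = obj.findtext("name")
            if name:
                # Lowercase + strip, mirroring how the annotation loader is assumed to look names up
                names.add(name.lower().strip())
    return sorted(names)


def classes_tuple(names):
    """The value for self._classes: background at index 0, then the labels."""
    return ("__background__",) + tuple(names)
```

len(classes_tuple(names)) is the cls_num to use in the prototxt files, and the same tuple can be pasted into CLASSES in demo_rfcn.py.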

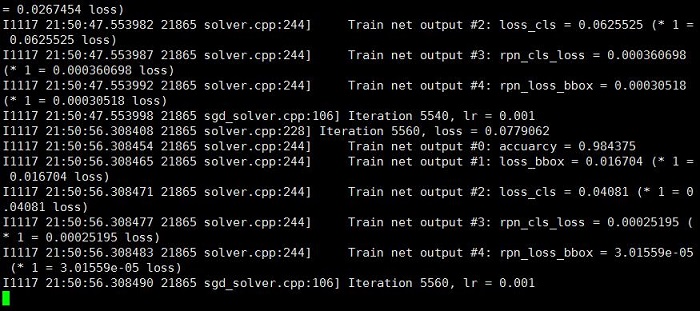

(5) Start training

cd $RFCN_ROOT

./experiments/scripts/rfcn_end2end_ohem.sh 0 ResNet-50 pascal_voc

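$RFCN_ROOT/experiments/scripts also contains other training methods that you can try. (With the modifications above, end2end training without OHEM is also set up; adapting the other training methods follows a similar process.)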

(6) Results

Copy the trained model (the last caffemodel in $RFCN_ROOT/output/rfcn_end2end_ohem/voc_0712_trainval) to $RFCN_ROOT/data/rfcn_models. Then open $RFCN_ROOT/tools/demo_rfcn.py and change CLASSES to your labels, NETS to your model, and im_names to your test images (placed in data/demo). Finally:

cd $RFCN_ROOT

./tools/demo_rfcn.py --net ResNet-50
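Caffe names snapshots <snapshot_prefix>_iter_<N>.caffemodel, so "the last caffemodel" is the one with the largest N. Sorting the file names as strings would put iter_9000 after iter_10000, so this minimal sketch parses N as a number. The helper names, and the file names in the test data, are hypothetical.

```python
import os
import re
import shutil

# Caffe snapshot naming: <snapshot_prefix>_iter_<N>.caffemodel
ITER_RE = re.compile(r"_iter_(\d+)\.caffemodel$")


def latest_caffemodel(output_dir):
    """Path of the *_iter_N.caffemodel with the largest N in output_dir, or None."""
    best, best_iter = None, -1
    for fname in os.listdir(output_dir):
        m = ITER_RE.search(fname)
        if m and int(m.group(1)) > best_iter:
            best, best_iter = os.path.join(output_dir, fname), int(m.group(1))
    return best


def copy_latest(output_dir, models_dir):
    """Copy the newest snapshot into models_dir and return the destination path."""
    src = latest_caffemodel(output_dir)
    if src is None:
        raise FileNotFoundError("no *_iter_N.caffemodel in " + output_dir)
    os.makedirs(models_dir, exist_ok=True)
    return shutil.copy(src, models_dir)
```

For this article's layout, that would be copy_latest(os.path.join(root, "output/rfcn_end2end_ohem/voc_0712_trainval"), os.path.join(root, "data/rfcn_models")) with root = os.environ["RFCN_ROOT"]; the returned file name is what NETS in demo_rfcn.py should point at.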