The Simplest libswscale Example Based on FFmpeg (YUV to RGB)


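This article documents an example based on FFmpeg's libswscale. Libswscale implements conversion between image pixel formats, for example between YUV and RGB, as well as image scaling (for example stretching 640x360 to 1280x720). Libswscale is also optimized for specific instruction sets, so its conversions are typically much faster than a hand-written plain C implementation.

The program in this article converts a video with pixel format YUV420P and resolution 480x272 into a video with pixel format RGB24 and resolution 1280x720.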

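Workflow

Simple initialization method

Libswscale is easy to use. There are only three essential functions:

(1) sws_getContext(): initializes an SwsContext structure from the given parameters.

(2) sws_scale(): converts one frame of image data.

(3) sws_freeContext(): frees the SwsContext structure.

sws_getContext() can also be replaced by another interface function, sws_getCachedContext().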

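A more complex but more flexible initialization method

Besides calling sws_getContext(), there is another way to initialize an SwsContext that is more flexible and exposes more parameters. It uses the following functions:

(1) sws_alloc_context(): allocates memory for an SwsContext structure.

(2) av_opt_set_XXX(): sets the fields of the SwsContext through av_opt_set_int(), av_opt_set() and related functions. Note that the definition of SwsContext is not visible to callers, so its members cannot be assigned directly; they must be set through the av_opt_set() family of functions.

(3) sws_init_context(): initializes the SwsContext structure.

This method can configure parameters that sws_getContext() cannot. One example is whether the YUV values of the image follow the JPEG range (Y, U and V all span 0-255) or the MPEG range (Y spans 16-235; U and V span 16-240).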

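Background topics

The following sections cover several topics that come up when processing image pixel data: pixel formats, image scaling, the YUV value range, and color gamut.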

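Pixel formats

Pixel format basics were covered in an earlier article and are not repeated here. This section records the pixel formats that FFmpeg supports. A few points to note:

(1) The names of all pixel formats begin with "AV_PIX_FMT_".

(2) A name that ends with "P" generally denotes a planar format; otherwise the format is packed. A planar format stores each component in a separate array. For example, AV_PIX_FMT_YUV420P is stored as follows:

data[0]: Y1, Y2, Y3, Y4, Y5, Y6, Y7, Y8……

data[1]: U1, U2, U3, U4……

data[2]: V1, V2, V3, V4……

A packed format stores all of its data in a single array. For example, AV_PIX_FMT_RGB24 is stored as follows:

data[0]: R1, G1, B1, R2, G2, B2, R3, G3, B3, R4, G4, B4……

(3) A name that ends with "BE" denotes a big-endian format; a name that ends with "LE" denotes a little-endian format.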
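The planar and packed layouts above can be turned into simple offset arithmetic. The sketch below is only an illustration: the helper names `yuv420p_layout` and `rgb24_offset` are hypothetical, and it assumes a tightly packed buffer with no row padding, which is how the raw .yuv and .rgb files used later in this article are laid out.

```cpp
#include <cassert>
#include <cstddef>

// Byte offsets of the three planes of one YUV420P frame stored back to back
// in a single buffer with no row padding (an assumption of this sketch).
struct Yuv420pLayout {
    std::size_t y_offset;  // start of the Y plane (data[0])
    std::size_t u_offset;  // start of the U plane (data[1])
    std::size_t v_offset;  // start of the V plane (data[2])
    std::size_t total;     // size of the whole frame in bytes
};

Yuv420pLayout yuv420p_layout(int width, int height) {
    assert(width > 0 && height > 0);
    const std::size_t luma = static_cast<std::size_t>(width) * height;
    // Each chroma plane is subsampled by 2 in both directions (rounded up).
    const std::size_t chroma =
        static_cast<std::size_t>((width + 1) / 2) * ((height + 1) / 2);
    return {0, luma, luma + chroma, luma + 2 * chroma};
}

// Byte index of one component of pixel (x, y) in a packed RGB24 buffer.
// component: 0 = R, 1 = G, 2 = B.
std::size_t rgb24_offset(int width, int x, int y, int component) {
    assert(x >= 0 && x < width && y >= 0 && component >= 0 && component < 3);
    return (static_cast<std::size_t>(y) * width + x) * 3 + component;
}
```

For a 480x272 frame, `yuv420p_layout(480, 272)` places the U plane at byte 130560 and the V plane at byte 163200, for a total of 195840 bytes per frame. In RGB24, the blue component of the second pixel of the first row sits at byte index 5, matching the R1, G1, B1, R2, G2, B2 ordering shown above.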

The pixel formats supported by FFmpeg are defined in libavutil\pixfmt.h as an enumeration named AVPixelFormat, shown below.

/**

* Pixel format.

*

* @note

* AV_PIX_FMT_RGB32 is handled in an endian-specific manner. An RGBA

* color is put together as:

* (A << 24) | (R << 16) | (G << 8) | B

* This is stored as BGRA on little-endian CPU architectures and ARGB on

* big-endian CPUs.

*

* @par

* When the pixel format is palettized RGB (AV_PIX_FMT_PAL8), the palettized

* image data is stored in AVFrame.data[0]. The palette is transported in

* AVFrame.data[1], is 1024 bytes long (256 4-byte entries) and is

* formatted the same as in AV_PIX_FMT_RGB32 described above (i.e., it is

* also endian-specific). Note also that the individual RGB palette

* components stored in AVFrame.data[1] should be in the range 0..255.

* This is important as many custom PAL8 video codecs that were designed

* to run on the IBM VGA graphics adapter use 6-bit palette components.

*

* @par

* For all the 8bit per pixel formats, an RGB32 palette is in data[1] like

* for pal8. This palette is filled in automatically by the function

* allocating the picture.

*

* @note

* Make sure that all newly added big-endian formats have (pix_fmt & 1) == 1

* and that all newly added little-endian formats have (pix_fmt & 1) == 0.

* This allows simpler detection of big vs little-endian.

*/

enum AVPixelFormat {

AV_PIX_FMT_NONE = -1,

AV_PIX_FMT_YUV420P, ///< planar YUV 4:2:0, 12bpp, (1 Cr & Cb sample per 2x2 Y samples)

AV_PIX_FMT_YUYV422, ///< packed YUV 4:2:2, 16bpp, Y0 Cb Y1 Cr

AV_PIX_FMT_RGB24, ///< packed RGB 8:8:8, 24bpp, RGBRGB...

AV_PIX_FMT_BGR24, ///< packed RGB 8:8:8, 24bpp, BGRBGR...

AV_PIX_FMT_YUV422P, ///< planar YUV 4:2:2, 16bpp, (1 Cr & Cb sample per 2x1 Y samples)

AV_PIX_FMT_YUV444P, ///< planar YUV 4:4:4, 24bpp, (1 Cr & Cb sample per 1x1 Y samples)

AV_PIX_FMT_YUV410P, ///< planar YUV 4:1:0, 9bpp, (1 Cr & Cb sample per 4x4 Y samples)

AV_PIX_FMT_YUV411P, ///< planar YUV 4:1:1, 12bpp, (1 Cr & Cb sample per 4x1 Y samples)

AV_PIX_FMT_GRAY8, ///< Y , 8bpp

AV_PIX_FMT_MONOWHITE, ///< Y , 1bpp, 0 is white, 1 is black, in each byte pixels are ordered from the msb to the lsb

AV_PIX_FMT_MONOBLACK, ///< Y , 1bpp, 0 is black, 1 is white, in each byte pixels are ordered from the msb to the lsb

AV_PIX_FMT_PAL8, ///< 8 bit with PIX_FMT_RGB32 palette

AV_PIX_FMT_YUVJ420P, ///< planar YUV 4:2:0, 12bpp, full scale (JPEG), deprecated in favor of PIX_FMT_YUV420P and setting color_range

AV_PIX_FMT_YUVJ422P, ///< planar YUV 4:2:2, 16bpp, full scale (JPEG), deprecated in favor of PIX_FMT_YUV422P and setting color_range

AV_PIX_FMT_YUVJ444P, ///< planar YUV 4:4:4, 24bpp, full scale (JPEG), deprecated in favor of PIX_FMT_YUV444P and setting color_range

#if FF_API_XVMC

AV_PIX_FMT_XVMC_MPEG2_MC,///< XVideo Motion Acceleration via common packet passing

AV_PIX_FMT_XVMC_MPEG2_IDCT,

#define AV_PIX_FMT_XVMC AV_PIX_FMT_XVMC_MPEG2_IDCT

#endif /* FF_API_XVMC */

AV_PIX_FMT_UYVY422, ///< packed YUV 4:2:2, 16bpp, Cb Y0 Cr Y1

AV_PIX_FMT_UYYVYY411, ///< packed YUV 4:1:1, 12bpp, Cb Y0 Y1 Cr Y2 Y3

AV_PIX_FMT_BGR8, ///< packed RGB 3:3:2, 8bpp, (msb)2B 3G 3R(lsb)

AV_PIX_FMT_BGR4, ///< packed RGB 1:2:1 bitstream, 4bpp, (msb)1B 2G 1R(lsb), a byte contains two pixels, the first pixel in the byte is the one composed by the 4 msb bits

AV_PIX_FMT_BGR4_BYTE, ///< packed RGB 1:2:1, 8bpp, (msb)1B 2G 1R(lsb)

AV_PIX_FMT_RGB8, ///< packed RGB 3:3:2, 8bpp, (msb)2R 3G 3B(lsb)

AV_PIX_FMT_RGB4, ///< packed RGB 1:2:1 bitstream, 4bpp, (msb)1R 2G 1B(lsb), a byte contains two pixels, the first pixel in the byte is the one composed by the 4 msb bits

AV_PIX_FMT_RGB4_BYTE, ///< packed RGB 1:2:1, 8bpp, (msb)1R 2G 1B(lsb)

AV_PIX_FMT_NV12, ///< planar YUV 4:2:0, 12bpp, 1 plane for Y and 1 plane for the UV components, which are interleaved (first byte U and the following byte V)

AV_PIX_FMT_NV21, ///< as above, but U and V bytes are swapped

AV_PIX_FMT_ARGB, ///< packed ARGB 8:8:8:8, 32bpp, ARGBARGB...

AV_PIX_FMT_RGBA, ///< packed RGBA 8:8:8:8, 32bpp, RGBARGBA...

AV_PIX_FMT_ABGR, ///< packed ABGR 8:8:8:8, 32bpp, ABGRABGR...

AV_PIX_FMT_BGRA, ///< packed BGRA 8:8:8:8, 32bpp, BGRABGRA...

AV_PIX_FMT_GRAY16BE, ///< Y , 16bpp, big-endian

AV_PIX_FMT_GRAY16LE, ///< Y , 16bpp, little-endian

AV_PIX_FMT_YUV440P, ///< planar YUV 4:4:0 (1 Cr & Cb sample per 1x2 Y samples)

AV_PIX_FMT_YUVJ440P, ///< planar YUV 4:4:0 full scale (JPEG), deprecated in favor of PIX_FMT_YUV440P and setting color_range

AV_PIX_FMT_YUVA420P, ///< planar YUV 4:2:0, 20bpp, (1 Cr & Cb sample per 2x2 Y & A samples)

#if FF_API_VDPAU

AV_PIX_FMT_VDPAU_H264,///< H.264 HW decoding with VDPAU, data[0] contains a vdpau_render_state struct which contains the bitstream of the slices as well as various fields extracted from headers

AV_PIX_FMT_VDPAU_MPEG1,///< MPEG-1 HW decoding with VDPAU, data[0] contains a vdpau_render_state struct which contains the bitstream of the slices as well as various fields extracted from headers

AV_PIX_FMT_VDPAU_MPEG2,///< MPEG-2 HW decoding with VDPAU, data[0] contains a vdpau_render_state struct which contains the bitstream of the slices as well as various fields extracted from headers

AV_PIX_FMT_VDPAU_WMV3,///< WMV3 HW decoding with VDPAU, data[0] contains a vdpau_render_state struct which contains the bitstream of the slices as well as various fields extracted from headers

AV_PIX_FMT_VDPAU_VC1, ///< VC-1 HW decoding with VDPAU, data[0] contains a vdpau_render_state struct which contains the bitstream of the slices as well as various fields extracted from headers

#endif

AV_PIX_FMT_RGB48BE, ///< packed RGB 16:16:16, 48bpp, 16R, 16G, 16B, the 2-byte value for each R/G/B component is stored as big-endian

AV_PIX_FMT_RGB48LE, ///< packed RGB 16:16:16, 48bpp, 16R, 16G, 16B, the 2-byte value for each R/G/B component is stored as little-endian

AV_PIX_FMT_RGB565BE, ///< packed RGB 5:6:5, 16bpp, (msb) 5R 6G 5B(lsb), big-endian

AV_PIX_FMT_RGB565LE, ///< packed RGB 5:6:5, 16bpp, (msb) 5R 6G 5B(lsb), little-endian

AV_PIX_FMT_RGB555BE, ///< packed RGB 5:5:5, 16bpp, (msb)1A 5R 5G 5B(lsb), big-endian, most significant bit to 0

AV_PIX_FMT_RGB555LE, ///< packed RGB 5:5:5, 16bpp, (msb)1A 5R 5G 5B(lsb), little-endian, most significant bit to 0

AV_PIX_FMT_BGR565BE, ///< packed BGR 5:6:5, 16bpp, (msb) 5B 6G 5R(lsb), big-endian

AV_PIX_FMT_BGR565LE, ///< packed BGR 5:6:5, 16bpp, (msb) 5B 6G 5R(lsb), little-endian

AV_PIX_FMT_BGR555BE, ///< packed BGR 5:5:5, 16bpp, (msb)1A 5B 5G 5R(lsb), big-endian, most significant bit to 1

AV_PIX_FMT_BGR555LE, ///< packed BGR 5:5:5, 16bpp, (msb)1A 5B 5G 5R(lsb), little-endian, most significant bit to 1

AV_PIX_FMT_VAAPI_MOCO, ///< HW acceleration through VA API at motion compensation entry-point, Picture.data[3] contains a vaapi_render_state struct which contains macroblocks as well as various fields extracted from headers

AV_PIX_FMT_VAAPI_IDCT, ///< HW acceleration through VA API at IDCT entry-point, Picture.data[3] contains a vaapi_render_state struct which contains fields extracted from headers

AV_PIX_FMT_VAAPI_VLD, ///< HW decoding through VA API, Picture.data[3] contains a vaapi_render_state struct which contains the bitstream of the slices as well as various fields extracted from headers

AV_PIX_FMT_YUV420P16LE, ///< planar YUV 4:2:0, 24bpp, (1 Cr & Cb sample per 2x2 Y samples), little-endian

AV_PIX_FMT_YUV420P16BE, ///< planar YUV 4:2:0, 24bpp, (1 Cr & Cb sample per 2x2 Y samples), big-endian

AV_PIX_FMT_YUV422P16LE, ///< planar YUV 4:2:2, 32bpp, (1 Cr & Cb sample per 2x1 Y samples), little-endian

AV_PIX_FMT_YUV422P16BE, ///< planar YUV 4:2:2, 32bpp, (1 Cr & Cb sample per 2x1 Y samples), big-endian

AV_PIX_FMT_YUV444P16LE, ///< planar YUV 4:4:4, 48bpp, (1 Cr & Cb sample per 1x1 Y samples), little-endian

AV_PIX_FMT_YUV444P16BE, ///< planar YUV 4:4:4, 48bpp, (1 Cr & Cb sample per 1x1 Y samples), big-endian

#if FF_API_VDPAU

AV_PIX_FMT_VDPAU_MPEG4, ///< MPEG4 HW decoding with VDPAU, data[0] contains a vdpau_render_state struct which contains the bitstream of the slices as well as various fields extracted from headers

#endif

AV_PIX_FMT_DXVA2_VLD, ///< HW decoding through DXVA2, Picture.data[3] contains a LPDIRECT3DSURFACE9 pointer

AV_PIX_FMT_RGB444LE, ///< packed RGB 4:4:4, 16bpp, (msb)4A 4R 4G 4B(lsb), little-endian, most significant bits to 0

AV_PIX_FMT_RGB444BE, ///< packed RGB 4:4:4, 16bpp, (msb)4A 4R 4G 4B(lsb), big-endian, most significant bits to 0

AV_PIX_FMT_BGR444LE, ///< packed BGR 4:4:4, 16bpp, (msb)4A 4B 4G 4R(lsb), little-endian, most significant bits to 1

AV_PIX_FMT_BGR444BE, ///< packed BGR 4:4:4, 16bpp, (msb)4A 4B 4G 4R(lsb), big-endian, most significant bits to 1

AV_PIX_FMT_GRAY8A, ///< 8bit gray, 8bit alpha

AV_PIX_FMT_BGR48BE, ///< packed RGB 16:16:16, 48bpp, 16B, 16G, 16R, the 2-byte value for each R/G/B component is stored as big-endian

AV_PIX_FMT_BGR48LE, ///< packed RGB 16:16:16, 48bpp, 16B, 16G, 16R, the 2-byte value for each R/G/B component is stored as little-endian

/**

* The following 12 formats have the disadvantage of needing 1 format for each bit depth.

* Notice that each 9/10 bits sample is stored in 16 bits with extra padding.

* If you want to support multiple bit depths, then using AV_PIX_FMT_YUV420P16* with the bpp stored separately is better.

*/

AV_PIX_FMT_YUV420P9BE, ///< planar YUV 4:2:0, 13.5bpp, (1 Cr & Cb sample per 2x2 Y samples), big-endian

AV_PIX_FMT_YUV420P9LE, ///< planar YUV 4:2:0, 13.5bpp, (1 Cr & Cb sample per 2x2 Y samples), little-endian

AV_PIX_FMT_YUV420P10BE,///< planar YUV 4:2:0, 15bpp, (1 Cr & Cb sample per 2x2 Y samples), big-endian

AV_PIX_FMT_YUV420P10LE,///< planar YUV 4:2:0, 15bpp, (1 Cr & Cb sample per 2x2 Y samples), little-endian

AV_PIX_FMT_YUV422P10BE,///< planar YUV 4:2:2, 20bpp, (1 Cr & Cb sample per 2x1 Y samples), big-endian

AV_PIX_FMT_YUV422P10LE,///< planar YUV 4:2:2, 20bpp, (1 Cr & Cb sample per 2x1 Y samples), little-endian

AV_PIX_FMT_YUV444P9BE, ///< planar YUV 4:4:4, 27bpp, (1 Cr & Cb sample per 1x1 Y samples), big-endian

AV_PIX_FMT_YUV444P9LE, ///< planar YUV 4:4:4, 27bpp, (1 Cr & Cb sample per 1x1 Y samples), little-endian

AV_PIX_FMT_YUV444P10BE,///< planar YUV 4:4:4, 30bpp, (1 Cr & Cb sample per 1x1 Y samples), big-endian

AV_PIX_FMT_YUV444P10LE,///< planar YUV 4:4:4, 30bpp, (1 Cr & Cb sample per 1x1 Y samples), little-endian

AV_PIX_FMT_YUV422P9BE, ///< planar YUV 4:2:2, 18bpp, (1 Cr & Cb sample per 2x1 Y samples), big-endian

AV_PIX_FMT_YUV422P9LE, ///< planar YUV 4:2:2, 18bpp, (1 Cr & Cb sample per 2x1 Y samples), little-endian

AV_PIX_FMT_VDA_VLD, ///< hardware decoding through VDA

#ifdef AV_PIX_FMT_ABI_GIT_MASTER

AV_PIX_FMT_RGBA64BE, ///< packed RGBA 16:16:16:16, 64bpp, 16R, 16G, 16B, 16A, the 2-byte value for each R/G/B/A component is stored as big-endian

AV_PIX_FMT_RGBA64LE, ///< packed RGBA 16:16:16:16, 64bpp, 16R, 16G, 16B, 16A, the 2-byte value for each R/G/B/A component is stored as little-endian

AV_PIX_FMT_BGRA64BE, ///< packed RGBA 16:16:16:16, 64bpp, 16B, 16G, 16R, 16A, the 2-byte value for each R/G/B/A component is stored as big-endian

AV_PIX_FMT_BGRA64LE, ///< packed RGBA 16:16:16:16, 64bpp, 16B, 16G, 16R, 16A, the 2-byte value for each R/G/B/A component is stored as little-endian

#endif

AV_PIX_FMT_GBRP, ///< planar GBR 4:4:4 24bpp

AV_PIX_FMT_GBRP9BE, ///< planar GBR 4:4:4 27bpp, big-endian

AV_PIX_FMT_GBRP9LE, ///< planar GBR 4:4:4 27bpp, little-endian

AV_PIX_FMT_GBRP10BE, ///< planar GBR 4:4:4 30bpp, big-endian

AV_PIX_FMT_GBRP10LE, ///< planar GBR 4:4:4 30bpp, little-endian

AV_PIX_FMT_GBRP16BE, ///< planar GBR 4:4:4 48bpp, big-endian

AV_PIX_FMT_GBRP16LE, ///< planar GBR 4:4:4 48bpp, little-endian

/**

* duplicated pixel formats for compatibility with libav.

* FFmpeg supports these formats since May 8 2012 and Jan 28 2012 (commits f9ca1ac7 and 143a5c55)

* Libav added them Oct 12 2012 with incompatible values (commit 6d5600e85)

*/

AV_PIX_FMT_YUVA422P_LIBAV, ///< planar YUV 4:2:2 24bpp, (1 Cr & Cb sample per 2x1 Y & A samples)

AV_PIX_FMT_YUVA444P_LIBAV, ///< planar YUV 4:4:4 32bpp, (1 Cr & Cb sample per 1x1 Y & A samples)

AV_PIX_FMT_YUVA420P9BE, ///< planar YUV 4:2:0 22.5bpp, (1 Cr & Cb sample per 2x2 Y & A samples), big-endian

AV_PIX_FMT_YUVA420P9LE, ///< planar YUV 4:2:0 22.5bpp, (1 Cr & Cb sample per 2x2 Y & A samples), little-endian

AV_PIX_FMT_YUVA422P9BE, ///< planar YUV 4:2:2 27bpp, (1 Cr & Cb sample per 2x1 Y & A samples), big-endian

AV_PIX_FMT_YUVA422P9LE, ///< planar YUV 4:2:2 27bpp, (1 Cr & Cb sample per 2x1 Y & A samples), little-endian

AV_PIX_FMT_YUVA444P9BE, ///< planar YUV 4:4:4 36bpp, (1 Cr & Cb sample per 1x1 Y & A samples), big-endian

AV_PIX_FMT_YUVA444P9LE, ///< planar YUV 4:4:4 36bpp, (1 Cr & Cb sample per 1x1 Y & A samples), little-endian

AV_PIX_FMT_YUVA420P10BE, ///< planar YUV 4:2:0 25bpp, (1 Cr & Cb sample per 2x2 Y & A samples, big-endian)

AV_PIX_FMT_YUVA420P10LE, ///< planar YUV 4:2:0 25bpp, (1 Cr & Cb sample per 2x2 Y & A samples, little-endian)

AV_PIX_FMT_YUVA422P10BE, ///< planar YUV 4:2:2 30bpp, (1 Cr & Cb sample per 2x1 Y & A samples, big-endian)

AV_PIX_FMT_YUVA422P10LE, ///< planar YUV 4:2:2 30bpp, (1 Cr & Cb sample per 2x1 Y & A samples, little-endian)

AV_PIX_FMT_YUVA444P10BE, ///< planar YUV 4:4:4 40bpp, (1 Cr & Cb sample per 1x1 Y & A samples, big-endian)

AV_PIX_FMT_YUVA444P10LE, ///< planar YUV 4:4:4 40bpp, (1 Cr & Cb sample per 1x1 Y & A samples, little-endian)

AV_PIX_FMT_YUVA420P16BE, ///< planar YUV 4:2:0 40bpp, (1 Cr & Cb sample per 2x2 Y & A samples, big-endian)

AV_PIX_FMT_YUVA420P16LE, ///< planar YUV 4:2:0 40bpp, (1 Cr & Cb sample per 2x2 Y & A samples, little-endian)

AV_PIX_FMT_YUVA422P16BE, ///< planar YUV 4:2:2 48bpp, (1 Cr & Cb sample per 2x1 Y & A samples, big-endian)

AV_PIX_FMT_YUVA422P16LE, ///< planar YUV 4:2:2 48bpp, (1 Cr & Cb sample per 2x1 Y & A samples, little-endian)

AV_PIX_FMT_YUVA444P16BE, ///< planar YUV 4:4:4 64bpp, (1 Cr & Cb sample per 1x1 Y & A samples, big-endian)

AV_PIX_FMT_YUVA444P16LE, ///< planar YUV 4:4:4 64bpp, (1 Cr & Cb sample per 1x1 Y & A samples, little-endian)

AV_PIX_FMT_VDPAU, ///< HW acceleration through VDPAU, Picture.data[3] contains a VdpVideoSurface

AV_PIX_FMT_XYZ12LE, ///< packed XYZ 4:4:4, 36 bpp, (msb) 12X, 12Y, 12Z (lsb), the 2-byte value for each X/Y/Z is stored as little-endian, the 4 lower bits are set to 0

AV_PIX_FMT_XYZ12BE, ///< packed XYZ 4:4:4, 36 bpp, (msb) 12X, 12Y, 12Z (lsb), the 2-byte value for each X/Y/Z is stored as big-endian, the 4 lower bits are set to 0

AV_PIX_FMT_NV16, ///< interleaved chroma YUV 4:2:2, 16bpp, (1 Cr & Cb sample per 2x1 Y samples)

AV_PIX_FMT_NV20LE, ///< interleaved chroma YUV 4:2:2, 20bpp, (1 Cr & Cb sample per 2x1 Y samples), little-endian

AV_PIX_FMT_NV20BE, ///< interleaved chroma YUV 4:2:2, 20bpp, (1 Cr & Cb sample per 2x1 Y samples), big-endian

/**

* duplicated pixel formats for compatibility with libav.

* FFmpeg supports these formats since Sat Sep 24 06:01:45 2011 +0200 (commits 9569a3c9f41387a8c7d1ce97d8693520477a66c3)

* also see Fri Nov 25 01:38:21 2011 +0100 92afb431621c79155fcb7171d26f137eb1bee028

* Libav added them Sun Mar 16 23:05:47 2014 +0100 with incompatible values (commit 1481d24c3a0abf81e1d7a514547bd5305232be30)

*/

AV_PIX_FMT_RGBA64BE_LIBAV, ///< packed RGBA 16:16:16:16, 64bpp, 16R, 16G, 16B, 16A, the 2-byte value for each R/G/B/A component is stored as big-endian

AV_PIX_FMT_RGBA64LE_LIBAV, ///< packed RGBA 16:16:16:16, 64bpp, 16R, 16G, 16B, 16A, the 2-byte value for each R/G/B/A component is stored as little-endian

AV_PIX_FMT_BGRA64BE_LIBAV, ///< packed RGBA 16:16:16:16, 64bpp, 16B, 16G, 16R, 16A, the 2-byte value for each R/G/B/A component is stored as big-endian

AV_PIX_FMT_BGRA64LE_LIBAV, ///< packed RGBA 16:16:16:16, 64bpp, 16B, 16G, 16R, 16A, the 2-byte value for each R/G/B/A component is stored as little-endian

AV_PIX_FMT_YVYU422, ///< packed YUV 4:2:2, 16bpp, Y0 Cr Y1 Cb

#ifndef AV_PIX_FMT_ABI_GIT_MASTER

AV_PIX_FMT_RGBA64BE=0x123, ///< packed RGBA 16:16:16:16, 64bpp, 16R, 16G, 16B, 16A, the 2-byte value for each R/G/B/A component is stored as big-endian

AV_PIX_FMT_RGBA64LE, ///< packed RGBA 16:16:16:16, 64bpp, 16R, 16G, 16B, 16A, the 2-byte value for each R/G/B/A component is stored as little-endian

AV_PIX_FMT_BGRA64BE, ///< packed RGBA 16:16:16:16, 64bpp, 16B, 16G, 16R, 16A, the 2-byte value for each R/G/B/A component is stored as big-endian

AV_PIX_FMT_BGRA64LE, ///< packed RGBA 16:16:16:16, 64bpp, 16B, 16G, 16R, 16A, the 2-byte value for each R/G/B/A component is stored as little-endian

#endif

AV_PIX_FMT_0RGB=0x123+4, ///< packed RGB 8:8:8, 32bpp, 0RGB0RGB...

AV_PIX_FMT_RGB0, ///< packed RGB 8:8:8, 32bpp, RGB0RGB0...

AV_PIX_FMT_0BGR, ///< packed BGR 8:8:8, 32bpp, 0BGR0BGR...

AV_PIX_FMT_BGR0, ///< packed BGR 8:8:8, 32bpp, BGR0BGR0...

AV_PIX_FMT_YUVA444P, ///< planar YUV 4:4:4 32bpp, (1 Cr & Cb sample per 1x1 Y & A samples)

AV_PIX_FMT_YUVA422P, ///< planar YUV 4:2:2 24bpp, (1 Cr & Cb sample per 2x1 Y & A samples)

AV_PIX_FMT_YUV420P12BE, ///< planar YUV 4:2:0,18bpp, (1 Cr & Cb sample per 2x2 Y samples), big-endian

AV_PIX_FMT_YUV420P12LE, ///< planar YUV 4:2:0,18bpp, (1 Cr & Cb sample per 2x2 Y samples), little-endian

AV_PIX_FMT_YUV420P14BE, ///< planar YUV 4:2:0,21bpp, (1 Cr & Cb sample per 2x2 Y samples), big-endian

AV_PIX_FMT_YUV420P14LE, ///< planar YUV 4:2:0,21bpp, (1 Cr & Cb sample per 2x2 Y samples), little-endian

AV_PIX_FMT_YUV422P12BE, ///< planar YUV 4:2:2,24bpp, (1 Cr & Cb sample per 2x1 Y samples), big-endian

AV_PIX_FMT_YUV422P12LE, ///< planar YUV 4:2:2,24bpp, (1 Cr & Cb sample per 2x1 Y samples), little-endian

AV_PIX_FMT_YUV422P14BE, ///< planar YUV 4:2:2,28bpp, (1 Cr & Cb sample per 2x1 Y samples), big-endian

AV_PIX_FMT_YUV422P14LE, ///< planar YUV 4:2:2,28bpp, (1 Cr & Cb sample per 2x1 Y samples), little-endian

AV_PIX_FMT_YUV444P12BE, ///< planar YUV 4:4:4,36bpp, (1 Cr & Cb sample per 1x1 Y samples), big-endian

AV_PIX_FMT_YUV444P12LE, ///< planar YUV 4:4:4,36bpp, (1 Cr & Cb sample per 1x1 Y samples), little-endian

AV_PIX_FMT_YUV444P14BE, ///< planar YUV 4:4:4,42bpp, (1 Cr & Cb sample per 1x1 Y samples), big-endian

AV_PIX_FMT_YUV444P14LE, ///< planar YUV 4:4:4,42bpp, (1 Cr & Cb sample per 1x1 Y samples), little-endian

AV_PIX_FMT_GBRP12BE, ///< planar GBR 4:4:4 36bpp, big-endian

AV_PIX_FMT_GBRP12LE, ///< planar GBR 4:4:4 36bpp, little-endian

AV_PIX_FMT_GBRP14BE, ///< planar GBR 4:4:4 42bpp, big-endian

AV_PIX_FMT_GBRP14LE, ///< planar GBR 4:4:4 42bpp, little-endian

AV_PIX_FMT_GBRAP, ///< planar GBRA 4:4:4:4 32bpp

AV_PIX_FMT_GBRAP16BE, ///< planar GBRA 4:4:4:4 64bpp, big-endian

AV_PIX_FMT_GBRAP16LE, ///< planar GBRA 4:4:4:4 64bpp, little-endian

AV_PIX_FMT_YUVJ411P, ///< planar YUV 4:1:1, 12bpp, (1 Cr & Cb sample per 4x1 Y samples) full scale (JPEG), deprecated in favor of PIX_FMT_YUV411P and setting color_range

AV_PIX_FMT_BAYER_BGGR8, ///< bayer, BGBG..(odd line), GRGR..(even line), 8-bit samples */

AV_PIX_FMT_BAYER_RGGB8, ///< bayer, RGRG..(odd line), GBGB..(even line), 8-bit samples */

AV_PIX_FMT_BAYER_GBRG8, ///< bayer, GBGB..(odd line), RGRG..(even line), 8-bit samples */

AV_PIX_FMT_BAYER_GRBG8, ///< bayer, GRGR..(odd line), BGBG..(even line), 8-bit samples */

AV_PIX_FMT_BAYER_BGGR16LE, ///< bayer, BGBG..(odd line), GRGR..(even line), 16-bit samples, little-endian */

AV_PIX_FMT_BAYER_BGGR16BE, ///< bayer, BGBG..(odd line), GRGR..(even line), 16-bit samples, big-endian */

AV_PIX_FMT_BAYER_RGGB16LE, ///< bayer, RGRG..(odd line), GBGB..(even line), 16-bit samples, little-endian */

AV_PIX_FMT_BAYER_RGGB16BE, ///< bayer, RGRG..(odd line), GBGB..(even line), 16-bit samples, big-endian */

AV_PIX_FMT_BAYER_GBRG16LE, ///< bayer, GBGB..(odd line), RGRG..(even line), 16-bit samples, little-endian */

AV_PIX_FMT_BAYER_GBRG16BE, ///< bayer, GBGB..(odd line), RGRG..(even line), 16-bit samples, big-endian */

AV_PIX_FMT_BAYER_GRBG16LE, ///< bayer, GRGR..(odd line), BGBG..(even line), 16-bit samples, little-endian */

AV_PIX_FMT_BAYER_GRBG16BE, ///< bayer, GRGR..(odd line), BGBG..(even line), 16-bit samples, big-endian */

#if !FF_API_XVMC

AV_PIX_FMT_XVMC,///< XVideo Motion Acceleration via common packet passing

#endif /* !FF_API_XVMC */

AV_PIX_FMT_NB, ///< number of pixel formats, DO NOT USE THIS if you want to link with shared libav* because the number of formats might differ between versions

#if FF_API_PIX_FMT

#include "old_pix_fmts.h"

#endif

};

FFmpeg has a dedicated structure, AVPixFmtDescriptor, for describing pixel formats. It is defined in libavutil\pixdesc.h, as shown below.

/**

* Descriptor that unambiguously describes how the bits of a pixel are

* stored in the up to 4 data planes of an image. It also stores the

* subsampling factors and number of components.

*

* @note This is separate of the colorspace (RGB, YCbCr, YPbPr, JPEG-style YUV

* and all the YUV variants) AVPixFmtDescriptor just stores how values

* are stored not what these values represent.

*/

typedef struct AVPixFmtDescriptor{

const char *name;

uint8_t nb_components; ///< The number of components each pixel has, (1-4)

/**

* Amount to shift the luma width right to find the chroma width.

* For YV12 this is 1 for example.

* chroma_width = -((-luma_width) >> log2_chroma_w)

* The note above is needed to ensure rounding up.

* This value only refers to the chroma components.

*/

uint8_t log2_chroma_w; ///< chroma_width = -((-luma_width )>>log2_chroma_w)

/**

* Amount to shift the luma height right to find the chroma height.

* For YV12 this is 1 for example.

* chroma_height= -((-luma_height) >> log2_chroma_h)

* The note above is needed to ensure rounding up.

* This value only refers to the chroma components.

*/

uint8_t log2_chroma_h;

uint8_t flags;

/**

* Parameters that describe how pixels are packed.

* If the format has 2 or 4 components, then alpha is last.

* If the format has 1 or 2 components, then luma is 0.

* If the format has 3 or 4 components,

* if the RGB flag is set then 0 is red, 1 is green and 2 is blue;

* otherwise 0 is luma, 1 is chroma-U and 2 is chroma-V.

*/

AVComponentDescriptor comp[4];

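}AVPixFmtDescriptor;

AVPixFmtDescriptor needs little further explanation. Its definition is fairly simple, and the comments are enough to understand it. av_pix_fmt_desc_get() returns the AVPixFmtDescriptor for a given pixel format.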

/**

* @return a pixel format descriptor for provided pixel format or NULL if

* this pixel format is unknown.

*/

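const AVPixFmtDescriptor *av_pix_fmt_desc_get(enum AVPixelFormat pix_fmt);

An AVPixFmtDescriptor gives access to information about a pixel format. For example, the program later in this article uses av_get_bits_per_pixel(), which returns the number of bits each pixel occupies (bits per pixel) for a given pixel format.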

/**

* Return the number of bits per pixel used by the pixel format

* described by pixdesc. Note that this is not the same as the number

* of bits per sample.

*

* The returned number of bits refers to the number of bits actually

* used for storing the pixel information, that is padding bits are

* not counted.

*/

int av_get_bits_per_pixel(const AVPixFmtDescriptor *pixdesc);

The other APIs are not covered here.
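The arithmetic behind log2_chroma_w, log2_chroma_h and the bits-per-pixel figures in the enum comments can be reproduced without FFmpeg. The sketch below is a simplified model, not FFmpeg's code: the names `chroma_size` and `bits_per_pixel` are hypothetical, every component is assumed to have the same bit depth, and components 1 and 2 are treated as the subsampled chroma components.

```cpp
#include <cassert>

// Chroma dimension for a given luma dimension, rounding up.
// For non-negative values this equals -((-luma) >> log2_shift).
int chroma_size(int luma, int log2_shift) {
    assert(luma >= 0 && log2_shift >= 0);
    return (luma + (1 << log2_shift) - 1) >> log2_shift;
}

// Average number of bits stored per pixel.
// Components 1 and 2 are subsampled by 2^(log2_chroma_w + log2_chroma_h);
// all other components (luma, alpha) are stored at full resolution.
int bits_per_pixel(int nb_components, int depth,
                   int log2_chroma_w, int log2_chroma_h) {
    assert(nb_components >= 1 && nb_components <= 4);
    const int log2_pixels = log2_chroma_w + log2_chroma_h;
    int bits = 0;  // bits used by a block of 2^log2_pixels pixels
    for (int c = 0; c < nb_components; ++c) {
        const bool is_chroma = (c == 1 || c == 2);
        bits += is_chroma ? depth : (depth << log2_pixels);
    }
    return bits >> log2_pixels;
}
```

With these helpers, `bits_per_pixel(3, 8, 1, 1)` gives 12 for YUV420P, `bits_per_pixel(3, 8, 0, 0)` gives 24 for RGB24, and `bits_per_pixel(3, 8, 2, 2)` gives 9 for YUV410P, in line with the bpp values listed in the enum comments. `chroma_size(5, 1)` gives 3, which shows the rounding up for odd dimensions.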

Image scaling

FFmpeg supports several scaling algorithms. They are defined in libswscale\swscale.h, as shown below.

#define SWS_FAST_BILINEAR 1

#define SWS_BILINEAR 2

#define SWS_BICUBIC 4

#define SWS_X 8

#define SWS_POINT 0x10

#define SWS_AREA 0x20

#define SWS_BICUBLIN 0x40

#define SWS_GAUSS 0x80

#define SWS_SINC 0x100

#define SWS_LANCZOS 0x200

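#define SWS_SPLINE 0x400

Of these, SWS_BICUBIC gives good quality, SWS_FAST_BILINEAR offers a fairly good balance between quality and speed, and SWS_POINT gives relatively poor quality.

For an evaluation of these methods, refer to the related article.

The principles of SWS_BICUBIC, SWS_BILINEAR and SWS_POINT are explained briefly below.

SWS_POINT (nearest-neighbor interpolation)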

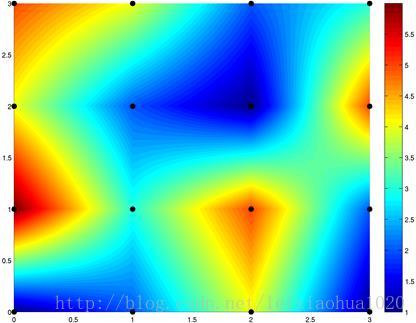

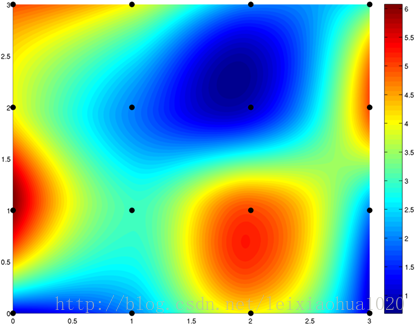

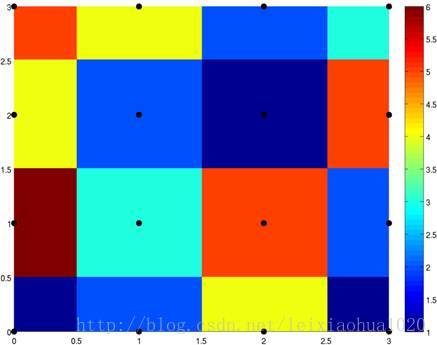

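Nearest-neighbor interpolation can be summed up as "one point determines the interpolated point". For example, when an image is enlarged, each new sample takes the value of the existing sample closest to it; in other words, it simply copies the nearest sample. It is the most basic interpolation method: it is the fastest, gives the poorest results, and is generally not recommended. Images scaled with it usually show many jagged edges. The figure below shows the result of applying nearest-neighbor interpolation to 4x4=16 colored sample points.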
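The "copy the nearest sample" idea can be sketched in a few lines. This is an illustration only and not how libswscale implements SWS_POINT internally; the function name `scale_nearest` is hypothetical, and the image is assumed to be a single 8-bit plane stored row by row without padding.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Scale a single-channel 8-bit image with nearest-neighbor interpolation.
std::vector<std::uint8_t> scale_nearest(const std::vector<std::uint8_t>& src,
                                        int src_w, int src_h,
                                        int dst_w, int dst_h) {
    assert(src_w > 0 && src_h > 0 && dst_w > 0 && dst_h > 0);
    assert(src.size() == static_cast<std::size_t>(src_w) * src_h);
    std::vector<std::uint8_t> dst(static_cast<std::size_t>(dst_w) * dst_h);
    for (int y = 0; y < dst_h; ++y) {
        // Map the centre of the destination pixel back into the source grid.
        const int sy = (2 * y + 1) * src_h / (2 * dst_h);
        for (int x = 0; x < dst_w; ++x) {
            const int sx = (2 * x + 1) * src_w / (2 * dst_w);
            // Copy the value of the closest source sample unchanged.
            dst[static_cast<std::size_t>(y) * dst_w + x] =
                src[static_cast<std::size_t>(sy) * src_w + sx];
        }
    }
    return dst;
}
```

Scaling the 2x2 image {1, 2, 3, 4} to 4x4 turns every source sample into a 2x2 block of identical values. That blockiness is the source of the jagged edges described above.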

SWS_BILINEAR (bilinear interpolation)

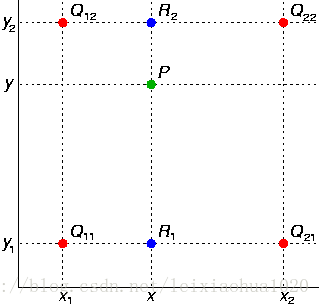

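Bilinear interpolation can be summed up as "four points determine the interpolated point". The figure below illustrates the computation. The green point P is the point to be interpolated. First, R1 is computed from Q11 and Q21, and R2 from Q12 and Q22. Then P is computed from R1 and R2.

Each of these steps is a simple weighted average.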

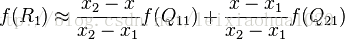

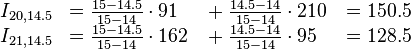

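Let Q11 = (x1, y1), Q12 = (x1, y2), Q21 = (x2, y1) and Q22 = (x2, y2). The formulas for each point are given below.

As the formulas show, samples closer to the interpolated point receive larger weights, and samples farther away receive smaller weights.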

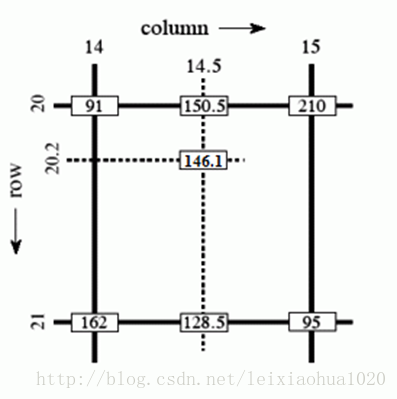

The following is a bilinear interpolation example from Wikipedia. It computes the value at (20.2, 14.5) from the four samples at (20, 14), (20, 15), (21, 14) and (21, 15).
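The two-step weighting (first along x to get R1 and R2, then along y to get P) can be written directly as code. The sketch below uses the Q11/Q12/Q21/Q22 naming from this section; the function name `bilinear` is hypothetical, and the sample intensities in the usage note (91, 210, 162, 95) are illustrative values that this article does not state.

```cpp
#include <cassert>

// Bilinear interpolation at (x, y) inside the rectangle [x1, x2] x [y1, y2].
// q11 = value at (x1, y1), q12 = value at (x1, y2),
// q21 = value at (x2, y1), q22 = value at (x2, y2).
double bilinear(double q11, double q12, double q21, double q22,
                double x1, double x2, double y1, double y2,
                double x, double y) {
    assert(x2 > x1 && y2 > y1);
    const double tx = (x - x1) / (x2 - x1);  // 0 at x1, 1 at x2
    const double ty = (y - y1) / (y2 - y1);  // 0 at y1, 1 at y2
    // Step 1: interpolate along x on both horizontal edges.
    const double r1 = (1.0 - tx) * q11 + tx * q21;  // on y = y1
    const double r2 = (1.0 - tx) * q12 + tx * q22;  // on y = y2
    // Step 2: interpolate along y between R1 and R2.
    return (1.0 - ty) * r1 + ty * r2;
}
```

Assuming the samples at (20, 14), (20, 15), (21, 14) and (21, 15) have the values 91, 210, 162 and 95, the call `bilinear(91, 210, 162, 95, 20, 21, 14, 15, 20.2, 14.5)` yields approximately 146.1. The point lies closer to x = 20 than to x = 21, so the two samples at x = 20 carry a weight of 0.8 in the first step.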

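SWS_BICUBIC (bicubic interpolation)

Bicubic interpolation can be summed up as "16 points determine the interpolated point". The algorithm is considerably more complex than the previous two, and it produces the best image quality of the three. Its details are involved and are not covered here. The interpolation can be expressed by the following formula.

The computation of the coefficients aij depends on the properties of the data being interpolated.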
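For reference, the standard textbook form of the bicubic interpolation surface over one grid cell is shown below. It is an assumption that this is the formula the text refers to; the symbol names follow the aij notation used above.

```latex
p(x, y) = \sum_{i=0}^{3} \sum_{j=0}^{3} a_{ij}\, x^{i} y^{j}
```

The double sum has 4 x 4 = 16 coefficients, which is why 16 surrounding sample points are needed to determine one interpolated value.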

Wikipedia compares nearest-neighbor, bilinear and bicubic interpolation applied to the same sample points, as shown in the figure below.

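YUV value range

In FFmpeg, the value ranges of the input and output YUV data can be set with av_opt_set() through the "src_range" and "dst_range" options. Setting "dst_range" to "1" makes the output YUV follow the "jpeg" range; setting it to "0" makes the output follow the "mpeg" range. The concept of the YUV value range is described below.

Unlike RGB, where every component of every pixel spans 0-255 (8 bits per component), YUV has two value ranges:

(1) In broadcast television standards, represented by Rec.601 (and also BT.709 / BT.2020), Y spans 16-235 and U and V span 16-240. FFmpeg calls this the "mpeg" range.

(2) In standards represented by JPEG, Y, U and V all span 0-255. FFmpeg calls this the "jpeg" range.

In practice the first range is the most common (inspecting real YUV data shows that the luma component does not take values such as 0 or 255). Many people wonder why the values at both ends are removed.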

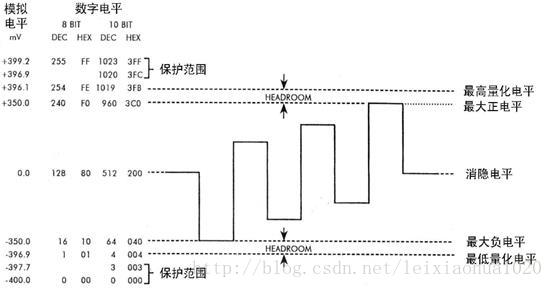

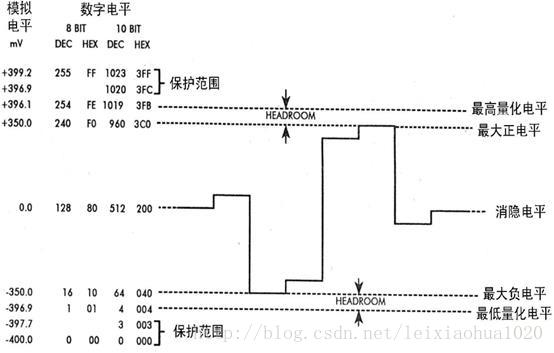

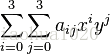

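Broadcast television systems avoid transmitting very low and very high values in order to prevent overload caused by signal fluctuations, so the values at both ends are reserved as guard bands. The figure below shows the level allocation of the quantized luma signal in digital television. For 8-bit quantization, the white level is 235, corresponding to an analog level of 700 mV, and the black level is 16, corresponding to 0 mV. The guard band above the signal covers 236 to 254, and the guard band below it covers 1 to 15. The outermost levels 0 and 255 are reserved and must not appear in the data stream. Similarly, for 10-bit quantization the white level is 235*4=940 and the black level is 16*4=64.

The next two figures show the level allocation of the quantized chroma signals in digital television. The maximum positive chroma level is 240, corresponding to +350 mV; the maximum negative chroma level is 16, corresponding to -350 mV. Note that digital chroma level 128 corresponds to an analog level of 0 mV.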
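The relationship between the two ranges is a linear stretch: luma 16-235 maps onto 0-255, and chroma 16-240 maps onto 0-255 with 128 staying fixed. The sketch below shows that mapping for 8-bit samples. It is a floating-point illustration under those assumptions and not libswscale's internal code; the function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Round to the nearest integer and clamp into the 8-bit range.
static std::uint8_t round_and_clamp(double v) {
    const long r = std::lround(v);
    return static_cast<std::uint8_t>(r < 0 ? 0 : (r > 255 ? 255 : r));
}

// Luma: "mpeg" range 16-235 (219 steps) -> "jpeg" range 0-255.
std::uint8_t luma_mpeg_to_jpeg(int y) {
    assert(y >= 0 && y <= 255);
    return round_and_clamp((y - 16) * 255.0 / 219.0);
}

// Chroma: "mpeg" range 16-240 (224 steps, centred on 128) -> 0-255.
std::uint8_t chroma_mpeg_to_jpeg(int c) {
    assert(c >= 0 && c <= 255);
    return round_and_clamp((c - 128) * 255.0 / 224.0 + 128.0);
}
```

Black (16) maps to 0, white (235) maps to 255, and the neutral chroma level 128 is unchanged. Values that fall inside the guard bands, such as a luma of 5, are clamped to the nearest end of the output range.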

Color gamut

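Libswscale supports color gamut conversion. I have not yet studied gamut conversion in depth, so this section only records the gamuts of the three most common standards: BT.601, BT.709 and BT.2020. The gamut grows from one standard to the next.

First, a brief explanation of the CIE 1931 color space. The region it encloses looks like a "tongue" and contains all the colors found in nature. In the CIE 1931 chromaticity diagram the horizontal axis is x and the vertical axis is y, and x, y and z satisfy:

x + y + z = 1

The edge of the "tongue" is called the spectral locus and represents spectral colors with 100% saturation. The center of the "tongue", (1/3, 1/3), corresponds to white, with a saturation of 0.

Limited by the capabilities of display devices, a television screen cannot reproduce every color. In particular, the 100%-saturation spectral colors on the spectral locus generally cannot be displayed. A screen can therefore only show a subset of colors, determined by its particular phosphor formulation, and this displayable subset is called the color gamut. The following compares the gamuts specified in the standard-definition, high-definition and ultra-high-definition television standards. As technology advances, the gamut becomes larger and larger.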

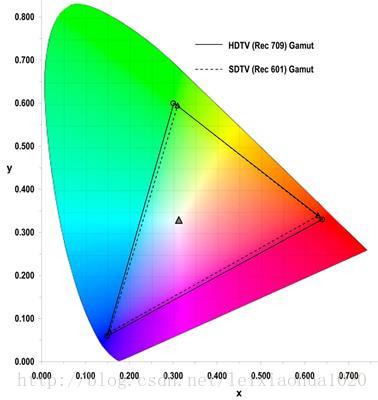

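The gamut for standard-definition television (SDTV) comes from BT.601, and the gamut for high-definition television (HDTV) comes from BT.709. The figure below compares the two in the CIE 1931 color space. The BT.709 and BT.601 gamuts differ only slightly, with BT.709 being marginally larger than BT.601.

The gamut for ultra-high-definition television (UHDTV) comes from BT.2020. The figure below compares the BT.2020 and BT.709 gamuts in the CIE 1931 color space. The BT.2020 gamut is far larger than that of BT.709.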
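On the CIE 1931 xy diagram each gamut is a triangle whose corners are the red, green and blue primaries, so the size comparison can be checked numerically. The sketch below is an illustration only. The primary coordinates are the ones published in ITU-R BT.709 and BT.2020 rather than values given in this article, and the names `gamut_area` and `gamut_contains` are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct Chromaticity { double x, y; };
struct Gamut { Chromaticity r, g, b; };

// CIE 1931 xy primaries (taken from the ITU-R recommendations).
const Gamut kBt709  = {{0.640, 0.330}, {0.300, 0.600}, {0.150, 0.060}};
const Gamut kBt2020 = {{0.708, 0.292}, {0.170, 0.797}, {0.131, 0.046}};

// 2D cross product of (b - a) and (p - a).
static double cross(Chromaticity a, Chromaticity b, Chromaticity p) {
    return (b.x - a.x) * (p.y - a.y) - (b.y - a.y) * (p.x - a.x);
}

// Area of the gamut triangle on the xy chromaticity diagram.
double gamut_area(const Gamut& g) {
    const double twice_area = std::fabs(cross(g.r, g.g, g.b));
    assert(twice_area > 0.0);  // the three primaries must not be collinear
    return twice_area / 2.0;
}

// True if chromaticity p lies inside (or on the edge of) the gamut triangle.
bool gamut_contains(const Gamut& g, Chromaticity p) {
    const double d1 = cross(g.r, g.g, p);
    const double d2 = cross(g.g, g.b, p);
    const double d3 = cross(g.b, g.r, p);
    const bool has_neg = d1 < 0 || d2 < 0 || d3 < 0;
    const bool has_pos = d1 > 0 || d2 > 0 || d3 > 0;
    return !(has_neg && has_pos);  // all signs agree -> inside
}
```

With these primaries the BT.709 triangle has an area of about 0.112 and the BT.2020 triangle about 0.212, so BT.2020 covers nearly twice the area on this diagram. All three BT.709 primaries fall inside the BT.2020 triangle, while the BT.2020 green primary falls outside the BT.709 triangle.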

The comparison above also shows that UHDTV places higher demands on the display device. In return, UHDTV can reproduce a more "realistic" world.

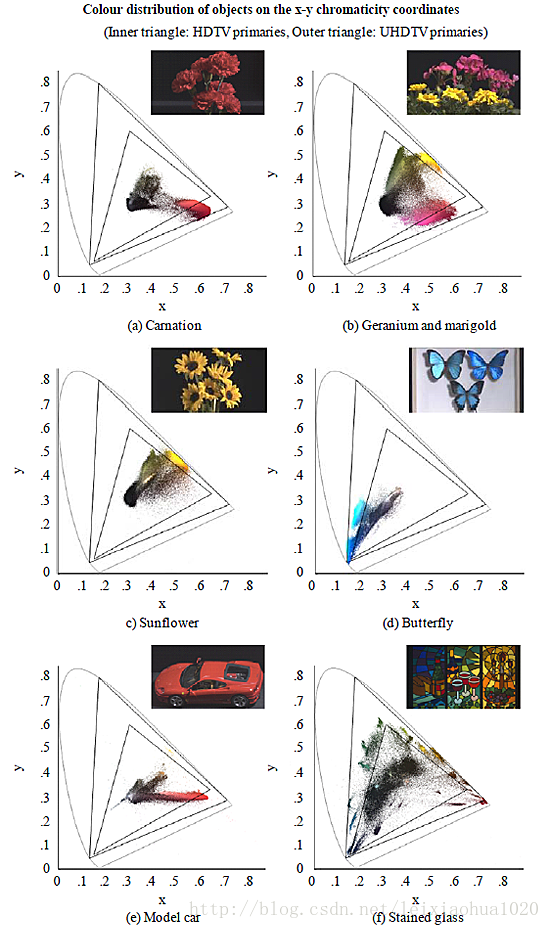

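The figure below uses a real example to show why a large gamut matters. The two black triangles mark the gamuts of BT.709 (small triangle) and BT.2020 (large triangle). If the picture is shown on a display with the smaller gamut, many colors are lost.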

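Source code

The example program has one input and one output. It converts the input image format (YUV420P) to the output image format (RGB24) and at the same time scales the input video from 480x272 to 1280x720.

/**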

* Simplest FFmpeg Swscale

*

* 雷霄驊 Lei Xiaohua

* [email protected]

* Communication University of China / Digital TV Technology

* http://blog.csdn.net/leixiaohua1020

*

* This software uses libswscale to scale / convert pixels.

* It converts YUV420P format to RGB24 format,

* and changes resolution from 480x272 to 1280x720.

* It's the simplest tutorial about libswscale.

*/

#define __STDC_CONSTANT_MACROS

#include <stdio.h>

#include <stdlib.h> //malloc, free

#include <string.h> //memcpy

#ifdef _WIN32

//Windows

extern "C"

{

#include "libswscale/swscale.h"

#include "libavutil/opt.h"

#include "libavutil/imgutils.h"

};

#else

//Linux...

#ifdef __cplusplus

extern "C"

{

#endif

#include <libswscale/swscale.h>

#include <libavutil/opt.h>

#include <libavutil/imgutils.h>

#ifdef __cplusplus

};

#endif

#endif

int main(int argc, char* argv[])

{

//Parameters

FILE *src_file =fopen("sintel_480x272_yuv420p.yuv", "rb");

const int src_w=480,src_h=272;

AVPixelFormat src_pixfmt=AV_PIX_FMT_YUV420P;

int src_bpp=av_get_bits_per_pixel(av_pix_fmt_desc_get(src_pixfmt));

FILE *dst_file = fopen("sintel_1280x720_rgb24.rgb", "wb");

const int dst_w=1280,dst_h=720;

AVPixelFormat dst_pixfmt=AV_PIX_FMT_RGB24;

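int dst_bpp=av_get_bits_per_pixel(av_pix_fmt_desc_get(dst_pixfmt));

//Both files are used unconditionally below, so stop early if either failed to open

if (src_file==NULL || dst_file==NULL) {

printf( "Could not open input or output file\n");

return -1;

}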

//Structures

uint8_t *src_data[4];

int src_linesize[4];

uint8_t *dst_data[4];

int dst_linesize[4];

int rescale_method=SWS_BICUBIC;

struct SwsContext *img_convert_ctx;

uint8_t *temp_buffer=(uint8_t *)malloc(src_w*src_h*src_bpp/8);

int frame_idx=0;

int ret=0;

ret= av_image_alloc(src_data, src_linesize,src_w, src_h, src_pixfmt, 1);

if (ret< 0) {

printf( "Could not allocate source image\n");

return -1;

}

ret = av_image_alloc(dst_data, dst_linesize,dst_w, dst_h, dst_pixfmt, 1);

if (ret< 0) {

printf( "Could not allocate destination image\n");

return -1;

}

//-----------------------------

//Init Method 1 (flexible): sws_alloc_context() + av_opt_set_XXX() + sws_init_context()

img_convert_ctx =sws_alloc_context();

//Show AVOption

av_opt_show2(img_convert_ctx,stdout,AV_OPT_FLAG_VIDEO_PARAM,0);

//Set Value

av_opt_set_int(img_convert_ctx,"sws_flags",SWS_BICUBIC|SWS_PRINT_INFO,0);

av_opt_set_int(img_convert_ctx,"srcw",src_w,0);

av_opt_set_int(img_convert_ctx,"srch",src_h,0);

av_opt_set_int(img_convert_ctx,"src_format",src_pixfmt,0);

//'0' for MPEG (Y:16-235);'1' for JPEG (Y:0-255)

av_opt_set_int(img_convert_ctx,"src_range",1,0);

av_opt_set_int(img_convert_ctx,"dstw",dst_w,0);

av_opt_set_int(img_convert_ctx,"dsth",dst_h,0);

av_opt_set_int(img_convert_ctx,"dst_format",dst_pixfmt,0);

av_opt_set_int(img_convert_ctx,"dst_range",1,0);

if (sws_init_context(img_convert_ctx,NULL,NULL)<0) {

printf( "Could not initialize SwsContext\n");

return -1;

}

//Init Method 2 (simple): sws_getContext()

//img_convert_ctx = sws_getContext(src_w, src_h,src_pixfmt, dst_w, dst_h, dst_pixfmt,

// rescale_method, NULL, NULL, NULL);

//-----------------------------

/*

//Colorspace

ret=sws_setColorspaceDetails(img_convert_ctx,sws_getCoefficients(SWS_CS_ITU601),0,

sws_getCoefficients(SWS_CS_ITU709),0,

0, 1 << 16, 1 << 16);

if (ret==-1) {

printf( "Colorspace not support.\n");

return -1;

}

*/

while(1)

{

if (fread(temp_buffer, 1, src_w*src_h*src_bpp/8, src_file) != src_w*src_h*src_bpp/8){

break;

}

switch(src_pixfmt){

case AV_PIX_FMT_GRAY8:{

memcpy(src_data[0],temp_buffer,src_w*src_h);

break;

}

case AV_PIX_FMT_YUV420P:{

memcpy(src_data[0],temp_buffer,src_w*src_h); //Y

memcpy(src_data[1],temp_buffer+src_w*src_h,src_w*src_h/4); //U

memcpy(src_data[2],temp_buffer+src_w*src_h*5/4,src_w*src_h/4); //V

break;

}

case AV_PIX_FMT_YUV422P:{

memcpy(src_data[0],temp_buffer,src_w*src_h); //Y

memcpy(src_data[1],temp_buffer+src_w*src_h,src_w*src_h/2); //U

memcpy(src_data[2],temp_buffer+src_w*src_h*3/2,src_w*src_h/2); //V

break;

}

case AV_PIX_FMT_YUV444P:{

memcpy(src_data[0],temp_buffer,src_w*src_h); //Y

memcpy(src_data[1],temp_buffer+src_w*src_h,src_w*src_h); //U

memcpy(src_data[2],temp_buffer+src_w*src_h*2,src_w*src_h); //V

break;

}

case AV_PIX_FMT_YUYV422:{

memcpy(src_data[0],temp_buffer,src_w*src_h*2); //Packed

break;

}

case AV_PIX_FMT_RGB24:{

memcpy(src_data[0],temp_buffer,src_w*src_h*3); //Packed

break;

}

default:{

printf("Not Support Input Pixel Format.\n");

break;

}

}

sws_scale(img_convert_ctx, src_data, src_linesize, 0, src_h, dst_data, dst_linesize);

printf("Finish process frame %5d\n",frame_idx);

frame_idx++;

switch(dst_pixfmt){

case AV_PIX_FMT_GRAY8:{

fwrite(dst_data[0],1,dst_w*dst_h,dst_file);

break;

}

case AV_PIX_FMT_YUV420P:{

fwrite(dst_data[0],1,dst_w*dst_h,dst_file); //Y

fwrite(dst_data[1],1,dst_w*dst_h/4,dst_file); //U

fwrite(dst_data[2],1,dst_w*dst_h/4,dst_file); //V

break;

}

case AV_PIX_FMT_YUV422P:{

fwrite(dst_data[0],1,dst_w*dst_h,dst_file); //Y

fwrite(dst_data[1],1,dst_w*dst_h/2,dst_file); //U

fwrite(dst_data[2],1,dst_w*dst_h/2,dst_file); //V

break;

}

case AV_PIX_FMT_YUV444P:{

fwrite(dst_data[0],1,dst_w*dst_h,dst_file); //Y

fwrite(dst_data[1],1,dst_w*dst_h,dst_file); //U

fwrite(dst_data[2],1,dst_w*dst_h,dst_file); //V

break;

}

case AV_PIX_FMT_YUYV422:{

fwrite(dst_data[0],1,dst_w*dst_h*2,dst_file); //Packed

break;

}

case AV_PIX_FMT_RGB24:{

fwrite(dst_data[0],1,dst_w*dst_h*3,dst_file); //Packed

break;

}

default:{

printf("Not Support Output Pixel Format.\n");

break;

}

}

}

sws_freeContext(img_convert_ctx);

free(temp_buffer);

fclose(src_file);

fclose(dst_file);

av_freep(&src_data[0]);

av_freep(&dst_data[0]);

return 0;

}
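
The memcpy() offsets in the read loop above follow directly from the plane sizes of each planar format. A minimal sketch of that arithmetic in plain C, assuming a tightly packed frame with no row padding; `PlaneLayout` and `planar_yuv_layout` are hypothetical names used only for illustration and are not part of FFmpeg:

```c
#include <assert.h>
#include <stddef.h>

/* Offsets and sizes (in bytes) of the Y, U and V planes inside one
 * tightly packed raw frame. Hypothetical helper type, not an FFmpeg type. */
typedef struct PlaneLayout {
    size_t offset[3];
    size_t size[3];
    size_t total;
} PlaneLayout;

/* shift_w / shift_h are the log2 chroma subsampling factors:
 * (1,1) for YUV420P, (1,0) for YUV422P, (0,0) for YUV444P. */
static PlaneLayout planar_yuv_layout(size_t w, size_t h, int shift_w, int shift_h)
{
    PlaneLayout p;
    size_t chroma_w, chroma_h;

    assert(shift_w >= 0 && shift_h >= 0);

    /* Round up so that odd dimensions still get a chroma sample per block. */
    chroma_w = (w + ((size_t)1 << shift_w) - 1) >> shift_w;
    chroma_h = (h + ((size_t)1 << shift_h) - 1) >> shift_h;

    p.size[0] = w * h;                 /* Y plane */
    p.size[1] = chroma_w * chroma_h;   /* U plane */
    p.size[2] = chroma_w * chroma_h;   /* V plane */

    p.offset[0] = 0;
    p.offset[1] = p.size[0];
    p.offset[2] = p.size[0] + p.size[1];
    p.total     = p.offset[2] + p.size[2];
    return p;
}
```

For a 480x272 YUV420P frame this gives a U plane starting at byte 130560 and a V plane starting at byte 163200, which matches the `src_w*src_h` and `src_w*src_h*5/4` offsets in the listing. The listing divides by 4 or 2 directly, which is equivalent only when the width and height are even; the rounding here is an added assumption for odd sizes.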

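Results

The program takes a video named "sintel_480x272_yuv420p.yuv" as input. Its pixel format is YUV420P and its resolution is 480x272.

The program outputs a video named "sintel_1280x720_rgb24.rgb". Its pixel format is RGB24 and its resolution is 1280x720.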
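
Because both files are headerless raw video, their sizes can be checked against the `src_w*src_h*src_bpp/8` expression used in the read loop. A small sketch of that check in plain C, assuming 12 bits per pixel for YUV420P and 24 for RGB24; `raw_frame_bytes` and `raw_frame_count` are hypothetical helper names, not FFmpeg functions:

```c
#include <assert.h>
#include <stddef.h>

/* Bytes in one tightly packed raw frame: width * height * bits_per_pixel / 8. */
static size_t raw_frame_bytes(size_t width, size_t height, size_t bits_per_pixel)
{
    assert(bits_per_pixel > 0);
    return width * height * bits_per_pixel / 8;
}

/* Number of complete frames in a raw file of file_bytes bytes.
 * A trailing partial frame is ignored, as in the fread() check of the listing. */
static size_t raw_frame_count(size_t file_bytes, size_t frame_bytes)
{
    assert(frame_bytes > 0);
    return file_bytes / frame_bytes;
}
```

With these numbers one 480x272 YUV420P input frame occupies 195840 bytes and one 1280x720 RGB24 output frame occupies 2764800 bytes, so the output file should be roughly 14 times larger than the input for the same number of frames.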

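Download

Simplest FFmpeg Swscale

Project home page

This is the simplest tutorial on pixel processing with FFmpeg's libswscale. It contains two projects:

simplest_ffmpeg_swscale: the simplest libswscale tutorial.

simplest_pic_gen: a tool that generates various test images.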

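Update 1.1 (2015.2.13) =========================================

This update adjusts the source code for cross-platform use. After the change, the source compiles with the following toolchains:

VC++: open the .sln file and build; no configuration is needed.

cl.exe: run compile_cl.bat to build from the command line with cl.exe. You may need to adjust the paths in the script to match your VC installation. The build commands are as follows.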

::VS2010 Environment

call "D:\Program Files\Microsoft Visual Studio 10.0\VC\vcvarsall.bat"

::include

@set INCLUDE=include;%INCLUDE%

::lib

@set LIB=lib;%LIB%

::compile and link

cl simplest_ffmpeg_swscale.cpp /link swscale.lib avutil.lib /OPT:NOREF

MinGW: run compile_mingw.sh in the MinGW shell to build with MinGW's g++. The build command is as follows.

g++ simplest_ffmpeg_swscale.cpp -g -o simplest_ffmpeg_swscale.exe \

-I /usr/local/include -L /usr/local/lib -lswscale -lavutil

GCC: run compile_gcc.sh on the Linux or macOS command line to build with GCC. The build command is as follows.

gcc simplest_ffmpeg_swscale.cpp -g -o simplest_ffmpeg_swscale.out -I /usr/local/include -L /usr/local/lib \

-lswscale -lavutil

PS: the build commands above are also saved in the project folder.

The SourceForge project has been updated.
