OpenAI Gym構建自定義強化學習環境

阿新 • • 發佈:2019-02-19

OpenAI Gym是開發和比較強化學習演算法的工具包。

OpenAI Gym由兩部分組成:

- gym開源庫:測試問題的集合。當你測試強化學習的時候,測試問題就是環境,比如機器人玩遊戲,環境的集合就是遊戲的畫面。這些環境有一個公共的介面,允許使用者設計通用的演算法。

- OpenAI Gym服務。提供一個站點(比如對於遊戲cartpole-v0:https://gym.openai.com/envs/CartPole-v0)和api,允許使用者對他們的測試結果進行比較。

gym的核心介面是Env,作為統一的環境介面。Env包含下面幾個核心方法:

- reset(self):重置環境的狀態,返回觀察。

- step(self, action):推進一個時間步長,返回observation,reward,done,info

- render(self, mode=’human’, close=False):重繪環境的一幀。預設模式一般比較友好,如彈出一個視窗。

自定義環境

背景

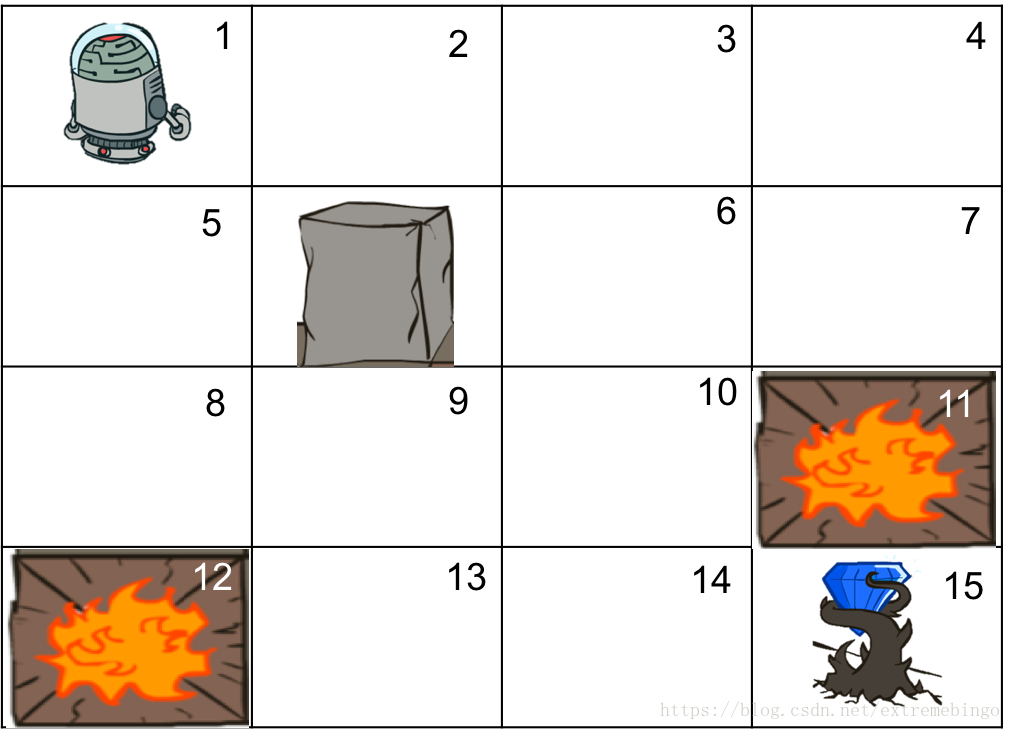

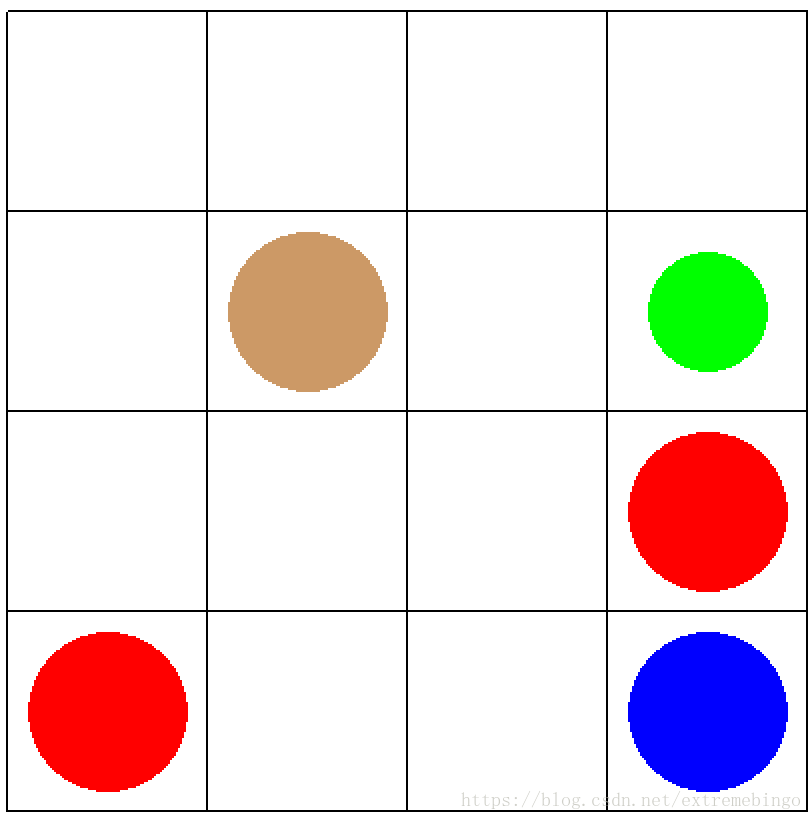

機器人在一個二維迷宮中走動,迷宮中有火坑、石柱、鑽石。如果機器人掉到火坑中,遊戲結束,如果找到鑽石,可以得到獎勵,遊戲也結束!設計最佳的策略,讓機器人儘快地找到鑽石,獲得獎勵。

操作環境

Python環境:anaconda5.2

pip安裝gym

步驟

在 anaconda3/lib/python3.6/site-packages/gym/envs 下新建目錄 user ,用於存放自定義的強化學習環境。

在 user

grid_mdp_v1.py

import logging

import random

import gym

logger = logging.getLogger(__name__)

class GridEnv1(gym.Env):

metadata = {

'render.modes': ['human', 'rgb_array'],

'video.frames_per_second': 2

}

def __init__(self):

self.states = range(1,17) #狀態空間 在 user 目錄下新建 __init__.py

from gym.envs.user.grid_mdp_v1 import GridEnv1在 anaconda3/lib/python3.6/site-packages/gym/envs/__init__.py 中進行註冊,在最後加入

register(

id='GridWorld-v1',

entry_point='gym.envs.user:GridEnv1',

max_episode_steps=200,

reward_threshold=100.0,

)測試

import gym

env = gym.make('GridWorld-v1')

env.reset()

env.render()

env.close()