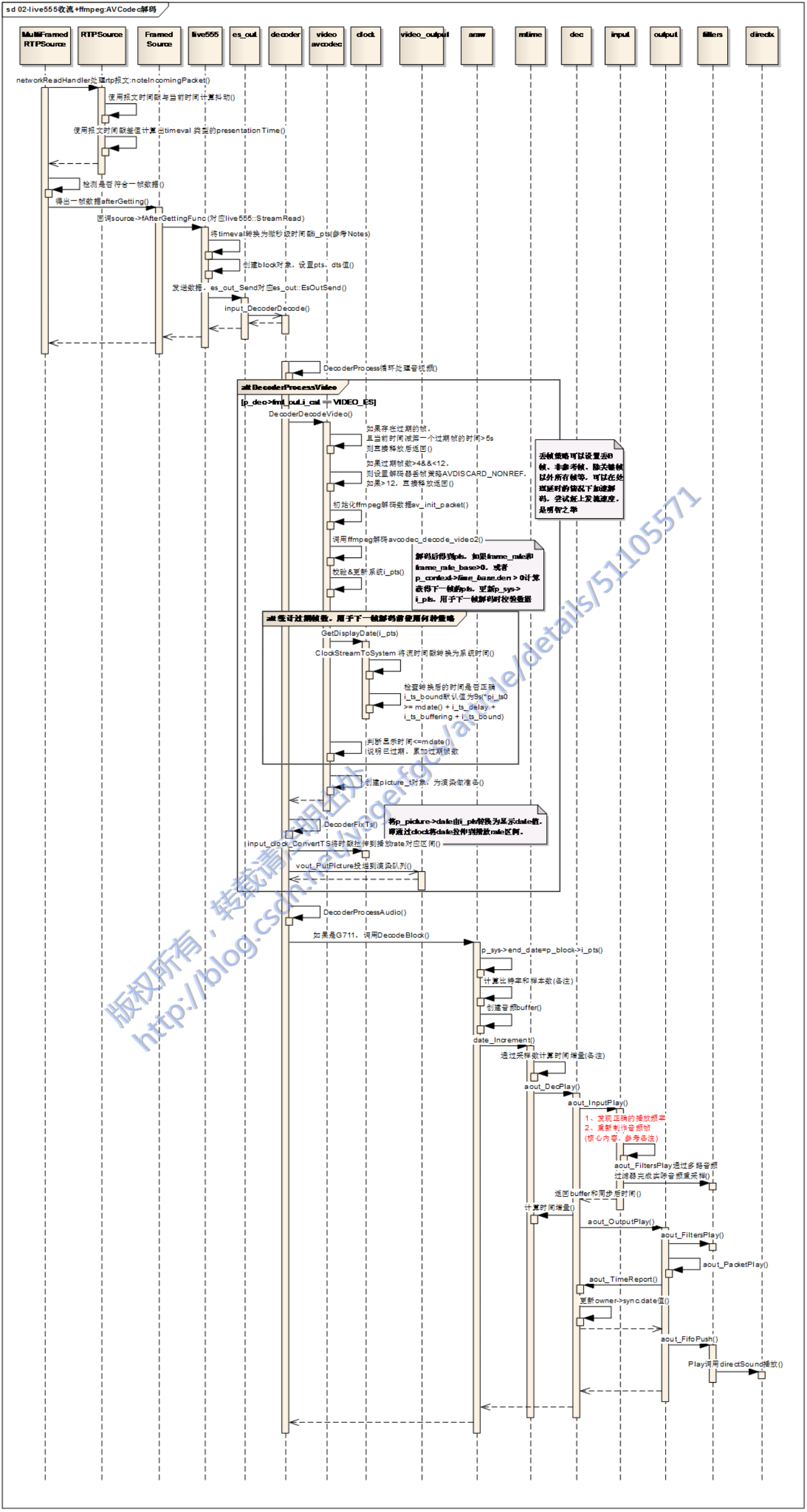

[VLC Core, Part 1] Playback flow walkthrough: receiving the stream with live555 and decoding with ffmpeg AVCodec

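I. Preface

This post walks through the core flow VLC follows when playing audio and video, from receiving the stream with live555 through to decoding with ffmpeg.

The core classes and modules involved include MultiFramedRTPSource, RTPSource, FramedSource, live555, es_out, decoder, video, clock, video_output, araw, mtime, dec, input, output, filters and directx.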

II. Notes on the key points

1. Core code in RTPSource that computes jitter from the packet timestamp and the current time

    unsigned arrival = (timestampFrequency*timeNow.tv_sec); // 8000 for audio, 90000 for video
    arrival += (unsigned)
      ((2.0*timestampFrequency*timeNow.tv_usec + 1000000.0)/2000000);
          // note: rounding
    int transit = arrival - rtpTimestamp;
    if (fLastTransit == (~0)) fLastTransit = transit; // hack for first time
    int d = transit - fLastTransit;
    fLastTransit = transit;
    if (d < 0) d = -d;
    fJitter += (1.0/16.0) * ((double)d - fJitter);
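
The update above is the interarrival jitter estimator from RFC 3550: a running average with gain 1/16 of the absolute change in transit time. As a minimal standalone sketch, the same arithmetic can be written in plain C. The names `jitter_state`, `wallclock_to_ticks` and `jitter_update` are hypothetical and are not part of live555; the sketch also uses an explicit "first packet" flag instead of the `~0` sentinel.

```c
#include <stdint.h>

/* Hypothetical state holder; live555 keeps the equivalent values as class members. */
typedef struct {
    int      have_last;    /* 0 until the first packet has been seen */
    uint32_t last_transit; /* transit time of the previous packet, in RTP ticks */
    double   jitter;       /* running jitter estimate, in RTP ticks */
} jitter_state;

/* Convert a wall-clock time to RTP ticks, rounding to the nearest tick.
 * freq is the RTP clock rate (for example 8000 for audio, 90000 for video). */
static uint32_t wallclock_to_ticks(uint32_t freq, uint32_t sec, uint32_t usec)
{
    uint32_t ticks = freq * sec; /* wraps modulo 2^32, like the RTP timestamp */
    ticks += (uint32_t)((2.0 * freq * usec + 1000000.0) / 2000000.0);
    return ticks;
}

/* Feed one packet into the estimator and return the updated jitter. */
static double jitter_update(jitter_state *s, uint32_t arrival_ticks,
                            uint32_t rtp_timestamp)
{
    uint32_t transit = arrival_ticks - rtp_timestamp;
    if (!s->have_last) {
        /* First packet: there is no previous transit to compare with. */
        s->last_transit = transit;
        s->have_last = 1;
    }
    /* The signed view of the wrapped difference is the change in transit time. */
    int32_t d = (int32_t)(transit - s->last_transit);
    s->last_transit = transit;
    double abs_d = d < 0 ? -(double)d : (double)d;
    s->jitter += (1.0 / 16.0) * (abs_d - s->jitter);
    return s->jitter;
}
```

For a 90000 Hz video clock, a packet that arrives 10 ms later than its timestamp spacing implies changes the transit time by 900 ticks, so the first such packet moves the estimate from 0 to 900/16 = 56.25 ticks.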

2. Core code in RTPSource that derives a presentationTime of type timeval from the difference between packet timestamps

    // Return the 'presentation time' that corresponds to "rtpTimestamp":
    if (fSyncTime.tv_sec == 0 && fSyncTime.tv_usec == 0) {
      // This is the first timestamp that we've seen, so use the current
      // 'wall clock' time as the synchronization time.  (This will be
      // corrected later when we receive RTCP SRs.)
      fSyncTimestamp = rtpTimestamp;
      fSyncTime = timeNow;
    }

    int timestampDiff = rtpTimestamp - fSyncTimestamp;
        // Note: This works even if the timestamp wraps around
        // (as long as "int" is 32 bits)

    // Divide this by the timestamp frequency to get real time:
    // (for video, timeDiff = 0.040000000000000001)
    double timeDiff = timestampDiff/(double)timestampFrequency;

    // Add this to the 'sync time' to get our result:
    unsigned const million = 1000000;
    unsigned seconds, uSeconds;
    if (timeDiff >= 0.0) {
      seconds = fSyncTime.tv_sec + (unsigned)(timeDiff);
      uSeconds = fSyncTime.tv_usec
        + (unsigned)((timeDiff - (unsigned)timeDiff)*million);
      if (uSeconds >= million) {
        uSeconds -= million;
        ++seconds;
      }
    } else {
      timeDiff = -timeDiff;
      seconds = fSyncTime.tv_sec - (unsigned)(timeDiff);
      uSeconds = fSyncTime.tv_usec
        - (unsigned)((timeDiff - (unsigned)timeDiff)*million);
      if ((int)uSeconds < 0) {
        uSeconds += million;
        --seconds;
      }
    }
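
To see why the signed 32-bit difference survives timestamp wraparound, here is a small sketch of the same mapping. It is not the live555 code: it swaps the `double` arithmetic and the separate seconds/microseconds carry handling for a single 64-bit microsecond value, and the names `rtp_sync` and `rtp_to_presentation_us` are hypothetical.

```c
#include <stdint.h>

/* Hypothetical stand-in for the sync point (fSyncTimestamp / fSyncTime). */
typedef struct {
    uint32_t sync_timestamp; /* RTP timestamp at the sync point */
    int64_t  sync_time_us;   /* wall-clock time at the sync point, in microseconds */
} rtp_sync;

/* Map an RTP timestamp to a presentation time in microseconds.
 * freq is the RTP clock rate in Hz. */
static int64_t rtp_to_presentation_us(const rtp_sync *s, uint32_t rtp_timestamp,
                                      uint32_t freq)
{
    /* Unsigned subtraction wraps modulo 2^32; reading the result as a signed
     * 32-bit value gives the true (small) difference, even when the RTP
     * timestamp has wrapped between the sync point and this packet. */
    int32_t diff = (int32_t)(rtp_timestamp - s->sync_timestamp);
    return s->sync_time_us + ((int64_t)diff * 1000000) / (int64_t)freq;
}
```

With a 90000 Hz clock, a difference of 3600 ticks is 40 ms, which matches the `timeDiff` of 0.04 noted in the code comment above. The same result holds when the later timestamp has wrapped past zero, and a packet timestamped before the sync point simply yields an earlier presentation time.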

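3. Key points in live555.cpp for converting the timeval into the microsecond timestamp i_pts

    int64_t i_pts = (int64_t)pts.tv_sec * INT64_C(1000000) +
        (int64_t)pts.tv_usec;

    /* XXX Beurk beurk beurk Avoid having negative value XXX */
    i_pts &= INT64_C(0x00ffffffffffffff);

1) Take care that the value does not wrap around to a negative number.

2) In the block, i_pts is set to i_pts + 1, and i_dts is chosen according to the packetization-mode=1 field in the SDP.

4. Key points for handling H.264 video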

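1) The frame-dropping policy can be set to drop B frames, non-reference frames, or every frame except key frames. When playback is lagging, this speeds up decoding so that the decoder can try to catch up with the sender, which is a sensible choice.

2) If there are late frames and the current time minus the time of the first late frame is greater than 5 s, the frame is considered to have been late for too long and is released directly. If the number of late frames counted is greater than 4 and less than 12, the frame-dropping policy of the ffmpeg decoder is set.

3) The pts is obtained after decoding. If frame_rate and frame_rate_base are greater than 0, or p_context->time_base.den > 0, the pts of the next frame is computed and p_sys->i_pts is updated, so that it can be used to validate the data when the next frame is decoded.

4) After every ffmpeg decode, the clock mechanism converts the stream pts into system time, and the converted time is then checked for lateness. The rule is: if the display time is <= mdate(), the frame is late and the late-frame counter is incremented. Note: *pi_ts0 >= mdate() + i_ts_delay + i_ts_buffering + i_ts_bound (i_ts_bound defaults to 9 s).

5) Finally, before the picture is queued for rendering, clock::input_clock_ConvertTS stretches the timestamp to the range that corresponds to the playback rate.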
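
The late-frame bookkeeping in points 2) and 4) can be sketched as a small state machine. This is an illustration of the rules as described above, not the VLC source: `late_state`, `late_note_display_time`, `late_choose_action` and the `frame_action` values are hypothetical names, and two behaviours are assumptions because the text does not specify them: the counter is reset when a frame is on time, and a count of 12 or more falls back to normal decoding.

```c
#include <stdint.h>

typedef enum {
    FRAME_DECODE,          /* decode normally */
    FRAME_DECODE_SKIPPING, /* decode with the decoder's frame-skip policy enabled */
    FRAME_DROP             /* release the frame without decoding it */
} frame_action;

typedef struct {
    int     late_frames;       /* number of late frames counted so far */
    int64_t late_frames_start; /* time (in us) at which the first late frame was seen */
} late_state;

/* Point 4): after decoding, compare the converted display time with "now". */
static void late_note_display_time(late_state *s, int64_t display_us, int64_t now_us)
{
    if (display_us <= now_us) {
        if (s->late_frames == 0)
            s->late_frames_start = now_us; /* remember when lateness began */
        s->late_frames++;
    } else {
        s->late_frames = 0; /* assumption: an on-time frame resets the count */
    }
}

/* Point 2): decide what to do with the next frame before decoding it. */
static frame_action late_choose_action(const late_state *s, int64_t now_us)
{
    /* Late for more than 5 seconds: give up on this frame. */
    if (s->late_frames > 0 && now_us - s->late_frames_start > 5000000)
        return FRAME_DROP;
    /* Between 5 and 11 late frames: ask the decoder to skip frames. */
    if (s->late_frames > 4 && s->late_frames < 12)
        return FRAME_DECODE_SKIPPING;
    return FRAME_DECODE; /* assumption: all other cases decode normally */
}
```

In a real decoder the `FRAME_DECODE_SKIPPING` case would correspond to setting the skip policy from point 1) on the ffmpeg context; which frames to skip is a policy decision that this sketch leaves out.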

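5. Key points for handling audio

1) Compute the bit rate and the number of samples: unsigned samples = (8 * p_block->i_buffer) / framebits;

2) Compute the time increment from the number of samples: mtime_t date_Increment( date_t *p_date, uint32_t i_nb_samples )

3) a. Find the correct playback frequency. b. Rebuild the audio frames. The core flow is as follows: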

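1. Audio is played only at the normal playback rate; otherwise it is discarded.

2. if ( start_date != VLC_TS_INVALID && start_date < now ): the expected timestamp is badly out of date, so the buffered audio data is flushed and resampling is stopped (this mainly happens after a user pause or a decoder error).

3. if ( p_buffer->i_pts < now + AOUT_MIN_PREPARE_TIME ): the frame is already late and is discarded directly. AOUT_MIN_PREPARE_TIME is 40 ms.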

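4. mtime_t drift = start_date - p_buffer->i_pts; the drift is the expected playback timestamp minus the actual timestamp of the current frame. drift < 0 means the frame's timestamp is ahead of the expected one (the frame is early, bounded by AOUT_MAX_PTS_ADVANCE); drift > 0 means it is behind the expected one (the frame is late, bounded by AOUT_MAX_PTS_DELAY).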

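5. if( drift < -i_input_rate * 3 * AOUT_MAX_PTS_ADVANCE / INPUT_RATE_DEFAULT ): if the drift is less than -3 * 40 ms, stop buffering and stop resampling. These are the default values.

6. if( drift > +i_input_rate * 3 * AOUT_MAX_PTS_DELAY / INPUT_RATE_DEFAULT ): if the drift is greater than 3 * 60 ms, the buffer is far too late and is discarded directly.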

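7. if ( ( p_input->i_resampling_type == AOUT_RESAMPLING_NONE ) && ( drift < -AOUT_MAX_PTS_ADVANCE || drift > +AOUT_MAX_PTS_DELAY ) && p_input->i_nb_resamplers > 0 ): if the drift is less than -40 ms or greater than 60 ms, that is, the frame is more than 40 ms early or more than 60 ms late, the playback frequency is detected again. The main causes are: 1. the input clock drift estimate is wrong; 2. the user paused briefly; 3. the output side is slightly delayed and has lost a little synchronization (this one is puzzling); 4. some cameras deployed in the field produce streams whose sample rate does not match the timestamps, which also triggers this problem.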

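8. Fine-tune the sampling frequency according to the drift.

9. p_buffer->i_pts = start_date; finally, the frame is sent for rendering with the expected timestamp.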
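
The thresholds in steps 4 to 7 can be summarized in one small classification function. This is only a sketch of the comparisons quoted above, not the VLC implementation: `classify_drift` and the `drift_action` values are hypothetical names, the 40 ms and 60 ms constants are the values given in the text, the normal-rate value of 1000 for `RATE_DEFAULT` is an assumption, and the extra conditions on the resampling state in step 7 are left out.

```c
#include <stdint.h>

#define MAX_PTS_ADVANCE_US 40000 /* 40 ms, as stated for AOUT_MAX_PTS_ADVANCE */
#define MAX_PTS_DELAY_US   60000 /* 60 ms, as stated for AOUT_MAX_PTS_DELAY */
#define RATE_DEFAULT       1000  /* assumed value of the normal playback rate */

typedef enum {
    DRIFT_OK,       /* within tolerance: play as is */
    DRIFT_RESAMPLE, /* step 7: outside -40 ms / +60 ms, re-detect the frequency */
    DRIFT_RESET,    /* step 5: below -3 * 40 ms, stop buffering and resampling */
    DRIFT_DROP      /* step 6: above +3 * 60 ms, discard the buffer */
} drift_action;

/* All times are in microseconds; rate equals RATE_DEFAULT at normal speed. */
static drift_action classify_drift(int64_t start_date, int64_t pts, int rate)
{
    int64_t drift = start_date - pts; /* expected minus actual timestamp */

    if (drift < -(int64_t)rate * 3 * MAX_PTS_ADVANCE_US / RATE_DEFAULT)
        return DRIFT_RESET;
    if (drift > (int64_t)rate * 3 * MAX_PTS_DELAY_US / RATE_DEFAULT)
        return DRIFT_DROP;
    if (drift < -MAX_PTS_ADVANCE_US || drift > MAX_PTS_DELAY_US)
        return DRIFT_RESAMPLE;
    return DRIFT_OK;
}
```

At normal rate the outer thresholds work out to -120 ms and +180 ms, so a drift of -50 ms or +70 ms triggers resampling, while -130 ms or +190 ms takes the reset or drop path. The comparisons are strict, so a drift of exactly -40 ms is still treated as in tolerance.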

III. Sequence diagram of the core flow