Containerized Jenkins deployment and CI/CD for k8s applications

The previous post covered installing Redis and RabbitMQ with Helm. This time, let's look at installing MySQL with Helm.

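I am not very familiar with MySQL. Our company has a clear division of labor: database work is handled mainly by the DBAs, and the ops team only maintains the applications.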

As in the earlier posts, start with the official documentation for installing MySQL with Helm: https://github.com/helm/charts/tree/master/stable/mysql

Following the official documentation, I configured the values.yaml file as follows:

```yaml
## mysql image version
## ref: https://hub.docker.com/r/library/mysql/tags/
##
image: "k8s.harbor.maimaiti.site/system/mysql"
imageTag: "5.7.14"

busybox:
  image: "k8s.harbor.maimaiti.site/system/busybox"
  tag: "1.29.3"

testFramework:
  image: "k8s.harbor.maimaiti.site/system/bats"
  tag: "0.4.0"

## Specify password for root user
##
## Default: random 10 character string
mysqlRootPassword: admin123

## Create a database user
##
mysqlUser: test
## Default: random 10 character string
mysqlPassword: test123

## Allow unauthenticated access, uncomment to enable
##
# mysqlAllowEmptyPassword: true

## Create a database
##
mysqlDatabase: test

## Specify an imagePullPolicy (Required)
## It's recommended to change this to 'Always' if the image tag is 'latest'
## ref: http://kubernetes.io/docs/user-guide/images/#updating-images
##
imagePullPolicy: IfNotPresent

extraVolumes: |
  # - name: extras
  #   emptyDir: {}

extraVolumeMounts: |
  # - name: extras
  #   mountPath: /usr/share/extras
  #   readOnly: true

extraInitContainers: |
  # - name: do-something
  #   image: busybox
  #   command: ['do', 'something']

# Optionally specify an array of imagePullSecrets.
# Secrets must be manually created in the namespace.
# ref: https://kubernetes.io/docs/concepts/containers/images/#specifying-imagepullsecrets-on-a-pod
# imagePullSecrets:
#   - name: myRegistryKeySecretName

## Node selector
## ref: https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#nodeselector
nodeSelector: {}

## Tolerations for pod assignment
## Ref: https://kubernetes.io/docs/concepts/configuration/taint-and-toleration/
##
tolerations: []

livenessProbe:
  initialDelaySeconds: 30
  periodSeconds: 10
  timeoutSeconds: 5
  successThreshold: 1
  failureThreshold: 3

readinessProbe:
  initialDelaySeconds: 5
  periodSeconds: 10
  timeoutSeconds: 1
  successThreshold: 1
  failureThreshold: 3

## Persist data to a persistent volume
persistence:
  enabled: true
  ## database data Persistent Volume Storage Class
  ## If defined, storageClassName: <storageClass>
  ## If set to "-", storageClassName: "", which disables dynamic provisioning
  ## If undefined (the default) or set to null, no storageClassName spec is
  ##   set, choosing the default provisioner.  (gp2 on AWS, standard on
  ##   GKE, AWS & OpenStack)
  ##
  storageClass: "dynamic"
  accessMode: ReadWriteOnce
  size: 8Gi
  annotations: {}

## Configure resource requests and limits
## ref: http://kubernetes.io/docs/user-guide/compute-resources/
##
resources:
  requests:
    memory: 256Mi
    cpu: 100m

# Custom mysql configuration files used to override default mysql settings
configurationFiles: {}
#  mysql.cnf: |-
#    [mysqld]
#    skip-name-resolve
#    ssl-ca=/ssl/ca.pem
#    ssl-cert=/ssl/server-cert.pem
#    ssl-key=/ssl/server-key.pem

# Custom mysql init SQL files used to initialize the database
initializationFiles: {}
#  first-db.sql: |-
#    CREATE DATABASE IF NOT EXISTS first DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;
#  second-db.sql: |-
#    CREATE DATABASE IF NOT EXISTS second DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

metrics:
  enabled: true
  image: k8s.harbor.maimaiti.site/system/mysqld-exporter
  imageTag: v0.10.0
  imagePullPolicy: IfNotPresent
  resources: {}
  annotations: {}
    # prometheus.io/scrape: "true"
    # prometheus.io/port: "9104"
  livenessProbe:
    initialDelaySeconds: 15
    timeoutSeconds: 5
  readinessProbe:
    initialDelaySeconds: 5
    timeoutSeconds: 1

## Configure the service
## ref: http://kubernetes.io/docs/user-guide/services/
service:
  annotations: {}
  ## Specify a service type
  ## ref: https://kubernetes.io/docs/concepts/services-networking/service/#publishing-services---service-types
  type: ClusterIP
  port: 3306
  # nodePort: 32000

ssl:
  enabled: false
  secret: mysql-ssl-certs
  certificates:
#  - name: mysql-ssl-certs
#    ca: |-
#      -----BEGIN CERTIFICATE-----
#      ...
#      -----END CERTIFICATE-----
#    cert: |-
#      -----BEGIN CERTIFICATE-----
#      ...
#      -----END CERTIFICATE-----
#    key: |-
#      -----BEGIN RSA PRIVATE KEY-----
#      ...
#      -----END RSA PRIVATE KEY-----

## Populates the 'TZ' system timezone environment variable
## ref: https://dev.mysql.com/doc/refman/5.7/en/time-zone-support.html
##
## Default: nil (mysql will use image's default timezone, normally UTC)
## Example: 'Australia/Sydney'
# timezone:

# To be added to the database server pod(s)
podAnnotations: {}
podLabels: {}

## Set pod priorityClassName
# priorityClassName: {}
```

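These are the main changes I made:

- switched all images to our private registry;

- set the initial MySQL root password and a regular user account with its password, and created a test database;

- enabled persistent storage.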
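Your own values file does not replace the chart's values.yaml wholesale. Helm layers it on top of the chart defaults: maps are merged key by key, scalars and lists from the override win, and `--set` flags are applied after `--values` files. Below is a minimal Python sketch of that layering. It assumes the values have already been loaded into dicts; `merge_values` and the sample values are illustrative, not Helm's own implementation.

```python
from copy import deepcopy
from typing import Any, Dict


def merge_values(base: Dict[str, Any], override: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new dict with `override` layered on top of `base`.

    Nested dicts are merged key by key; any other value (scalar or list)
    in `override` replaces the corresponding value in `base`.
    """
    result = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge_values(result[key], value)
        else:
            result[key] = deepcopy(value)
    return result


# Illustrative excerpt of chart defaults (not the full stable/mysql values.yaml)
chart_defaults = {
    "imageTag": "5.7.14",
    "persistence": {"enabled": True, "accessMode": "ReadWriteOnce", "size": "8Gi"},
}
# Layer 1: your values file, passed with --values=mysql.yaml
values_file = {
    "mysqlRootPassword": "admin123",
    "persistence": {"storageClass": "dynamic"},
}
# Layer 2: --set mysqlRootPassword=abc123, applied last so it wins
set_flags = {"mysqlRootPassword": "abc123"}

final_values = merge_values(merge_values(chart_defaults, values_file), set_flags)

if __name__ == "__main__":
    print(final_values["mysqlRootPassword"])  # abc123
    print(final_values["persistence"]["storageClass"])  # dynamic
```

The merge never mutates the chart defaults, so the same defaults can be reused for several releases with different overrides.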

You can also override parameters on the `helm install` command line (see the official documentation for what each parameter does), for example:

```shell
helm install --values=mysql.yaml --set mysqlRootPassword=abc123 --name r1 stable/mysql
```

To see how to connect to the installed MySQL, check `helm status`. Other applications can simply call the `my-mysql` Service:

```text
helm status my-mysql
LAST DEPLOYED: Thu Apr 25 15:08:27 2019
NAMESPACE: kube-system
STATUS: DEPLOYED

RESOURCES:
==> v1/Pod(related)
NAME                       READY  STATUS   RESTARTS  AGE
my-mysql-5fd54bd9cb-948td  2/2    Running  3         6d22h

==> v1/Secret
NAME      TYPE    DATA  AGE
my-mysql  Opaque  2     6d22h

==> v1/ConfigMap
NAME           DATA  AGE
my-mysql-test  1     6d22h

==> v1/PersistentVolumeClaim
NAME      STATUS  VOLUME                                    CAPACITY  ACCESS MODES  STORAGECLASS  AGE
my-mysql  Bound   pvc-ed8a9252-6728-11e9-8b25-480fcf659569  8Gi       RWO           dynamic       6d22h

==> v1/Service
NAME      TYPE       CLUSTER-IP      EXTERNAL-IP  PORT(S)            AGE
my-mysql  ClusterIP  10.200.200.169  <none>       3306/TCP,9104/TCP  6d22h

==> v1beta1/Deployment
NAME      DESIRED  CURRENT  UP-TO-DATE  AVAILABLE  AGE
my-mysql  1        1        1           1          6d22h


NOTES:
MySQL can be accessed via port 3306 on the following DNS name from within your cluster:
my-mysql.kube-system.svc.cluster.local

To get your root password run:

    MYSQL_ROOT_PASSWORD=$(kubectl get secret --namespace kube-system my-mysql -o jsonpath="{.data.mysql-root-password}" | base64 --decode; echo)

To connect to your database:

1. Run an Ubuntu pod that you can use as a client:

    kubectl run -i --tty ubuntu --image=ubuntu:16.04 --restart=Never -- bash -il

2. Install the mysql client:

    $ apt-get update && apt-get install mysql-client -y

3. Connect using the mysql cli, then provide your password:
    $ mysql -h my-mysql -p

To connect to your database directly from outside the K8s cluster:
    MYSQL_HOST=127.0.0.1
    MYSQL_PORT=3306

    # Execute the following command to route the connection:
    kubectl port-forward svc/my-mysql 3306

    mysql -h ${MYSQL_HOST} -P${MYSQL_PORT} -u root -p${MYSQL_ROOT_PASSWORD}
```
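The NOTES pipe the password through `base64 --decode` because the values in a Secret's `data` map are stored base64-encoded. The sketch below does the same decoding in Python. It assumes you already have the Secret as JSON, for example from `kubectl get secret my-mysql -o json`. The sample is made up: its key names follow the chart's Secret, and its values are the passwords from the values.yaml above.

```python
import base64
import json


def decode_secret(secret_json: str) -> dict:
    """Decode every entry of a Kubernetes Secret's `data` map from base64 to text."""
    secret = json.loads(secret_json)
    return {
        key: base64.b64decode(value).decode("utf-8")
        for key, value in secret.get("data", {}).items()
    }


# Made-up sample shaped like `kubectl get secret my-mysql -o json` output
sample = json.dumps({
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {"name": "my-mysql", "namespace": "kube-system"},
    "type": "Opaque",
    "data": {
        "mysql-root-password": base64.b64encode(b"admin123").decode(),
        "mysql-password": base64.b64encode(b"test123").decode(),
    },
})

if __name__ == "__main__":
    print(decode_secret(sample)["mysql-root-password"])  # admin123
```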

2. Monitoring the shared components with Prometheus:

2.1 Add the following scrape jobs to the Prometheus ConfigMap:

```yaml
- job_name: "mysql"
  static_configs:
    - targets: ['my-mysql:9104']

- job_name: "redis"
  static_configs:
    - targets: ['my-redis-redis-ha:9121']
```

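Next, delete and recreate the ConfigMap, then hot-reload the Prometheus configuration. The `/-/reload` endpoint only accepts requests when Prometheus runs with `--web.enable-lifecycle`.

```shell
kubectl replace -f configmap.yaml --force
curl -X POST "http://10.109.108.37:9090/-/reload"
```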
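Each exporter sits behind a Service port: 9104 for mysqld-exporter, as seen in the `my-mysql` Service above, and 9121 for the Redis exporter. That means every scrape job is just a `service:port` target. The Python sketch below renders those jobs and prepares the reload call. It emits JSON, which is also valid YAML flow syntax, and it only builds the HTTP request without sending it. The Prometheus address comes from the `curl` command above. `build_scrape_jobs` and `build_reload_request` are hypothetical helpers.

```python
import json
import urllib.request
from typing import Dict, List


def build_scrape_jobs(targets: Dict[str, str]) -> List[dict]:
    """Turn {job_name: "service:port"} into Prometheus static scrape jobs."""
    return [
        {"job_name": name, "static_configs": [{"targets": [target]}]}
        for name, target in targets.items()
    ]


def build_reload_request(prometheus_url: str) -> urllib.request.Request:
    """Prepare the POST to /-/reload (Prometheus must run with --web.enable-lifecycle)."""
    return urllib.request.Request(prometheus_url.rstrip("/") + "/-/reload", method="POST")


jobs = build_scrape_jobs({
    "mysql": "my-mysql:9104",
    "redis": "my-redis-redis-ha:9121",
})
reload_request = build_reload_request("http://10.109.108.37:9090")

if __name__ == "__main__":
    print(json.dumps({"scrape_configs": jobs}, indent=2))
    print(reload_request.get_method(), reload_request.full_url)
    # To actually trigger the reload: urllib.request.urlopen(reload_request)
```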

3. Installing Jenkins with Helm

As before, first search for the Jenkins chart:

```text
helm search jenkins
NAME            CHART VERSION   APP VERSION   DESCRIPTION
stable/jenkins  1.1.10          lts           Open source continuous integration server. It supports mu...
```

Then download the chart and unpack it:

```shell
helm fetch stable/jenkins --untar --untardir ./
```

Running Jenkins inside a Kubernetes cluster works roughly as follows:

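- The Jenkins master runs as a pod in the k8s cluster and is backed by persistent storage. That way the Jenkins plugins and the jobs configured in the web UI survive a restart or rescheduling of the Jenkins pod.

- Jenkins slaves also run as pods in the cluster. Each time a job runs, a Jenkins slave pod is started, and once the job finishes the slave pod is destroyed automatically, which saves resources.

- Jenkins is mainly a CI/CD tool, so the work it does is: pull the code from GitLab ---> compile and package with Maven ---> analyze the code with Sonar (optional) ---> build the image with docker build ---> push the image to the private registry with docker push ---> deploy the application to the k8s cluster with kubectl.

- Releasing a k8s application usually involves three kinds of files: a Dockerfile, k8s YAML manifests, and a Jenkins pipeline file.

- The Dockerfile builds the image. It ADDs the war or jar package produced by the Maven build into the image and defines the start command, environment variables (such as JAVA_HOME), and so on.

- The YAML files define how k8s deploys the application: the number of replicas, the resource limits, whether the post-start health check is an HTTP (curl) check or a TCP port check, whether the application mounts storage, and so on. A Deployment manifest is rarely enough on its own:
  - a Service manifest lets other applications reach this one by service name;
  - an Ingress manifest is needed if the application must be reachable from outside the cluster;
  - ConfigMaps may be needed for configuration files;
  - Secrets may be needed for the credentials of the databases and message queues the application depends on.

  If you are comfortable with Helm, having Jenkins call helm to deploy the application is the best approach.

- The pipeline file holds the Jenkins job configuration itself. Pipeline jobs are now the recommended way to use Jenkins, because they make migrating Jenkins much easier. The pipeline defines the steps described above: packaging, building, creating the image, and releasing to k8s. All of these steps run inside the Jenkins slave pod, which is why building the Jenkins slave image matters so much.

- The Jenkins slave image mainly needs java, maven, docker and kubectl. It also needs docker.sock and the kubectl config file mounted into it.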
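The build-and-release flow above is a strictly sequential chain, and one failed step stops the release. The following minimal Python sketch models that control flow. It assumes each stage is a shell command whose exit code signals success. The stage commands, repository URL and image names are placeholders, not this project's real ones.

```python
from typing import Callable, List, NamedTuple, Tuple


class Stage(NamedTuple):
    name: str
    command: str
    optional: bool = False


# Placeholder commands mirroring the flow above (not the project's real ones)
STAGES: List[Stage] = [
    Stage("checkout", "git clone -b master http://gitlab.example/root/app.git"),
    Stage("package", "mvn clean package"),
    Stage("sonar", "mvn sonar:sonar", optional=True),
    Stage("image", "docker build -t registry.example/app/app:1 ."),
    Stage("push", "docker push registry.example/app/app:1"),
    Stage("deploy", "kubectl apply -f app.yml"),
]


def run_pipeline(stages: List[Stage], run: Callable[[str], int],
                 with_optional: bool = False) -> Tuple[str, List[str]]:
    """Run stages in order and stop at the first non-zero exit code.

    Returns ("SUCCESS" or "FAILURE", names of the stages that were executed).
    """
    executed: List[str] = []
    for stage in stages:
        if stage.optional and not with_optional:
            continue  # e.g. skip the Sonar analysis
        executed.append(stage.name)
        if run(stage.command) != 0:
            return "FAILURE", executed  # later stages never run
    return "SUCCESS", executed


if __name__ == "__main__":
    # Dry run: print each command instead of executing it
    print(run_pipeline(STAGES, lambda cmd: print("+", cmd) or 0))
```

For a real run, pass `lambda cmd: subprocess.call(cmd, shell=True)` instead of the dry-run printer. In Jenkins the same fail-fast behavior comes for free: a failing `sh` step throws and aborts the remaining stages.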

Next, edit values.yaml following the official documentation: https://github.com/helm/charts/tree/master/stable/jenkins

# Default values for jenkins.

# This is a YAML-formatted file.

# Declare name/value pairs to be passed into your templates.

# name: value

## Overrides for generated resource names

# See templates/_helpers.tpl

# nameOverride:

# fullnameOverride:

master:

# Used for label app.kubernetes.io/component

componentName: "jenkins-master"

image: "k8s.harbor.maimaiti.site/system/jenkins"

imageTag: "lts"

imagePullPolicy: "Always"

imagePullSecretName:

# Optionally configure lifetime for master-container

lifecycle:

# postStart:

# exec:

# command:

# - "uname"

# - "-a"

numExecutors: 0

# configAutoReload requires UseSecurity is set to true:

useSecurity: true

# Allows to configure different SecurityRealm using Jenkins XML

securityRealm: |-

<securityRealm class="hudson.security.LegacySecurityRealm"/>

# Allows to configure different AuthorizationStrategy using Jenkins XML

authorizationStrategy: |-

<authorizationStrategy class="hudson.security.FullControlOnceLoggedInAuthorizationStrategy">

<denyAnonymousReadAccess>true</denyAnonymousReadAccess>

</authorizationStrategy>

hostNetworking: false

# When enabling LDAP or another non-Jenkins identity source, the built-in admin account will no longer exist.

# Since the AdminUser is used by configAutoReload, in order to use configAutoReload you must change the

# .master.adminUser to a valid username on your LDAP (or other) server. This user does not need

# to have administrator rights in Jenkins (the default Overall:Read is sufficient) nor will it be granted any

# additional rights. Failure to do this will cause the sidecar container to fail to authenticate via SSH and enter

# a restart loop. Likewise if you disable the non-Jenkins identity store and instead use the Jenkins internal one,

# you should revert master.adminUser to your preferred admin user:

adminUser: "admin"

adminPassword: [email protected]

# adminSshKey: <defaults to auto-generated>

# If CasC auto-reload is enabled, an SSH (RSA) keypair is needed. Can either provide your own, or leave unconfigured to allow a random key to be auto-generated.

# If you supply your own, it is recommended that the values file that contains your key not be committed to source control in an unencrypted format

rollingUpdate: {}

# Ignored if Persistence is enabled

# maxSurge: 1

# maxUnavailable: 25%

resources:

requests:

cpu: "2000m"

memory: "2048Mi"

limits:

cpu: "2000m"

memory: "4096Mi"

# Environment variables that get added to the init container (useful for e.g. http_proxy)

# initContainerEnv:

# - name: http_proxy

# value: "http://192.168.64.1:3128"

# containerEnv:

# - name: http_proxy

# value: "http://192.168.64.1:3128"

# Set min/max heap here if needed with:

# javaOpts: "-Xms512m -Xmx512m"

# jenkinsOpts: ""

# jenkinsUrl: ""

# If you set this prefix and use ingress controller then you might want to set the ingress path below

# jenkinsUriPrefix: "/jenkins"

# Enable pod security context (must be `true` if runAsUser or fsGroup are set)

usePodSecurityContext: true

# Set runAsUser to 1000 to let Jenkins run as non-root user ‘jenkins‘ which exists in ‘jenkins/jenkins‘ docker image.

# When setting runAsUser to a different value than 0 also set fsGroup to the same value:

# runAsUser: <defaults to 0>

# fsGroup: <will be omitted in deployment if runAsUser is 0>

servicePort: 8080

# For minikube, set this to NodePort, elsewhere use LoadBalancer

# Use ClusterIP if your setup includes ingress controller

serviceType: LoadBalancer

# Jenkins master service annotations

serviceAnnotations: {}

# Jenkins master custom labels

deploymentLabels: {}

# foo: bar

# bar: foo

# Jenkins master service labels

serviceLabels: {}

# service.beta.kubernetes.io/aws-load-balancer-backend-protocol: https

# Put labels on Jenkins master pod

podLabels: {}

# Used to create Ingress record (should used with ServiceType: ClusterIP)

# hostName: jenkins.cluster.local

# nodePort: <to set explicitly, choose port between 30000-32767

# Enable Kubernetes Liveness and Readiness Probes

# ~ 2 minutes to allow Jenkins to restart when upgrading plugins. Set ReadinessTimeout to be shorter than LivenessTimeout.

healthProbes: true

healthProbesLivenessTimeout: 90

healthProbesReadinessTimeout: 60

healthProbeReadinessPeriodSeconds: 10

healthProbeLivenessFailureThreshold: 12

slaveListenerPort: 50000

slaveHostPort:

disabledAgentProtocols:

- JNLP-connect

- JNLP2-connect

csrf:

defaultCrumbIssuer:

enabled: true

proxyCompatability: true

cli: false

# Kubernetes service type for the JNLP slave service

# slaveListenerServiceType is the Kubernetes Service type for the JNLP slave service,

# either ‘LoadBalancer‘, ‘NodePort‘, or ‘ClusterIP‘

# Note if you set this to ‘LoadBalancer‘, you *must* define annotations to secure it. By default

# this will be an external load balancer and allowing inbound 0.0.0.0/0, a HUGE

# security risk: https://github.com/kubernetes/charts/issues/1341

slaveListenerServiceType: "ClusterIP"

slaveListenerServiceAnnotations: {}

slaveKubernetesNamespace:

# Example of ‘LoadBalancer‘ type of slave listener with annotations securing it

# slaveListenerServiceType: LoadBalancer

# slaveListenerServiceAnnotations:

# service.beta.kubernetes.io/aws-load-balancer-internal: "True"

# service.beta.kubernetes.io/load-balancer-source-ranges: "172.0.0.0/8, 10.0.0.0/8"

# LoadBalancerSourcesRange is a list of allowed CIDR values, which are combined with ServicePort to

# set allowed inbound rules on the security group assigned to the master load balancer

loadBalancerSourceRanges:

- 0.0.0.0/0

# Optionally assign a known public LB IP

# loadBalancerIP: 1.2.3.4

# Optionally configure a JMX port

# requires additional javaOpts, ie

# javaOpts: >

# -Dcom.sun.management.jmxremote.port=4000

# -Dcom.sun.management.jmxremote.authenticate=false

# -Dcom.sun.management.jmxremote.ssl=false

# jmxPort: 4000

# Optionally configure other ports to expose in the master container

extraPorts:

# - name: BuildInfoProxy

# port: 9000

# List of plugins to be install during Jenkins master start

installPlugins:

- kubernetes:1.14.0

- workflow-job:2.31

- workflow-aggregator:2.6

- credentials-binding:1.17

- git:3.9.1

# Enable to always override the installed plugins with the values of ‘master.installPlugins‘ on upgrade or redeployment.

# overwritePlugins: true

# Enable HTML parsing using OWASP Markup Formatter Plugin (antisamy-markup-formatter), useful with ghprb plugin.

# The plugin is not installed by default, please update master.installPlugins.

enableRawHtmlMarkupFormatter: false

# Used to approve a list of groovy functions in pipelines used the script-security plugin. Can be viewed under /scriptApproval

scriptApproval:

# - "method groovy.json.JsonSlurperClassic parseText java.lang.String"

# - "new groovy.json.JsonSlurperClassic"

# List of groovy init scripts to be executed during Jenkins master start

initScripts:

# - |

# print ‘adding global pipeline libraries, register properties, bootstrap jobs...‘

# Kubernetes secret that contains a ‘credentials.xml‘ for Jenkins

# credentialsXmlSecret: jenkins-credentials

# Kubernetes secret that contains files to be put in the Jenkins ‘secrets‘ directory,

# useful to manage encryption keys used for credentials.xml for instance (such as

# master.key and hudson.util.Secret)

# secretsFilesSecret: jenkins-secrets

# Jenkins XML job configs to provision

jobs:

# test: |-

# <<xml here>>

# Below is the implementation of Jenkins Configuration as Code. Add a key under configScripts for each configuration area,

# where each corresponds to a plugin or section of the UI. Each key (prior to | character) is just a label, and can be any value.

# Keys are only used to give the section a meaningful name. The only restriction is they may only contain RFC 1123 \ DNS label

# characters: lowercase letters, numbers, and hyphens. The keys become the name of a configuration yaml file on the master in

# /var/jenkins_home/casc_configs (by default) and will be processed by the Configuration as Code Plugin. The lines after each |

# become the content of the configuration yaml file. The first line after this is a JCasC root element, eg jenkins, credentials,

# etc. Best reference is https://<jenkins_url>/configuration-as-code/reference. The example below creates a welcome message:

JCasC:

enabled: false

pluginVersion: 1.5

supportPluginVersion: 1.5

configScripts:

welcome-message: |

jenkins:

systemMessage: Welcome to our CI\CD server. This Jenkins is configured and managed ‘as code‘.

# Optionally specify additional init-containers

customInitContainers: []

# - name: CustomInit

# image: "alpine:3.7"

# imagePullPolicy: Always

# command: [ "uname", "-a" ]

sidecars:

configAutoReload:

# If enabled: true, Jenkins Configuration as Code will be reloaded on-the-fly without a reboot. If false or not-specified,

# jcasc changes will cause a reboot and will only be applied at the subsequent start-up. Auto-reload uses the Jenkins CLI

# over SSH to reapply config when changes to the configScripts are detected. The admin user (or account you specify in

# master.adminUser) will have a random SSH private key (RSA 4096) assigned unless you specify adminSshKey. This will be saved to a k8s secret.

enabled: false

image: shadwell/k8s-sidecar:0.0.2

imagePullPolicy: IfNotPresent

resources:

# limits:

# cpu: 100m

# memory: 100Mi

# requests:

# cpu: 50m

# memory: 50Mi

# SSH port value can be set to any unused TCP port. The default, 1044, is a non-standard SSH port that has been chosen at random.

# Is only used to reload jcasc config from the sidecar container running in the Jenkins master pod.

# This TCP port will not be open in the pod (unless you specifically configure this), so Jenkins will not be

# accessible via SSH from outside of the pod. Note if you use non-root pod privileges (runAsUser & fsGroup),

# this must be > 1024:

sshTcpPort: 1044

# folder in the pod that should hold the collected dashboards:

folder: "/var/jenkins_home/casc_configs"

# If specified, the sidecar will search for JCasC config-maps inside this namespace.

# Otherwise the namespace in which the sidecar is running will be used.

# It‘s also possible to specify ALL to search in all namespaces:

# searchNamespace:

# Allows you to inject additional/other sidecars

other:

## The example below runs the client for https://smee.io as sidecar container next to Jenkins,

## that allows to trigger build behind a secure firewall.

## https://jenkins.io/blog/2019/01/07/webhook-firewalls/#triggering-builds-with-webhooks-behind-a-secure-firewall

##

## Note: To use it you should go to https://smee.io/new and update the url to the generete one.

# - name: smee

# image: docker.io/twalter/smee-client:1.0.2

# args: ["--port", "{{ .Values.master.servicePort }}", "--path", "/github-webhook/", "--url", "https://smee.io/new"]

# resources:

# limits:

# cpu: 50m

# memory: 128Mi

# requests:

# cpu: 10m

# memory: 32Mi

# Node labels and tolerations for pod assignment

# ref: https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#nodeselector

# ref: https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#taints-and-tolerations-beta-feature

nodeSelector: {}

tolerations: []

# Leverage a priorityClass to ensure your pods survive resource shortages

# ref: https://kubernetes.io/docs/concepts/configuration/pod-priority-preemption/

# priorityClass: system-cluster-critical

podAnnotations: {}

# The below two configuration-related values are deprecated and replaced by Jenkins Configuration as Code (see above

# JCasC key). They will be deleted in an upcoming version.

customConfigMap: false

# By default, the configMap is only used to set the initial config the first time

# that the chart is installed. Setting `overwriteConfig` to `true` will overwrite

# the jenkins config with the contents of the configMap every time the pod starts.

# This will also overwrite all init scripts

overwriteConfig: false

# By default, the Jobs Map is only used to set the initial jobs the first time

# that the chart is installed. Setting `overwriteJobs` to `true` will overwrite

# the jenkins jobs configuration with the contents of Jobs every time the pod starts.

overwriteJobs: false

ingress:

enabled: true

# For Kubernetes v1.14+, use ‘networking.k8s.io/v1beta1‘

apiVersion: "extensions/v1beta1"

labels: {}

annotations:

kubernetes.io/ingress.class: traefik

# kubernetes.io/tls-acme: "true"

# Set this path to jenkinsUriPrefix above or use annotations to rewrite path

# path: "/jenkins"

hostName: k8s.jenkins.maimaiti.site

tls:

# - secretName: jenkins.cluster.local

# hosts:

# - jenkins.cluster.local

# Openshift route

route:

enabled: false

labels: {}

annotations: {}

# path: "/jenkins"

additionalConfig: {}

# master.hostAliases allows for adding entries to Pod /etc/hosts:

# https://kubernetes.io/docs/concepts/services-networking/add-entries-to-pod-etc-hosts-with-host-aliases/

hostAliases: []

# - ip: 192.168.50.50

# hostnames:

# - something.local

# - ip: 10.0.50.50

# hostnames:

# - other.local

agent:

enabled: true

image: "10.83.74.102/jenkins/jnlp"

imageTag: "v11"

customJenkinsLabels: []

# name of the secret to be used for image pulling

imagePullSecretName:

componentName: "jenkins-slave"

privileged: false

resources:

requests:

cpu: "2000m"

memory: "4096Mi"

limits:

cpu: "2000m"

memory: "4096Mi"

# You may want to change this to true while testing a new image

alwaysPullImage: false

# Controls how slave pods are retained after the Jenkins build completes

# Possible values: Always, Never, OnFailure

podRetention: "Never"

# You can define the volumes that you want to mount for this container

# Allowed types are: ConfigMap, EmptyDir, HostPath, Nfs, Pod, Secret

# Configure the attributes as they appear in the corresponding Java class for that type

# https://github.com/jenkinsci/kubernetes-plugin/tree/master/src/main/java/org/csanchez/jenkins/plugins/kubernetes/volumes

# Pod-wide ennvironment, these vars are visible to any container in the slave pod

envVars:

# - name: PATH

# value: /usr/local/bin

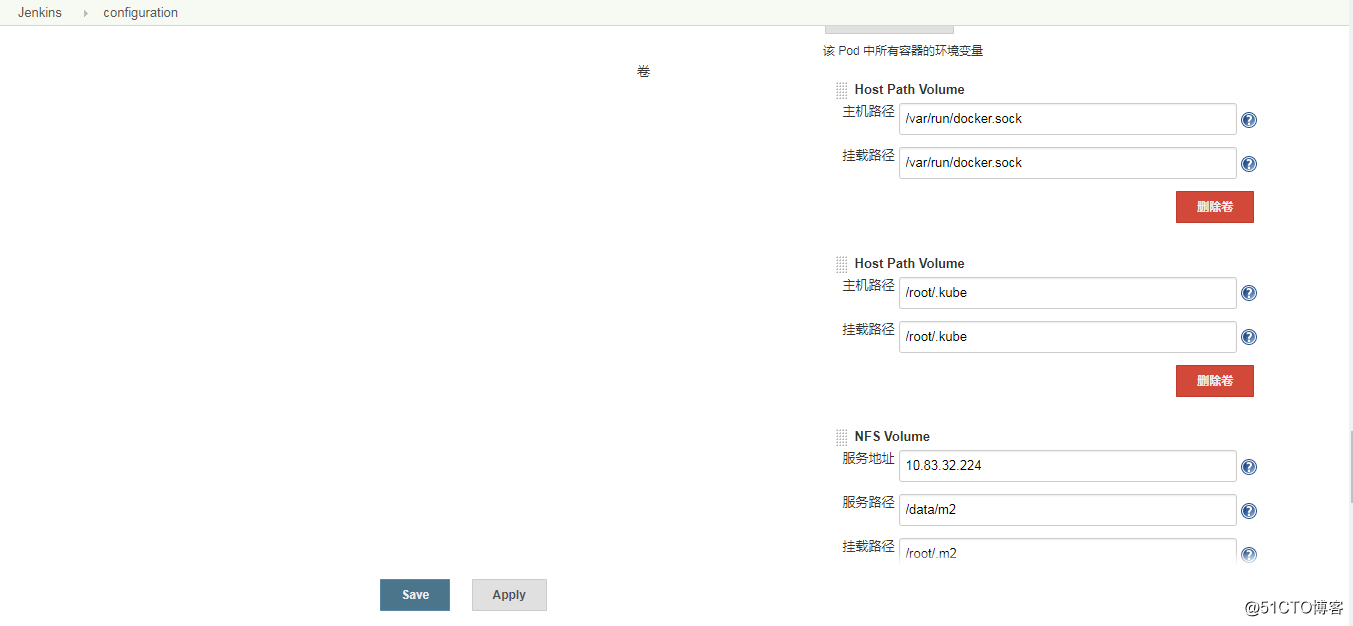

volumes:

- type: HostPath

hostPath: /var/run/docker.sock

mountPath: /var/run/docker.sock

- type: HostPath

hostPath: /root/.kube

mountPath: /root/.kube

- type: Nfs

mountPath: /root/.m2

serverAddress: 10.83.32.224

serverPath: /data/m2

# - type: Secret

# secretName: mysecret

# mountPath: /var/myapp/mysecret

nodeSelector: {}

# Key Value selectors. Ex:

# jenkins-agent: v1

# Executed command when side container gets started

command:

args:

# Side container name

sideContainerName: "jnlp"

# Doesn‘t allocate pseudo TTY by default

TTYEnabled: false

# Max number of spawned agent

containerCap: 10

# Pod name

podName: "jenkins-slave"

persistence:

enabled: true

## A manually managed Persistent Volume and Claim

## Requires persistence.enabled: true

## If defined, PVC must be created manually before volume will be bound

existingClaim:

## jenkins data Persistent Volume Storage Class

## If defined, storageClassName: <storageClass>

## If set to "-", storageClassName: "", which disables dynamic provisioning

## If undefined (the default) or set to null, no storageClassName spec is

## set, choosing the default provisioner. (gp2 on AWS, standard on

## GKE, AWS & OpenStack)

##

storageClass: "dynamic"

annotations: {}

accessMode: "ReadWriteOnce"

size: "8Gi"

volumes:

# - name: nothing

# emptyDir: {}

mounts:

# - mountPath: /var/nothing

# name: nothing

# readOnly: true

networkPolicy:

# Enable creation of NetworkPolicy resources.

enabled: false

# For Kubernetes v1.4, v1.5 and v1.6, use ‘extensions/v1beta1‘

# For Kubernetes v1.7, use ‘networking.k8s.io/v1‘

apiVersion: networking.k8s.io/v1

## Install Default RBAC roles and bindings

rbac:

create: true

serviceAccount:

create: true

# The name of the service account is autogenerated by default

name:

annotations: {}

## Backup cronjob configuration

## Ref: https://github.com/nuvo/kube-tasks

backup:

# Backup must use RBAC

# So by enabling backup you are enabling RBAC specific for backup

enabled: false

# Used for label app.kubernetes.io/component

componentName: "backup"

# Schedule to run jobs. Must be in cron time format

# Ref: https://crontab.guru/

schedule: "0 2 * * *"

annotations:

# Example for authorization to AWS S3 using kube2iam

# Can also be done using environment variables

iam.amazonaws.com/role: "jenkins"

image:

repository: "nuvo/kube-tasks"

tag: "0.1.2"

# Additional arguments for kube-tasks

# Ref: https://github.com/nuvo/kube-tasks#simple-backup

extraArgs: []

# Add additional environment variables

env:

# Example environment variable required for AWS credentials chain

- name: "AWS_REGION"

value: "us-east-1"

resources:

requests:

memory: 1Gi

cpu: 1

limits:

memory: 1Gi

cpu: 1

# Destination to store the backup artifacts

# Supported cloud storage services: AWS S3, Minio S3, Azure Blob Storage

# Additional support can added. Visit this repository for details

# Ref: https://github.com/nuvo/skbn

destination: "s3://nuvo-jenkins-data/backup"

checkDeprecation: true

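These are the main parameters I changed in values.yaml:

- Switched the images to our private registry. The Jenkins slave image needs special attention here: the official image alone is not enough, so you have to build your own image that contains kubectl, docker, mvn, and so on.

- Set the Jenkins login password.

- Configured resource limits. Note in particular that the default resource limits for the Jenkins slave are too small, and the slave pod is often interrupted when it runs out of resources. Raise the slave's limits.

- Configured an Ingress, because we need to reach Jenkins from outside the k8s cluster.

- Most important is the agent section. Its image has to be built yourself, its resource quota raised, and docker.sock plus the kubectl config file mounted into it. The .m2 directory of the Jenkins slaves is placed on NFS storage, so a freshly started Jenkins slave does not have to download the dependency jars from the Apache Maven repository on every build.

- Configured persistent storage, because the plugins and the configuration made in the Jenkins UI must be stored persistently.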
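The kubernetes plugin turns the `agent.volumes` entries into ordinary pod volumes and volume mounts on each agent pod. The rough Python sketch below shows that mapping for the two types used here, HostPath and Nfs. `to_pod_volumes` and the `volume-N` naming are hypothetical and only approximate what the plugin actually generates.

```python
from typing import Dict, List, Tuple

# The agent.volumes entries from the values.yaml above
AGENT_VOLUMES: List[Dict[str, str]] = [
    {"type": "HostPath", "hostPath": "/var/run/docker.sock", "mountPath": "/var/run/docker.sock"},
    {"type": "HostPath", "hostPath": "/root/.kube", "mountPath": "/root/.kube"},
    {"type": "Nfs", "mountPath": "/root/.m2", "serverAddress": "10.83.32.224", "serverPath": "/data/m2"},
]


def to_pod_volumes(entries: List[Dict[str, str]]) -> Tuple[List[dict], List[dict]]:
    """Map chart-style volume entries to (pod volumes, container volumeMounts)."""
    volumes, mounts = [], []
    for i, entry in enumerate(entries):
        name = f"volume-{i}"  # hypothetical naming scheme
        if entry["type"] == "HostPath":
            source = {"hostPath": {"path": entry["hostPath"]}}
        elif entry["type"] == "Nfs":
            source = {"nfs": {"server": entry["serverAddress"], "path": entry["serverPath"]}}
        else:
            raise ValueError(f"unsupported volume type: {entry['type']}")
        volumes.append({"name": name, **source})
        mounts.append({"name": name, "mountPath": entry["mountPath"]})
    return volumes, mounts


if __name__ == "__main__":
    import json
    vols, mnts = to_pod_volumes(AGENT_VOLUMES)
    print(json.dumps({"volumes": vols, "volumeMounts": mnts}, indent=2))
```

Because the Maven cache lives on NFS, every short-lived agent pod mounts the same `/root/.m2`, and dependencies downloaded by one build are reused by the next.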

While deploying Jenkins to the k8s cluster I hit a few pitfalls. Here is a summary for your reference:

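- While testing Jenkins I deleted the chart. Out of habit, I removed it with `helm delete jenkins --purge` and then reinstalled it with `helm install --name jenkins --namespace=kube-system ./jenkins`. When I logged in to Jenkins, all the configuration was gone, including the jobs I had set up and the plugins I had installed. So think twice before running `helm delete` on a release that holds data.

  Strictly speaking, the data loss is not caused by `--purge` itself. In Helm 2, a plain `helm delete` already removes every resource the chart created, the PVC included. `--purge` additionally drops the release record so the name can be reused. To keep JENKINS_HOME across a delete, use one of these:
  - point `persistence.existingClaim` at a PVC you created yourself;
  - annotate the chart's PVC with `helm.sh/resource-policy: keep`.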

- After building my own Jenkins slave image, the running slave pod kept failing with the following error whenever it executed a docker command:

```text
+ docker version
Client:
 Version:           18.06.0-ce
 API version:       1.38
 Go version:        go1.10.3
 Git commit:        0ffa825
 Built:             Wed Jul 18 19:04:39 2018
 OS/Arch:           linux/amd64
 Experimental:      false
Got permission denied while trying to connect to the Docker daemon socket at unix:///var/run/docker.sock: Get http://%2Fvar%2Frun%2Fdocker.sock/v1.38/version: dial unix /var/run/docker.sock: connect: permission denied
Build step 'Execute shell' marked build as failure
Finished: FAILURE
```

The official Jenkins slave image runs as the `jenkins` user (UID 10000). The `/var/run/docker.sock` mounted into the slave normally belongs to root:docker with mode 660, so only root (or members of the docker group) can use it. That mismatch produces the error above. There are three ways to fix it:

- Run `chmod 777 /var/run/docker.sock` on every k8s host before mounting the socket. This is insecure and not recommended.

- Let the `jenkins` user in the slave image run root commands through sudo, with a sudoers entry such as `jenkins ALL=(ALL) NOPASSWD: ALL`.

- Simply run the slave image as root with `USER root`.
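The permission error comes down to the standard POSIX check on the socket. A non-root process needs one of three sets of permission bits:
- the owner bits, if it owns the file;
- the group bits, if it belongs to the file's group;
- the "other" bits, otherwise.

The simplified Python model below assumes the usual root:docker 0660 ownership. The docker group GID `999` is a made-up example, since it differs from host to host. Real kernels also consider ACLs and capabilities, which this sketch ignores.

```python
import stat
from typing import Iterable

# Usual ownership of /var/run/docker.sock: root:docker, mode 0660 (srw-rw----)
DOCKER_SOCK_MODE = stat.S_IFSOCK | 0o660
ROOT_UID = 0
DOCKER_GID = 999  # hypothetical; the docker group's GID varies per host


def can_read_write(mode: int, owner_uid: int, owner_gid: int,
                   uid: int, gids: Iterable[int]) -> bool:
    """Simplified POSIX check: may a process (uid, gids) read and write the file?"""
    if uid == 0:
        return True  # root bypasses the permission bits
    if uid == owner_uid:
        bits = (mode & stat.S_IRUSR, mode & stat.S_IWUSR)
    elif owner_gid in set(gids):
        bits = (mode & stat.S_IRGRP, mode & stat.S_IWGRP)
    else:
        bits = (mode & stat.S_IROTH, mode & stat.S_IWOTH)
    return all(bits)


if __name__ == "__main__":
    jenkins_uid = 10000  # the jenkins user in the official agent image
    print(can_read_write(DOCKER_SOCK_MODE, ROOT_UID, DOCKER_GID, jenkins_uid, [10000]))  # False
    print(can_read_write(DOCKER_SOCK_MODE, ROOT_UID, DOCKER_GID, 0, [0]))                # True
```

The `chmod 777` fix works because it opens the "other" bits to everyone. The group branch is why some setups instead give the agent user a supplementary group whose GID matches the host's docker group.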

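I went with the third option. The second option is actually the safest, but my pipeline uses several Docker plugin steps for docker build, docker login and so on, and I don't know how to make those steps run through sudo. The Dockerfile for the custom Jenkins slave image can look like this: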

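```dockerfile
FROM 10.83.74.102/jenkins/jnlp:v2
LABEL maintainer="Yang Gao"

# Run the agent as root so it can use the mounted /var/run/docker.sock (option 3 above)
USER root

# ADD copies a directory's contents, so name the target directory explicitly
ADD jdk /root/jdk
ADD maven /root/maven

ENV JAVA_HOME /root/jdk/
ENV MAVEN_HOME /root/maven/
ENV PATH $PATH:$JAVA_HOME/bin:$MAVEN_HOME/bin

# Install sudo and the Docker client.
# Note: apt.dockerproject.org and the sks-keyservers pool have since been shut down;
# on a current base image, install docker-ce-cli from download.docker.com instead.
RUN echo "deb http://apt.dockerproject.org/repo debian-jessie main" > /etc/apt/sources.list.d/docker.list \
 && apt-key adv --keyserver hkp://p80.pool.sks-keyservers.net:80 --recv-keys 58118E89F3A912897C070ADBF76221572C52609D \
 && apt-get update \
 && apt-get install -y apt-transport-https sudo docker-engine \
 && rm -rf /var/lib/apt/lists/*

# Option 2 above: let the jenkins user run any command through sudo without a password
RUN echo "jenkins ALL=NOPASSWD: ALL" >> /etc/sudoers

# Install docker-compose
RUN curl -L https://github.com/docker/compose/releases/download/1.8.0/docker-compose-`uname -s`-`uname -m` > /usr/local/bin/docker-compose \
 && chmod +x /usr/local/bin/docker-compose
```

Then build the image with docker build: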

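```shell
# The trailing "." is the build context (the directory containing the Dockerfile, jdk and maven)
docker build -t harbor.k8s.maimaiti.site/system/jenkins-jnlp:v11 .
```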

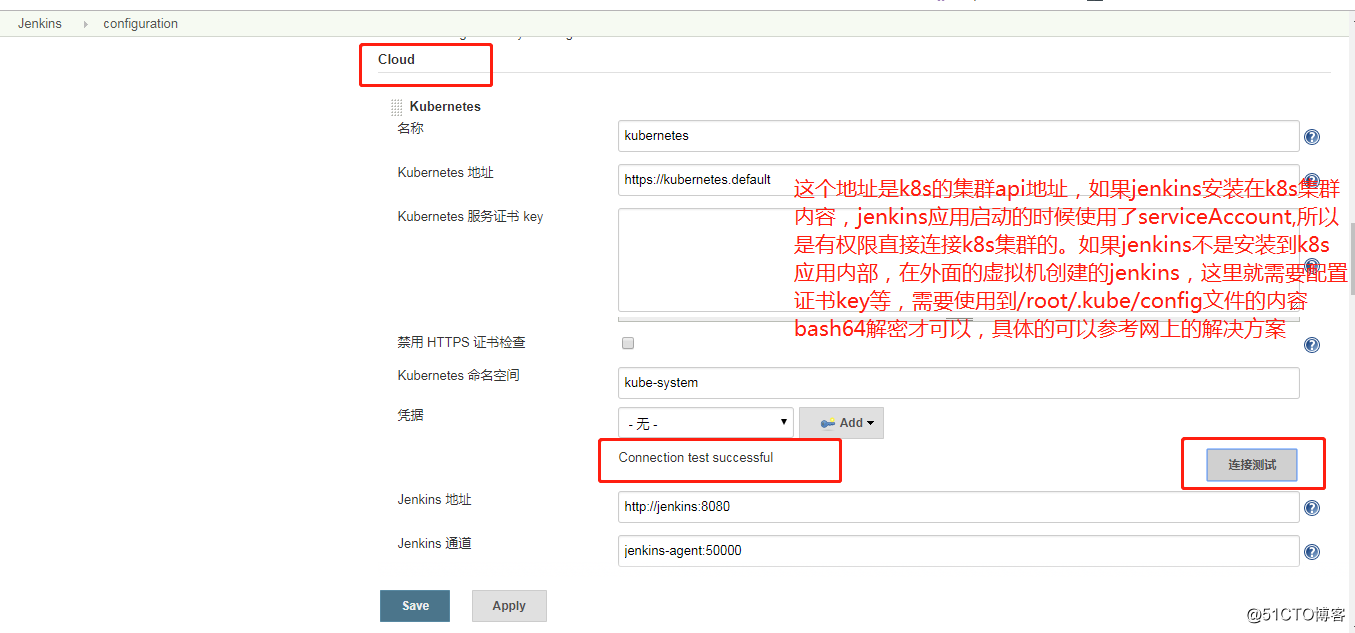

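```shell
docker push harbor.k8s.maimaiti.site/system/jenkins-jnlp:v11
```

- The Jenkins slave pod template can be configured in two places:
  - in the chart's values.yaml, which covers the slave image version, the mounts, and so on;
  - in the cloud configuration of the Jenkins UI, available once the kubernetes plugin is installed, which holds its own slave pod template.

  The catch is that changing these parameters with `helm upgrade`, for example the slave version, has no effect, because Jenkins keeps using the configuration from its management UI. After `helm install`, the chart has already installed the kubernetes plugin. On your first login, go to Manage Jenkins ---> Configure System and you will find the cloud configuration, with a slave pod template identical to the one in values.yaml. A later `helm upgrade jenkins` does not update that slave configuration in the UI, which is why your changes to the Jenkins slave never seem to take effect.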

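Having covered the pitfalls I ran into with Jenkins on Kubernetes, let's look at which Jenkins settings a k8s application release actually needs. Below is one of our company's internal projects, with some details anonymized. Focus on the flow and the architecture; the exact configuration will differ from company to company.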

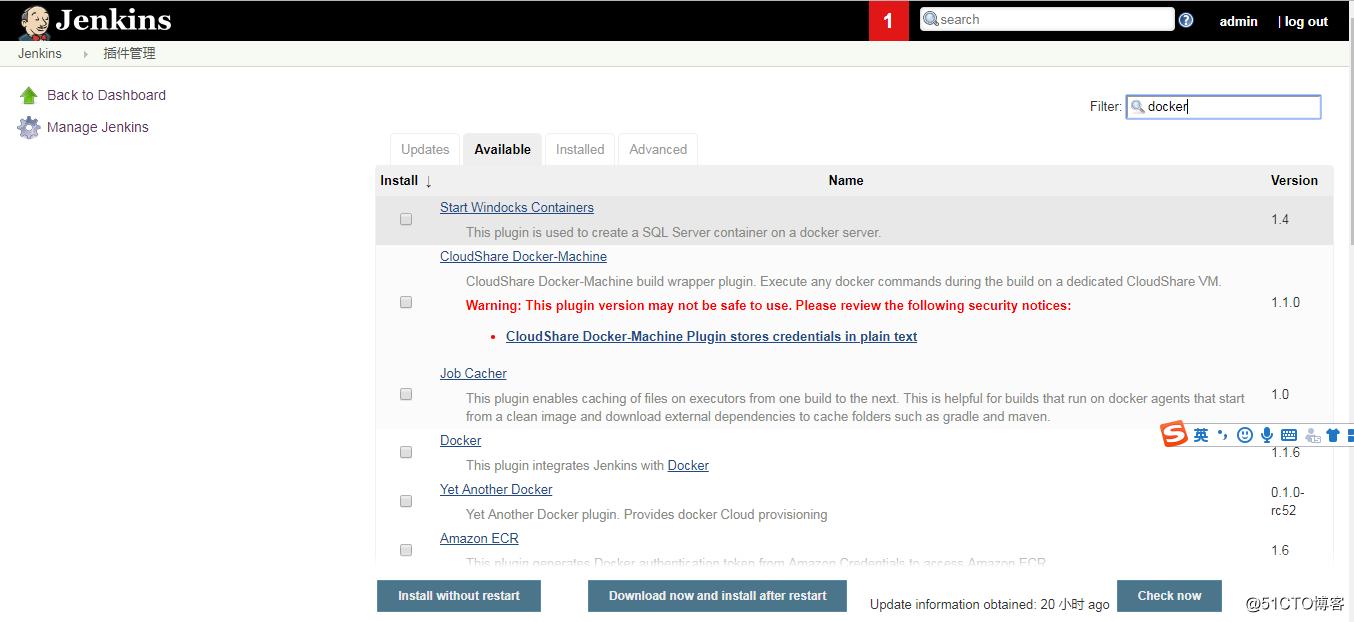

- Log in to Jenkins and install the plugins: Manage Jenkins ---> Manage Plugins ---> Available, then install the docker and kubernetes plugins, among others.

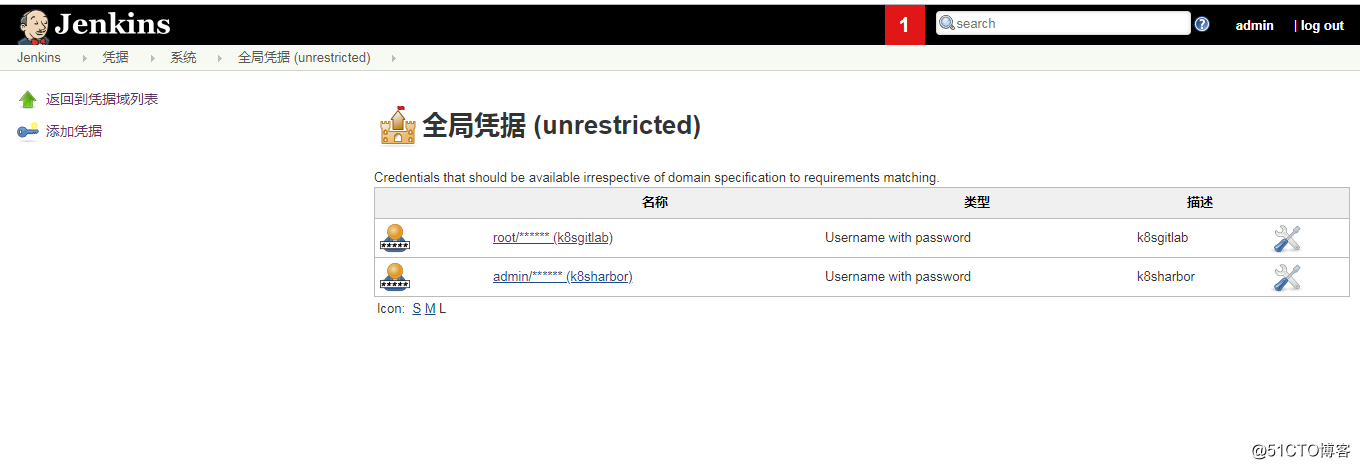

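- Configure credentials: the GitLab and Harbor usernames and passwords, which the pipeline uses later (it refers to them by the IDs `k8sgitlab` and `k8sharbor`).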

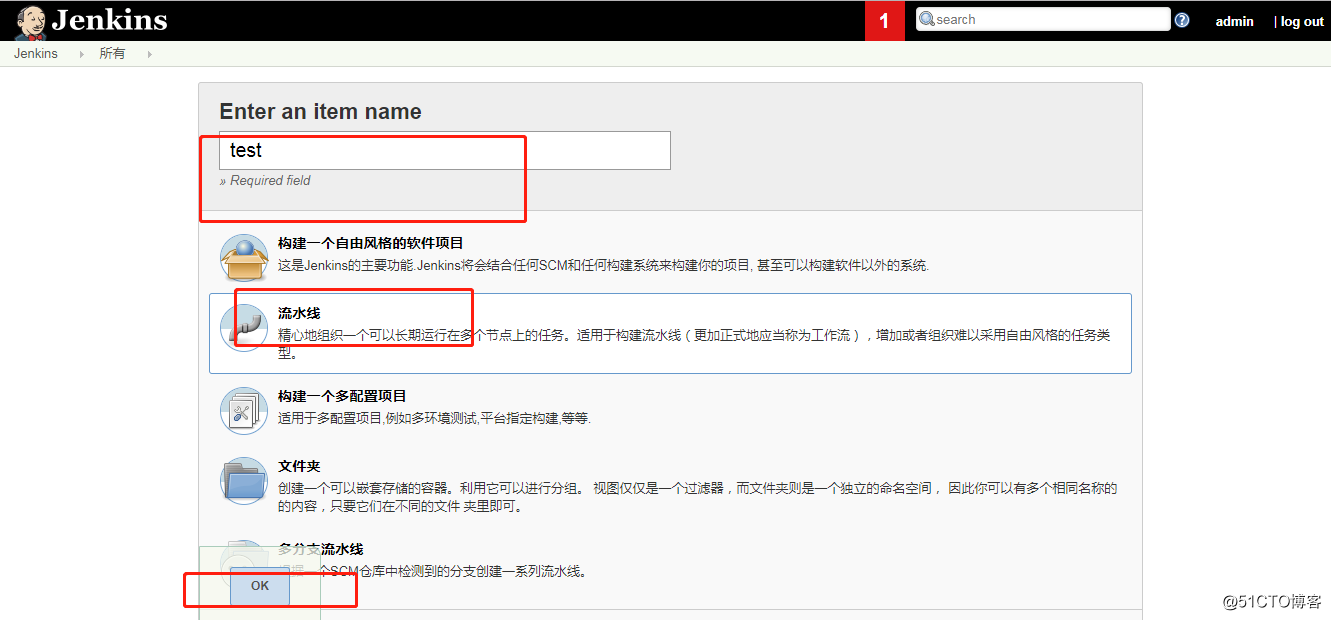

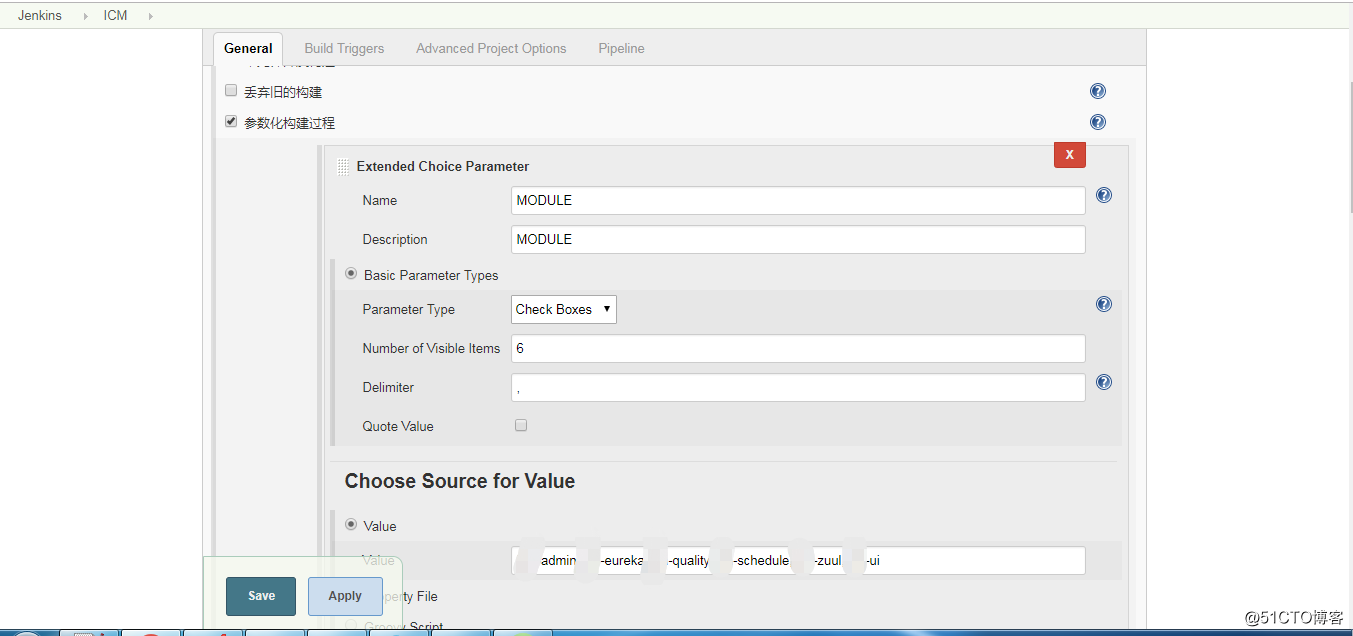

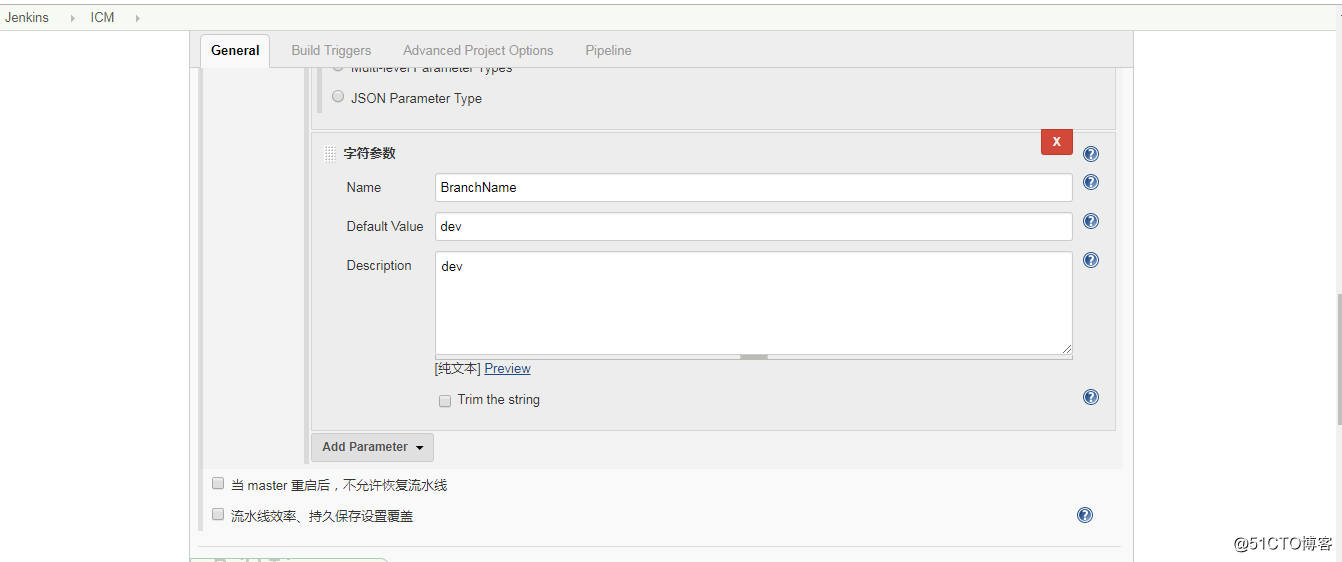

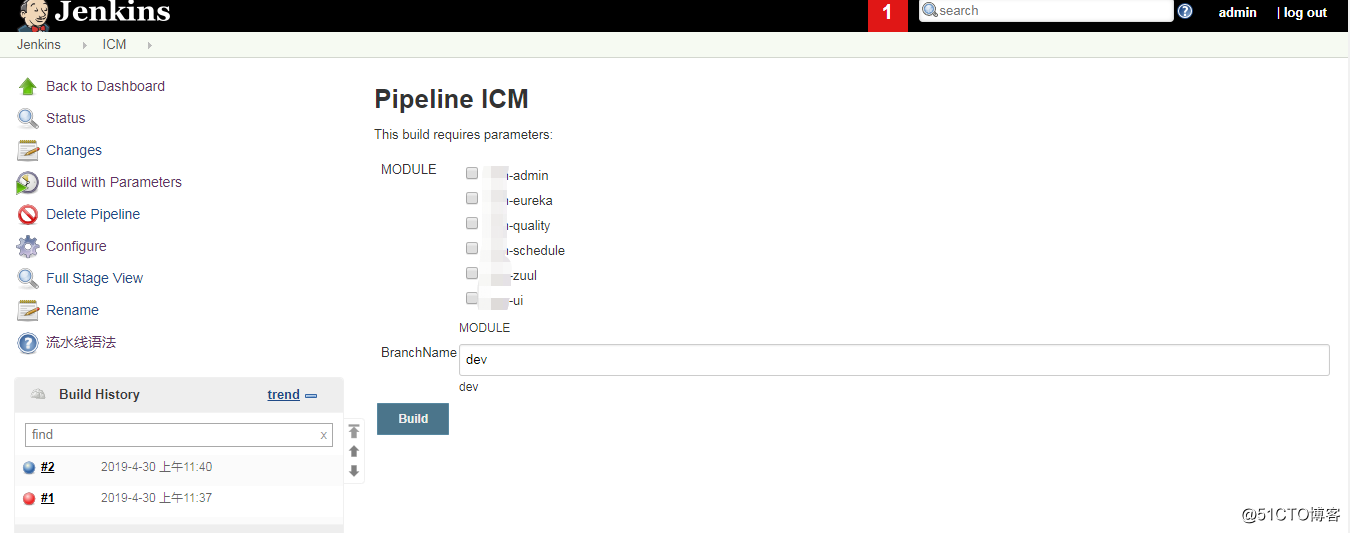

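- Create a pipeline job and configure the following:

- a parameter for the application module(s) to release (`MODULE` in the pipeline);

- a parameter for the branch name of the version to release (`BranchName` in the pipeline).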

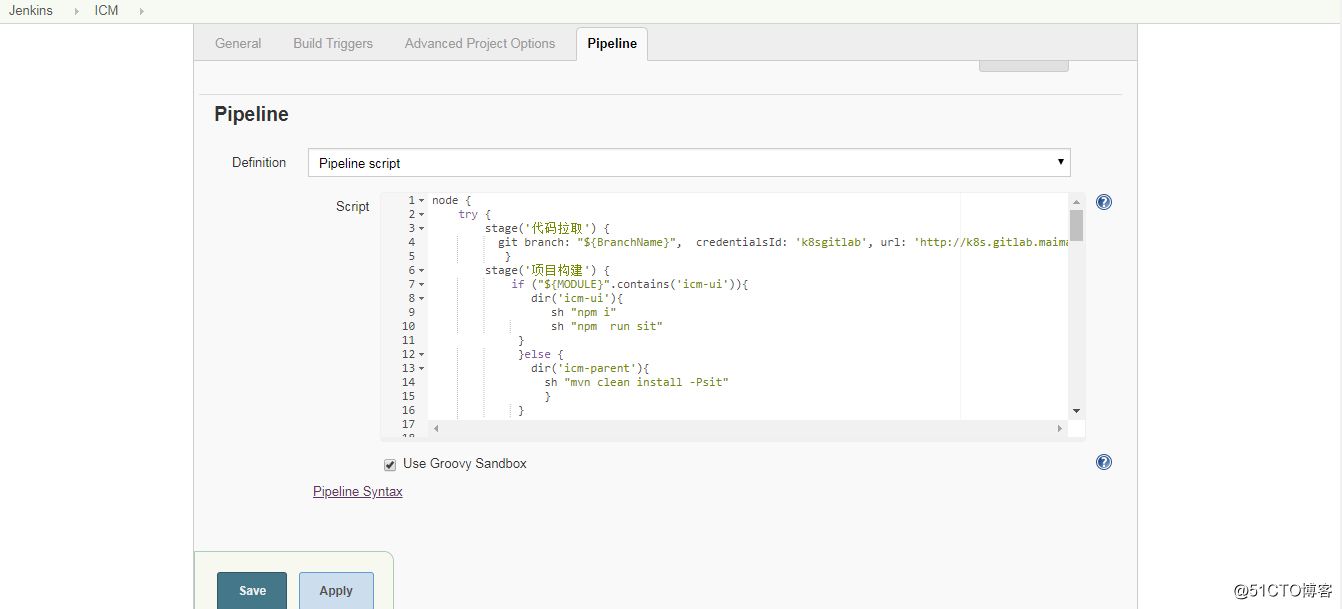

- the pipeline script.

Pipeline script for the k8s application:

```groovy
// MODULE and BranchName are the job parameters configured above
node {
    try {
        stage('Checkout code') {
            git branch: "${BranchName}", credentialsId: 'k8sgitlab', url: 'http://k8s.gitlab.test.site/root/test.git'
        }

        stage('Build project') {
            if ("${MODULE}".contains('test-ui')) {
                dir('test-ui') {
                    sh "npm i"
                    sh "npm run sit"
                }
            } else {
                dir('test-parent') {
                    sh "mvn clean install -Psit"
                }
            }
        }

        def regPrefix = 'k8s.harbor.test.site/test/'

        stage('Build images') {
            // Log in to Harbor with the 'k8sharbor' credentials for the pushes below
            docker.withRegistry('http://k8s.harbor.test.site/', 'k8sharbor') {
                if ("${MODULE}".contains('test-admin')) {
                    dir('test-parent/test-admin/target') {
                        sh "cp ../Dockerfile . && cp -rf ../BOOT-INF ./ && cp -rf ../../pinpoint-agent ./"
                        sh "jar -uvf admin.jar BOOT-INF/classes/application.yml"
                        def imageName = docker.build("${regPrefix}admin:V1.0-${env.BUILD_ID}")
                        imageName.push("V1.0-${env.BUILD_ID}")
                        //imageName.push("latest")
                        sh "/usr/bin/docker rmi ${regPrefix}admin:V1.0-${env.BUILD_ID}"
                    }
                }
                if ("${MODULE}".contains('test-eureka')) {
                    dir('test-parent/test-eureka/target') {
                        sh "cp ../Dockerfile . && cp -rf ../BOOT-INF ./ && cp -rf ../../pinpoint-agent ./"
                        sh "jar -uvf testEurekaServer.jar BOOT-INF/classes/application.yml"
                        def imageName = docker.build("${regPrefix}eureka:V1.0-${env.BUILD_ID}")
                        imageName.push("V1.0-${env.BUILD_ID}")
                        //imageName.push("latest")
                        sh "/usr/bin/docker rmi ${regPrefix}eureka:V1.0-${env.BUILD_ID}"
                    }
                }
                if ("${MODULE}".contains('test-quality')) {
                    dir('test-parent/test-quality/target') {
                        sh "cp ../Dockerfile . && cp -rf ../BOOT-INF ./ && cp -rf ../../pinpoint-agent ./"
                        sh "jar -uvf quality.jar BOOT-INF/classes/application.yml"
                        def imageName = docker.build("${regPrefix}quality:V1.0-${env.BUILD_ID}")
                        imageName.push("V1.0-${env.BUILD_ID}")
                        //imageName.push("latest")
                        sh "/usr/bin/docker rmi ${regPrefix}quality:V1.0-${env.BUILD_ID}"
                    }
                }
                if ("${MODULE}".contains('test-schedule')) {
                    dir('test-parent/test-schedule/target') {
                        sh "cp ../Dockerfile . && cp -rf ../BOOT-INF ./ && cp -rf ../../pinpoint-agent ./"
                        sh "jar -uvf schedule.jar BOOT-INF/classes/application.yml"
                        def imageName = docker.build("${regPrefix}schedule:V1.0-${env.BUILD_ID}")
                        imageName.push("V1.0-${env.BUILD_ID}")
                        //imageName.push("latest")
                        sh "/usr/bin/docker rmi ${regPrefix}schedule:V1.0-${env.BUILD_ID}"
                    }
                }
                if ("${MODULE}".contains('test-zuul')) {
                    dir('test-parent/test-zuul/target') {
                        sh "cp ../Dockerfile . && cp -rf ../BOOT-INF ./ && cp -rf ../../pinpoint-agent ./"
                        sh "jar -uvf test-api.jar BOOT-INF/classes/application.yml"
                        def imageName = docker.build("${regPrefix}zuul:V1.0-${env.BUILD_ID}")
                        imageName.push("V1.0-${env.BUILD_ID}")
                        //imageName.push("latest")
                        sh "/usr/bin/docker rmi ${regPrefix}zuul:V1.0-${env.BUILD_ID}"
                    }
                }
            }
        }

        stage('Restart application') {
            // Point the manifest at the freshly pushed tag, then apply it
            if ("${MODULE}".contains('test-admin')) {
                sh "sed -i 's/latest/V1.0-${env.BUILD_ID}/g' test-parent/DockerCompose/test-admin.yml"
                sh "/usr/local/bin/kubectl --kubeconfig=test-parent/DockerCompose/config apply -f test-parent/DockerCompose/test-admin.yml --record"
            }
            if ("${MODULE}".contains('test-eureka')) {
                sh "sed -i 's/latest/V1.0-${env.BUILD_ID}/g' test-parent/DockerCompose/test-eureka.yml"
                sh "/usr/local/bin/kubectl apply -f test-parent/DockerCompose/test-eureka.yml --record"
            }
            if ("${MODULE}".contains('test-quality')) {
                sh "sed -i 's/latest/V1.0-${env.BUILD_ID}/g' test-parent/DockerCompose/test-quality.yml"
                sh "/usr/local/bin/kubectl apply -f test-parent/DockerCompose/test-quality.yml --record"
            }
            if ("${MODULE}".contains('test-schedule')) {
                sh "sed -i 's/latest/V1.0-${env.BUILD_ID}/g' test-parent/DockerCompose/test-schedule.yml"
                sh "/usr/local/bin/kubectl apply -f test-parent/DockerCompose/test-schedule.yml --record"
            }
            if ("${MODULE}".contains('test-zuul')) {
                sh "sed -i 's/latest/V1.0-${env.BUILD_ID}/g' test-parent/DockerCompose/test-zuul.yml"
                sh "/usr/local/bin/kubectl apply -f test-parent/DockerCompose/test-zuul.yml --record"
            }
        }
    } catch (any) {
        currentBuild.result = 'FAILURE'
        throw any
    }
}
```
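The five image-building blocks differ only in the module directory, the jar name and the image name. Also, the restart stage uses `sed 's/latest/.../g'`, which rewrites every occurrence of `latest` in the manifest, not just the image tag. The Python sketch below shows a table-driven alternative, using the module, jar and image names from the pipeline above. `image_ref`, `pin_image_tag` and `selected_modules` are hypothetical helpers. The regex only rewrites `image:` lines that reference the given repository.

```python
import re
from typing import Dict, List, NamedTuple

REG_PREFIX = "k8s.harbor.test.site/test/"


class Module(NamedTuple):
    directory: str  # build directory containing the jar
    jar: str        # jar updated with application.yml
    image: str      # image name under REG_PREFIX


# Values copied from the pipeline's image-building stage
MODULES: Dict[str, Module] = {
    "test-admin": Module("test-parent/test-admin/target", "admin.jar", "admin"),
    "test-eureka": Module("test-parent/test-eureka/target", "testEurekaServer.jar", "eureka"),
    "test-quality": Module("test-parent/test-quality/target", "quality.jar", "quality"),
    "test-schedule": Module("test-parent/test-schedule/target", "schedule.jar", "schedule"),
    "test-zuul": Module("test-parent/test-zuul/target", "test-api.jar", "zuul"),
}


def selected_modules(module_param: str) -> List[str]:
    """Modules whose name appears in the MODULE parameter (same test as .contains())."""
    return [name for name in MODULES if name in module_param]


def image_ref(module: str, build_id: int) -> str:
    """Image reference for a module, e.g. <prefix>admin:V1.0-<BUILD_ID>."""
    return f"{REG_PREFIX}{MODULES[module].image}:V1.0-{build_id}"


def pin_image_tag(manifest: str, repository: str, tag: str) -> str:
    """Replace the tag only on `image: <repository>:<tag>` lines, leaving other text alone."""
    pattern = re.compile(
        r"^([ \t]*(?:-[ \t]+)?image:[ \t]*" + re.escape(repository) + r"):\S+[ \t]*$",
        re.MULTILINE,
    )
    return pattern.sub(lambda m: m.group(1) + ":" + tag, manifest)


if __name__ == "__main__":
    for name in selected_modules("test-admin,test-zuul"):
        print(name, image_ref(name, 42))
```

Because the image-building steps are identical, only this table needs to change when a module is added. In the pipeline itself, the same idea would be a Groovy map iterated inside one stage.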

The Dockerfile looks roughly like this:

```dockerfile
FROM 10.10.10.10/library/java:8-jdk-alpine
ENV TZ=Asia/Shanghai
VOLUME /tmp
ADD admin.jar /app/test/admin.jar
# Copy the agent to the same path that the -javaagent flag below points at
ADD skywalking-agent/ /app/test/skywalking-agent
ENTRYPOINT ["java","-javaagent:/app/test/skywalking-agent/skywalking-agent.jar","-Djava.security.egd=file:/dev/./urandom","-XX:+UnlockExperimentalVMOptions","-XX:+UseCGroupMemoryLimitForHeap","-jar","/app/test/admin.jar"]
```

The YAML manifest looks roughly like this:

```yaml
# The original used apps/v1beta2, which was removed in Kubernetes 1.16; apps/v1 accepts the same spec
apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-admin
  namespace: sit
  labels:
    k8s-app: test-admin
spec:
  replicas: 3
  revisionHistoryLimit: 3
  # During a rolling update, a new pod counts as available once it has been ready for 70s
  minReadySeconds: 70
  strategy:
    ## With replicas at 3, the pod count stays between 2 and 4 during the whole rollout
    rollingUpdate:
      # Start at most 1 pod above the desired count during the rollout
      maxSurge: 1
      # Maximum number of pods allowed to be unavailable during the rollout
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: test-admin
  template:
    metadata:
      labels:
        k8s-app: test-admin
    spec:
      containers:
      - name: test-admin
        image: k8s.harbor.test.site/test/admin:latest
        resources:
          # need more cpu upon initialization, therefore burstable class
          #limits:
          #  memory: 1024Mi
          #  cpu: 200m
          #requests:
          #  cpu: 100m
          #  memory: 256Mi
        ports:
        # Container port
        - containerPort: 8281
          #name: ui
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /admin/health
            port: 8281
            scheme: HTTP
          initialDelaySeconds: 180
          timeoutSeconds: 5
          periodSeconds: 15
          successThreshold: 1
          failureThreshold: 2
        #volumeMounts:
        #- mountPath: "/download"
        #  name: data
      #volumes:
      #- name: data
      #  persistentVolumeClaim:
      #    claimName: download-pvc
---
apiVersion: v1
kind: Service
metadata:
  name: test-admin
  namespace: sit
  labels:
    k8s-app: test-admin
spec:
  type: NodePort
  ports:
  # Port on the cluster IP
  - port: 8281
    protocol: TCP
    # Container port
    targetPort: 8281
    #nodePort: 28281
  selector:
    k8s-app: test-admin
```
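The "between 2 and 4 pods" comment follows from how Kubernetes resolves the rolling-update fenceposts:
- at most `replicas + maxSurge` pods exist in total;
- at least `replicas - maxUnavailable` pods stay available;
- a percentage `maxSurge` rounds up, and a percentage `maxUnavailable` rounds down;
- if both resolve to 0, the controller uses 1 unavailable pod.

A small Python sketch of that arithmetic; `rollout_bounds` is a hypothetical helper:

```python
from typing import Tuple, Union

IntOrPercent = Union[int, str]


def _scale(value: IntOrPercent, replicas: int, round_up: bool) -> int:
    """Resolve an int-or-percentage field (e.g. 1 or "25%") against the replica count."""
    if isinstance(value, int):
        return value
    scaled = int(value.rstrip("%")) * replicas
    return -(-scaled // 100) if round_up else scaled // 100  # integer ceil / floor


def rollout_bounds(replicas: int, max_surge: IntOrPercent,
                   max_unavailable: IntOrPercent) -> Tuple[int, int]:
    """Return (minimum available pods, maximum total pods) during a RollingUpdate."""
    surge = _scale(max_surge, replicas, round_up=True)
    unavailable = _scale(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        unavailable = 1  # fallback so the rollout can still make progress
    return replicas - unavailable, replicas + surge


if __name__ == "__main__":
    print(rollout_bounds(3, 1, 1))          # (2, 4), as in the manifest above
    print(rollout_bounds(3, "25%", "25%"))  # (3, 4): 0.75 rounds up to 1 surge, down to 0 unavailable
```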
