Spark 2.4.5 Cluster Installation and Local Development

阿新 • Published: 2020-05-15

# Download

Official download page: https://www.apache.org/dyn/closer.lua/spark/spark-2.4.5/spark-2.4.5-bin-hadoop2.7.tgz

## Check whether Java is installed

```
java -version
```

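[JDK download page](https://www.oracle.com/java/technologies/javase-jdk14-downloads.html)

Extract and install it. Note that the Spark 2.4.5 documentation lists Java 8 as its supported runtime, so fall back to a JDK 8 build if JDK 14 causes problems.

```
tar -zxvf jdk-14.0.1_linux-x64_bin.tar.gz
mv jdk-14.0.1 /usr/local/java
```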

## Check whether Scala is installed

```
scala -version
# If Scala is missing, download and unpack it:
wget https://downloads.lightbend.com/scala/2.13.1/scala-2.13.1.tgz
tar xvf scala-2.13.1.tgz
mv scala-2.13.1 /usr/local/
```

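- Set the JDK and Scala environment variables

```
vi /etc/profile
# Append the following lines to /etc/profile:
export JAVA_HOME=/usr/local/java
export SCALA_HOME=/usr/local/scala-2.13.1
export SPARK_HOME=/usr/local/spark
export CLASSPATH=$JAVA_HOME/jre/lib/ext:$JAVA_HOME/lib/tools.jar
export PATH=$JAVA_HOME/bin:$SCALA_HOME/bin:$PATH:$SPARK_HOME/bin
# Then reload the profile:
source /etc/profile
```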

- Verify again that both are installed

```
scala -version
java -version
```
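The two version checks above can also be scripted. The following is a minimal sketch, assuming a POSIX shell; `check_tool` is a hypothetical helper name introduced here for illustration and is not part of Java, Scala, or Spark.

```shell
# check_tool (hypothetical helper): report whether a command is on PATH.
check_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "$1: found at $(command -v "$1")"
  else
    echo "$1: not found on PATH"
    return 1
  fi
}

# Check the two tools this guide relies on; "|| true" keeps the loop going
# even when one of them is missing.
for tool in java scala; do
  check_tool "$tool" || true
done
```

If either tool is reported as missing, re-check the `PATH` line in `/etc/profile` and run `source /etc/profile` again.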

## Install Spark

1. Extract the archive and move it to the target directory

```
tar -zxvf spark-2.4.5-bin-hadoop2.7.tgz
mv spark-2.4.5-bin-hadoop2.7 /usr/local/spark
```

2. Set the Spark environment variables

```
vi /etc/profile
# Append the following line to /etc/profile:
export PATH=$PATH:/usr/local/spark/bin
```

Save the file and reload it:

```
source /etc/profile
```

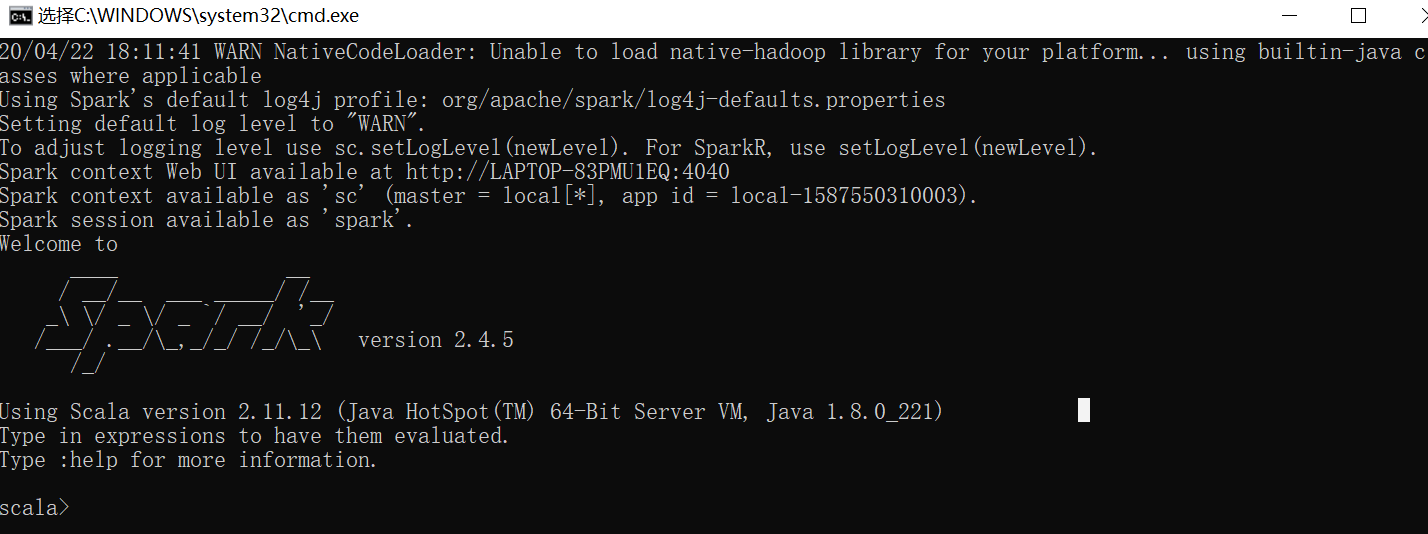

3. Verify the Spark shell

```
spark-shell
```

If the Spark welcome banner and a `scala>` prompt appear, the installation succeeded.
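For a non-interactive check, one option is to pipe a single Scala statement into `spark-shell` and look for a marker in its output. This is only a sketch: `smoke_test_spark_shell` and the `SMOKE_RESULT` marker are names invented for this example, and it assumes `spark-shell` is on `PATH` as configured above.

```shell
# smoke_test_spark_shell (hypothetical helper, not part of Spark):
# runs one tiny job through spark-shell and prints only the marker.
smoke_test_spark_shell() {
  if ! command -v spark-shell >/dev/null 2>&1; then
    echo "spark-shell not found on PATH"
    return 1
  fi
  # sc is the SparkContext that spark-shell creates; counting the numbers
  # 1..100 forces a small job to run. Log output on stderr is discarded.
  echo 'println("SMOKE_RESULT=" + sc.parallelize(1 to 100).count())' \
    | spark-shell 2>/dev/null \
    | grep -o 'SMOKE_RESULT=[0-9][0-9]*'
}
```

Calling `smoke_test_spark_shell` on a working installation should print the marker followed by the element count, and it returns a non-zero status if the marker never appears.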

# Configure the Spark master node

Spark ships a template for each of its configuration files, so we copy the one we need:

```
cd /usr/local/spark/conf/
cp spark-env.sh.template spark-env.sh
vi spark-env.sh
```

- Set the IP of the master node

```
SPARK_MASTER_HOST='192.168.56.109'
JAVA_HOME=/usr/local/java
```

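- To start only the master on a single machine

```
cd /usr/local/spark
./sbin/start-master.sh
```

- Open http://192.168.56.109:8080/

If the Spark Master web UI loads, the master started successfully.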
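On a server without a browser, the same check can be approximated with `curl`. The sketch below assumes `curl` is installed; `master_ui_status` is a hypothetical helper name, and the default port 8080 is the one used in the URL above.

```shell
# master_ui_status (hypothetical helper): print the HTTP status code
# returned by the master web UI. 200 suggests the UI is up; curl prints
# 000 when it cannot connect at all.
master_ui_status() {
  # $1 = master host or IP, $2 = web UI port (defaults to 8080)
  curl -s -o /dev/null --max-time 5 -w '%{http_code}' "http://$1:${2:-8080}/"
}
```

For the master in this guide, the call would be `master_ui_status 192.168.56.109`.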

- Stop the master

```
./sbin/stop-master.sh
```

- Configure hosts by adding the following entries to `/etc/hosts`

```
192.168.56.109 master
192.168.56.110 slave01
192.168.56.111 slave02
```

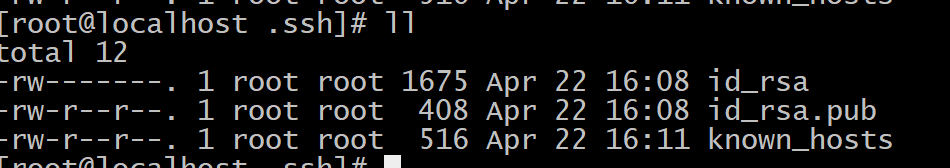

# Passwordless SSH login

Run on the master:

```
ssh-keygen -t rsa -P ""
```

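This creates the key files, including `id_rsa` and `id_rsa.pub`, under `~/.ssh`.

Copy `id_rsa.pub` to each slave (one `scp` per slave host). Note that `authorized_keys` is simply `id_rsa.pub` under a different name: on the slave machines the file is saved as `authorized_keys`.

```
cd ~/.ssh
scp -r id_rsa.pub [email protected]:/root/.ssh/authorized_keys
scp -r id_rsa.pub [email protected]:/root/.ssh/authorized_keys
# Also authorize the key on the master itself
cp id_rsa.pub authorized_keys
```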

On each slave machine:

```
chmod 700 .ssh
```

- Check that you can log in to slave01 and slave02 without a password

```
ssh slave01
ssh slave02
```
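The key distribution and login check can also be sketched with standard OpenSSH tools. This is an alternative to the `scp` approach, not the author's method: `ssh-copy-id` appends the key to the remote `authorized_keys` instead of overwriting it. The helper names `copy_key` and `can_login` are hypothetical, and the `root` user and the `slave01`/`slave02` host names are assumptions taken from the `/root/.ssh` path and the hosts entries above.

```shell
# copy_key (hypothetical helper): install the master's public key on a host.
# ssh-copy-id appends to the remote authorized_keys and asks for the
# password once.
copy_key() {
  ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" "root@$1"
}

# can_login (hypothetical helper): succeed only if key-based login works.
# BatchMode=yes makes ssh fail instead of prompting for a password.
can_login() {
  ssh -o BatchMode=yes -o ConnectTimeout=5 "root@$1" true
}

# Example usage (assumed host names):
#   for h in slave01 slave02; do copy_key "$h"; done
#   for h in slave01 slave02; do can_login "$h" && echo "$h: ok"; done
```

A non-zero status from `can_login` usually points at a missing key or overly permissive modes on the remote `.ssh` directory.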

# Configure the worker nodes on the master and slaves

```
cd /usr/local/spark/conf
cp slaves.template slaves
```

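Add the two slaves. Note: do not list master in the `slaves` file, otherwise the master also becomes a slave (worker) node. For the same reason, remove the `localhost` line that comes from the template.

```
vi slaves
# Contents of the slaves file:
slave01
slave02
```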

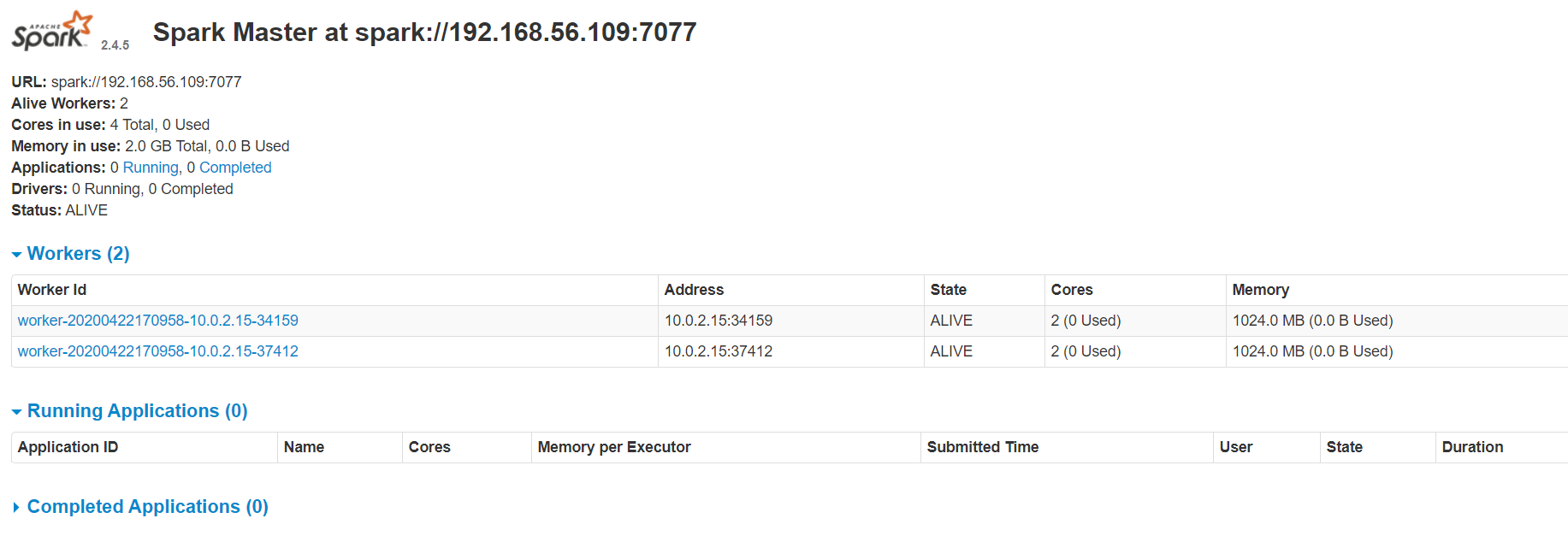
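Instead of editing the file by hand, the worker list can be written in one step. A minimal sketch, assuming the conf directory and host names used above; `write_workers_file` is a hypothetical helper name, and it overwrites any existing `slaves` file, including the copied template.

```shell
# write_workers_file (hypothetical helper): write one hostname per line
# to <conf_dir>/slaves, replacing the file's previous contents.
write_workers_file() {
  conf_dir="$1"
  shift
  printf '%s\n' "$@" > "${conf_dir}/slaves"
}

# Example usage with the paths from this guide:
#   write_workers_file /usr/local/spark/conf slave01 slave02
```

Because the file is rewritten from scratch, the master is never listed unless you pass it explicitly.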

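# Start the cluster from the master node

```
cd /usr/local/spark
./sbin/start-all.sh
```

If a `JAVA_HOME is not set` error appears, add `JAVA_HOME=/usr/local/java` to `spark-env.sh` in the configuration directory on the slave nodes.

If startup succeeds, http://192.168.56.109:8080/ shows two workers.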
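To confirm that the workers actually accept jobs, one option is to run the bundled SparkPi example against the cluster. The sketch below makes several assumptions: `run_spark_pi` is a hypothetical helper name, the master listens on Spark's default port 7077, and `run-example` forwards the `--master` option to `spark-submit`.

```shell
# run_spark_pi (hypothetical helper): run the SparkPi example on the
# standalone cluster and print only its result line.
run_spark_pi() {
  spark_home="${SPARK_HOME:-/usr/local/spark}"
  # Assumed master URL: the IP from this guide plus the default port 7077.
  master_url="${1:-spark://192.168.56.109:7077}"
  "${spark_home}/bin/run-example" --master "$master_url" SparkPi 10 2>/dev/null \
    | grep 'Pi is roughly'
}
```

While the job runs, it should also show up under running applications in the master web UI.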

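# Local development

Extract the same spark-2.4.5-bin-hadoop2.7 archive on your local Windows machine, go to the `bin` directory, and double-click `spark-shell.cmd`. It will most likely fail with:

```
Could not locate executable null\bin\winutils.exe in the Hadoop binaries.
```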

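The error occurs because the Hadoop executables for Windows are missing. Since there is no local Hadoop environment, winutils can stand in for it; there is no need to actually set up Hadoop.

You can [download it here](https://github.com/steveloughran/winutils/blob/master/hadoop-2.7.1/bin/winutils.exe); pick another version from the same repository if you need one.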

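- Set the local environment variables: typically `HADOOP_HOME`, pointing at the directory whose `bin` folder contains `winutils.exe`

Restart `spark-shell.cmd`; if it starts without the winutils error, the setup succeeded.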

- In IDEA, add the required environment variables (such as `HADOOP_HOME`) to the Run/Debug configuration

- IDEA also needs the Scala plugin installed; after that you can happily use `data.show()` to view tables