YOLOv4: Building Darknet in Docker and Training on a COCO Subset

阿新 • Published: 2020-09-11

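YOLO is a very well-known object detection algorithm. Its full name, You Only Look Once: Unified, Real-Time Object Detection, sums up its characteristics:

* Look Once: a one-stage (one-shot object detector) algorithm that performs both object detection tasks, classification and localization, in a single step.

* Unified: a single unified architecture that supports end-to-end training and prediction.

* Real-Time: the original paper reports 45 FPS at 63.4 mAP (on PASCAL VOC 2007).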

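YOLOv4: Optimal Speed and Accuracy of Object Detection, released in April 2020, adopts many of the best optimization techniques from recent CNN research. It balances accuracy and speed, and at the time of writing it is the most accurate real-time object detector.

Papers: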

* YOLO: https://arxiv.org/abs/1506.02640

* YOLO v4: https://arxiv.org/abs/2004.10934

Source code:

* YOLO: https://github.com/pjreddie/darknet

* YOLO v4: https://github.com/AlexeyAB/darknet

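This article shows how to build and use the official Darknet implementation of YOLOv4 in Docker, and how to select some object classes from the MS COCO 2017 dataset to train your own model.

Contents:

* Prepare the Docker images

* Prepare the COCO dataset

* Run inference with a pretrained model

* Prepare a COCO subset

* Train your own model and run inference

* References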

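## Prepare the Docker Images

First, set up Docker with GPU support; see [Docker: Nvidia Driver, Nvidia Docker recommended installation steps](https://mp.weixin.qq.com/s/fOjWV5TUAxRF5Mjj0Y0Dlw).

Next, build the images. From the bottom layer up, they are: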

* nvidia/cuda: https://hub.docker.com/r/nvidia/cuda

* OpenCV: https://github.com/opencv/opencv

* Darknet: https://github.com/AlexeyAB/darknet

### nvidia/cuda

Start with Nvidia's base CUDA image. We use CUDA 10.2 rather than the latest CUDA 11, because PyTorch and similar frameworks still target CUDA 10.2 at the moment.

Pull the image:

```bash
docker pull nvidia/cuda:10.2-cudnn7-devel-ubuntu18.04
```

Test the image:

```bash
$ docker run --gpus all nvidia/cuda:10.2-cudnn7-devel-ubuntu18.04 nvidia-smi
Sun Aug 8 00:00:00 2020
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 440.100      Driver Version: 440.100      CUDA Version: 10.2     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  GeForce RTX 208...  Off  | 00000000:07:00.0  On |                  N/A |
|  0%   48C    P8    14W / 300W |    340MiB / 11016MiB |      2%      Default |
+-------------------------------+----------------------+----------------------+
|   1  GeForce RTX 208...  Off  | 00000000:08:00.0 Off |                  N/A |
|  0%   45C    P8    19W / 300W |      1MiB / 11019MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID   Type   Process name                             Usage      |
|=============================================================================|
+-----------------------------------------------------------------------------+
```

### OpenCV

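Build the OpenCV image on top of nvidia/cuda. The `docker/...` paths are directories in the [start-yolov4](https://github.com/ikuokuo/start-yolov4) repository, which is cloned later in this article:

```bash
# From the root of the start-yolov4 repository
cd docker/ubuntu18.04-cuda10.2/opencv4.4.0/

docker build \
  -t joinaero/ubuntu18.04-cuda10.2:opencv4.4.0 \
  --build-arg opencv_ver=4.4.0 \
  --build-arg opencv_url=https://gitee.com/cubone/opencv.git \
  --build-arg opencv_contrib_url=https://gitee.com/cubone/opencv_contrib.git \
  .
```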

The Dockerfile is available here: https://github.com/ikuokuo/start-yolov4/blob/master/docker/ubuntu18.04-cuda10.2/opencv4.4.0/Dockerfile

### Darknet

Build the Darknet image on top of the OpenCV image:

```bash
# From the root of the start-yolov4 repository
cd docker/ubuntu18.04-cuda10.2/opencv4.4.0/darknet/

docker build \
  -t joinaero/ubuntu18.04-cuda10.2:opencv4.4.0-darknet \
  .
```

The Dockerfile is available here: https://github.com/ikuokuo/start-yolov4/blob/master/docker/ubuntu18.04-cuda10.2/opencv4.4.0/darknet/Dockerfile

The images above have been pushed to Docker Hub. If your Nvidia driver supports CUDA 10.2, you can pull the final image directly:

```bash
docker pull joinaero/ubuntu18.04-cuda10.2:opencv4.4.0-darknet
```
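Pulling the prebuilt image only helps if the host driver can run CUDA 10.2. A minimal host-side pre-check, assuming `nvidia-smi` is on the host's PATH and GNU `sort -V` is available: NVIDIA's CUDA 10.2 release notes list driver 440.33 as the Linux minimum, and `version_ge` is a hypothetical helper, not part of the start-yolov4 repository.

```bash
# Minimum Linux driver for CUDA 10.2 (per the CUDA 10.2.89 release notes)
MIN_DRIVER="440.33"

# version_ge A B (hypothetical helper): succeeds if version A >= version B,
# using GNU sort -V so that 440.100 sorts after 440.33
version_ge() {
  [ "$(printf '%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

if command -v nvidia-smi >/dev/null 2>&1; then
  # Driver version reported for the first GPU, e.g. 440.100
  driver=$(nvidia-smi --query-gpu=driver_version --format=csv,noheader | head -n 1)
  if version_ge "$driver" "$MIN_DRIVER"; then
    echo "Driver $driver can run CUDA 10.2 images"
  else
    echo "Driver $driver is older than $MIN_DRIVER; upgrade it first" >&2
  fi
fi
```

With the 440.100 driver from the `nvidia-smi` output above, the check passes.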

## Prepare the COCO Dataset

MS COCO 2017 download page: http://cocodataset.org/#download

Images:

* 2017 Train images [118K/18GB]
    * http://images.cocodataset.org/zips/train2017.zip
* 2017 Val images [5K/1GB]
    * http://images.cocodataset.org/zips/val2017.zip
* 2017 Test images [41K/6GB]
    * http://images.cocodataset.org/zips/test2017.zip
* 2017 Unlabeled images [123K/19GB]
    * http://images.cocodataset.org/zips/unlabeled2017.zip

Annotations:

* 2017 Train/Val annotations [241MB]
    * http://images.cocodataset.org/annotations/annotations_trainval2017.zip
* 2017 Stuff Train/Val annotations [1.1GB]
    * http://images.cocodataset.org/annotations/stuff_annotations_trainval2017.zip
* 2017 Panoptic Train/Val annotations [821MB]
    * http://images.cocodataset.org/annotations/panoptic_annotations_trainval2017.zip
* 2017 Testing Image info [1MB]
    * http://images.cocodataset.org/annotations/image_info_test2017.zip
* 2017 Unlabeled Image info [4MB]
    * http://images.cocodataset.org/annotations/image_info_unlabeled2017.zip
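Only part of this is needed here: the train and val images, the Train/Val annotations (they unpack to `annotations/instances_train2017.json` and `annotations/instances_val2017.json`, used when building the subset), and test2017 for the first inference example. A sketch, assuming `wget` and `unzip` are installed; `fetch_coco` and `COCO_FILES` are hypothetical names:

```bash
COCO_BASE_URL="http://images.cocodataset.org"
# Archives used in this article (test2017 only for the pretrained inference example)
COCO_FILES="zips/train2017.zip zips/val2017.zip zips/test2017.zip annotations/annotations_trainval2017.zip"

# fetch_coco DEST (hypothetical helper): download and unpack the archives into DEST
fetch_coco() {
  local dest=$1 f zip
  mkdir -p "$dest" || return 1
  for f in $COCO_FILES; do
    zip="$dest/${f##*/}"                              # e.g. DEST/train2017.zip
    wget -c -P "$dest" "$COCO_BASE_URL/$f" || return 1  # -c resumes a partial download
    unzip -q -n "$zip" -d "$dest" || return 1         # -n never overwrites extracted files
  done
}

# Example, into the directory used as COCO_DIR later on:
# fetch_coco "$HOME/Codes/devel/datasets/coco2017"
```

Unpacking everything into one directory yields the `train2017/`, `val2017/`, `test2017/` and `annotations/` layout that the later commands expect.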

## Run Inference with a Pretrained Model

Download the pretrained model yolov4.weights from https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v3_optimal/yolov4.weights

Run the container:

```bash
# Allow local Docker containers to open windows on the host X server (needed by -show)
xhost +local:docker

# Mount the COCO dataset and the model directory into the container
docker run -it --gpus all \
  -e DISPLAY \
  -e QT_X11_NO_MITSHM=1 \
  -v /tmp/.X11-unix:/tmp/.X11-unix \
  -v $HOME/.Xauthority:/root/.Xauthority \
  --name darknet \
  --mount type=bind,source=$HOME/Codes/devel/datasets/coco2017,target=/home/coco2017 \
  --mount type=bind,source=$HOME/Codes/devel/models/yolov4,target=/home/yolov4 \
  joinaero/ubuntu18.04-cuda10.2:opencv4.4.0-darknet
```

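Run inference inside the container:

```bash
# -thresh: confidence threshold; -ext_output: print box coordinates;
# -show: display the result window; -out: save the detections as JSON
./darknet detector test cfg/coco.data cfg/yolov4.cfg /home/yolov4/yolov4.weights \
  -thresh 0.25 -ext_output -show -out /home/coco2017/result.json \
  /home/coco2017/test2017/000000000001.jpg
```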

Inference result:

## Prepare a COCO Subset

MS COCO 2017 has 80 object categories. We pick the ones we care about and build a smaller dataset from them.

First, get the sample code:

```bash
git clone https://github.com/ikuokuo/start-yolov4.git
```

* scripts/coco2yolo.py: converts the COCO dataset into a YOLO dataset

* scripts/coco/label.py: lists the object labels in the COCO dataset

* cfg/coco/coco.names: edit it to keep only the object labels you want

Then build the dataset:

```bash
cd start-yolov4/
pip install -r scripts/requirements.txt

export COCO_DIR=$HOME/Codes/devel/datasets/coco2017

# train
# --output_img_prefix is the image path as seen inside the container
# (~/yolov4/coco2017 is mounted at /home/yolov4/coco2017 for training)
python scripts/coco2yolo.py \
  --coco_img_dir $COCO_DIR/train2017/ \
  --coco_ann_file $COCO_DIR/annotations/instances_train2017.json \
  --yolo_names_file ./cfg/coco/coco.names \
  --output_dir ~/yolov4/coco2017/ \
  --output_name train2017 \
  --output_img_prefix /home/yolov4/coco2017/train2017/

# valid
python scripts/coco2yolo.py \
  --coco_img_dir $COCO_DIR/val2017/ \
  --coco_ann_file $COCO_DIR/annotations/instances_val2017.json \
  --yolo_names_file ./cfg/coco/coco.names \
  --output_dir ~/yolov4/coco2017/ \
  --output_name val2017 \
  --output_img_prefix /home/yolov4/coco2017/val2017/
```
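Each label `.txt` produced here holds one object per line in Darknet's format, `<class_id> <x_center> <y_center> <width> <height>`, with coordinates normalized by the image width and height, whereas COCO stores `bbox` as `[x_min, y_min, width, height]` in pixels. The sketch below shows only that coordinate arithmetic with `awk`; it is not taken from `scripts/coco2yolo.py`, and it assumes the class id has already been remapped to the object's 0-based line index in `coco.names`. `coco_bbox_to_yolo` is a hypothetical name.

```bash
# coco_bbox_to_yolo W H (hypothetical helper): read "class x_min y_min w h"
# (COCO-style pixels) from stdin, print "class x_center y_center w h" in [0, 1]
coco_bbox_to_yolo() {
  awk -v W="$1" -v H="$2" '{
    printf "%d %.6f %.6f %.6f %.6f\n", $1, ($2 + $4 / 2) / W, ($3 + $5 / 2) / H, $4 / W, $5 / H
  }'
}

# A 200x100 px box whose top-left corner is (100, 50), in a 640x480 image:
# echo "0 100 50 200 100" | coco_bbox_to_yolo 640 480
# prints: 0 0.312500 0.208333 0.312500 0.208333
```

Normalizing by image size is what lets Darknet resize inputs to the `width`/`height` set in the cfg without rewriting the labels.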

The resulting dataset looks like this:

```txt
~/yolov4/coco2017/
├── train2017/
│   ├── 000000000071.jpg
│   ├── 000000000071.txt
│   ├── ...
│   ├── 000000581899.jpg
│   └── 000000581899.txt
├── train2017.txt
├── val2017/
│   ├── 000000001353.jpg
│   ├── 000000001353.txt
│   ├── ...
│   ├── 000000579818.jpg
│   └── 000000579818.txt
└── val2017.txt
```
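Darknet finds each image's label by replacing the image extension with `.txt`, so every path listed in `train2017.txt` and `val2017.txt` needs a matching label file. A hypothetical check, assuming (as the `--output_img_prefix` argument suggests) that the list files contain one image path per line written with the container prefix, which the function strips to locate the files on the host:

```bash
# check_yolo_list LIST HOST_DIR PREFIX (hypothetical helper)
# For each image path in LIST, replace PREFIX with HOST_DIR and check that the
# image and its .txt label exist. Prints missing files; returns 1 if any.
check_yolo_list() {
  local list=$1 host_dir=$2 prefix=$3 img host_img f missing=0
  while IFS= read -r img || [ -n "$img" ]; do
    [ -n "$img" ] || continue
    host_img="$host_dir/${img#"$prefix"}"   # container path -> host path
    for f in "$host_img" "${host_img%.*}.txt"; do
      if [ ! -f "$f" ]; then
        echo "missing: $f"
        missing=1
      fi
    done
  done < "$list"
  return "$missing"
}

# On the host:
# check_yolo_list ~/yolov4/coco2017/train2017.txt ~/yolov4/coco2017/train2017 /home/yolov4/coco2017/train2017/
# check_yolo_list ~/yolov4/coco2017/val2017.txt ~/yolov4/coco2017/val2017 /home/yolov4/coco2017/val2017/
```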

## Train Your Own Model and Run Inference

### Prepare the Required Files

* [cfg/coco/coco.names](../cfg/coco/coco.names) <[cfg/coco/coco.names.bak](../cfg/coco/coco.names.bak) has the original 80 objects>
    * Edit: keep only the desired objects
* [cfg/coco/yolov4.cfg](../cfg/coco/yolov4.cfg) <[cfg/coco/yolov4.cfg.bak](../cfg/coco/yolov4.cfg.bak) is the original file>
    * Download [yolov4.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov4.cfg), then change:
        * `batch`=64, `subdivisions`=32 <32 for 8-12 GB GPU-VRAM>
        * `width`=512, `height`=512 <any multiple of 32>
        * `classes`=<your number of objects, in each of the 3 [yolo] layers>
        * `max_batches`=<classes\*2000, but not less than the number of training images and not less than 6000>
        * `steps`=<max_batches\*0.8, max_batches\*0.9>
        * `filters`=<(classes+5)x3, in the [convolutional] layer right before each of the 3 [yolo] layers>
        * `filters`=<(classes+9)x3, in the [convolutional] layer right before each [Gaussian_yolo] layer>
* [cfg/coco/coco.data](../cfg/coco/coco.data)
    * Edit: point `train` and `valid` to the YOLO dataset lists (`train2017.txt`, `val2017.txt`)
* csdarknet53-omega.conv.105
    * Download [csdarknet53-omega_final.weights](https://drive.google.com/open?id=18jCwaL4SJ-jOvXrZNGHJ5yz44g9zi8Hm), then run:

```bash
# On the host: start a temporary container with the model directory mounted
docker run -it --rm --gpus all \
  --mount type=bind,source=$HOME/Codes/devel/models/yolov4,target=/home/yolov4 \
  joinaero/ubuntu18.04-cuda10.2:opencv4.4.0-darknet

# Inside the container: keep the first 105 layers as pretrained backbone weights
./darknet partial cfg/csdarknet53-omega.cfg /home/yolov4/csdarknet53-omega_final.weights /home/yolov4/csdarknet53-omega.conv.105 105
```
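The `yolov4.cfg` values listed above follow directly from the number of classes, so they can be computed instead of worked out by hand. The helpers below are hypothetical (not part of start-yolov4) and cover the `[yolo]` variant only. `write_coco_data` assumes the repository's `coco.data` uses the standard Darknet keys and the container paths mounted by the training `docker run` command below, so compare it with the file in the repository before relying on it.

```bash
# count_classes NAMES_FILE: number of non-empty lines, i.e. object classes
count_classes() {
  grep -c . "$1"
}

# yolo_cfg_values CLASSES TRAIN_IMAGES: max_batches, steps and filters
# following the rules listed above ([yolo] layers, not [Gaussian_yolo])
yolo_cfg_values() {
  local classes=$1 train_images=$2 max_batches filters
  max_batches=$((classes * 2000))
  if [ "$max_batches" -lt "$train_images" ]; then max_batches=$train_images; fi
  if [ "$max_batches" -lt 6000 ]; then max_batches=6000; fi
  filters=$(( (classes + 5) * 3 ))
  echo "classes=$classes max_batches=$max_batches steps=$((max_batches * 8 / 10)),$((max_batches * 9 / 10)) filters=$filters"
}

# write_coco_data OUT CLASSES: coco.data with assumed container paths
write_coco_data() {
  cat > "$1" <<EOF
classes = $2
train = /home/yolov4/coco2017/train2017.txt
valid = /home/yolov4/coco2017/val2017.txt
names = /home/cfg/coco.names
backup = /home/yolov4/coco2017/backup
EOF
}

# On the host, from start-yolov4/:
# n=$(count_classes cfg/coco/coco.names)
# yolo_cfg_values "$n" "$(wc -l < ~/yolov4/coco2017/train2017.txt)"
```

For a single class with at most 6000 training images, this gives `max_batches=6000 steps=4800,5400 filters=18`; 6000 iterations at `batch`=64 are 384K images, which matches the `trained: 384 K-images (6 Kilo-batches_64)` line in the single-class (`name = train`) mAP log later on.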

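### Train Your Own Model

Run the container from the `start-yolov4/` directory, because `$PWD/cfg/coco` is mounted as `/home/cfg`. Container names must be unique, so if the `darknet` container from the inference step still exists, remove it first with `docker rm darknet`:

```bash
cd start-yolov4/
xhost +local:docker

docker run -it --gpus all \
  -e DISPLAY \
  -e QT_X11_NO_MITSHM=1 \
  -v /tmp/.X11-unix:/tmp/.X11-unix \
  -v $HOME/.Xauthority:/root/.Xauthority \
  --name darknet \
  --mount type=bind,source=$HOME/Codes/devel/models/yolov4,target=/home/yolov4 \
  --mount type=bind,source=$HOME/yolov4/coco2017,target=/home/yolov4/coco2017 \
  --mount type=bind,source=$PWD/cfg/coco,target=/home/cfg \
  joinaero/ubuntu18.04-cuda10.2:opencv4.4.0-darknet
```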

Start training:

```bash
# Directory for saved weights (yolov4_last.weights, yolov4_1000.weights, ...)
mkdir -p /home/yolov4/coco2017/backup

# Training command
./darknet detector train /home/cfg/coco.data /home/cfg/yolov4.cfg /home/yolov4/csdarknet53-omega.conv.105 -map
```

Training can be interrupted and later resumed like this:

```bash
# Continue training
./darknet detector train /home/cfg/coco.data /home/cfg/yolov4.cfg /home/yolov4/coco2017/backup/yolov4_last.weights -map
```

`yolov4_last.weights` is saved every 100 iterations.
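Since `yolov4_last.weights` keeps being rewritten as training proceeds, the choice between starting fresh and resuming can be scripted. A hypothetical helper, assuming the cfg stays named `yolov4.cfg` (Darknet names the checkpoints after the cfg file) and the backup directory used above:

```bash
# pick_train_weights BACKUP_DIR PRETRAINED (hypothetical helper): print the
# weights to train from, resuming from yolov4_last.weights when it exists
pick_train_weights() {
  if [ -f "$1/yolov4_last.weights" ]; then
    echo "$1/yolov4_last.weights"
  else
    echo "$2"
  fi
}

# Inside the container:
# ./darknet detector train /home/cfg/coco.data /home/cfg/yolov4.cfg \
#   "$(pick_train_weights /home/yolov4/coco2017/backup /home/yolov4/csdarknet53-omega.conv.105)" -map
```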

For multi-GPU training, first train on a single GPU for 1000 iterations, then continue with the extra argument `-gpus 0,1`:

```bash
# How to train with multiple GPUs
# 1. First train on 1 GPU for about 1000 iterations
# 2. Then stop, and continue on multiple GPUs from the partially trained backup/yolov4_1000.weights
./darknet detector train /home/cfg/coco.data /home/cfg/yolov4.cfg /home/yolov4/coco2017/backup/yolov4_1000.weights -gpus 0,1 -map
```

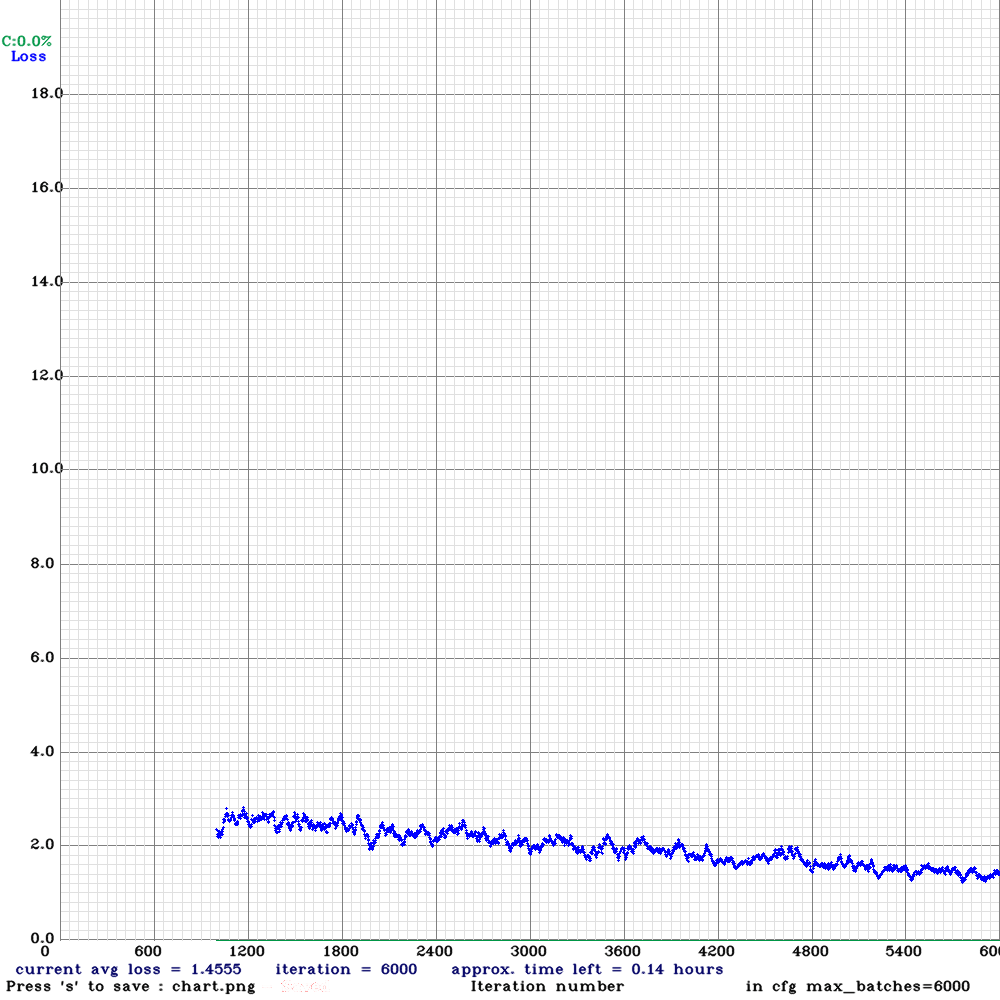

訓練過程,記錄如下:

加引數 `-map` 後,上圖會顯示有紅線 `mAP`。

Check the model's accuracy as mAP at IoU = 0.50:

```bash
$ ./darknet detector map /home/cfg/coco.data /home/cfg/yolov4.cfg /home/yolov4/coco2017/backup/yolov4_final.weights
...
Loading weights from /home/yolov4/coco2017/backup/yolov4_final.weights...
seen 64, trained: 384 K-images (6 Kilo-batches_64)
Done! Loaded 162 layers from weights-file
calculation mAP (mean average precision)...
Detection layer: 139 - type = 27
Detection layer: 150 - type = 27
Detection layer: 161 - type = 27
160
detections_count = 745, unique_truth_count = 190
class_id = 0, name = train, ap = 80.61% (TP = 142, FP = 18)
for conf_thresh = 0.25, precision = 0.89, recall = 0.75, F1-score = 0.81
for conf_thresh = 0.25, TP = 142, FP = 18, FN = 48, average IoU = 75.31 %
IoU threshold = 50 %, used Area-Under-Curve for each unique Recall
mean average precision (mAP@0.50) = 0.806070, or 80.61 %
Total Detection Time: 4 Seconds
```
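The precision, recall and F1 lines can be cross-checked from the TP/FP/FN counts: precision = TP / (TP + FP), recall = TP / (TP + FN) and F1 = 2PR / (P + R), where `unique_truth_count = 190` equals TP + FN = 142 + 48. With only one class (`train`), the mAP is simply that class's AP, 80.61%. A small `awk` sketch (`prf1` is a hypothetical helper):

```bash
# prf1 TP FP FN (hypothetical helper): precision, recall and F1 at one
# confidence threshold, formatted like the darknet log lines
prf1() {
  awk -v tp="$1" -v fp="$2" -v fn="$3" 'BEGIN {
    p = tp / (tp + fp)
    r = tp / (tp + fn)
    printf "precision = %.2f, recall = %.2f, F1-score = %.2f\n", p, r, 2 * p * r / (p + r)
  }'
}

# Counts from the log above (conf_thresh = 0.25):
# prf1 142 18 48
# prints: precision = 0.89, recall = 0.75, F1-score = 0.81
```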

Run inference:

```bash
./darknet detector test /home/cfg/coco.data /home/cfg/yolov4.cfg /home/yolov4/coco2017/backup/yolov4_final.weights \
  -ext_output -show /home/yolov4/coco2017/val2017/000000006040.jpg
```

Inference result:

## References

* [Train Detector on MS COCO (trainvalno5k 2014) dataset](https://github.com/AlexeyAB/darknet/wiki/Train-Detector-on-MS-COCO-(trainvalno5k-2014)-dataset)

* [How to evaluate accuracy and speed of YOLOv4](https://github.com/AlexeyAB/darknet/wiki/How-to-evaluate-accuracy-and-speed-of-YOLOv4)

* [How to train (to detect your custom objects)](https://github.com/AlexeyAB/darknet#how-to-train-to-detect-your-custom-objects)

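## Conclusion

Why Docker? A Docker image packages the whole environment, which simplifies deployment. PyTorch, for example, also publishes images that you can use right away.

What else is there to Darknet? Next time: building Darknet on Ubuntu and using its Python interface.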

Let's go