一次訊息消費服務的記憶體洩漏排查小記

線上有一個訊息消費服務xxx-consumer,使用spring-kafka框架,主執行緒批量從消費佇列(kafka)拉取交易系統生產的訊息,然後提交到子執行緒池中挨個處理消費。

public abstract class AbstractMessageDispatchListener implements

BatchAcknowledgingMessageListener<String, Msg>, ApplicationListener<ApplicationReadyEvent> {

private ThreadPoolExecutor executor;

public abstract MessageWorker chooseWorker(ConsumerRecord<String, Msg> data);

@Override

public void onMessage(List<ConsumerRecord<String, Msg>> datas, Acknowledgment acknowledgment) {

List<Future<?>> futureList = new ArrayList<>(datas.size());

try {

CountDownLatch countDownLatch = new CountDownLatch(datas.size());

for (ConsumerRecord<String, Msg> data : datas) {

Future<?> future = executor.submit(new Worker(data, countDownLatch));

futureList.add(future);

}

countDownLatch.await(20000L - 2000, TimeUnit.MILLISECONDS);

long countDownLatchCount = countDownLatch.getCount();

if (countDownLatchCount > 0) {

return;

}

acknowledgment.acknowledge();

} catch (Exception e) {

logger.error("onMessage error ", e);

} finally {

for (Future<?> future : futureList) {

if (future.isDone() || future.isCancelled()) {

continue;

}

future.cancel(true);

}

}

}

@Override

public void onApplicationEvent(ApplicationReadyEvent event) {

ThreadFactoryBuilder builder = new ThreadFactoryBuilder();

builder.setNameFormat(this.getClass().getSimpleName() + "-pool-%d");

builder.setDaemon(false);

executor = new ThreadPoolExecutor(12,

12 * 2,

60L,

TimeUnit.SECONDS,

new ArrayBlockingQueue<>(100),

builder.build());

}

private class Worker implements Runnable {

private ConsumerRecord<String, Msg> data;

private CountDownLatch countDownLatch;

Worker(ConsumerRecord<String, Msg> data, CountDownLatch countDownLatch) {

this.data = data;

this.countDownLatch = countDownLatch;

}

@Override

public void run() {

try {

MessageWorker worker = chooseWorker(data);

worker.work(data.value());

} finally {

countDownLatch.countDown();

}

}

}

}

1. 問題背景

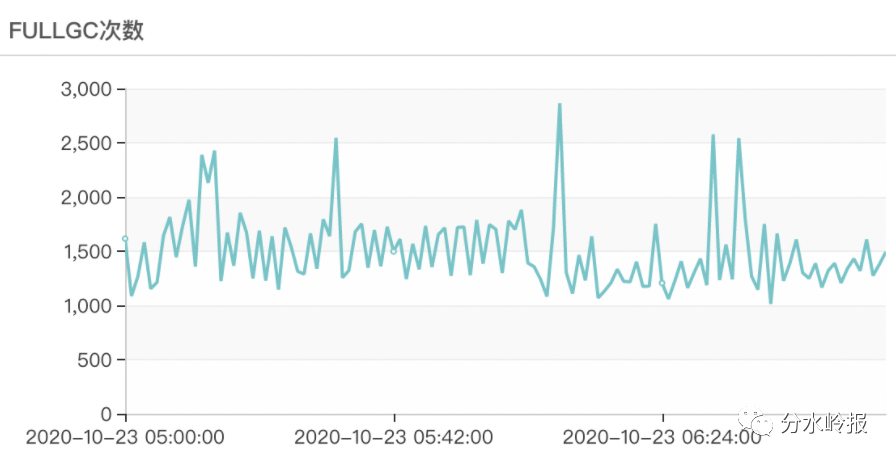

有一天早上xxx-consumer服務出現大量報警,人工排查發現30w+的訊息未處理,業務日誌正常,gc日誌有大量Full gc,初步判斷因為Full gc導致訊息處理慢,大量的訊息積壓。

2. 堆疊分析

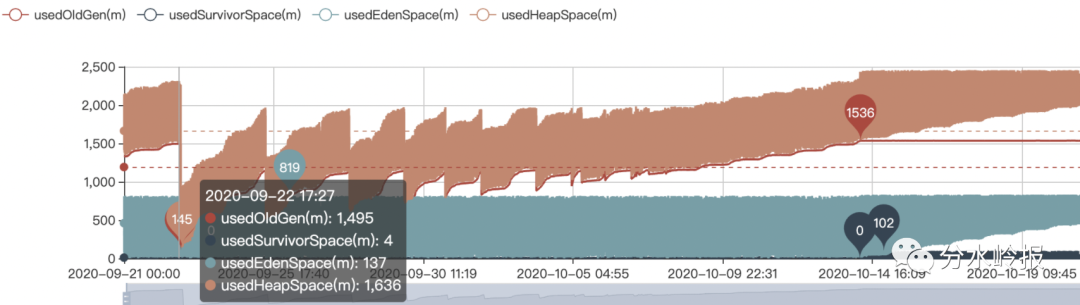

查看了近一個月的JVM記憶體資訊,發現老年代記憶體無法被回收(9月22號的下降是因為服務有一次上線重啟),初步判斷髮生了記憶體洩漏。

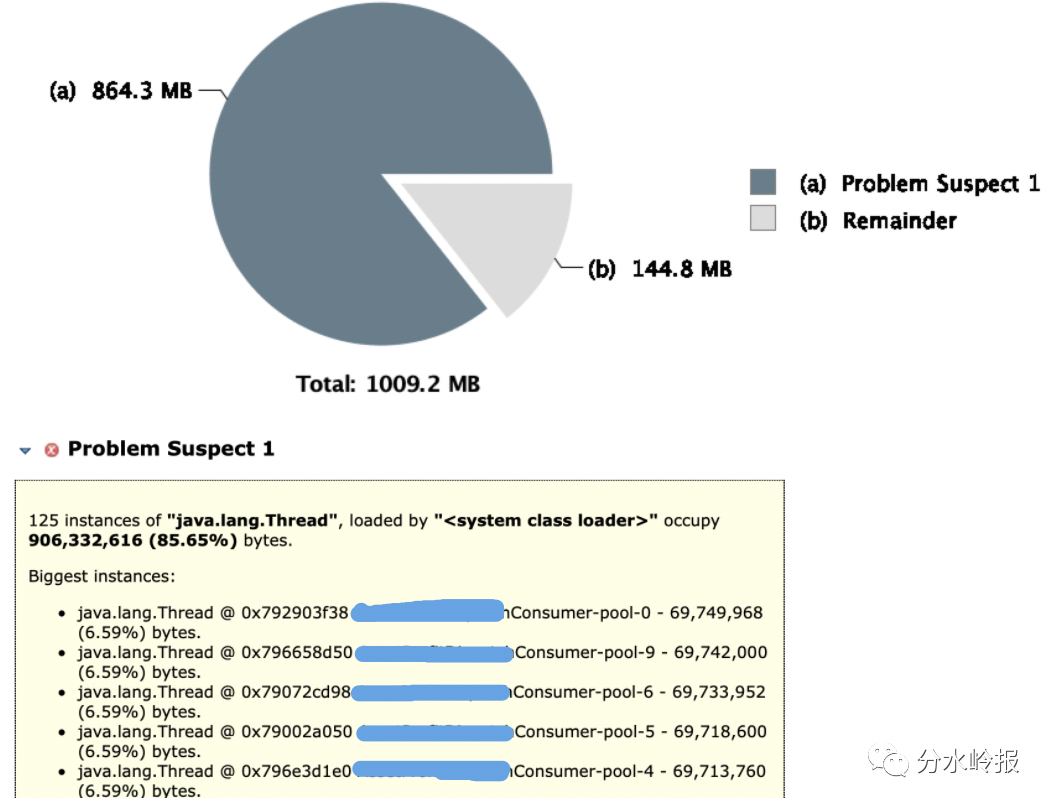

通過<jmap -dump:format=b,file=/home/work/app/xxx-consumer/logs/jmap_dump.hprof -F>命令匯出記憶體快照,使用Memory Analyzer解析記憶體快照檔案jmap_dump.hprof,發現有很明顯的記憶體洩漏提示:

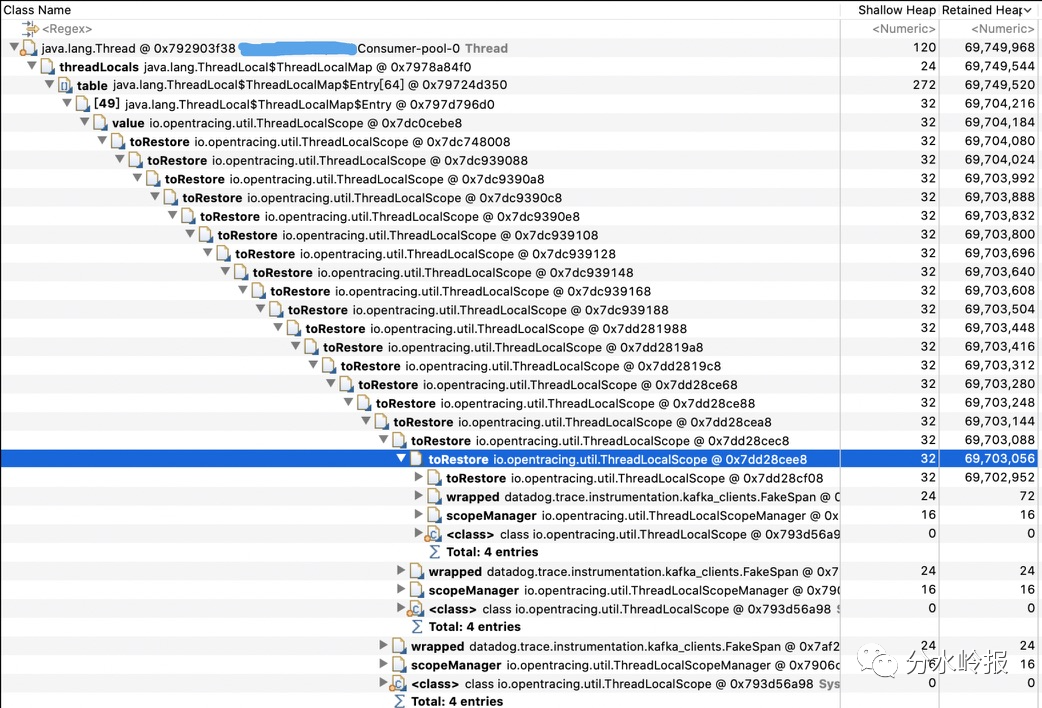

進一步檢視執行緒細節,發現建立了大量的ThreadLocalScope物件且迴圈引用:

同時我們也看到了分散式追蹤(dd-trace-java)jar包中的FakeSpan類,初步判斷是dd-trace-java中自研擴充套件的kafka外掛存在記憶體洩漏bug。

3. 程式碼分析

繼續檢視dd-trace-java中kafka外掛的程式碼,其處理流程如下:

第一批訊息

-

(SpringKafkaConsumerInstrumentation:L22)BatchAcknowledgingMessageListener.onMessage進入時,主執行緒會建立一個scope00=ThreadLocalScope(Type_BatchMessageListener_Value,toRestore=null)

-

(ExecutorInstrumentation:L21L47)訊息被submit到執行緒池中處理時,子執行緒會建立一個scope10=ThreadLocalScope(Type_BatchMessageListener_Value,toRestore=null)

-

(SpringKafkaConsumerInstrumentation:L68)子執行緒處理訊息時(ConsumerRecord.value),會建立一個scope11=ThreadLocalScope(Type_ConsumberRecord_Value,toRestore=scope10)

-

(ExecutorInstrumentation:L54)子執行緒處理完訊息後,執行scope10.close(),而scopeManager.tlsScope.get()=scope11,命中ThreadLocalScope:L19,scope10和scope11均無法被GC

-

(SpringKafkaConsumerInstrumentation:L42)BatchAcknowledgingMessageListener.onMessage退出時,主執行緒會執行scope00.close(),scope00會被GC

第二批訊息

-

(SpringKafkaConsumerInstrumentation:L22)BatchAcknowledgingMessageListener.onMessage進入時,主執行緒會建立一個scope01=ThreadLocalScope(Type_BatchMessageListener_Value,toRestore=null)

-

(ExecutorInstrumentation:L21L47)訊息被submit到執行緒池中處理時,子執行緒會建立一個scope12=ThreadLocalScope(Type_BatchMessageListener_Value,toRestore=scope11)

-

(SpringKafkaConsumerInstrumentation:L68)子執行緒處理訊息時(ConsumerRecord.value),會建立一個scope13=ThreadLocalScope(Type_ConsumberRecord_Value,toRestore=scope12)

-

(ExecutorInstrumentation:L54)子執行緒處理完訊息後,執行scope12.close(),而scopeManager.tlsScope.get()=scope13,命中ThreadLocalScope:L19,scope12和scope13均無法被GC

-

(SpringKafkaConsumerInstrumentation:L42)BatchAcknowledgingMessageListener.onMessage退出時,主執行緒會執行scope01.close(),scope01會被GC

從上可以看到,主執行緒建立的ThreadLocalScope能被正確GC,而執行緒池中建立的ThreadLocalScope被迴圈引用,無法被正確GC,從而造成記憶體洩漏。

@AutoService(Instrumenter.class)

public final class SpringKafkaConsumerInstrumentation extends Instrumenter.Configurable {

@Override

public AgentBuilder apply(final AgentBuilder agentBuilder) {

return agentBuilder

.type(hasSuperType(named("org.springframework.kafka.listener.BatchAcknowledgingMessageListener")))

.transform(DDAdvice.create().advice(isMethod().and(isPublic()).and(named("onMessage")),

BatchMessageListenerAdvice.class.getName()))

.type(named("org.apache.kafka.clients.consumer.ConsumerRecord"))

.transform(DDAdvice.create().advice(isMethod().and(isPublic()).and(named("value")),

RecoredValueAdvice.class.getName()))

.asDecorator();

}

public static class BatchMessageListenerAdvice {

@Advice.OnMethodEnter(suppress = Throwable.class)

public static Scope before() {

FakeSpan span = new FakeSpan();

span.setKind(FakeSpan.Type_BatchMessageListener_Value);

Scope scope = GlobalTracer.get().scopeManager().activate(span, false);

return scope;

}

@Advice.OnMethodExit(suppress = Throwable.class)

public static void after(@Advice.Enter Scope scope) {

while (true) {

Span span = GlobalTracer.get().activeSpan();

if (span != null && span instanceof FakeSpan) {

FakeSpan fakeSpan = (FakeSpan) span;

if (fakeSpan.getKind().equals(FakeSpan.Type_ConsumberRecord_Value)) {

GlobalTracer.get().scopeManager().active().close();

} else {

break;

}

} else {

break;

}

}

if (scope != null) {

scope.close();

}

}

}

public static class RecoredValueAdvice {

@Advice.OnMethodEnter(suppress = Throwable.class)

public static void before(@Advice.This ConsumerRecord record) {

Span activeSpan = GlobalTracer.get().activeSpan();

if (activeSpan instanceof FakeSpan) {

FakeSpan proxy = (FakeSpan) activeSpan;

if (proxy.getKind().equals(FakeSpan.Type_ConsumberRecord_Value)) {

GlobalTracer.get().scopeManager().active().close();

activeSpan = GlobalTracer.get().activeSpan();

if (activeSpan instanceof FakeSpan) {

proxy = (FakeSpan) activeSpan;

}

}

if (proxy.getKind().equals(FakeSpan.Type_BatchMessageListener_Value)) {

final SpanContext spanContext = TracingKafkaUtils.extractSecond(record.headers(), GlobalTracer.get());

if (spanContext != null) {

FakeSpan consumerProxy = new FakeSpan();

consumerProxy.setContext(spanContext);

consumerProxy.setKind(FakeSpan.Type_ConsumberRecord_Value);

GlobalTracer.get().scopeManager().activate(consumerProxy, false);

}

}

}

}

}

}

@AutoService(Instrumenter.class)

public final class ExecutorInstrumentation extends Instrumenter.Configurable {

@Override

public AgentBuilder apply(final AgentBuilder agentBuilder) {

return agentBuilder

.type(not(isInterface()).and(hasSuperType(named(ExecutorService.class.getName()))))

.transform(DDAdvice.create().advice(named("submit").and(takesArgument(0, Runnable.class)),

SubmitTracedRunnableAdvice.class.getName()))

.asDecorator();

}

public static class SubmitTracedRunnableAdvice {

@Advice.OnMethodEnter(suppress = Throwable.class)

public static TracedRunnable wrapJob(

@Advice.This Object dis,

@Advice.Argument(value = 0, readOnly = false) Runnable task) {

if (task != null && (!dis.getClass().getName().startsWith("slick.util.AsyncExecutor"))) {

task = new TracedRunnable(task, GlobalTracer.get());

return (TracedRunnable) task;

}

return null;

}

}

public static class TracedRunnable implements Runnable {

private final Runnable delegate;

private final Span span;

private final Tracer tracer;

public TracedRunnable(Runnable delegate, Tracer tracer) {

this.delegate = delegate;

this.tracer = tracer;

if (tracer != null) {

this.span = tracer.activeSpan();

} else {

this.span = null;

}

}

@Override

public void run() {

Scope scope = null;

if (span != null && tracer != null) {

scope = tracer.scopeManager().activate(span, false);

}

try {

delegate.run();

} finally {

if (scope != null) {

scope.close();

}

}

}

}

}

public class ThreadLocalScopeManager implements ScopeManager {

final ThreadLocal<ThreadLocalScope> tlsScope = new ThreadLocal<ThreadLocalScope>();

@Override

public Scope activate(Span span, boolean finishOnClose) {

return new ThreadLocalScope(this, span, finishOnClose);

}

@Override

public Scope active() {

return tlsScope.get();

}

}

public class ThreadLocalScope implements Scope {

private final ThreadLocalScopeManager scopeManager;

private final Span wrapped;

private final boolean finishOnClose;

private final ThreadLocalScope toRestore;

ThreadLocalScope(ThreadLocalScopeManager scopeManager, Span wrapped, boolean finishOnClose) {

this.scopeManager = scopeManager;

this.wrapped = wrapped;

this.finishOnClose = finishOnClose;

this.toRestore = scopeManager.tlsScope.get();

scopeManager.tlsScope.set(this);

}

@Override

public void close() {

if (scopeManager.tlsScope.get() != this) {

// This shouldn't happen if users call methods in the expected order. Bail out.

return;

}

if (finishOnClose) {

wrapped.finish();

}

scopeManager.tlsScope.set(toRestore);

}

@Override

public Span span() {

return wrapped;

}

}

End

RecoredValueAdvice沒有銷燬自己建立的物件,而是寄希望於BatchMessageListenerAdvice去銷燬。

但(SpringKafkaConsumerInstrumentation:L27)BatchAcknowledgingMessageListener.onMessage退出時,只會close主執行緒建立的ThreadLocalScope,不會close執行緒池中建立的ThreadLocalScope,導致子執行緒建立的ThreadLocalScope被迴圈引用,無法被正確GC,從而造成記憶體洩