(Practical Application) Setting Up an ELK Environment

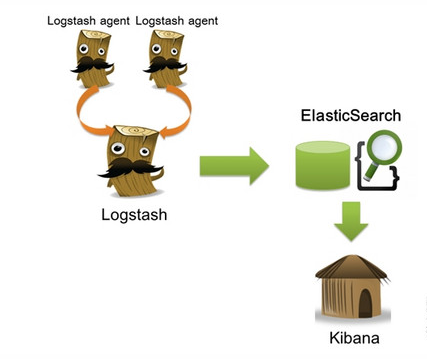

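Today's topic is ELK, an open-source real-time log analysis stack. ELK consists of three open-source tools: Elasticsearch, Logstash, and Kibana. Official website: https://www.elastic.co

The three components are:

Elasticsearch is an open-source distributed search engine. Its features include distributed operation, zero configuration, automatic discovery, automatic index sharding, index replication, a RESTful interface, multiple data sources, and automatic search load balancing.

Logstash is a fully open-source tool that collects and parses your logs and stores them for later use (for example, searching).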

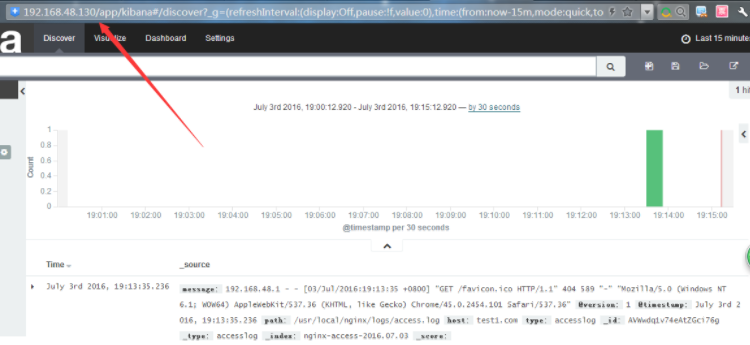

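Kibana is also a free and open-source tool. It provides a friendly web interface for analyzing the logs handled by Logstash and Elasticsearch, and helps you aggregate, analyze, and search important log data.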

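| OS | Software to install / hostname | IP | Description |
| --- | --- | --- | --- |
| CentOS 6.5 | Elasticsearch / test5 | 192.168.253.210 | Stores and searches logs |
| CentOS 6.5 | Elasticsearch / test4 | 192.168.253.200 | Stores and searches logs |
| CentOS 6.5 | Logstash / nginx / test1 | 192.168.253.150 | Collects logs and sends them to the Elasticsearch nodes above |
| CentOS 6.5 | Kibana / nginx / test2 | 192.168.253.100 | Displays the logs |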

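Architecture diagram:

1. Install the Elasticsearch cluster first, and verify that it works before installing the other software.

Install elasticsearch-2.3.3.rpm on both test5 and test4. Java 1.8 must be installed first. The steps are as follows: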

yum remove java-1.7.0-openjdk

rpm -ivh jdk-8u51-linux-x64.rpm

java -version

yum localinstall elasticsearch-2.3.3.rpm -y

service elasticsearch start

cd /etc/elasticsearch/

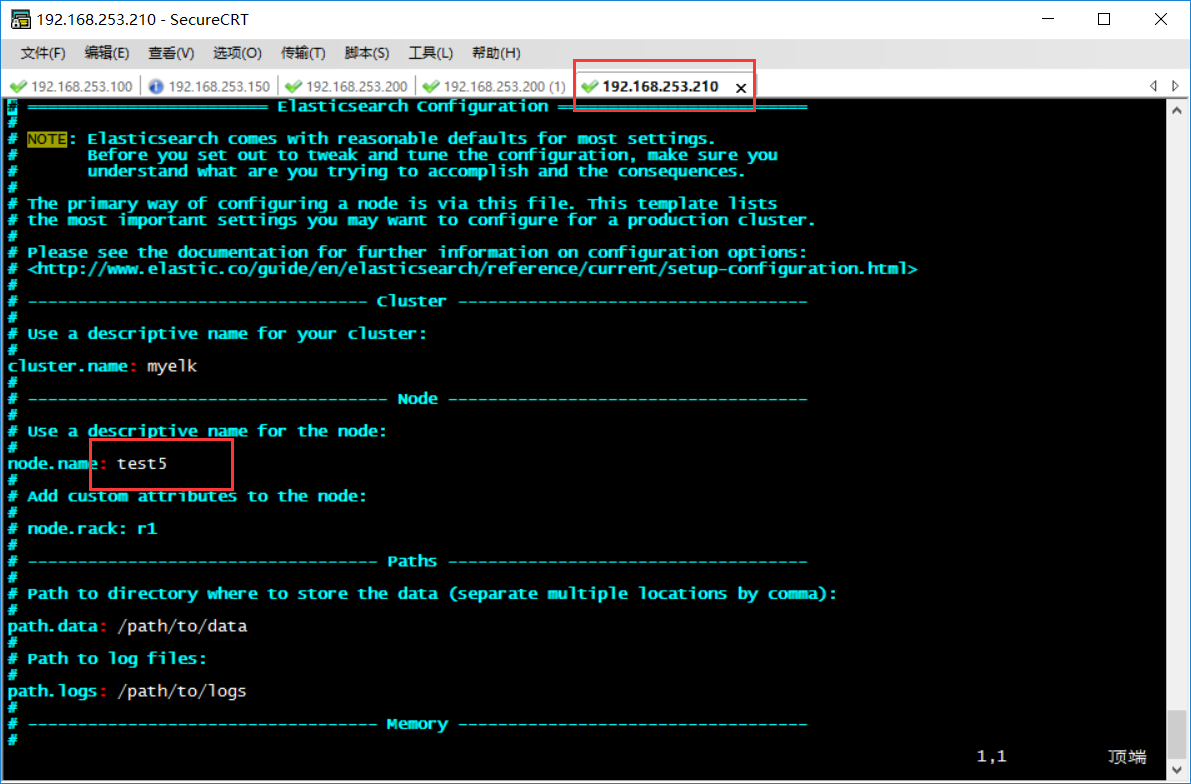

vim elasticsearch.yml

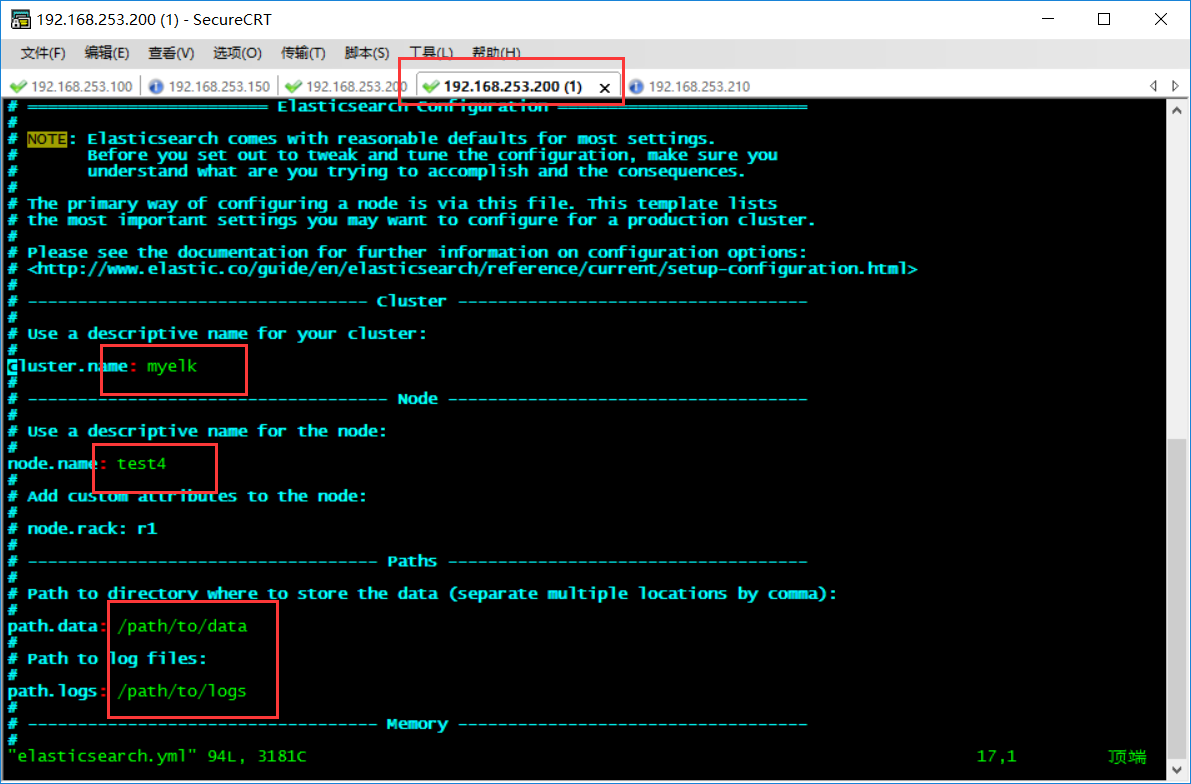

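Modify the following settings:

cluster.name: myelk # Cluster name; it must be identical on every node in the same cluster.

node.name: test5 # Node name; it must be different on every node.

path.data: /path/to/data # Data directory; on production machines, put it on a dedicated large partition.

path.logs: /path/to/logs # Log directory

bootstrap.mlockall: true # Locks the process memory at startup so it is not swapped out, which improves performance considerably; I did not enable it for this test setup.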

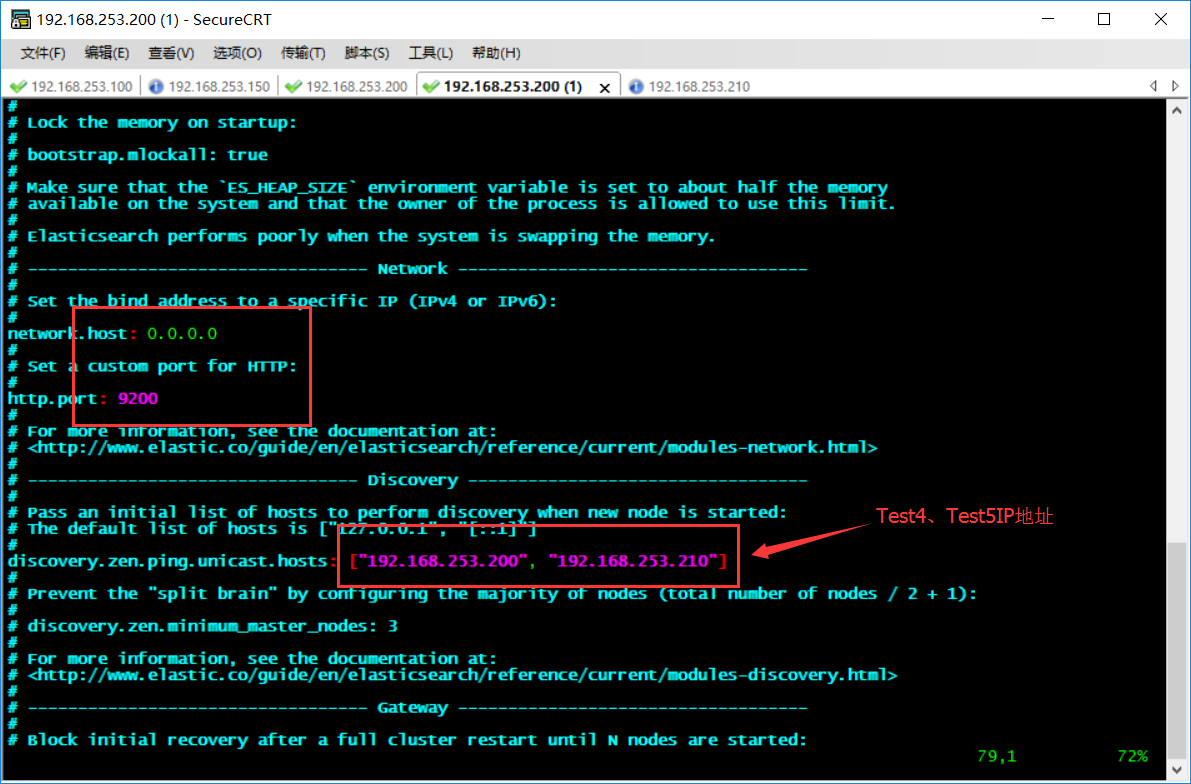

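network.host: 0.0.0.0 # IP address to listen on; 0.0.0.0 means all addresses.

http.port: 9200 # HTTP port to listen on

discovery.zen.ping.unicast.hosts: ["hostip", "hostip"] # IPs of the cluster nodes; without this, each node runs on its own instead of joining the cluster.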

mkdir -pv /path/to/{data,logs}

chown -R elasticsearch:elasticsearch /path

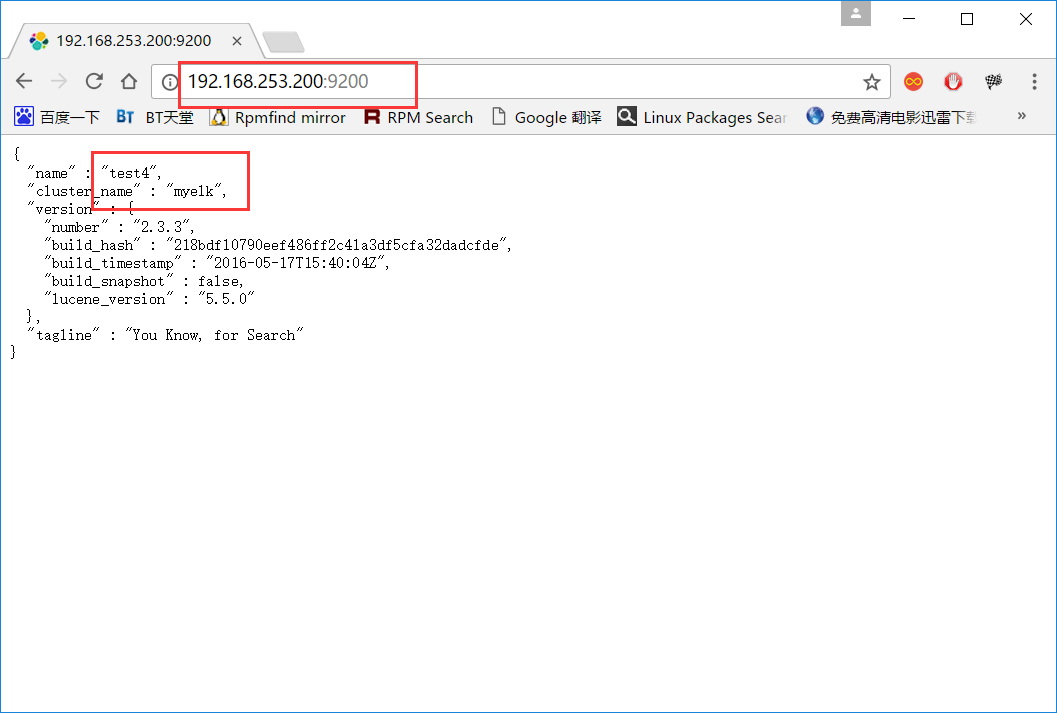

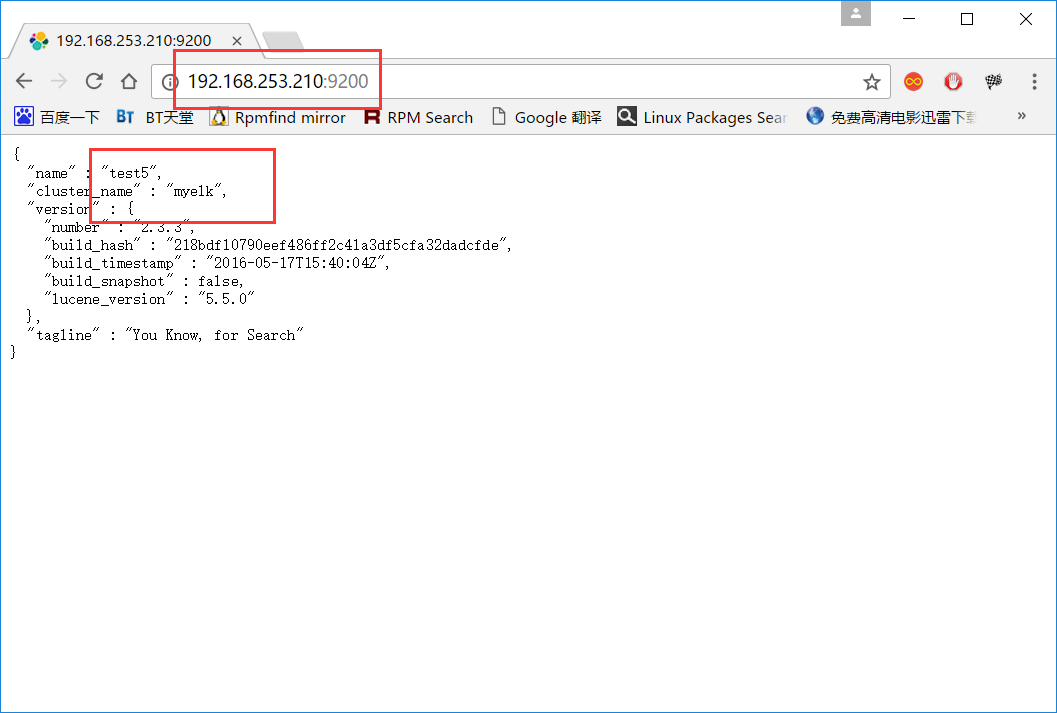

Start the service (service elasticsearch start; use restart if it is still running from the earlier step) and check that the ports are listening:

ss -tln

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 :::54411 :::*

LISTEN 0 128 :::111 :::*

LISTEN 0 128 *:111 *:*

LISTEN 0 50 :::9200 :::*

LISTEN 0 50 :::9300 :::*

LISTEN 0 128 :::22 :::*

LISTEN 0 128 *:22 *:*

LISTEN 0 128 *:51574 *:*

LISTEN 0 128 127.0.0.1:631 *:*

LISTEN 0 128 ::1:631 :::*

LISTEN 0 100 ::1:25 :::*

LISTEN 0 100 127.0.0.1:25 *:*

The two nodes are configured identically, except that the IP addresses and the node name above must differ. The listening ports on the second node:

ss -tln

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:45822 *:*

LISTEN 0 128 :::39620 :::*

LISTEN 0 128 :::111 :::*

LISTEN 0 128 *:111 *:*

LISTEN 0 50 :::9200 :::*

LISTEN 0 50 :::9300 :::*

LISTEN 0 128 :::22 :::*

LISTEN 0 128 *:22 *:*

LISTEN 0 128 127.0.0.1:631 *:*

LISTEN 0 128 ::1:631 :::*

LISTEN 0 100 ::1:25 :::*

LISTEN 0 100 127.0.0.1:25 *:*
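Listening ports only show that each process is up, not that the two nodes have actually formed one cluster. A minimal sketch of how that could be checked through the REST interface on port 9200 follows. The function names (es_url, es_health, es_nodes) are hypothetical helpers made up for this illustration; the _cluster/health and _cat/nodes endpoints are part of Elasticsearch itself, and curl is assumed to be installed.

```shell
# Hypothetical helper functions for illustration; they are not part of Elasticsearch.

# Build the URL of a REST endpoint on one node (9200 is the http.port set above).
es_url() {
    printf 'http://%s:9200/%s' "$1" "$2"
}

# Print the cluster health as JSON for the node given as the first argument.
es_health() {
    curl -s "$(es_url "$1" '_cluster/health?pretty')"
}

# List the nodes that the queried node currently sees in its cluster.
es_nodes() {
    curl -s "$(es_url "$1" '_cat/nodes?v')"
}

# Example calls, run from any machine that can reach the nodes:
#   es_health 192.168.253.200
#   es_nodes  192.168.253.210
```

If both nodes have joined, the health output should report the cluster name myelk with "number_of_nodes" : 2. If each node reports only itself, the discovery.zen.ping.unicast.hosts setting is the first thing to check.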

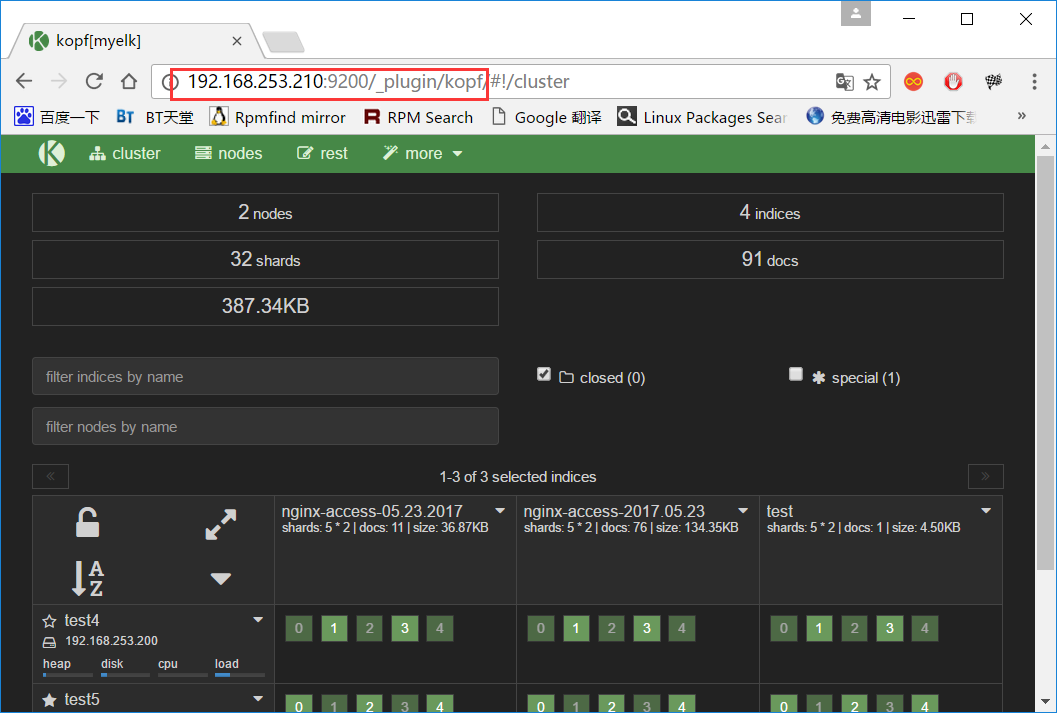

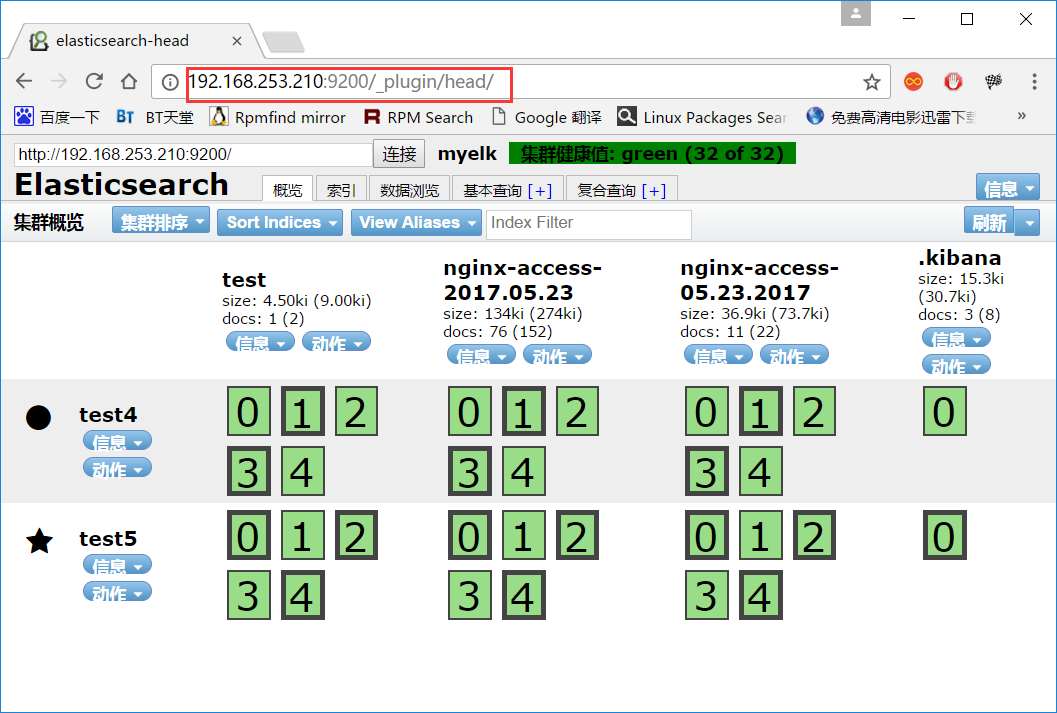

Install the head and kopf plugins, then visit ip:9200/_plugin/head and ip:9200/_plugin/kopf (the plugins give a graphical view of the Elasticsearch status and let you create and delete indices).

/usr/share/elasticsearch/bin/plugin install lmenezes/elasticsearch-kopf

/usr/share/elasticsearch/bin/plugin install mobz/elasticsearch-head

/usr/share/elasticsearch/bin/plugin install lmenezes/elasticsearch-kopf

-> Installing lmenezes/elasticsearch-kopf...

Trying https://github.com/lmenezes/elasticsearch-kopf/archive/master.zip ...

Downloading ...DONE

Verifying https://github.com/lmenezes/elasticsearch-kopf/archive/master.zip checksums if available ...

NOTE: Unable to verify checksum for downloaded plugin (unable to find .sha1 or .md5 file to verify)

Installed kopf into /usr/share/elasticsearch/plugins/kopf

/usr/share/elasticsearch/bin/plugin install mobz/elasticsearch-head

-> Installing mobz/elasticsearch-head...

Trying https://github.com/mobz/elasticsearch-head/archive/master.zip ...

Downloading ...DONE

Verifying https://github.com/mobz/elasticsearch-head/archive/master.zip checksums if available ...

NOTE: Unable to verify checksum for downloaded plugin (unable to find .sha1 or .md5 file to verify)

Installed head into /usr/share/elasticsearch/plugins/head

2. Install nginx and Logstash

yum -y install zlib zlib-devel openssl openssl-devel pcre pcre-devel

nginx-1.8.1-1.el6.ngx.x86_64.rpm

logstash-2.3.3-1.noarch.rpm

jdk-8u51-linux-x64.rpm

Install the nginx service on test1; its logs are what we are going to collect.

The log is at /var/log/nginx/access.log

Then install logstash-2.3.3-1.noarch.rpm on test1:

yum remove java-1.7.0-openjdk

rpm -ivh jdk-8u51-linux-x64.rpm

rpm -ivh logstash-2.3.3-1.noarch.rpm

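/etc/init.d/logstash start # Start the service

Run the following command to check that the environment works; once it has started, whatever you type is printed back as an event:

/opt/logstash/bin/logstash -e 'input { stdin {} } output { stdout { codec => "rubydebug" } }'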

Settings: Default pipeline workers: 1

Pipeline main started

hello world

{

"message" => "hello world",

"@version" => "1",

"@timestamp" => "2017-05-24T08:04:46.993Z",

"host" => "0.0.0.0"

}

Next, run /opt/logstash/bin/logstash -e 'input {stdin{}} output{ elasticsearch { hosts => ["192.168.253.200:9200"] index => "test"}}'

Anything you type is now sent to Elasticsearch on 192.168.253.200, and a directory for the index named test is created under /path/to/data/myelk/nodes/0/indices. Type a few lines, then look at that directory on 192.168.253.200; if the files are there, everything is working.

ls /path/to/data/myelk/nodes/0/indices/

test
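Looking at the data directory works, but it requires a shell on the Elasticsearch node. As an alternative, here is a minimal sketch that asks Elasticsearch over HTTP whether an index exists. The function name index_exists is a hypothetical helper for this illustration; the request itself (HEAD on the index name, answered with 200 or 404) is a standard Elasticsearch call, and curl is assumed to be installed.

```shell
# Hypothetical helper: succeed (exit status 0) if the index exists on the given node.
#   $1 = Elasticsearch host, $2 = index name
index_exists() {
    # -I sends a HEAD request; -w prints only the HTTP status code.
    code=$(curl -s -o /dev/null -w '%{http_code}' -I "http://$1:9200/$2")
    # Elasticsearch answers 200 when the index exists and 404 when it does not.
    [ "$code" = "200" ]
}

# Example call from test1:
#   index_exists 192.168.253.200 test && echo "index test exists"
```

A full list of indices is also available from http://192.168.253.200:9200/_cat/indices?v, which can be opened in a browser.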

Then, on test1, create a configuration file ending in .conf under /etc/logstash/conf.d. Since I am collecting nginx logs, I named it nginx.conf. Its contents are as follows:

cd /etc/logstash/conf.d/

ls

nginx.conf

cat nginx.conf

input {

file {

type => "accesslog"

path => "/var/log/nginx/access.log"

start_position => "beginning"

}

}

output {

if [type] == "accesslog" {

elasticsearch {

hosts => ["192.168.253.200"]

index => "nginx-access-%{+YYYY.MM.dd}"

}

}

}
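The index option uses a date pattern, so Logstash writes to one index per day, named after each event's @timestamp, which is kept in UTC. The sketch below shows which index name to expect for a given date. The function name nginx_index_name is a hypothetical helper, and the -d option assumes GNU date, as shipped with CentOS.

```shell
# Hypothetical helper: print the daily index name that the pattern
# "nginx-access-%{+YYYY.MM.dd}" produces for a given date (default: now).
# The date is evaluated in UTC (-u) because @timestamp is stored in UTC.
nginx_index_name() {
    date -u -d "${1:-now}" '+nginx-access-%Y.%m.%d'
}

# Example calls:
#   nginx_index_name 2017-05-23    # prints nginx-access-2017.05.23
#   nginx_index_name               # prints the index name for the current UTC day
```

Because the name follows UTC, on a server set to UTC+8 requests logged before 08:00 local time end up in the previous day's index.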

/etc/init.d/logstash configtest

ps -ef |grep java

/opt/logstash/bin/logstash -f nginx.conf

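Then check in Elasticsearch whether the index has been created. Visit the nginx service a few times to generate log entries.

If it has not been created, edit this file:

vi /etc/init.d/logstash

LS_USER=root # Change this to root, or grant the logstash user read permission on the access log; after the service is restarted, the index is created.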

LS_GROUP=root

LS_HOME=/var/lib/logstash

LS_HEAP_SIZE="1g"

LS_LOG_DIR=/var/log/logstash

LS_LOG_FILE="${LS_LOG_DIR}/$name.log"

LS_CONF_DIR=/etc/logstash/conf.d

LS_OPEN_FILES=16384

LS_NICE=19

KILL_ON_STOP_TIMEOUT=${KILL_ON_STOP_TIMEOUT-0} #default value is zero to this variable but could be updated by user request

LS_OPTS=""

Check on test4:

ls /path/to/data/myelk/nodes/0/indices/

nginx-access-2017.05.23 test

cat logstash.log

{:timestamp=>"2017-05-24T16:05:19.659000+0800", :message=>"Pipeline main started"}

3. Install Kibana

Once everything above is installed, install Kibana on test2:

rpm -ivh kibana-4.5.1-1.x86_64.rpm

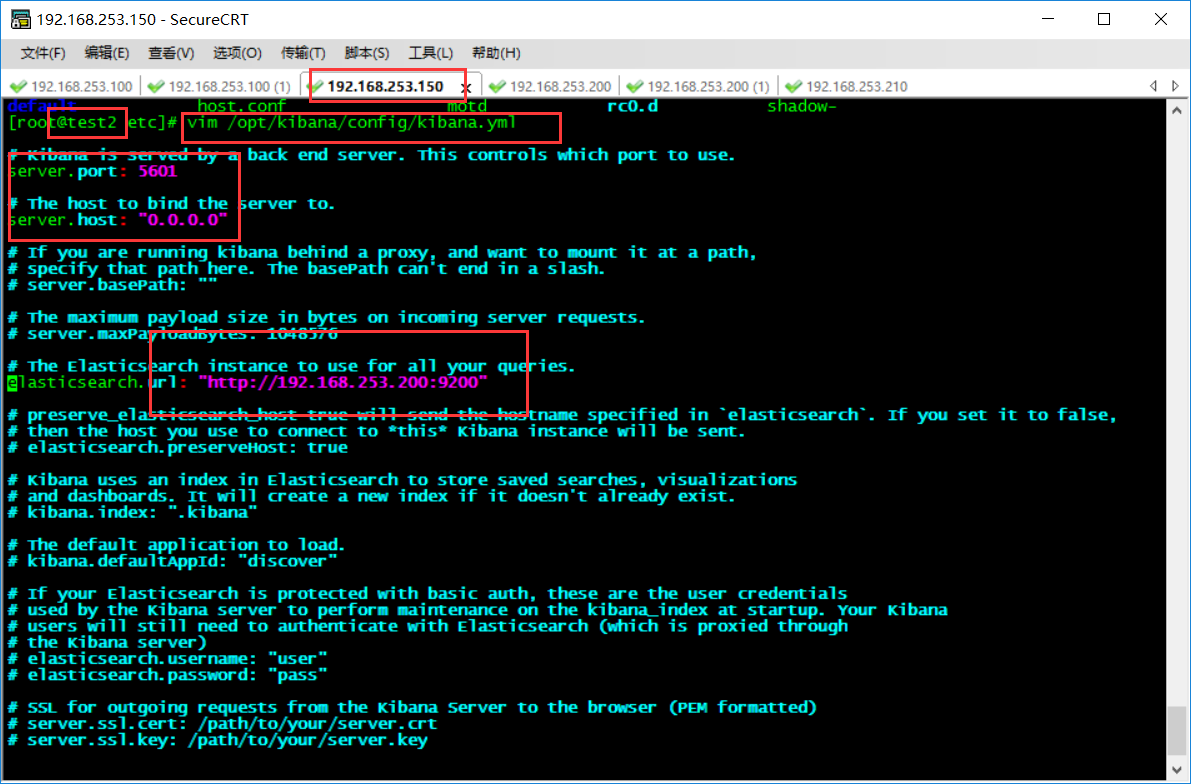

Edit the configuration file /opt/kibana/config/kibana.yml; only the following settings need to change:

server.port: 5601 # Port

server.host: "0.0.0.0" # Listen address

elasticsearch.url: "http://192.168.253.200:9200" # Elasticsearch address

/etc/init.d/kibana start # Start the service

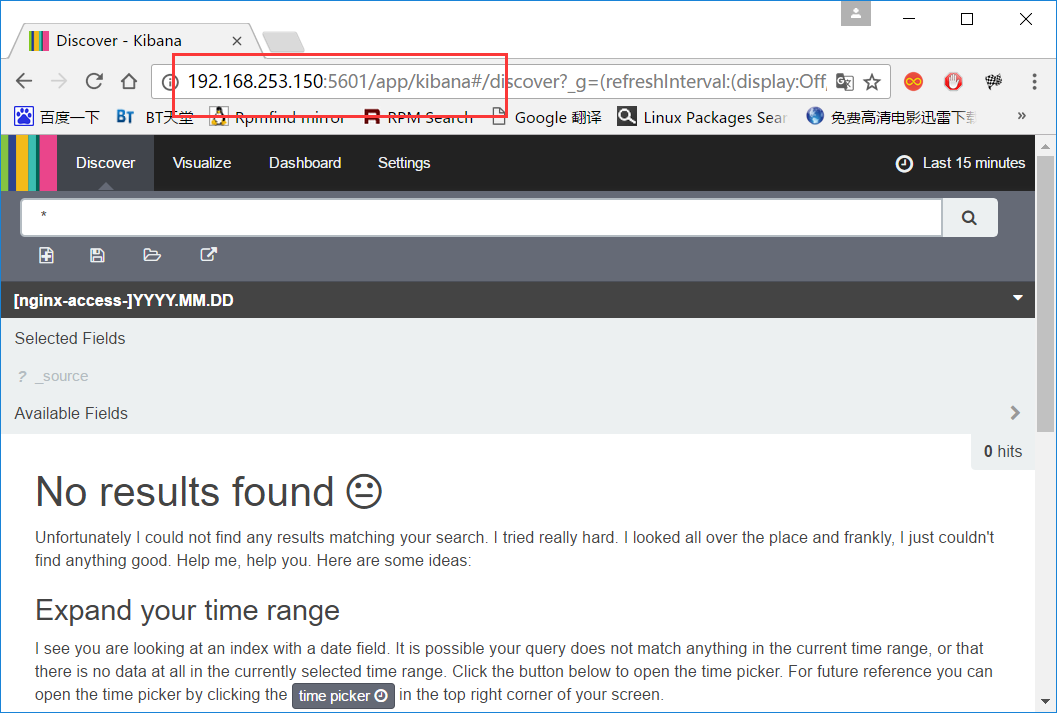

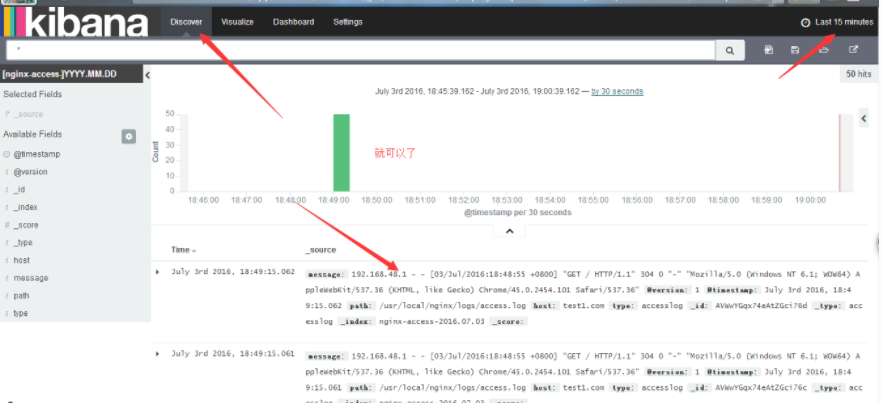

Open Kibana at http://ip:5601

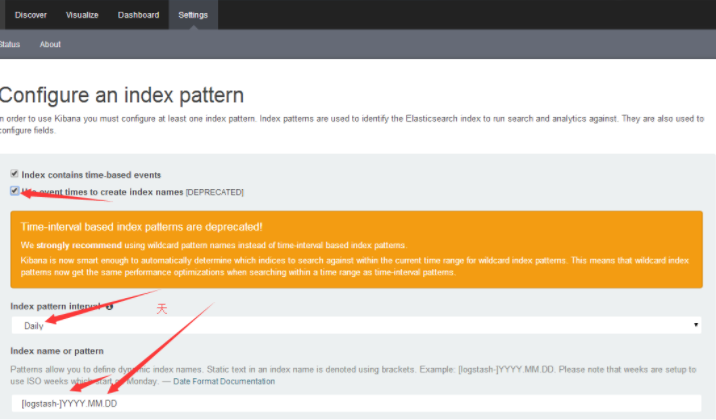

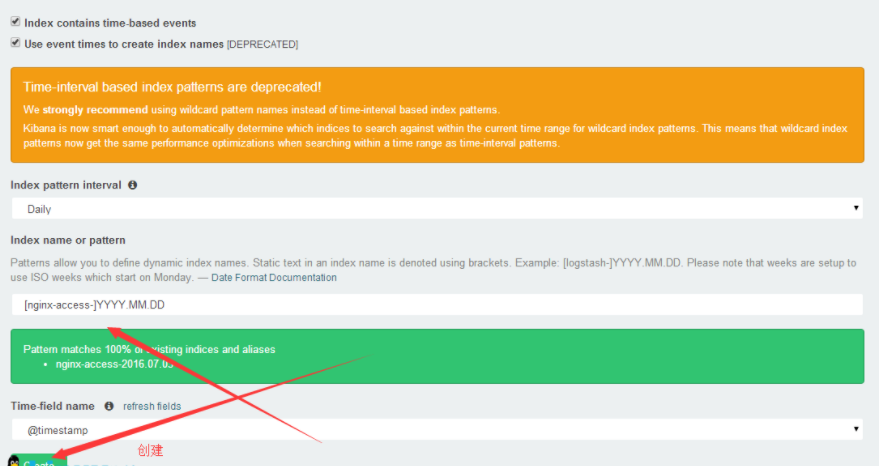

Add the index to display, that is, the daily index defined above, such as nginx-access-2016.07.03

Configure Logstash on the Kibana host to collect its nginx logs

Install Logstash on the Kibana server (following the earlier steps).

Then, under /etc/logstash/conf.d/,

write a configuration file:

vim nginx.conf

input {

file {

type => "accesslog"

path => "/var/log/nginx/access.log"

start_position => "beginning"

}

}

output {

if [type] == "accesslog" {

elasticsearch {

hosts => ["192.168.253.200"]

index => "nginx-access-%{+MM.dd.YYYY}"

}

}

}

/opt/logstash/bin/logstash -f nginx.conf

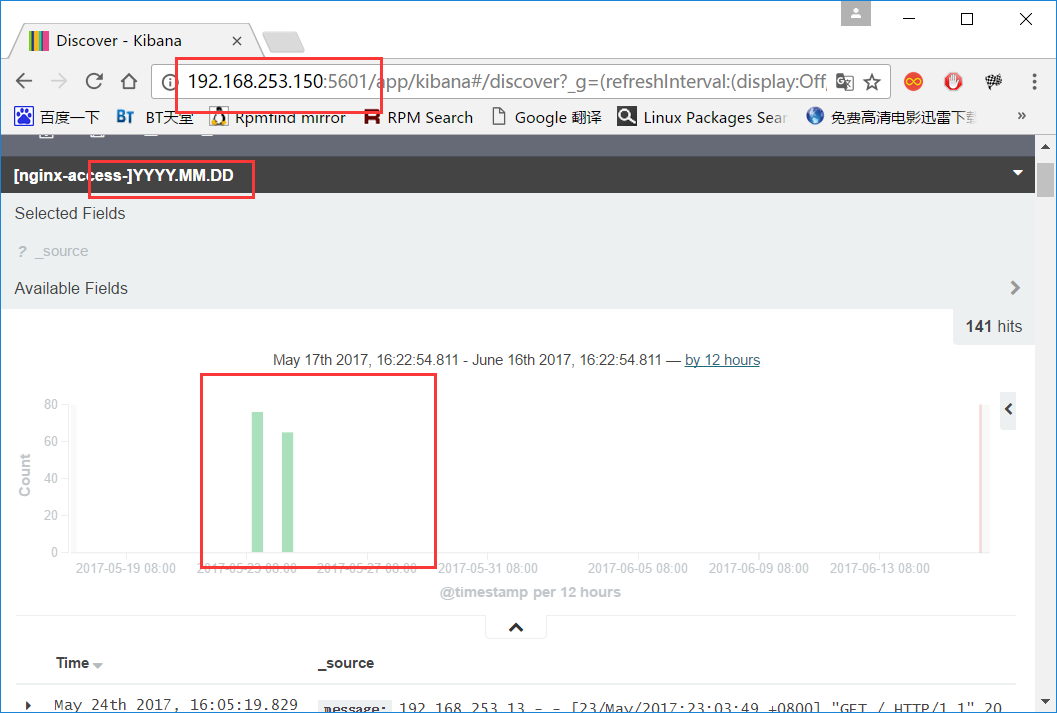

Elasticsearch now has an additional nginx access log index, named in month.day.year order:

ls /path/to/data/myelk/nodes/0/indices/

nginx-access-05.23.2017 nginx-access-2017.05.23 test

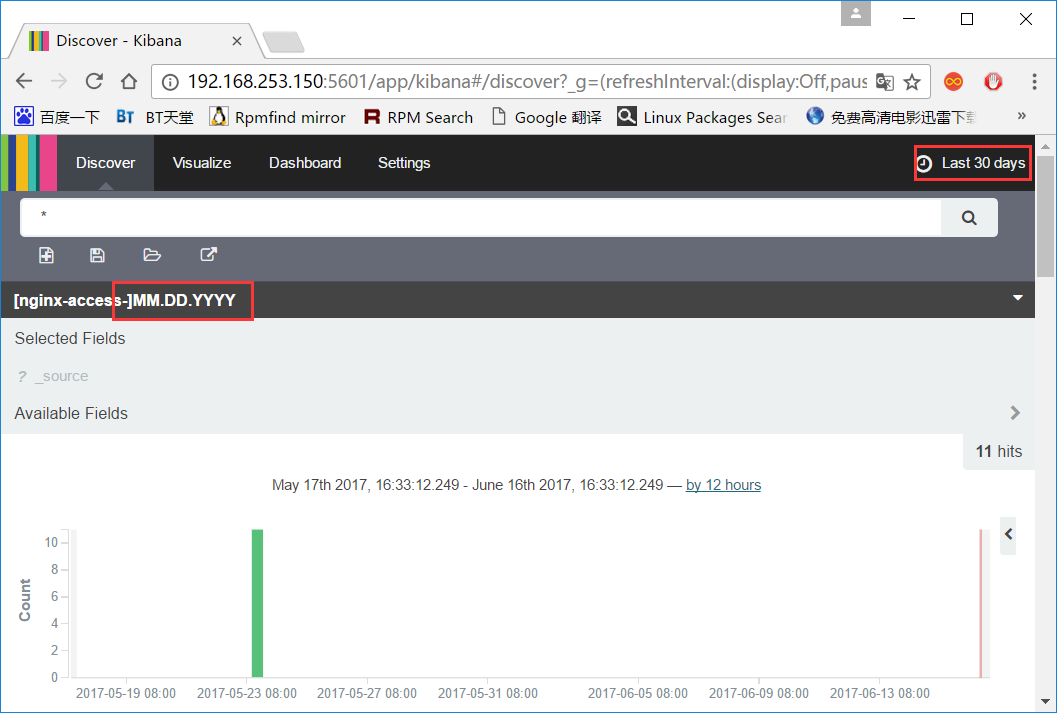

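Then, in Kibana in the browser, add the new log index in the same way as before.

4. Additional configuration

Accessing Kibana directly is not very secure, so we put an nginx reverse proxy in front of it and require a username and password.

First install nginx on the Kibana server (not covered here).

Add the following to the nginx configuration: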

#############################################################################

server

{

listen 80;

server_name localhost;

auth_basic "Restricted Access";

auth_basic_user_file /usr/local/nginx/conf/htpasswd.users; # File holding the usernames and passwords

location / {

proxy_pass http://localhost:5601; # Proxy to Kibana on port 5601 so that it can be reached directly on port 80

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header REMOTE-HOST $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

}

#############################################################################

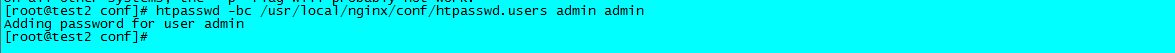

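Create the user and password file, htpasswd.users:

This requires the httpd-tools package, so install it first.

htpasswd -bc /usr/local/nginx/conf/htpasswd.users admin paswdadmin # Username first, then password

After that, Kibana is reached on port 80 and requires the username and password.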
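To confirm that the proxy really enforces the password, the HTTP status codes can be compared with and without credentials. This is a minimal sketch; the function name http_status is a hypothetical helper, curl is assumed to be installed on the Kibana server, and the credentials are the example ones created above.

```shell
# Hypothetical helper: print only the HTTP status code of a request.
# All arguments are passed straight through to curl.
http_status() {
    curl -s -o /dev/null -w '%{http_code}' "$@"
}

# Example calls on the Kibana server, after reloading nginx:
#   http_status http://localhost/                       # expected: 401 (no credentials)
#   http_status -u admin:paswdadmin http://localhost/   # expected: anything but 401
```

Running nginx -t before reloading checks the configuration file for syntax errors. Note that port 5601 still answers without a password as long as server.host is 0.0.0.0, so it would also need to be firewalled or bound to localhost.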

This article comes from the “Change life Start fresh.” blog. Please keep this attribution: http://ahcwy.blog.51cto.com/9853317/1940684
