When Will China Draw Its Sword? Setting Up k8s

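Preface

The standoff between China and India has been escalating lately. As a patriotic young man I am somewhat angry, yet at times extremely proud. I cannot tell whether China's diplomacy is strong or weak, but surely there should be a clear stance? What is this? All they do is protest, or else hold a few military exercises. What use is that?

My own guess is that the country has its own plans. It is like a lion and a mad dog: why bother? With all the turmoil between China and India, I do not know whether this ends in a show of dominance or in a "Shang Zhongyong" story of wasted promise.

Would a show of dominance be the aircraft carrier's electromagnetic catapult, nuclear submarines, or drones...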

Getting started

I assume everyone knows Docker, and most of you have probably played with k8s as well!

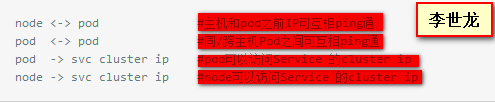

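I ran into some problems while setting up a Kubernetes cluster. There are plenty of setup guides online, but a k8s cluster only counts as ready once the following network connectivity requirements are met.

The requirements are: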

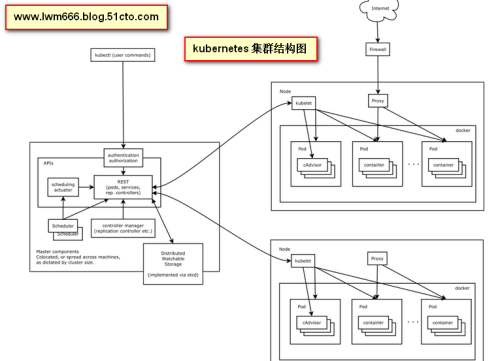

The k8s architecture diagram:

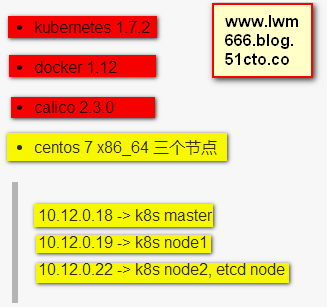

Versions and machine information:

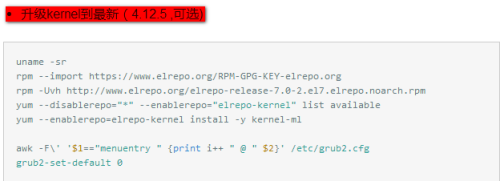

Node initialization

Switch CentOS-Base.repo to the Aliyun yum mirror

    mv -f /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.bk
    curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

Configure the bridge settings

    cat <<EOF > /etc/sysctl.d/k8s.conf
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.bridge.bridge-nf-call-arptables = 1
    EOF
    sudo sysctl --system
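A quick way to confirm that the file written above really contains all three bridge keys is to parse it back. The following is only a sketch: `check_bridge_conf` is a hypothetical helper name, not part of any tool, and it inspects the file only, not the live kernel values.

```shell
# Hypothetical helper: report every bridge-nf key that is not set to 1 in a sysctl.d file.
# Returns 0 when all three keys are present with value 1, 1 otherwise.
check_bridge_conf() {
  local file="$1" key missing=0
  for key in net.bridge.bridge-nf-call-ip6tables \
             net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-arptables; do
    # Accept "key = 1" with optional whitespace around "=".
    if ! grep -Eq "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*1[[:space:]]*\$" "$file"; then
      echo "missing or not 1: ${key}"
      missing=1
    fi
  done
  return "$missing"
}

# Usage on a node:
#   check_bridge_conf /etc/sysctl.d/k8s.conf && echo "bridge settings look complete"
```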

Disable SELinux (please do not use setenforce 0)

    sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
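The sed expression above only rewrites the line when it currently reads SELINUX=enforcing, so a host already set to permissive would be left unchanged. As a sketch (both function names are hypothetical, and GNU sed is assumed, as shipped with CentOS), the configured mode can be read back and forced to disabled whatever its current value:

```shell
# Print the mode configured in an SELinux config file (enforcing, permissive or disabled).
# SELINUXTYPE= lines do not match, because "=" must follow SELINUX directly.
selinux_configured_mode() {
  sed -n 's/^SELINUX=\([a-z]*\).*$/\1/p' "$1" | tail -n 1
}

# Set SELINUX=disabled regardless of the current value (takes effect after a reboot).
selinux_disable_in_config() {
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Usage on a node:
#   selinux_disable_in_config /etc/selinux/config
#   selinux_configured_mode /etc/selinux/config
```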

Disable the firewall

    sudo systemctl disable firewalld.service
    sudo systemctl stop firewalld.service

Disable iptables

    sudo yum install -y iptables-services; iptables -F;   # optional
    sudo systemctl disable iptables.service
    sudo systemctl stop iptables.service

Install related packages

    sudo yum install -y vim wget curl screen git etcd ebtables flannel
    sudo yum install -y socat net-tools.x86_64 iperf bridge-utils.x86_64

Install Docker (the default installed version is currently 1.12)

    sudo yum install -y yum-utils device-mapper-persistent-data lvm2
    sudo yum install -y libdevmapper* docker

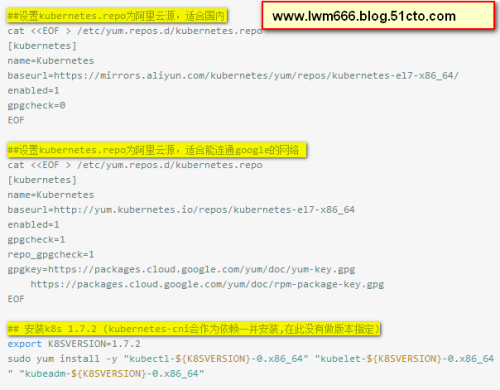

Install Kubernetes

For easy copy and paste:

    ## Point kubernetes.repo at the Aliyun mirror (suitable inside China)
    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=0
    EOF

    ## Point kubernetes.repo at the upstream repository (suitable for networks that can reach Google)
    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=http://yum.kubernetes.io/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
    EOF

    ## Install k8s 1.7.2 (kubernetes-cni is pulled in as a dependency; no version is pinned for it here)
    export K8SVERSION=1.7.2
    sudo yum install -y "kubectl-${K8SVERSION}-0.x86_64" "kubelet-${K8SVERSION}-0.x86_64" "kubeadm-${K8SVERSION}-0.x86_64"

Reboot the machine (this step is required)

    reboot

After the reboot, carry out the following steps

Configure the Docker daemon and start Docker

    cat <<EOF >/etc/sysconfig/docker
    OPTIONS="-H unix:///var/run/docker.sock -H tcp://127.0.0.1:2375 --storage-driver=overlay --exec-opt native.cgroupdriver=cgroupfs --graph=/localdisk/docker/graph --insecure-registry=gcr.io --insecure-registry=quay.io --insecure-registry=registry.cn-hangzhou.aliyuncs.com --registry-mirror=http://138f94c6.m.daocloud.io"
    EOF
    systemctl start docker
    systemctl status docker -l

Pull the images required by k8s 1.7.2

    quay.io/calico/node:v1.3.0
    quay.io/calico/cni:v1.9.1
    quay.io/calico/kube-policy-controller:v0.6.0
    gcr.io/google_containers/pause-amd64:3.0
    gcr.io/google_containers/kube-proxy-amd64:v1.7.2
    gcr.io/google_containers/kube-apiserver-amd64:v1.7.2
    gcr.io/google_containers/kube-controller-manager-amd64:v1.7.2
    gcr.io/google_containers/kube-scheduler-amd64:v1.7.2
    gcr.io/google_containers/etcd-amd64:3.0.17
    gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.4
    gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.4
    gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.4
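The post lists the images but not the pull commands. One possible way to pull them is a small loop over a list file, sketched below. The function name `pull_images`, the list file, and the `DOCKER` override variable are assumptions made for illustration; only `docker pull` itself is a real command.

```shell
# Pull every image named in a list file (one image reference per line).
# DOCKER is a hypothetical override for illustration, e.g. DOCKER=echo for a dry run.
pull_images() {
  local list="$1" image failed=0
  # Read the list on fd 3 so the pull command cannot consume the list through stdin.
  while IFS= read -r image <&3 || [ -n "$image" ]; do
    # Skip blank lines and comment lines.
    case "$image" in ''|'#'*) continue ;; esac
    if ! "${DOCKER:-docker}" pull "$image"; then
      echo "failed to pull: $image" >&2
      failed=1
    fi
  done 3< "$list"
  return "$failed"
}

# Usage: save the image names above to images.txt, then run
#   DOCKER=echo pull_images images.txt   # dry run, prints the commands' arguments
#   pull_images images.txt               # real pull
```

The loop keeps going after a failed pull and reports the failure through its exit status, so one unreachable registry does not hide the state of the remaining images.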

Start etcd on 10.12.0.22, a node that is not the k8s master (an etcd cluster could be set up instead)

    screen etcd -name="EtcdServer" \
      -initial-advertise-peer-urls=http://10.12.0.22:2380 \
      -listen-peer-urls=http://0.0.0.0:2380 \
      -listen-client-urls=http://10.12.0.22:2379 \
      -advertise-client-urls http://10.12.0.22:2379 \
      -data-dir /var/lib/etcd/default.etcd

On every node, check that etcd is reachable. It must be reachable; if it is not, check whether the firewall is still running.

    etcdctl --endpoint=http://10.12.0.22:2379 member list
    etcdctl --endpoint=http://10.12.0.22:2379 cluster-health
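Since etcd was just started inside screen, the first check can fail simply because the server is not listening yet. A generic retry wrapper, sketched here under the assumption that the checked command exits non-zero on failure, separates "not up yet" from "blocked by the firewall". The name `retry` is a hypothetical helper.

```shell
# Run a command up to <attempts> times, sleeping <delay> seconds between tries.
# Returns 0 as soon as the command succeeds, 1 if every attempt fails.
retry() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while :; do
    if "$@"; then return 0; fi
    if [ "$i" -ge "$attempts" ]; then return 1; fi
    i=$((i + 1))
    sleep "$delay"
  done
}

# Usage on each node:
#   retry 5 2 etcdctl --endpoint=http://10.12.0.22:2379 cluster-health \
#     || echo "etcd still unreachable, check the firewall"
```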

Start the k8s master node with kubeadm.

The pod IP range is set to 10.68.0.0/16 and the cluster IP (service) range to 10.96.0.0/16 (kubeadm's own default service range is 10.96.0.0/12). Run the following commands on the master node.

    cat << EOF >kubeadm_config.yaml
    apiVersion: kubeadm.k8s.io/v1alpha1
    kind: MasterConfiguration
    api:
      advertiseAddress: 10.12.0.18
      bindPort: 6443
    etcd:
      endpoints:
      - http://10.12.0.22:2379
    networking:
      dnsDomain: cluster.local
      serviceSubnet: 10.96.0.0/16
      podSubnet: 10.68.0.0/16
    kubernetesVersion: v1.7.2
    #token: <string>
    #tokenTTL: 0
    EOF
    ##
    kubeadm init --config kubeadm_config.yaml
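The pod range and the service range must not share addresses. As a worked check of the two values used here, the sketch below converts each CIDR to an integer interval and tests the intervals for overlap. All three function names are hypothetical helpers, and only IPv4 is handled.

```shell
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print "first last" integer bounds of a CIDR such as 10.68.0.0/16.
cidr_range() {
  local base="${1%/*}" bits="${1#*/}" start size
  size=$(( 1 << (32 - bits) ))
  # Integer division then multiplication aligns the base to the network boundary.
  start=$(( $(ip_to_int "$base") / size * size ))
  echo "$start $(( start + size - 1 ))"
}

# Succeed (exit 0) if the two CIDRs share at least one address.
cidrs_overlap() {
  local a b
  a=$(cidr_range "$1")
  b=$(cidr_range "$2")
  # a is "a_first a_last", b is "b_first b_last".
  [ "${a% *}" -le "${b#* }" ] && [ "${b% *}" -le "${a#* }" ]
}

# Check the podSubnet and serviceSubnet values from kubeadm_config.yaml.
if cidrs_overlap 10.68.0.0/16 10.96.0.0/16; then
  echo "podSubnet and serviceSubnet overlap"
else
  echo "podSubnet and serviceSubnet are disjoint"
fi
```

For the two ranges in this post the intervals are 172228608..172294143 and 174063616..174129151, so the script reports them as disjoint.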

After running kubeadm init, wait a few dozen seconds for the api-server, scheduler and controller-manager containers to start on the master, then check the master with the commands below.

Run the following commands on the master node

    rm -rf $HOME/.kube
    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config
    kubectl get cs -o wide --show-labels
    kubectl get nodes -o wide --show-labels

To join a node you need the token printed by kubeadm init. Run the following commands on each worker node

    systemctl start docker
    systemctl start kubelet
    kubeadm join --token *{6}.*{16} 10.12.0.18:6443 --skip-preflight-checks

On the master node, watch the nodes join. Because no network has been created yet, every master and worker node is NotReady and kube-dns is Pending.

    kubectl get nodes -o wide
    watch kubectl get all --all-namespaces -o wide
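Instead of reading the watch output by eye, the node list can be filtered for anything that is not yet Ready. This is a small sketch: `not_ready_nodes` is a hypothetical helper, and it assumes the default column order of `kubectl get nodes`, where STATUS is the second column.

```shell
# Read `kubectl get nodes --no-headers` output on stdin and print the names
# of nodes whose STATUS column is not exactly "Ready".
# Note: a combined status such as "Ready,SchedulingDisabled" is also printed.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Usage on the master:
#   kubectl get nodes --no-headers | not_ready_nodes
```

At this stage of the setup every node is expected to be listed; once the network is created the list should become empty.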

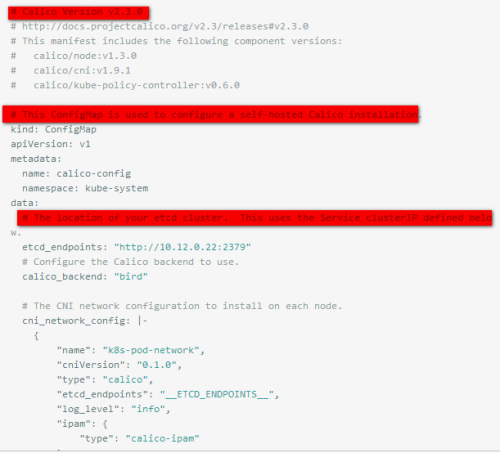

Changes made to calico.yaml

Removed the part that creates etcd, so that the external etcd is used

Changed CALICO_IPV4POOL_CIDR to 10.68.0.0/16
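The second change can also be scripted rather than edited by hand. The sketch below assumes GNU sed and assumes the manifest keeps the quoted value on the line directly after `- name: CALICO_IPV4POOL_CIDR`; `set_calico_pool_cidr` is a hypothetical helper name.

```shell
# Replace the quoted value that follows "- name: CALICO_IPV4POOL_CIDR" in a manifest.
# "#" is the sed delimiter because the CIDR itself contains "/".
set_calico_pool_cidr() {
  local file="$1" cidr="$2"
  sed -i "/name: CALICO_IPV4POOL_CIDR/{n;s#value: \".*\"#value: \"${cidr}\"#;}" "$file"
}

# Usage:
#   set_calico_pool_cidr calico.yaml 10.68.0.0/16
```

The new value should be the same range as podSubnet in kubeadm_config.yaml.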

calico.yaml is as follows:

    # Calico Version v2.3.0
    # http://docs.projectcalico.org/v2.3/releases#v2.3.0
    # This manifest includes the following component versions:
    #   calico/node:v1.3.0
    #   calico/cni:v1.9.1
    #   calico/kube-policy-controller:v0.6.0

    # This ConfigMap is used to configure a self-hosted Calico installation.
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: calico-config
      namespace: kube-system
    data:
      # The location of your etcd cluster. This uses the Service clusterIP defined below.
      etcd_endpoints: "http://10.12.0.22:2379"

      # Configure the Calico backend to use.
      calico_backend: "bird"

      # The CNI network configuration to install on each node.
      cni_network_config: |-
        {
            "name": "k8s-pod-network",
            "cniVersion": "0.1.0",
            "type": "calico",
            "etcd_endpoints": "__ETCD_ENDPOINTS__",
            "log_level": "info",
            "ipam": {
                "type": "calico-ipam"
            },
            "policy": {
                "type": "k8s",
                "k8s_api_root": "https://__KUBERNETES_SERVICE_HOST__:__KUBERNETES_SERVICE_PORT__",
                "k8s_auth_token": "__SERVICEACCOUNT_TOKEN__"
            },
            "kubernetes": {
                "kubeconfig": "/etc/cni/net.d/__KUBECONFIG_FILENAME__"
            }
        }

    ---

    # This manifest installs the calico/node container, as well
    # as the Calico CNI plugins and network config on
    # each master and worker node in a Kubernetes cluster.
    kind: DaemonSet
    apiVersion: extensions/v1beta1
    metadata:
      name: calico-node
      namespace: kube-system
      labels:
        k8s-app: calico-node
    spec:
      selector:
        matchLabels:
          k8s-app: calico-node
      template:
        metadata:
          labels:
            k8s-app: calico-node
          annotations:
            # Mark this pod as a critical add-on; when enabled, the critical add-on scheduler
            # reserves resources for critical add-on pods so that they can be rescheduled after
            # a failure. This annotation works in tandem with the toleration below.
            scheduler.alpha.kubernetes.io/critical-pod: ''
        spec:
          hostNetwork: true
          tolerations:
          - key: node-role.kubernetes.io/master
            effect: NoSchedule
          # Allow this pod to be rescheduled while the node is in "critical add-ons only" mode.
          # This, along with the annotation above marks this pod as a critical add-on.
          - key: CriticalAddonsOnly
            operator: Exists
          serviceAccountName: calico-cni-plugin
          containers:
            # Runs calico/node container on each Kubernetes node. This
            # container programs network policy and routes on each
            # host.
            - name: calico-node
              image: quay.io/calico/node:v1.3.0
              env:
                # The location of the Calico etcd cluster.
                - name: ETCD_ENDPOINTS
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: etcd_endpoints
                # Enable BGP. Disable to enforce policy only.
                - name: CALICO_NETWORKING_BACKEND
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: calico_backend
                # Disable file logging so `kubectl logs` works.
                - name: CALICO_DISABLE_FILE_LOGGING
                  value: "true"
                # Set Felix endpoint to host default action to ACCEPT.
                - name: FELIX_DEFAULTENDPOINTTOHOSTACTION
                  value: "ACCEPT"
                # Configure the IP Pool from which Pod IPs will be chosen.
                - name: CALICO_IPV4POOL_CIDR
                  value: "10.68.0.0/16"
                - name: CALICO_IPV4POOL_IPIP
                  value: "always"
                # Disable IPv6 on Kubernetes.
                - name: FELIX_IPV6SUPPORT
                  value: "false"
                # Set Felix logging to "info"
                - name: FELIX_LOGSEVERITYSCREEN
                  value: "info"
                # Auto-detect the BGP IP address.
                - name: IP
                  value: ""
              securityContext:
                privileged: true
              resources:
                requests:
                  cpu: 250m
              volumeMounts:
                - mountPath: /lib/modules
                  name: lib-modules
                  readOnly: true
                - mountPath: /var/run/calico
                  name: var-run-calico
                  readOnly: false
            # This container installs the Calico CNI binaries
            # and CNI network config file on each node.
            - name: install-cni
              image: quay.io/calico/cni:v1.9.1
              command: ["/install-cni.sh"]
              env:
                # The location of the Calico etcd cluster.
                - name: ETCD_ENDPOINTS
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: etcd_endpoints
                # The CNI network config to install on each node.
                - name: CNI_NETWORK_CONFIG
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: cni_network_config
              volumeMounts:
                - mountPath: /host/opt/cni/bin
                  name: cni-bin-dir
                - mountPath: /host/etc/cni/net.d
                  name: cni-net-dir
          volumes:
            # Used by calico/node.
            - name: lib-modules
              hostPath:
                path: /lib/modules
            - name: var-run-calico
              hostPath:
                path: /var/run/calico
            # Used to install CNI.
            - name: cni-bin-dir
              hostPath:
                path: /opt/cni/bin
            - name: cni-net-dir
              hostPath:
                path: /etc/cni/net.d

    ---

    # This manifest deploys the Calico policy controller on Kubernetes.
    # See https://github.com/projectcalico/k8s-policy
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: calico-policy-controller
      namespace: kube-system
      labels:
        k8s-app: calico-policy
    spec:
      # The policy controller can only have a single active instance.
      replicas: 1
      strategy:
        type: Recreate
      template:
        metadata:
          name: calico-policy-controller
          namespace: kube-system
          labels:
            k8s-app: calico-policy-controller
          annotations:
            # Mark this pod as a critical add-on; when enabled, the critical add-on scheduler
            # reserves resources for critical add-on pods so that they can be rescheduled after
            # a failure. This annotation works in tandem with the toleration below.
            scheduler.alpha.kubernetes.io/critical-pod: ''
        spec:
          # The policy controller must run in the host network namespace so that
          # it isn't governed by policy that would prevent it from working.
          hostNetwork: true
          tolerations:
          - key: node-role.kubernetes.io/master
            effect: NoSchedule
          # Allow this pod to be rescheduled while the node is in "critical add-ons only" mode.
          # This, along with the annotation above marks this pod as a critical add-on.
          - key: CriticalAddonsOnly
            operator: Exists
          serviceAccountName: calico-policy-controller
          containers:
            - name: calico-policy-controller
              image: quay.io/calico/kube-policy-controller:v0.6.0
              env:
                # The location of the Calico etcd cluster.
                - name: ETCD_ENDPOINTS
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: etcd_endpoints
                # The location of the Kubernetes API. Use the default Kubernetes
                # service for API access.
                - name: K8S_API
                  value: "https://kubernetes.default:443"
                # Since we're running in the host namespace and might not have KubeDNS
                # access, configure the container's /etc/hosts to resolve
                # kubernetes.default to the correct service clusterIP.
                - name: CONFIGURE_ETC_HOSTS
                  value: "true"

    ---

    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRoleBinding
    metadata:
      name: calico-cni-plugin
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: calico-cni-plugin
    subjects:
    - kind: ServiceAccount
      name: calico-cni-plugin
      namespace: kube-system

    ---

    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: calico-cni-plugin
      namespace: kube-system
    rules:
      - apiGroups: [""]
        resources:
          - pods
          - nodes
        verbs:
          - get

    ---

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: calico-cni-plugin
      namespace: kube-system

    ---

    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRoleBinding
    metadata:
      name: calico-policy-controller
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: calico-policy-controller
    subjects:
    - kind: ServiceAccount
      name: calico-policy-controller
      namespace: kube-system

    ---

    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: calico-policy-controller
      namespace: kube-system
    rules:
      - apiGroups:
        - ""
        - extensions
        resources:
          - pods
          - namespaces
          - networkpolicies
        verbs:
          - watch
          - list

    ---

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: calico-policy-controller
      namespace: kube-system

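Create the Calico cross-host network by running the following command on the master node

    kubectl apply -f calico.yaml

Watch for a pod named calico-node-**** to come up on every node. calico-policy-controller and kube-dns will come up as well. All of these pods live in the kube-system namespace.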

    >kubectl get all --all-namespaces
    NAMESPACE     NAME                                                 READY     STATUS    RESTARTS   AGE
    kube-system   po/calico-node-2gqf2                                 2/2       Running   0          19h
    kube-system   po/calico-node-fg8gh                                 2/2       Running   0          19h
    kube-system   po/calico-node-ksmrn                                 2/2       Running   0          19h
    kube-system   po/calico-policy-controller-1727037546-zp4lp         1/1       Running   0          19h
    kube-system   po/etcd-izuf6fb3vrfqnwbct6ivgwz                      1/1       Running   0          19h
    kube-system   po/kube-apiserver-izuf6fb3vrfqnwbct6ivgwz            1/1       Running   0          19h
    kube-system   po/kube-controller-manager-izuf6fb3vrfqnwbct6ivgwz   1/1       Running   0          19h
    kube-system   po/kube-dns-2425271678-3t4g6                         3/3       Running   0          19h
    kube-system   po/kube-proxy-6fg1l                                  1/1       Running   0          19h
    kube-system   po/kube-proxy-fdbt2                                  1/1       Running   0          19h
    kube-system   po/kube-proxy-lgf3z                                  1/1       Running   0          19h
    kube-system   po/kube-scheduler-izuf6fb3vrfqnwbct6ivgwz            1/1       Running   0          19h

    NAMESPACE     NAME             CLUSTER-IP   EXTERNAL-IP   PORT(S)         AGE
    default       svc/kubernetes   10.96.0.1    <none>        443/TCP         19h
    kube-system   svc/kube-dns     10.96.0.10   <none>        53/UDP,53/TCP   19h

    NAMESPACE     NAME                              DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    kube-system   deploy/calico-policy-controller   1         1         1            1           19h
    kube-system   deploy/kube-dns                   1         1         1            1           19h

    NAMESPACE     NAME                                     DESIRED   CURRENT   READY     AGE
    kube-system   rs/calico-policy-controller-1727037546   1         1         1         19h
    kube-system   rs/kube-dns-2425271678                   1         1         1         19h

Deploy the dashboard

    wget https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/kubernetes-dashboard.yaml
    kubectl create -f kubernetes-dashboard.yaml

Deploy heapster

    wget https://github.com/kubernetes/heapster/archive/v1.4.0.tar.gz
    tar -zxvf v1.4.0.tar.gz
    cd heapster-1.4.0/deploy/kube-config/influxdb
    kubectl create -f ./

Other commands

Force-delete a pod

    kubectl delete pod <podname> --namespace=<namespace> --grace-period=0 --force

Reset a node

    kubeadm reset
    systemctl stop kubelet;
    docker ps -aq | xargs docker rm -fv
    find /var/lib/kubelet | xargs -n 1 findmnt -n -t tmpfs -o TARGET -T | uniq | xargs -r umount -v;
    rm -rf /var/lib/kubelet /etc/kubernetes/ /var/lib/etcd
    systemctl start kubelet;

Access the dashboard (run on the master node)

    kubectl proxy --address=0.0.0.0 --port=8001 --accept-hosts='^.*'

or

    kubectl proxy --port=8011 --address=192.168.61.100 --accept-hosts='^192\.168\.61\.*'

Then open http://0.0.0.0:8001/ui
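The second accept-hosts pattern is looser than it looks: the trailing `\.*` means "zero or more dots", and nothing anchors the end. The sketch below tests candidate hosts with `grep -E`, on the assumption that kubectl applies the pattern as an unanchored regular expression in the same way. `host_matches` and the stricter pattern are illustrations, not part of the original setup.

```shell
# Hypothetical helper: succeed if host ($2) matches the extended regex ($1).
host_matches() {
  printf '%s\n' "$2" | grep -Eq -- "$1"
}

loose='^192\.168\.61\.*'          # pattern used in the command above
strict='^192\.168\.61\.[0-9]+$'   # assumed stricter alternative, for comparison

for host in 192.168.61.100 192.168.610.5; do
  for name in loose strict; do
    eval "pattern=\$$name"
    if host_matches "$pattern" "$host"; then
      echo "$name accepts $host"
    else
      echo "$name rejects $host"
    fi
  done
done
```

Under that assumption the loose pattern accepts both hosts, while the stricter one accepts only 192.168.61.100.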

Access the API with an authentication token

    APISERVER=$(kubectl config view | grep server | cut -f 2- -d ":" | tr -d " ")
    TOKEN=$(kubectl describe secret $(kubectl get secrets | grep default | cut -f1 -d ' ') | grep -E '^token' | cut -f2 -d':' | tr -d '\t')
    curl $APISERVER/api --header "Authorization: Bearer $TOKEN" --insecure

Let the master node take part in scheduling. By default the master does not take part in task scheduling.

    kubectl taint nodes --all node-role.kubernetes.io/master-

or

    kubectl taint nodes --all dedicated-

Kubernetes master before the isolation is removed (Annotations)

    Name:           izuf6fb3vrfqnwbct6ivgwz
    Role:
    Labels:         beta.kubernetes.io/arch=amd64
                    beta.kubernetes.io/os=linux
                    kubernetes.io/hostname=izuf6fb3vrfqnwbct6ivgwz
                    node-role.kubernetes.io/master=
    Annotations:    node.alpha.kubernetes.io/ttl=0
                    volumes.kubernetes.io/controller-managed-attach-detach=true

Kubernetes master after the isolation is removed (Annotations)

    Name:           izuf6fb3vrfqnwbct6ivgwz
    Role:
    Labels:         beta.kubernetes.io/arch=amd64
                    beta.kubernetes.io/os=linux
                    kubernetes.io/hostname=izuf6fb3vrfqnwbct6ivgwz
                    node-role.kubernetes.io/master=
    Annotations:    node.alpha.kubernetes.io/ttl=0
                    volumes.kubernetes.io/controller-managed-attach-detach=true
    Taints:         <none>

Summary: the setup has been completed and tested, but it still contains a mistake. Can those of you who have read through this document guess what it is?

This article comes from the "李世龍" blog. Please do not repost!
