爬去證件會的首次公開發行反饋意見並做詞頻分析

阿新 • • 發佈:2017-10-09

extract req roc object container 及其 嘉興 鶴壁 阿裏

利用國慶8天假期,從頭開始學爬蟲,現在分享一下自己項目過程。

技術思路:

1,使用scrapy爬去證監會反饋意見

- 分析網址特點,並利用scrapy shell測試選擇器

- 加載代理服務器:IP池

- 模擬瀏覽器:user-agent

- 編寫pipeitem,將數據寫入數據庫中

2,安裝並配置mysql

- 安裝pymysql

- 參考mysql手冊,建立數據庫以及表格

3,利用進行數據分析

- 使用對反饋意見進行整理

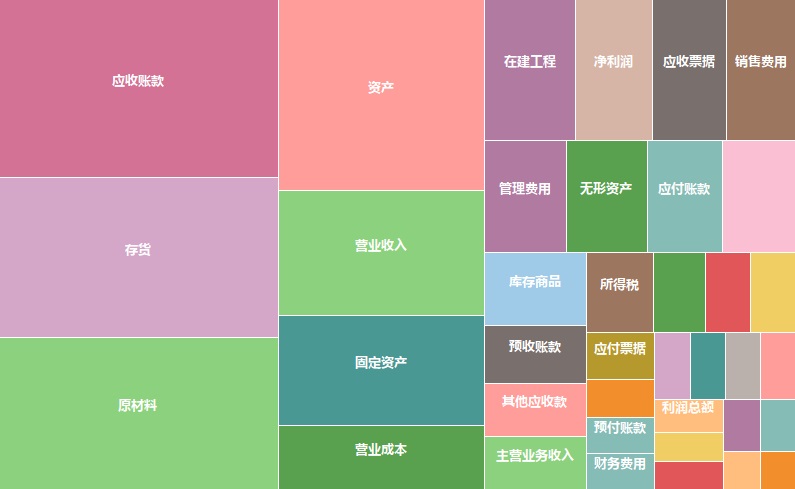

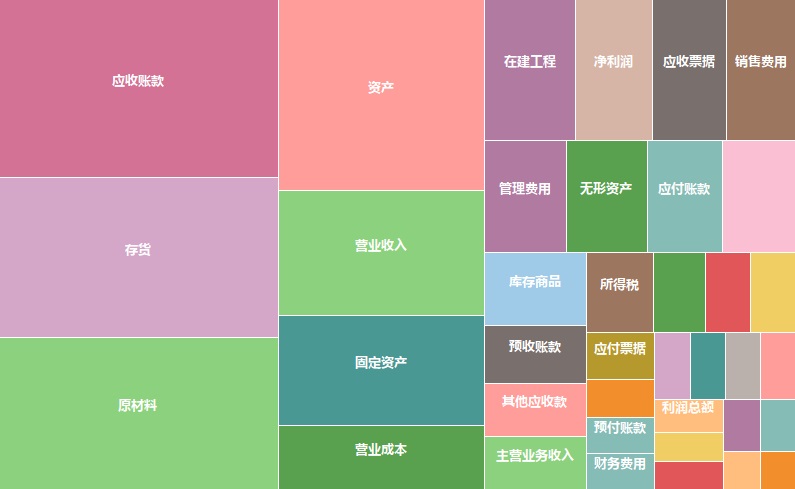

- 利用jieba庫進行分析,制作財務報表專用字典,獲取詞匯以及其頻率

- 使用pandas分析數據並作圖

- 使用tableau作圖

分析思路:

- 分析公司名字是否含有地域信息

- 分析反饋意見的主要焦點:財務與法律

核心代碼:

- 爬蟲核心代碼

# -*- coding: utf-8 -*- import scrapy from scrapy.selector import Selector from fkyj.items import FkyjItem import urllib.request from scrapy.http import HtmlResponse from scrapy.selector import HtmlXPathSelector def gen_url_indexpage():

#證監會的網站是通過javascript生成的,因此網址無法提取,必須是自己生成 pre= "http://www.csrc.gov.cn/pub/newsite/fxjgb/scgkfxfkyj/index" url_list = [] for i in range(25): if i ==0: url = pre+".html" url_list.append(url) else: url = pre+"_"+str(i)+".html" url_list.append(url) return url_list class Spider1Spider(scrapy.Spider): name= ‘spider1‘ allowed_domains = [‘http://www.csrc.gov.cn‘] start_urls = gen_url_indexpage() def parse(self, response): item = FkyjItem() page_lst = response.xpath(‘//ul[@id="myul"]/li/a/@href‘).extract() name_lst = response.xpath(‘//ul[@id="myul"]/li/a/@title‘).extract() date_lst= response.xpath(‘//ul[@id="myul"]/li/span/text()‘).extract() for i in range(len(name_lst)): item["name"] = name_lst[i] item["date"] = date_lst[i] url_page = "http://www.csrc.gov.cn/pub/newsite/fxjgb/scgkfxfkyj" +page_lst[i] pre_final = "http://www.csrc.gov.cn/pub/newsite/fxjgb/scgkfxfkyj/" + page_lst[i].split("/")[1] res = Selector(text= urllib.request.urlopen(url_page).read().decode("utf-8")) #給res裝上HtmlXPathSelector url_extract = res.xpath("//script").re(r‘<a href="(\./P\d+?\.docx?)">|<a href="(\./P\d+?\.pdf)">‘)[0][1:] url_final = pre_final+ url_extract print ("-"*10,url_final,"-"*10) item["content"] = "" try: file =urllib.request.urlopen(url_final).read() filepath = r"C:\\Users\\tc\\fkyj\\fkyj\\files\\" filetype = url_extract.split(".")[1] with open(filepath+item["name"]+"."+filetype,‘wb‘) as f: f.write(file) except urllib.request.HTTPError: item["content"] = "wrong:HTTPERROR" yield item

這裏不足之處在於沒有體現針對不同網站書寫不同代碼,建議建立不同callback函數

建議思路:

parse():正對初始網址

parse_page:針對導航頁

parse_item:提取公司名稱與日期

parse_doc:提取doc文檔

---------------------------------------------------------------------pipeitem代碼-------------------------------------------------------------

import pymysql

class FkyjPipeline(object):

def __init__(self):

#連接數據庫

self.con = pymysql.connect(host=‘localhost‘, port=3306, user=‘root‘, passwd="密碼",db="數據庫名字")

def process_item(self, item, spider):

name = item["name"]

date = item["date"]

content = item["content"]

self.con.query("Insert Into zjh_fkyj.fkyj(name,date_fk,content) Values(‘" + name + "‘,‘" + date + "‘,‘"+content+"‘)")

#必須要提交,否則沒用

self.con.commit()

return item

def close_spider(self):

#在運行時關閉數據庫

self.con.close()

2,分析用代碼--主要部分

In [9]:

import pandas as pdIn [10]:

data =pd.read_csv(r"C:\\Users\\tc\\fkyj\\fkyj.csv")In [11]:

data.columnsOut[11]:

Index([‘Unnamed: 0‘, ‘id‘, ‘name‘, ‘date‘, ‘content‘], dtype=‘object‘)In [11]:

data.drop(["Unnamed: 0",‘id‘],axis=1,inplace = True)In [12]:

def get_year_month(datetime): return "-".join(datetime.split("-")[:2])In [13]:

group_month_data = data.groupby(data["date"].apply(get_year_month)).count()In [25]:

get_year_month("2017-2-1")Out[25]:

‘2017-2‘In [6]:

%matplotlib

Using matplotlib backend: Qt5AggIn [49]:

group_month_data["name"].plot(kind="bar")Out[49]:

<matplotlib.axes._subplots.AxesSubplot at 0x14babc679b0>In [36]:

import matplotlib.pyplot as pltIn [38]:

from matplotlib import font_manager zh_font = font_manager.FontProperties(fname=r‘c:\windows\fonts\simsun.ttc‘, size=14)In [66]:

fig, ax = plt.subplots() width =0.35 ax.set_xticks(ticks=range(len(group_month_data))) plt.xticks(rotation=20) res = ax.bar(left = range(len(group_month_data)),height=group_month_data["name"]) ax.set_title("證監會反饋意見",fontproperties=zh_font) ax.set_ylabel("數量",fontproperties=zh_font) ax.set_xticklabels( i for i in (group_month_data.index.values)) plt.show()In [47]:

ax.set_xticklabels(group_month_data.index.values) plt.show()In [50]:

group_month_data.index.valuesOut[50]:

array([‘2016-10‘, ‘2016-11‘, ‘2016-12‘, ‘2017-01‘, ‘2017-02‘, ‘2017-03‘,

‘2017-04‘, ‘2017-05‘, ‘2017-06‘, ‘2017-07‘, ‘2017-08‘, ‘2017-09‘], dtype=object)

In [51]:

len(group_month_data)Out[51]:

12In [8]:

china_map = [("北京","|東城|西城|崇文|宣武|朝陽|豐臺|石景山|海澱|門頭溝|房山|通州|順義|昌平|大興|平谷|懷柔|密雲|延慶"), ("上海","|黃浦|盧灣|徐匯|長寧|靜安|普陀|閘北|虹口|楊浦|閔行|寶山|嘉定|浦東|金山|松江|青浦|南匯|奉賢|崇明"), ("天津","|和平|東麗|河東|西青|河西|津南|南開|北辰|河北|武清|紅撟|塘沽|漢沽|大港|寧河|靜海|寶坻|薊縣"), ("重慶","|萬州|涪陵|渝中|大渡口|江北|沙坪壩|九龍坡|南岸|北碚|萬盛|雙撟|渝北|巴南|黔江|長壽|綦江|潼南|銅梁|大足|榮昌|壁山|梁平|城口|豐都|墊江|武隆|忠縣|開縣|雲陽|奉節|巫山|巫溪|石柱|秀山|酉陽|彭水|江津|合川|永川|南川"), ("河北","|石家莊|邯鄲|邢臺|保定|張家口|承德|廊坊|唐山|秦皇島|滄州|衡水"), ("山西","|太原|大同|陽泉|長治|晉城|朔州|呂梁|忻州|晉中|臨汾|運城"), ("內蒙古","|呼和浩特|包頭|烏海|赤峰|呼倫貝爾盟|阿拉善盟|哲裏木盟|興安盟|烏蘭察布盟|錫林郭勒盟|巴彥淖爾盟|伊克昭盟"), ("遼寧","|沈陽|大連|鞍山|撫順|本溪|丹東|錦州|營口|阜新|遼陽|盤錦|鐵嶺|朝陽|葫蘆島"), ("吉林","|長春|吉林|四平|遼源|通化|白山|松原|白城|延邊"), ("黑龍江","|哈爾濱|齊齊哈爾|牡丹江|佳木斯|大慶|綏化|鶴崗|雞西|黑河|雙鴨山|伊春|七臺河|大興安嶺"), ("江蘇","|南京|鎮江|蘇州|南通|揚州|鹽城|徐州|連雲港|常州|無錫|宿遷|泰州|淮安"), ("浙江","|杭州|寧波|溫州|嘉興|湖州|紹興|金華|衢州|舟山|臺州|麗水"), ("安徽","|合肥|蕪湖|蚌埠|馬鞍山|淮北|銅陵|安慶|黃山|滁州|宿州|池州|淮南|巢湖|阜陽|六安|宣城|亳州"), ("福建","|福州|廈門|莆田|三明|泉州|漳州|南平|龍巖|寧德"), ("江西","|南昌市|景德鎮|九江|鷹潭|萍鄉|新餘|贛州|吉安|宜春|撫州|上饒"), ("山東","|濟南|青島|淄博|棗莊|東營|煙臺|濰坊|濟寧|泰安|威海|日照|萊蕪|臨沂|德州|聊城|濱州|菏澤"), ("河南","|鄭州|開封|洛陽|平頂山|安陽|鶴壁|新鄉|焦作|濮陽|許昌|漯河|三門峽|南陽|商丘|信陽|周口|駐馬店|濟源"), ("湖北","|武漢|宜昌|荊州|襄樊|黃石|荊門|黃岡|十堰|恩施|潛江|天門|仙桃|隨州|鹹寧|孝感|鄂州"), ("湖南","|長沙|常德|株洲|湘潭|衡陽|嶽陽|邵陽|益陽|婁底|懷化|郴州|永州|湘西|張家界"), ("廣東","|廣州|深圳|珠海|汕頭|東莞|中山|佛山|韶關|江門|湛江|茂名|肇慶|惠州|梅州|汕尾|河源|陽江|清遠|潮州|揭陽|雲浮"), ("廣西","|南寧|柳州|桂林|梧州|北海|防城港|欽州|貴港|玉林|南寧地區|柳州地區|賀州|百色|河池"), ("海南","|海口|三亞"), ("四川","|成都|綿陽|德陽|自貢|攀枝花|廣元|內江|樂山|南充|宜賓|廣安|達川|雅安|眉山|甘孜|涼山|瀘州"), ("貴州","|貴陽|六盤水|遵義|安順|銅仁|黔西南|畢節|黔東南|黔南"), ("雲南","|昆明|大理|曲靖|玉溪|昭通|楚雄|紅河|文山|思茅|西雙版納|保山|德宏|麗江|怒江|迪慶|臨滄"), ("西藏","|拉薩|日喀則|山南|林芝|昌都|阿裏|那曲"), ("陜西","|西安|寶雞|鹹陽|銅川|渭南|延安|榆林|漢中|安康|商洛"), ("甘肅","|蘭州|嘉峪關|金昌|白銀|天水|酒泉|張掖|武威|定西|隴南|平涼|慶陽|臨夏|甘南"), ("寧夏","|銀川|石嘴山|吳忠|固原"), ("青海","|西寧|海東|海南|海北|黃南|玉樹|果洛|海西"), ("新疆","|烏魯木齊|石河子|克拉瑪依|伊犁|巴音郭勒|昌吉|克孜勒蘇柯爾克孜|博爾塔拉|吐魯番|哈密|喀什|和田|阿克蘇"), ("香港",""), ("澳門",""), ("臺灣","|臺北|高雄|臺中|臺南|屏東|南投|雲林|新竹|彰化|苗栗|嘉義|花蓮|桃園|宜蘭|基隆|臺東|金門|馬祖|澎湖")] city_map = {} for i in china_map: if i != "澳門" or i != "香港": city_map[i[0]] = i[1].split("|")[1:] elif i == "澳門" or i == "香港": city_map[i[0]] = ""In [27]:

def get_province(name,con_loc = False): keys = city_map.keys() for j in keys: if j in name: province = j location = "province" break else: for k in city_map[j]: if k in name: province = j location = "city" break else: province = "unknow" location = "unknow" if con_loc: return (province,location) else: return province #count the name that contain the locationIn [31]:

data["province"] = data["name"].apply(get_province)In [13]:

data["name"][:5]Out[13]:

0 名臣健康用品股份有限公司首次公開發行股票申請文件反饋意見 1 浙江捷眾科技股份有限公司首次公開發行股票申請文件反饋意見 2 江蘇天智互聯科技股份有限公司創業板首次公開發行股票申請文件反饋意見 3 雲南神農農業產業集團股份有限公司首次公開發行股票申請文件反饋意見 4 浙江臺華新材料股份有限公司首次公開發行股票申請文件反饋意見 Name: name, dtype: objectIn [32]:

data["province"][:20]Out[32]:

0 unknow 1 浙江 2 江蘇 3 雲南 4 浙江 5 浙江 6 unknow 7 unknow 8 北京 9 unknow 10 江蘇 11 unknow 12 北京 13 unknow 14 unknow 15 四川 16 江蘇 17 unknow 18 unknow 19 unknow Name: province, dtype: objectIn [44]:

name_data = data.groupby(data["province"]).count()["name"] fig, ax = plt.subplots() width =0.35 ax.set_xticks(ticks=range(len(name_data))) plt.xticks(rotation=60) res = ax.bar(left = range(len(name_data)),height= name_data) ax.set_title("反饋意見--公司名稱是否含有地域信息",fontproperties=zh_font) ax.set_ylabel("數量",fontproperties=zh_font) ax.set_xticklabels( [i for i in name_data.index.values],fontproperties=zh_font) plt.show()In [15]:

import jiebaIn [16]:

jieba.load_userdict(r"C:\\ProgramData\\Anaconda3\\Lib\\site-packages\\jieba\\userdict.txt")

Building prefix dict from the default dictionary ... Loading model from cache C:\Users\tc\AppData\Local\Temp\jieba.cache Loading model cost 1.158 seconds. Prefix dict has been built succesfully.In [17]:

import re def remove_rn(data): return re.sub("[\\n\\r]+","",data) remove_rn("\r\n\r")Out[17]:

‘‘In [18]:

data["content"] = data["content"].apply(remove_rn)In [11]:

data["content"][:1]Out[11]:

0 名臣健康用品股份有限公司首次公開發行股票申請文件反饋意見\r\r\n\r\r\n\r\r\n... Name: content, dtype: objectIn [17]:

remove_rn("\r\n\r45463")Out[17]:

‘45463‘In [14]:

data["content"] = data["content"].astype(str)In [16]:

f1 = open(r"C:\Users\tc\Desktop\user_dict.txt",encoding ="utf-8") f2 = open(r"C:\Users\tc\Desktop\userdict.txt","w") for i in f1.readlines(): f2.write(i[:-1] + " 5 n\n") f1.close() f2.close()In [23]:

list(jieba.cut("hellotc") )Out[23]:

[‘hellotc‘]In [24]:

list(jieba.cut("我是唐誠的弟弟"))Out[24]:

[‘我‘, ‘是‘, ‘唐誠‘, ‘的‘, ‘弟弟‘]In [19]:

type(pd.Series(list( jieba.cut(data["content"][1]))).value_counts())Out[19]:

pandas.core.series.SeriesIn [ ]:

s = pd.Series([0 for i in len(data["content"])],index = ) for i in data["content"]: pd.Series(list( jieba.cut(data["content"][1]))).value_counts()In [7]:

s1 = pd.Series(range(3),index = ["a","b","c"]) s2 = pd.Series(range(3),index = ["d","b","c"]) s1.add(s2,fill_value=0)Out[7]:

a 0.0 b 2.0 c 4.0 d 0.0 dtype: float64In [8]:

def add_series(s1,s2): r = {} s1 = s1.to_dict() s2 = s2.to_dict() common = set(s1.keys()).intersection(s2.keys()) for i in common: r[i] = s1[i]+s2[i] for j in set(s1.keys()).difference(s2.keys()): r[j] = s1[j] for k in set(s2.keys()).difference(s1.keys()): r[k] = s2[k] return pd.Series(r)In [21]:

series_list = [] for i in data["content"]: series_list.append(pd.Series(list( jieba.cut(i))).value_counts())In [23]:

start = pd.Series([0,0],index = [‘a‘,‘b‘]) for i in series_list: start = add_series(start,i)In [25]:

start[:4]Out[25]:

2

599

\t 195

30

dtype: int64

In [26]:

start.sort_values() start.to_csv(r"C:\\Users\\tc\\fkyj\\rank_word.csv")3,分析結果--部分

爬去證件會的首次公開發行反饋意見並做詞頻分析