A Simple Python Implementation of the BP Algorithm for a DNN

阿新 • Published: 2017-10-19


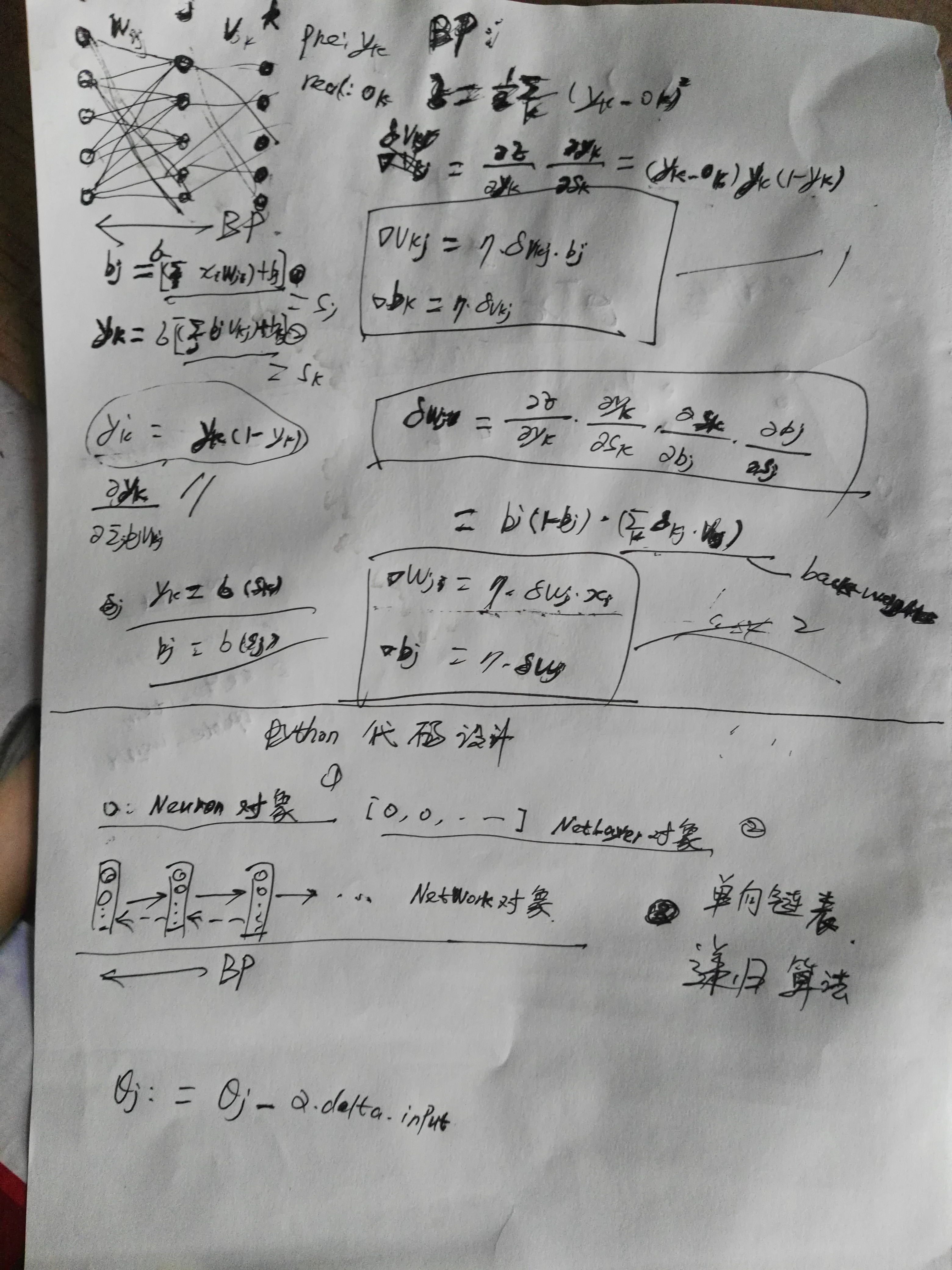

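The BP (backpropagation) algorithm is the foundation of neural networks and also their most important part. Because gradients can vanish or explode while the error is propagated backward, the loss function may need to be adjusted. LSTM uses three sigmoid-based gates to address the memory problem, and a TensorFlow implementation still needs gradient clipping to prevent exploding gradients. The BPTT algorithm for RNNs has the same problem, so memory degrades sharply once a sequence runs past about 5 steps. LSTM holds up to 30-odd steps, but much longer sequences do not work well either. If longer memory or more context is required, the LSTM outputs of several sentences can be combined and used as the input to another LSTM. Below is a Python implementation of the BP algorithm for a plain DNN, with sigmoid as the activation function.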
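
The gradient clipping mentioned above can be illustrated with a minimal NumPy sketch. It is not taken from TensorFlow or from the code in this post; the helper name `clip_by_global_norm` and the example gradients are assumptions made for illustration (TensorFlow offers a function of the same name with a similar purpose). The idea is to rescale all gradients together whenever their joint L2 norm exceeds a threshold, so the update direction is preserved while its size stays bounded.

```python
import numpy as np

def clip_by_global_norm(grads, max_norm):
    """Scale a list of gradient arrays so their joint L2 norm is at most max_norm.

    Hypothetical helper for illustration; returns the clipped gradients and
    the norm measured before clipping.
    """
    global_norm = np.sqrt(sum(np.sum(g ** 2) for g in grads))
    if global_norm <= max_norm:
        # Already small enough: return copies unchanged
        return [g.copy() for g in grads], global_norm
    scale = max_norm / global_norm
    return [g * scale for g in grads], global_norm

# Made-up gradients for two parameter arrays
grads = [np.array([3.0, 4.0]), np.array([[12.0]])]
clipped, norm_before = clip_by_global_norm(grads, max_norm=5.0)
norm_after = np.sqrt(sum(np.sum(g ** 2) for g in clipped))
print(norm_before, norm_after)
```

Clipping by the joint norm, rather than clipping each element separately, keeps the relative proportions between gradient components intact.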

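The handwriting in the figure is a bit rough, so please bear with it; I am used to drawing by hand. What matters most is the idea behind the code: each layer has several nodes, and layers are connected in one direction only (a feedforward network), so the data structure can be designed as a singly linked list. The implementation is a typical recursion: the call recurses down to the last layer, after which each layer feeds its back_weights to the layer before it, until the derivation is complete. The code, which has not been optimized, comes in two parts: a test script and NeuralNetWork.py.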
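
For readers who cannot make out the handwritten derivation, the standard delta rule for sigmoid units with a squared-error loss can be written as follows. The notation is an assumption introduced here, not the author's: $x_i$ is the $i$-th input of a unit, $o$ its output, $t_k$ the target for output unit $k$, $\eta$ the learning rate and $\alpha$ the momentum coefficient. The sum in the hidden-unit line is the quantity that a layer passes back to the previous layer as back_weights.

```latex
\begin{aligned}
o_j &= \sigma\Big(\sum_i w_{ji}\, x_i\Big), \qquad \sigma(z) = \frac{1}{1 + e^{-z}}, \qquad \sigma'(z) = \sigma(z)\,\bigl(1 - \sigma(z)\bigr) \\
\delta_k &= (t_k - o_k)\, o_k\, (1 - o_k) && \text{(output unit } k\text{)} \\
\delta_j &= o_j\, (1 - o_j) \sum_k w_{kj}\, \delta_k && \text{(hidden unit } j\text{)} \\
\Delta w_{ji}^{(n)} &= \eta\, \delta_j\, x_i + \alpha\, \Delta w_{ji}^{(n-1)}, \qquad w_{ji} \leftarrow w_{ji} + \Delta w_{ji}^{(n)}
\end{aligned}
```

Because each $\delta_j$ of a hidden layer depends on the $\delta_k$ of the layer after it, the deltas have to be computed from the last layer backward, which is what the recursion over the linked list of layers achieves.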

Test code:

import numpy as np

import NeuralNetWork as nw

if __name__ == '__main__':
    print("test neural network")
    # Eight one-hot vectors, one per row
    data = np.array([[1, 0, 0, 0, 0, 0, 0, 0],
                     [0, 1, 0, 0, 0, 0, 0, 0],
                     [0, 0, 1, 0, 0, 0, 0, 0],
                     [0, 0, 0, 1, 0, 0, 0, 0],
                     [0, 0, 0, 0, 1, 0, 0, 0],
                     [0, 0, 0, 0, 0, 1, 0, 0],
                     [0, 0, 0, 0, 0, 0, 1, 0],
                     [0, 0, 0, 0, 0, 0, 0, 1]])
    np.set_printoptions(precision=3, suppress=True)
    # Train ten independent 8-20-8 networks and print what each one predicts
    for i in range(10):
        network = nw.NeuralNetWork([8, 20, 8])
        # Make the target output equal to the input
        network.fit(data, data, learning_rate=0.1, epochs=150)
        print("\n\n", i, "result")
        for item in data:
            print(item, network.predict(item))
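
The test script prints raw output vectors, which have to be compared by eye. One way to summarize whether a network reproduces its one-hot input is to check that the largest output sits at the position of the 1 in the input. The sketch below assumes a hypothetical helper, `reconstruction_accuracy`, which is not part of the original code; it accepts any `predict` callable, and `identity_predict` is only a stand-in so the example runs on its own.

```python
import numpy as np

def reconstruction_accuracy(predict, data):
    """Fraction of rows whose largest predicted output is where the input's 1 is.

    Hypothetical helper for illustration; `predict` maps one input row to one output row.
    """
    hits = sum(int(np.argmax(predict(row)) == np.argmax(row)) for row in data)
    return hits / len(data)

# Stand-in for network.predict, used only to make this sketch self-contained
def identity_predict(x):
    return np.asarray(x, dtype=float)

data = np.eye(8)
accuracy = reconstruction_accuracy(identity_predict, data)
print(accuracy)
```

With the network from the test script, the call would be `reconstruction_accuracy(network.predict, data)`.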

# NeuralNetWork.py
# encoding: utf-8

import numpy as np


def logistic(inX):
    # Sigmoid activation
    return 1 / (1 + np.exp(-inX))


def logistic_derivative(x):
    # Derivative of the sigmoid, evaluated at the pre-activation value x
    return logistic(x) * (1 - logistic(x))

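class Neuron:
    '''
    A single neuron. Each unit has the following: 1. input; 2. output; 3. back_weight; 4. deltas_item; 5. weights.
    Each neuron updates its own weights; the neurons of one layer together form that layer's weight matrix.
    '''

    def __init__(self, len_input):
        # Initial weights: small random values (< 0.1)
        self.weights = np.random.random(len_input) * 0.1
        # Input of the current instance
        self.input = np.ones(len_input)
        # Output passed on to the next layer
        self.output = 1.0
        # Error term (delta)
        self.deltas_item = 0.0
        # Previous weight increment, kept for the momentum term
        self.last_weight_add = 0

    def calculate_output(self, x):
        # Forward pass: sigmoid of the weighted sum of the inputs
        self.input = x
        self.output = logistic(np.dot(self.weights, self.input))
        return self.output

    def get_back_weight(self):
        # Error contribution sent back to each input of this neuron
        return self.weights * self.deltas_item

    def update_weight(self, target=0, back_weight=0, learning_rate=0.1, layer="OUTPUT"):
        # Update the weights.
        # The sigmoid derivative is taken at the weighted sum (pre-activation),
        # not at the raw input vector, so deltas_item is a scalar.
        derivative = logistic_derivative(np.dot(self.weights, self.input))
        if layer == "OUTPUT":
            self.deltas_item = (target - self.output) * derivative
        elif layer == "HIDDEN":
            self.deltas_item = back_weight * derivative
        # Gradient step plus a momentum term (coefficient 0.9)
        delta_weight = self.input * self.deltas_item * learning_rate + 0.9 * self.last_weight_add
        self.weights += delta_weight
        self.last_weight_add = delta_weight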

class NetLayer:
    '''
    Wraps one network layer and manages its list of neurons
    '''

    def __init__(self, len_node, in_count):
        '''
        :param len_node: number of neurons in this layer
        :param in_count: number of inputs to this layer
        '''
        # Neurons of this layer
        self.neurons = [Neuron(in_count) for _ in range(len_node)]
        # Reference to the next layer, which makes the recursion easy
        self.next_layer = None

    def calculate_output(self, inX):
        # Forward pass: compute this layer's output, then recurse into the next layer
        output = np.array([node.calculate_output(inX) for node in self.neurons])
        if self.next_layer is not None:
            return self.next_layer.calculate_output(output)
        return output

    def get_back_weight(self):
        # Sum the neurons' error contributions: one value per input of this layer
        return sum([node.get_back_weight() for node in self.neurons])

    def update_weight(self, learning_rate, target):
        # Recurse down to the last layer first, then update on the way back
        layer = "OUTPUT"
        back_weight = np.zeros(len(self.neurons))
        if self.next_layer is not None:
            back_weight = self.next_layer.update_weight(learning_rate, target)
            layer = "HIDDEN"
        for i, node in enumerate(self.neurons):
            target_item = 0 if len(target) <= i else target[i]
            node.update_weight(target=target_item, back_weight=back_weight[i], learning_rate=learning_rate, layer=layer)
        # Error fed back to the previous layer (computed with the weights just updated)
        return self.get_back_weight()

class NeuralNetWork:

    def __init__(self, layers):
        self.layers = []
        self.construct_network(layers)

    def construct_network(self, layers):
        # Build one NetLayer per entry after the first (the first entry is the input size)
        # and chain the layers into a singly linked list
        last_layer = None
        for i, layer in enumerate(layers):
            if i == 0:
                continue
            cur_layer = NetLayer(layer, layers[i - 1])
            self.layers.append(cur_layer)
            if last_layer is not None:
                last_layer.next_layer = cur_layer
            last_layer = cur_layer

    def fit(self, x_train, y_train, learning_rate=0.1, epochs=100000, shuffle=False):
        '''
        Train the network; by default the samples are visited in order
        Option 1: train in the order of the training data
        Option 2: visit the samples in random order
        :param x_train: input data
        :param y_train: target output data
        :param learning_rate: learning rate
        :param epochs: number of passes over the training data
        :param shuffle: visit the samples in random order
        '''
        indices = np.arange(len(x_train))
        for _ in range(epochs):
            if shuffle:
                np.random.shuffle(indices)
            for i in indices:
                # Forward pass, then a recursive backward pass starting at the first layer
                self.layers[0].calculate_output(x_train[i])
                self.layers[0].update_weight(learning_rate, y_train[i])

    def predict(self, x):
        return self.layers[0].calculate_output(x)
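
The delta term of a sigmoid neuron depends on the derivative of the sigmoid at the weighted sum, which equals output * (1 - output). A finite-difference check is a common way to confirm that such a formula is a real gradient. The sketch below is independent of the classes above and assumes a squared-error loss of 0.5 * (target - output)^2 for a single neuron; the function names `loss`, `analytic_gradient` and `numeric_gradient` are introduced here only for illustration.

```python
import numpy as np

def logistic(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss(weights, x, target):
    """Squared error 0.5 * (target - output)^2 of one sigmoid neuron."""
    output = logistic(np.dot(weights, x))
    return 0.5 * (target - output) ** 2

def analytic_gradient(weights, x, target):
    """dLoss/dw = -(target - output) * output * (1 - output) * x."""
    output = logistic(np.dot(weights, x))
    delta = (target - output) * output * (1.0 - output)
    return -delta * x

def numeric_gradient(weights, x, target, eps=1e-6):
    """Central finite differences, one weight at a time."""
    grad = np.zeros_like(weights)
    for i in range(len(weights)):
        step = np.zeros_like(weights)
        step[i] = eps
        grad[i] = (loss(weights + step, x, target) - loss(weights - step, x, target)) / (2 * eps)
    return grad

# Arbitrary test point; the seed only makes the run repeatable
rng = np.random.default_rng(0)
weights = rng.normal(size=4)
x = rng.normal(size=4)
target = 1.0

max_diff = np.max(np.abs(analytic_gradient(weights, x, target)
                         - numeric_gradient(weights, x, target)))
print(max_diff)
```

Adding learning_rate * delta * input to the weights, as the neuron's update does, moves them against this gradient, so the two formulas describe the same step.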
