Caffe框架原始碼剖析(4)—卷積層基類BaseConvolutionLayer

阿新 • • 發佈:2018-10-31

資料層DataLayer正向傳導的目標層是卷積層ConvolutionLayer。卷積層的是用一系列的權重濾波核與輸入影象進行卷積,具體實現是通過將影象展開成向量,作用矩陣乘法實現卷積。

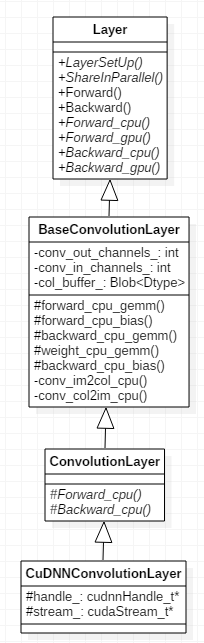

同樣,首先看一下卷積層的類圖。

BaseConvolutionLayer類是所有卷積層的基類,它負責初始化濾波核、將影象轉成向量,以及封裝了BLAS庫的矩陣運算函式。Yangqing Jia在Convolution in Caffe: a memo 一文中說明了卷積的實現方式和優缺點:優點是速度快,缺點也很明顯,記憶體開銷大。也還有Alex Krizhevsky等人更好的實現方式,但是由於時間和精力原因並沒有採用,畢竟是不想寫博士論文意外創作的

ConvolutionLayer類是卷積層的例項類,如果系統支援cuDNN,則例項類為CuDNNConvolutionLayer。提醒一下,在CUDA計算能力3.0及以上的GPU上才支援cuDNN,這裡CUDA GPUs 可以看到GPU的計算能力。如果當前GPU不支援cuDNN,Caffe又是預設開啟cuDNN的,那麼執行時構造CuDNNConvolutionLayer物件例項時將報錯。這時需要在CommonSettings.props中將<UseCuDNN>標籤改為false,或者在工程屬性“C/C++”->“前處理器”中將USE_CUDNN

template <typename Dtype> class BaseConvolutionLayer : public Layer<Dtype> { public: explicit BaseConvolutionLayer(const LayerParameter& param) : Layer<Dtype>(param) {} virtual void LayerSetUp(const vector<Blob<Dtype>*>& bottom, const vector<Blob<Dtype>*>& top); virtual void Reshape(const vector<Blob<Dtype>*>& bottom, const vector<Blob<Dtype>*>& top); virtual inline int MinBottomBlobs() const { return 1; } virtual inline int MinTopBlobs() const { return 1; } virtual inline bool EqualNumBottomTopBlobs() const { return true; } protected: // 一系列BLAS輔助函式 void forward_cpu_gemm(const Dtype* input, const Dtype* weights, Dtype* output, bool skip_im2col = false); void forward_cpu_bias(Dtype* output, const Dtype* bias); void backward_cpu_gemm(const Dtype* input, const Dtype* weights, Dtype* output); void weight_cpu_gemm(const Dtype* input, const Dtype* output, Dtype* weights); void backward_cpu_bias(Dtype* bias, const Dtype* input); #ifndef CPU_ONLY void forward_gpu_gemm(const Dtype* col_input, const Dtype* weights, Dtype* output, bool skip_im2col = false); void forward_gpu_bias(Dtype* output, const Dtype* bias); void backward_gpu_gemm(const Dtype* input, const Dtype* weights, Dtype* col_output); void weight_gpu_gemm(const Dtype* col_input, const Dtype* output, Dtype* weights); void backward_gpu_bias(Dtype* bias, const Dtype* input); #endif /// @brief The spatial dimensions of the input. inline int input_shape(int i) { return (*bottom_shape_)[channel_axis_ + i]; } // 純虛擬函式,用來識別是卷積(return false)還是反捲積(return true) virtual bool reverse_dimensions() = 0; // 純虛擬函式,計算卷積後輸出尺寸虛擬函式 virtual void compute_output_shape() = 0; /// 卷積核尺寸 Blob<int> kernel_shape_; /// 卷積核平移步幅 Blob<int> stride_; /// 影象補齊畫素數 Blob<int> pad_; /// 影象膨脹畫素數 Blob<int> dilation_; /// @brief The spatial dimensions of the convolution input. Blob<int> conv_input_shape_; /// @brief The spatial dimensions of the col_buffer. vector<int> col_buffer_shape_; /// @brief The spatial dimensions of the output. vector<int> output_shape_; const vector<int>* bottom_shape_; int num_spatial_axes_; int bottom_dim_; int top_dim_; int channel_axis_; int num_; int channels_; // 通道數 int group_; // 組數 int out_spatial_dim_; int weight_offset_; // 權重offset,此處為25*20 = 500 int num_output_; // 輸出數 bool bias_term_; // 是否包含偏置項 bool is_1x1_; // 是否為1x1模式 bool force_nd_im2col_; private: // 將影象轉成列向量 inline void conv_im2col_cpu(const Dtype* data, Dtype* col_buff) { if (!force_nd_im2col_ && num_spatial_axes_ == 2) { im2col_cpu(data, conv_in_channels_, conv_input_shape_.cpu_data()[1], conv_input_shape_.cpu_data()[2], kernel_shape_.cpu_data()[0], kernel_shape_.cpu_data()[1], pad_.cpu_data()[0], pad_.cpu_data()[1], stride_.cpu_data()[0], stride_.cpu_data()[1], dilation_.cpu_data()[0], dilation_.cpu_data()[1], col_buff); } else { im2col_nd_cpu(data, num_spatial_axes_, conv_input_shape_.cpu_data(), col_buffer_shape_.data(), kernel_shape_.cpu_data(), pad_.cpu_data(), stride_.cpu_data(), dilation_.cpu_data(), col_buff); } } inline void conv_col2im_cpu(const Dtype* col_buff, Dtype* data) { if (!force_nd_im2col_ && num_spatial_axes_ == 2) { col2im_cpu(col_buff, conv_in_channels_, conv_input_shape_.cpu_data()[1], conv_input_shape_.cpu_data()[2], kernel_shape_.cpu_data()[0], kernel_shape_.cpu_data()[1], pad_.cpu_data()[0], pad_.cpu_data()[1], stride_.cpu_data()[0], stride_.cpu_data()[1], dilation_.cpu_data()[0], dilation_.cpu_data()[1], data); } else { col2im_nd_cpu(col_buff, num_spatial_axes_, conv_input_shape_.cpu_data(), col_buffer_shape_.data(), kernel_shape_.cpu_data(), pad_.cpu_data(), stride_.cpu_data(), dilation_.cpu_data(), data); } } #ifndef CPU_ONLY inline void conv_im2col_gpu(const Dtype* data, Dtype* col_buff) { if (!force_nd_im2col_ && num_spatial_axes_ == 2) { im2col_gpu(data, conv_in_channels_, conv_input_shape_.cpu_data()[1], conv_input_shape_.cpu_data()[2], kernel_shape_.cpu_data()[0], kernel_shape_.cpu_data()[1], pad_.cpu_data()[0], pad_.cpu_data()[1], stride_.cpu_data()[0], stride_.cpu_data()[1], dilation_.cpu_data()[0], dilation_.cpu_data()[1], col_buff); } else { im2col_nd_gpu(data, num_spatial_axes_, num_kernels_im2col_, conv_input_shape_.gpu_data(), col_buffer_.gpu_shape(), kernel_shape_.gpu_data(), pad_.gpu_data(), stride_.gpu_data(), dilation_.gpu_data(), col_buff); } } inline void conv_col2im_gpu(const Dtype* col_buff, Dtype* data) { if (!force_nd_im2col_ && num_spatial_axes_ == 2) { col2im_gpu(col_buff, conv_in_channels_, conv_input_shape_.cpu_data()[1], conv_input_shape_.cpu_data()[2], kernel_shape_.cpu_data()[0], kernel_shape_.cpu_data()[1], pad_.cpu_data()[0], pad_.cpu_data()[1], stride_.cpu_data()[0], stride_.cpu_data()[1], dilation_.cpu_data()[0], dilation_.cpu_data()[1], data); } else { col2im_nd_gpu(col_buff, num_spatial_axes_, num_kernels_col2im_, conv_input_shape_.gpu_data(), col_buffer_.gpu_shape(), kernel_shape_.gpu_data(), pad_.gpu_data(), stride_.gpu_data(), dilation_.gpu_data(), data); } } #endif int num_kernels_im2col_; int num_kernels_col2im_; int conv_out_channels_; // 卷積操作輸出通道數 int conv_in_channels_; // 卷積操作輸入通道數 int conv_out_spatial_dim_; int kernel_dim_; // 卷積核的尺寸(例如5x5卷積核,kernel_dim_為25) int col_offset_; int output_offset_; Blob<Dtype> col_buffer_; Blob<Dtype> bias_multiplier_; };

base_conv_layer.cpp檔案較長

template <typename Dtype>

void BaseConvolutionLayer<Dtype>::LayerSetUp(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top) {

// 設定濾波核尺寸等引數

ConvolutionParameter conv_param = this->layer_param_.convolution_param();

force_nd_im2col_ = conv_param.force_nd_im2col();

channel_axis_ = bottom[0]->CanonicalAxisIndex(conv_param.axis());

const int first_spatial_axis = channel_axis_ + 1;

const int num_axes = bottom[0]->num_axes();

num_spatial_axes_ = num_axes - first_spatial_axis;

CHECK_GE(num_spatial_axes_, 0);

vector<int> bottom_dim_blob_shape(1, num_spatial_axes_ + 1);

vector<int> spatial_dim_blob_shape(1, std::max(num_spatial_axes_, 1));

// 設定卷積核維度

kernel_shape_.Reshape(spatial_dim_blob_shape);

int* kernel_shape_data = kernel_shape_.mutable_cpu_data();

if (conv_param.has_kernel_h() || conv_param.has_kernel_w()) {

CHECK_EQ(num_spatial_axes_, 2)

<< "kernel_h & kernel_w can only be used for 2D convolution.";

CHECK_EQ(0, conv_param.kernel_size_size())

<< "Either kernel_size or kernel_h/w should be specified; not both.";

kernel_shape_data[0] = conv_param.kernel_h();

kernel_shape_data[1] = conv_param.kernel_w();

} else {

const int num_kernel_dims = conv_param.kernel_size_size();

CHECK(num_kernel_dims == 1 || num_kernel_dims == num_spatial_axes_)

<< "kernel_size must be specified once, or once per spatial dimension "

<< "(kernel_size specified " << num_kernel_dims << " times; "

<< num_spatial_axes_ << " spatial dims).";

for (int i = 0; i < num_spatial_axes_; ++i) {

kernel_shape_data[i] =

conv_param.kernel_size((num_kernel_dims == 1) ? 0 : i);

}

}

for (int i = 0; i < num_spatial_axes_; ++i) {

CHECK_GT(kernel_shape_data[i], 0) << "Filter dimensions must be nonzero.";

}

// 設定卷積核平移步幅

stride_.Reshape(spatial_dim_blob_shape);

int* stride_data = stride_.mutable_cpu_data();

if (conv_param.has_stride_h() || conv_param.has_stride_w()) {

CHECK_EQ(num_spatial_axes_, 2)

<< "stride_h & stride_w can only be used for 2D convolution.";

CHECK_EQ(0, conv_param.stride_size())

<< "Either stride or stride_h/w should be specified; not both.";

stride_data[0] = conv_param.stride_h();

stride_data[1] = conv_param.stride_w();

} else {

const int num_stride_dims = conv_param.stride_size();

CHECK(num_stride_dims == 0 || num_stride_dims == 1 ||

num_stride_dims == num_spatial_axes_)

<< "stride must be specified once, or once per spatial dimension "

<< "(stride specified " << num_stride_dims << " times; "

<< num_spatial_axes_ << " spatial dims).";

const int kDefaultStride = 1;

for (int i = 0; i < num_spatial_axes_; ++i) {

stride_data[i] = (num_stride_dims == 0) ? kDefaultStride :

conv_param.stride((num_stride_dims == 1) ? 0 : i);

CHECK_GT(stride_data[i], 0) << "Stride dimensions must be nonzero.";

}

}

// 設定影象補齊畫素數

pad_.Reshape(spatial_dim_blob_shape);

int* pad_data = pad_.mutable_cpu_data();

if (conv_param.has_pad_h() || conv_param.has_pad_w()) {

CHECK_EQ(num_spatial_axes_, 2)

<< "pad_h & pad_w can only be used for 2D convolution.";

CHECK_EQ(0, conv_param.pad_size())

<< "Either pad or pad_h/w should be specified; not both.";

pad_data[0] = conv_param.pad_h();

pad_data[1] = conv_param.pad_w();

} else {

const int num_pad_dims = conv_param.pad_size();

CHECK(num_pad_dims == 0 || num_pad_dims == 1 ||

num_pad_dims == num_spatial_axes_)

<< "pad must be specified once, or once per spatial dimension "

<< "(pad specified " << num_pad_dims << " times; "

<< num_spatial_axes_ << " spatial dims).";

const int kDefaultPad = 0;

for (int i = 0; i < num_spatial_axes_; ++i) {

pad_data[i] = (num_pad_dims == 0) ? kDefaultPad :

conv_param.pad((num_pad_dims == 1) ? 0 : i);

}

}

// 設定影象膨脹畫素數

dilation_.Reshape(spatial_dim_blob_shape);

int* dilation_data = dilation_.mutable_cpu_data();

const int num_dilation_dims = conv_param.dilation_size();

CHECK(num_dilation_dims == 0 || num_dilation_dims == 1 ||

num_dilation_dims == num_spatial_axes_)

<< "dilation must be specified once, or once per spatial dimension "

<< "(dilation specified " << num_dilation_dims << " times; "

<< num_spatial_axes_ << " spatial dims).";

const int kDefaultDilation = 1;

for (int i = 0; i < num_spatial_axes_; ++i) {

dilation_data[i] = (num_dilation_dims == 0) ? kDefaultDilation :

conv_param.dilation((num_dilation_dims == 1) ? 0 : i);

}

// 只有對於尺寸1x1、平移步幅為1、影象不補齊的卷積核才將標誌位1x1置為true

is_1x1_ = true;

for (int i = 0; i < num_spatial_axes_; ++i) {

is_1x1_ &=

kernel_shape_data[i] == 1 && stride_data[i] == 1 && pad_data[i] == 0;

if (!is_1x1_) { break; }

}

// 配置通道數

channels_ = bottom[0]->shape(channel_axis_);

num_output_ = this->layer_param_.convolution_param().num_output();

CHECK_GT(num_output_, 0);

group_ = this->layer_param_.convolution_param().group();

CHECK_EQ(channels_ % group_, 0);

CHECK_EQ(num_output_ % group_, 0)

<< "Number of output should be multiples of group.";

if (reverse_dimensions()) {

conv_out_channels_ = channels_;

conv_in_channels_ = num_output_;

} else {

conv_out_channels_ = num_output_; // 設定卷積操作輸出通道數

conv_in_channels_ = channels_; // 設定卷積操作輸入通道數

}

// 處理權重引數和偏置引數

// - blobs_[0] holds the filter weights

// - blobs_[1] holds the biases (optional)

vector<int> weight_shape(2);

weight_shape[0] = conv_out_channels_;

weight_shape[1] = conv_in_channels_ / group_;

for (int i = 0; i < num_spatial_axes_; ++i) {

weight_shape.push_back(kernel_shape_data[i]);

}

// 從配置中讀取是否包含偏置項

bias_term_ = this->layer_param_.convolution_param().bias_term();

vector<int> bias_shape(bias_term_, num_output_);

if (this->blobs_.size() > 0) {

CHECK_EQ(1 + bias_term_, this->blobs_.size())

<< "Incorrect number of weight blobs.";

if (weight_shape != this->blobs_[0]->shape()) {

Blob<Dtype> weight_shaped_blob(weight_shape);

LOG(FATAL) << "Incorrect weight shape: expected shape "

<< weight_shaped_blob.shape_string() << "; instead, shape was "

<< this->blobs_[0]->shape_string();

}

if (bias_term_ && bias_shape != this->blobs_[1]->shape()) {

Blob<Dtype> bias_shaped_blob(bias_shape);

LOG(FATAL) << "Incorrect bias shape: expected shape "

<< bias_shaped_blob.shape_string() << "; instead, shape was "

<< this->blobs_[1]->shape_string();

}

LOG(INFO) << "Skipping parameter initialization";

} else {

if (bias_term_) {

this->blobs_.resize(2); // 如果包含偏置項,則blobs_為兩項

} else {

this->blobs_.resize(1); // 如果不包含偏置項,則blobs_只有一項(權重項)

}

// 初始化和填充權重

// output channels x input channels per-group x kernel height x kernel width

this->blobs_[0].reset(new Blob<Dtype>(weight_shape));

shared_ptr<Filler<Dtype> > weight_filler(GetFiller<Dtype>(

this->layer_param_.convolution_param().weight_filler()));

// 使用隨機數填充Xavier濾波核

weight_filler->Fill(this->blobs_[0].get());

// 如果啟用偏置,則初始化偏置濾波核

if (bias_term_) {

this->blobs_[1].reset(new Blob<Dtype>(bias_shape));

shared_ptr<Filler<Dtype> > bias_filler(GetFiller<Dtype>(

this->layer_param_.convolution_param().bias_filler()));

// 使用0填充偏置濾波核

bias_filler->Fill(this->blobs_[1].get());

}

}

kernel_dim_ = this->blobs_[0]->count(1);

// 計算權重offset

weight_offset_ = conv_out_channels_ * kernel_dim_ / group_;

// Propagate gradients to the parameters (as directed by backward pass).

this->param_propagate_down_.resize(this->blobs_.size(), true);

}

// 正向傳導矩陣運算函式

template <typename Dtype>

void BaseConvolutionLayer<Dtype>::forward_cpu_gemm(const Dtype* input,

const Dtype* weights, Dtype* output, bool skip_im2col) {

// 先將影象轉為列向量

const Dtype* col_buff = input;

if (!is_1x1_) {

if (!skip_im2col) {

conv_im2col_cpu(input, col_buffer_.mutable_cpu_data());

}

col_buff = col_buffer_.cpu_data();

}

// 矩陣乘法實現卷積運算

for (int g = 0; g < group_; ++g) {

caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, conv_out_channels_ /

group_, conv_out_spatial_dim_, kernel_dim_,

(Dtype)1., weights + weight_offset_ * g, col_buff + col_offset_ * g,

(Dtype)0., output + output_offset_ * g);

}

}

// CPU正向傳導偏置(在上一步卷積計算結果上加上偏置)

template <typename Dtype>

void BaseConvolutionLayer<Dtype>::forward_cpu_bias(Dtype* output,

const Dtype* bias) {

caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, num_output_,

out_spatial_dim_, 1, (Dtype)1., bias, bias_multiplier_.cpu_data(),

(Dtype)1., output);

}

// CPU反向傳導

template <typename Dtype>

void BaseConvolutionLayer<Dtype>::backward_cpu_gemm(const Dtype* output,

const Dtype* weights, Dtype* input) {

Dtype* col_buff = col_buffer_.mutable_cpu_data();

if (is_1x1_) {

col_buff = input;

}

for (int g = 0; g < group_; ++g) {

caffe_cpu_gemm<Dtype>(CblasTrans, CblasNoTrans, kernel_dim_,

conv_out_spatial_dim_, conv_out_channels_ / group_,

(Dtype)1., weights + weight_offset_ * g, output + output_offset_ * g,

(Dtype)0., col_buff + col_offset_ * g);

}

if (!is_1x1_) {

conv_col2im_cpu(col_buff, input);

}

}

// CPU計算權重的偏導

template <typename Dtype>

void BaseConvolutionLayer<Dtype>::weight_cpu_gemm(const Dtype* input,

const Dtype* output, Dtype* weights) {

const Dtype* col_buff = input;

if (!is_1x1_) {

conv_im2col_cpu(input, col_buffer_.mutable_cpu_data());

col_buff = col_buffer_.cpu_data();

}

for (int g = 0; g < group_; ++g) {

caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasTrans, conv_out_channels_ / group_,

kernel_dim_, conv_out_spatial_dim_,

(Dtype)1., output + output_offset_ * g, col_buff + col_offset_ * g,

(Dtype)1., weights + weight_offset_ * g);

}

}

// CPU反向傳導偏置項

template <typename Dtype>

void BaseConvolutionLayer<Dtype>::backward_cpu_bias(Dtype* bias,

const Dtype* input) {

caffe_cpu_gemv<Dtype>(CblasNoTrans, num_output_, out_spatial_dim_, 1.,

input, bias_multiplier_.cpu_data(), 1., bias);

}

#ifndef CPU_ONLY

// GPU正向傳導

template <typename Dtype>

void BaseConvolutionLayer<Dtype>::forward_gpu_gemm(const Dtype* input,

const Dtype* weights, Dtype* output, bool skip_im2col) {

const Dtype* col_buff = input;

if (!is_1x1_) {

if (!skip_im2col) {

conv_im2col_gpu(input, col_buffer_.mutable_gpu_data());

}

col_buff = col_buffer_.gpu_data();

}

for (int g = 0; g < group_; ++g) {

caffe_gpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, conv_out_channels_ /

group_, conv_out_spatial_dim_, kernel_dim_,

(Dtype)1., weights + weight_offset_ * g, col_buff + col_offset_ * g,

(Dtype)0., output + output_offset_ * g);

}

}

// GPU正向傳導偏置項

template <typename Dtype>

void BaseConvolutionLayer<Dtype>::forward_gpu_bias(Dtype* output,

const Dtype* bias) {

caffe_gpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, num_output_,

out_spatial_dim_, 1, (Dtype)1., bias, bias_multiplier_.gpu_data(),

(Dtype)1., output);

}

// GPU計算資料項偏導

template <typename Dtype>

void BaseConvolutionLayer<Dtype>::backward_gpu_gemm(const Dtype* output,

const Dtype* weights, Dtype* input) {

Dtype* col_buff = col_buffer_.mutable_gpu_data();

if (is_1x1_) {

col_buff = input;

}

for (int g = 0; g < group_; ++g) {

caffe_gpu_gemm<Dtype>(CblasTrans, CblasNoTrans, kernel_dim_,

conv_out_spatial_dim_, conv_out_channels_ / group_,

(Dtype)1., weights + weight_offset_ * g, output + output_offset_ * g,

(Dtype)0., col_buff + col_offset_ * g);

}

if (!is_1x1_) {

conv_col2im_gpu(col_buff, input);

}

}

// GPU計算權重的偏導

template <typename Dtype>

void BaseConvolutionLayer<Dtype>::weight_gpu_gemm(const Dtype* input,

const Dtype* output, Dtype* weights) {

const Dtype* col_buff = input;

if (!is_1x1_) {

conv_im2col_gpu(input, col_buffer_.mutable_gpu_data());

col_buff = col_buffer_.gpu_data();

}

for (int g = 0; g < group_; ++g) {

caffe_gpu_gemm<Dtype>(CblasNoTrans, CblasTrans, conv_out_channels_ / group_,

kernel_dim_, conv_out_spatial_dim_,

(Dtype)1., output + output_offset_ * g, col_buff + col_offset_ * g,

(Dtype)1., weights + weight_offset_ * g);

}

}

// GPU反向傳導偏置項

template <typename Dtype>

void BaseConvolutionLayer<Dtype>::backward_gpu_bias(Dtype* bias,

const Dtype* input) {

caffe_gpu_gemv<Dtype>(CblasNoTrans, num_output_, out_spatial_dim_, 1.,

input, bias_multiplier_.gpu_data(), 1., bias);

}