Remote submission of Hadoop shell commands

阿新 • Published: 2018-11-02


I. How remote submission of Hadoop shell commands works

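In many scenarios today, Hadoop shell commands are run interactively through a Linux shell. That is painful both for remote operation and for developers who are used to working in Windows or through a web interface.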

The implementation of CommandExecutor.java, in the src\test\org\apache\hadoop\cli\util directory of the Hadoop distribution, may offer some inspiration.

The following excerpt shows how it executes a hadoop dfsadmin command:

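```java
// Excerpt from CommandExecutor.java (the method signature is not shown in the original).
// exitCode, cmdExecuted, lastException, commandOutput and getCommandAsArgs are declared elsewhere in that class.
exitCode = 0;

// Collect everything the command prints to stdout/stderr in memory
ByteArrayOutputStream bao = new ByteArrayOutputStream();
PrintStream origOut = System.out;
PrintStream origErr = System.err;
System.setOut(new PrintStream(bao));
System.setErr(new PrintStream(bao));

DFSAdmin shell = new DFSAdmin();
String[] args = getCommandAsArgs(cmd, "NAMENODE", namenode);
cmdExecuted = cmd;

try {
    ToolRunner.run(shell, args);
} catch (Exception e) {
    e.printStackTrace();
    lastException = e;
    exitCode = -1;
} finally {
    // Restore the original streams whether or not the command failed
    System.setOut(origOut);
    System.setErr(origErr);
}

commandOutput = bao.toString();
return exitCode;
```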
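The redirect-and-restore pattern in this excerpt can be tried without a Hadoop cluster. The sketch below is a hypothetical helper (the names `OutputCapture`, `Captured` and `capture` are not from Hadoop) that runs any `Callable<Integer>` with `System.out` and `System.err` pointed at a `ByteArrayOutputStream`, and returns the exit code together with the captured text. It assumes Java 10 or later for the `Charset` overloads. With Hadoop on the classpath, the task could be `() -> ToolRunner.run(new DFSAdmin(), args)`, since `ToolRunner.run` returns the tool's exit code.

```java
import java.io.ByteArrayOutputStream;
import java.io.PrintStream;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.Callable;

public class OutputCapture {

    /** Exit code plus everything printed while the task ran. */
    public static final class Captured {
        public final int exitCode;
        public final String output;

        private Captured(int exitCode, String output) {
            this.exitCode = exitCode;
            this.output = output;
        }
    }

    /**
     * Runs the task with System.out and System.err redirected into memory.
     * synchronized: setOut/setErr change JVM-wide state, so two callers of this
     * helper (for example two servlet requests) never capture at the same time.
     */
    public static synchronized Captured capture(Callable<Integer> task) {
        ByteArrayOutputStream bao = new ByteArrayOutputStream();
        PrintStream sink = new PrintStream(bao, true, StandardCharsets.UTF_8);
        PrintStream origOut = System.out;
        PrintStream origErr = System.err;
        System.setOut(sink);
        System.setErr(sink);
        int exitCode;
        try {
            exitCode = task.call();
        } catch (Exception e) {
            e.printStackTrace();    // still redirected here, so the trace is captured
            exitCode = -1;
        } finally {
            System.setOut(origOut); // always restore, even after a failure
            System.setErr(origErr);
        }
        sink.flush();
        return new Captured(exitCode, bao.toString(StandardCharsets.UTF_8));
    }

    public static void main(String[] args) {
        // Stand-in task that writes to both streams
        Captured c = capture(() -> {
            System.out.println("line on stdout");
            System.err.println("line on stderr");
            return 0;
        });
        System.out.println("exit code: " + c.exitCode);
        System.out.print(c.output);
    }
}
```

Unlike the excerpt, the helper keeps the exit code returned by the task instead of discarding it, and the lock only serializes callers that go through this helper; other code writing to `System.out` at the same moment would still be captured.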

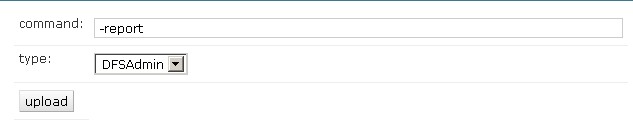

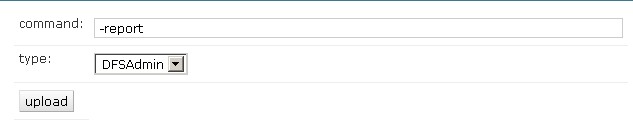

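At the start, System.setOut and System.setErr redirect the application's standard output and standard error into a ByteArrayOutputStream. After the DFSAdmin shell is created, ToolRunner.run executes it with the command arguments in args. Once the call completes, standard output and standard error are restored to their original streams.

The DFSAdmin object corresponds to the Hadoop shell command "hadoop dfsadmin", MRAdmin to "hadoop mradmin", and FsShell to "hadoop fs".

II. Using embedded Jetty, develop a Jetty servlet that supports basic remote submission of Hadoop shell commands over the web.

1. Design an HTML page that submits the command parameters to the servlet, as shown in the figure below: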

2. Write the servlet, as follows:

```java
// Body of the servlet's request handler (e.g. doPost); the method signature is not shown in the original.
// Assumed imports (Hadoop 1.x): org.apache.hadoop.hdfs.tools.DFSAdmin, org.apache.hadoop.mapred.tools.MRAdmin,
// org.apache.hadoop.fs.FsShell, org.apache.hadoop.util.Tool, org.apache.hadoop.util.ToolRunner, java.io.*
response.setContentType("text/html");
PrintWriter writer = response.getWriter();

String type = request.getParameter("select_type");
String command = request.getParameter("txt_command");
if (type == null) {
    writer.write("select is null");
    return;
}
if (command == null) {
    writer.write("command is null");
    return;
}

// Map the selected type to the class behind the matching "hadoop ..." command
Tool shell;
if (type.equals("1")) {
    shell = new DFSAdmin();   // hadoop dfsadmin
} else if (type.equals("2")) {
    shell = new MRAdmin();    // hadoop mradmin
} else if (type.equals("3")) {
    shell = new FsShell();    // hadoop fs
} else {
    writer.write("unknown select_type: " + type);
    return;
}
// Split on runs of whitespace so repeated spaces do not produce empty arguments
String[] items = command.trim().split("\\s+");

// Redirect only after a valid tool was chosen, so the streams are always restored.
// Note: System.setOut/setErr are JVM-wide, so concurrent requests can mix their output.
ByteArrayOutputStream bao = new ByteArrayOutputStream();
PrintStream origOut = System.out;
PrintStream origErr = System.err;
System.setOut(new PrintStream(bao));
System.setErr(new PrintStream(bao));
try {
    ToolRunner.run(shell, items);
} catch (Exception e) {
    e.printStackTrace();
} finally {
    System.setOut(origOut);
    System.setErr(origErr);
}

// Escape HTML special characters before turning newlines into <br>
String output = bao.toString()
        .replace("&", "&amp;").replace("<", "&lt;").replace(">", "&gt;");
writer.write(output.replaceAll("\n", "<br>"));
```

The program above handles simple dfsadmin, mradmin and fs Hadoop shell commands and writes the result back to the client as a string. An excerpt of the output from a simple -report test:

```
Configured Capacity: 7633977958400 (6.94 TB)
Present Capacity: 7216439562240 (6.56 TB)
DFS Remaining: 6889407496192 (6.27 TB)
DFS Used: 327032066048 (304.57 GB)
DFS Used%: 4.53%
Under replicated blocks: 42
Blocks with corrupt replicas: 0
Missing blocks: 0
-------------------------------------------------
Datanodes available: 4 (4 total, 0 dead)

Name: 10.16.45.226:50010
Decommission Status : Normal
Configured Capacity: 1909535137792 (1.74 TB)
DFS Used: 103113867264 (96.03 GB)
Non DFS Used: 97985679360 (91.26 GB)
DFS Remaining: 1708435591168(1.55 TB)
DFS Used%: 5.4%
DFS Remaining%: 89.47%
Last contact: Wed Mar 21 14:37:24 CST 2012
```
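The numbers in this report are internally consistent, which helps when reading it. The sketch below re-derives some of them from the raw byte counts shown above. The relationships it checks (Present Capacity = DFS Used + DFS Remaining; cluster DFS Used% taken against Present Capacity; datanode percentages taken against that node's Configured Capacity; sizes in 1024-based units) are inferred from this sample output, not quoted from Hadoop's source. `ReportCheck`, `pct` and `size` are hypothetical names.

```java
import java.util.Locale;

public class ReportCheck {
    static final double GB = 1024.0 * 1024 * 1024;   // 2^30 bytes
    static final double TB = GB * 1024;              // 2^40 bytes

    /** part/whole as a percentage with two decimals. */
    static String pct(long part, long whole) {
        return String.format(Locale.ROOT, "%.2f", 100.0 * part / whole);
    }

    /** Byte count expressed in the given binary unit, two decimals. */
    static String size(long bytes, double unit) {
        return String.format(Locale.ROOT, "%.2f", bytes / unit);
    }

    public static void main(String[] args) {
        // Cluster totals copied from the -report excerpt
        long configured = 7633977958400L;
        long present    = 7216439562240L;
        long remaining  = 6889407496192L;
        long used       = 327032066048L;
        System.out.println("used + remaining == present: " + (used + remaining == present));
        System.out.println("DFS Used% (of present): " + pct(used, present));
        System.out.println("configured: " + size(configured, TB) + " TB, used: " + size(used, GB) + " GB");

        // Datanode 10.16.45.226:50010
        long nodeCapacity  = 1909535137792L;
        long nodeUsed      = 103113867264L;
        long nodeNonDfs    = 97985679360L;
        long nodeRemaining = 1708435591168L;
        System.out.println("used + non-DFS + remaining == capacity: "
                + (nodeUsed + nodeNonDfs + nodeRemaining == nodeCapacity));
        System.out.println("DFS Used%: " + pct(nodeUsed, nodeCapacity)
                + ", DFS Remaining%: " + pct(nodeRemaining, nodeCapacity));
    }
}
```

Both sums come out exactly equal, and the computed values (4.53, 6.94 TB, 304.57 GB, 5.40, 89.47) match the report; the report prints the datanode figure as 5.4% because it drops the trailing zero.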


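The code above is built on embedded Jetty. At run time it also needs the Hadoop dependency jars and the Hadoop configuration files on the classpath, as in the following script: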

```bash
# Hadoop configuration directory (core-site.xml and related files)
CLASSPATH="/usr/local/hadoop/conf"

# add the Hadoop core jar
for f in $HADOOP_HOME/hadoop-core-*.jar; do
  CLASSPATH=${CLASSPATH}:$f;
done

# add libs to CLASSPATH
for f in $HADOOP_HOME/lib/*.jar; do
  CLASSPATH=${CLASSPATH}:$f;
done

for f in $HADOOP_HOME/lib/jsp-2.1/*.jar; do
  CLASSPATH=${CLASSPATH}:$f;
done

echo $CLASSPATH

# start the embedded Jetty server (main class RunServer)
java -cp "$CLASSPATH:executor.jar" RunServer
```
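Hadoop's `Configuration` class loads `core-site.xml` (and, depending on the component, other `*-site.xml` files) as classpath resources, which is why the script puts the conf directory on the CLASSPATH. If it is missing, the tools silently fall back to default settings. A minimal startup check, assuming the server is launched with the script above, could look for those files before serving requests. `ClasspathCheck` and `locate` are hypothetical names, and the list of site files is an assumption based on a typical Hadoop 1.x conf directory.

```java
import java.net.URL;

public class ClasspathCheck {

    /** Where the named resource was found on the classpath, or null if it is missing. */
    static URL locate(String resource) {
        ClassLoader cl = Thread.currentThread().getContextClassLoader();
        if (cl == null) {
            cl = ClasspathCheck.class.getClassLoader();
        }
        return cl.getResource(resource);
    }

    public static void main(String[] args) {
        // Assumed list: site files usually found in a Hadoop 1.x conf directory
        String[] siteFiles = {"core-site.xml", "hdfs-site.xml", "mapred-site.xml"};
        boolean allFound = true;
        for (String name : siteFiles) {
            URL url = locate(name);
            System.out.println(name + " -> " + (url == null ? "NOT on classpath" : url));
            allFound &= url != null;
        }
        if (!allFound) {
            System.out.println("Check that the Hadoop conf directory is on the CLASSPATH.");
        }
    }
}
```

Calling `locate` from `RunServer` before starting Jetty would turn a silent misconfiguration into a visible message.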