Real-time video streaming with Android (Java) and C++ (OpenCV 3.4)

阿新 • Published: 2018-11-05

I need to build a project that streams video in real time and performs image tracking and recognition on it.

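The project first establishes a connection over TCP and then streams the video over UDP. Each frame is compressed independently as a JPEG (intra-frame compression) to speed up transmission.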

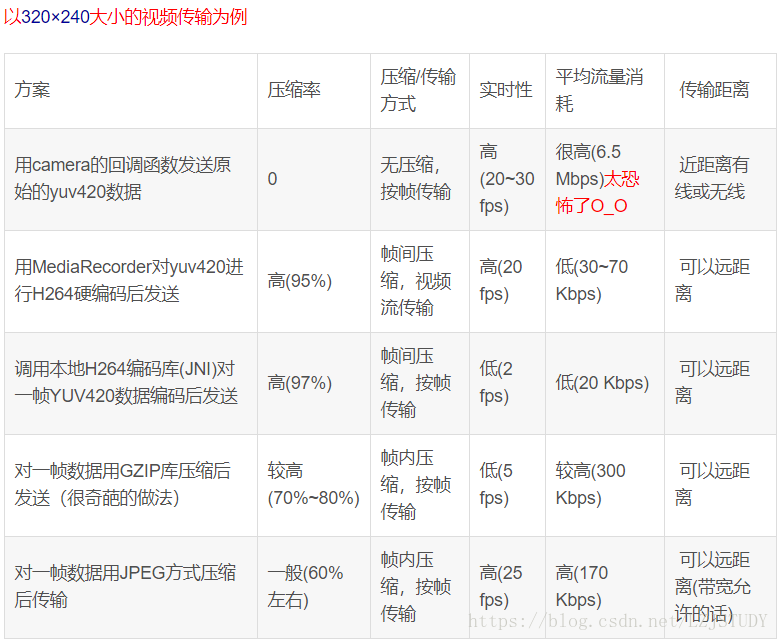

The figure is reposted from another source.

I went with the fifth scheme: it trades bandwidth (not a concern on a LAN) for a higher FPS.
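To get a rough feel for that trade-off, the sketch below estimates the bandwidth a JPEG-per-frame stream needs. The 20 ms send interval comes from the Android code in this post; the 40,000-byte frame size is an assumed figure for illustration only, and `StreamBudget` is a hypothetical helper name.

```java
/** Hypothetical helper for estimating the cost of a JPEG-per-frame stream. */
public final class StreamBudget {
    private StreamBudget() {}

    /** Upper bound on frames per second when at most one frame is sent every intervalMs. */
    public static int maxFps(int intervalMs) {
        return 1000 / intervalMs;
    }

    /** Payload bandwidth in bits per second, ignoring IP/UDP header overhead. */
    public static long bitsPerSecond(int frameBytes, int fps) {
        return (long) frameBytes * 8L * fps;
    }
}
```

With a 20 ms interval the cap is 50 FPS; at the assumed 40,000 bytes per frame that works out to 16 Mbit/s, which is comfortable on a LAN but heavy on mobile data.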

The Android code is adapted from someone else's implementation (see the linked reference).

    import android.graphics.ImageFormat;
    import android.graphics.Rect;
    import android.graphics.YuvImage;
    import android.hardware.Camera;
    import android.os.Bundle;
    import android.support.v7.app.AppCompatActivity; // androidx.appcompat.app.AppCompatActivity on AndroidX
    import android.util.Log;
    import android.view.SurfaceHolder;
    import android.view.SurfaceView;

    import java.io.ByteArrayOutputStream;
    import java.io.IOException;
    import java.net.DatagramPacket;
    import java.net.DatagramSocket;
    import java.net.InetAddress;
    import java.net.Socket;
    import java.util.LinkedList;

    public class MainActivity extends AppCompatActivity
            implements SurfaceHolder.Callback, Camera.PreviewCallback {
        private final int TCP_PORT = 6666;                  // TCP port
        private final int UDP_PORT = 7777;                  // UDP port
        private final String SERVER_IP = "152.18.10.101";   // server IP address
        private Camera mCamera;
        private Camera.Size previewSize;
        private volatile DatagramSocket packetSenderSocket; // socket used to send frames
        private long lastSendTime;                          // time the last frame was sent
        private volatile InetAddress serverAddress;         // server address
        private final LinkedList<DatagramPacket> packetList = new LinkedList<>();

        @Override
        protected void onCreate(Bundle savedInstanceState) {
            super.onCreate(savedInstanceState);
            setContentView(R.layout.activity_main);
            final SurfaceView surfaceView = (SurfaceView) findViewById(R.id.surfaceView);
            SurfaceHolder holder = surfaceView.getHolder();
            holder.setKeepScreenOn(true);
            holder.addCallback(this);
            // Start the connection thread, which contacts the server
            new ConnectThread().start();
        }

        @Override
        public void surfaceCreated(SurfaceHolder holder) {
            if (mCamera == null) {
                mCamera = Camera.open(); // open the rear camera
                Camera.Parameters parameters = mCamera.getParameters();
                // The preview size must be one of parameters.getSupportedPreviewSizes(),
                // otherwise setParameters() throws an exception
                parameters.setPreviewSize(960, 544);
                previewSize = parameters.getPreviewSize();
                // Continuous autofocus
                parameters.setFocusMode(Camera.Parameters.FOCUS_MODE_CONTINUOUS_PICTURE);
                mCamera.setParameters(parameters);
                mCamera.cancelAutoFocus();
                // Register the preview display and the frame callback
                try {
                    mCamera.setPreviewDisplay(holder);
                    mCamera.setPreviewCallback(this);
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
            // Start the preview
            mCamera.startPreview();
        }

        @Override
        public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {
        }

        @Override
        public void surfaceDestroyed(SurfaceHolder holder) {
            // Release the camera
            if (mCamera != null) {
                mCamera.setPreviewCallback(null);
                mCamera.stopPreview();
                mCamera.release();
                mCamera = null;
            }
        }

        /**
         * Called with the image data of every preview frame.
         */
        @Override
        public void onPreviewFrame(byte[] data, Camera camera) {
            long curTime = System.currentTimeMillis();
            // Send at most one frame every 20 ms
            if (serverAddress != null && curTime - lastSendTime >= 20) {
                lastSendTime = curTime;
                // Convert NV21 to JPEG
                YuvImage image = new YuvImage(data, ImageFormat.NV21,
                        previewSize.width, previewSize.height, null);
                ByteArrayOutputStream bos = new ByteArrayOutputStream();
                image.compressToJpeg(new Rect(0, 0, previewSize.width, previewSize.height), 40, bos);
                int packMaxSize = 65500; // stay below the maximum UDP datagram size
                byte[] imgBytes = bos.toByteArray();
                Log.i("tag", imgBytes.length + "");
                // Build the packet; a frame larger than packMaxSize is truncated,
                // so the receiver gets an incomplete JPEG
                DatagramPacket packet = new DatagramPacket(imgBytes,
                        Math.min(imgBytes.length, packMaxSize), serverAddress, UDP_PORT);
                // Append to the tail of the queue
                synchronized (packetList) {
                    packetList.addLast(packet);
                }
            }
        }

        /**
         * Connection thread.
         */
        private class ConnectThread extends Thread {
            @Override
            public void run() {
                super.run();
                try {
                    // Open the connection
                    packetSenderSocket = new DatagramSocket();
                    Socket socket = new Socket(SERVER_IP, TCP_PORT);
                    serverAddress = socket.getInetAddress();
                    // Close the TCP connection
                    socket.close();
                    // Start the thread that sends image packets
                    new ImgSendThread().start();
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
        }

        /**
         * Thread that sends image packets.
         */
        private class ImgSendThread extends Thread {
            @Override
            public void run() {
                super.run();
                while (packetSenderSocket != null) {
                    DatagramPacket packet;
                    synchronized (packetList) {
                        // Take the head of the queue, or null if it is empty
                        packet = packetList.pollFirst();
                    }
                    if (packet == null) {
                        // Nothing to send: sleep outside the lock so the camera callback is not blocked
                        try {
                            Thread.sleep(10);
                        } catch (InterruptedException e) {
                            e.printStackTrace();
                        }
                        continue;
                    }
                    try {
                        packetSenderSocket.send(packet);
                    } catch (IOException e) {
                        e.printStackTrace();
                    }
                }
            }
        }
    }
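The send queue above grows without bound if the network is slower than the camera, and the sender polls it with `sleep`. One alternative, sketched here under the assumption that dropping stale frames is acceptable for live video, is a small bounded queue that discards the oldest frame when full. `LatestFrameQueue` and its method names are hypothetical and not part of the referenced code.

```java
import java.util.ArrayDeque;

/**
 * Hypothetical bounded frame queue: when full, the oldest frame is dropped
 * so the sender always works on recent frames.
 */
public final class LatestFrameQueue {
    private final ArrayDeque<byte[]> frames = new ArrayDeque<>();
    private final int capacity;

    public LatestFrameQueue(int capacity) {
        if (capacity < 1) throw new IllegalArgumentException("capacity must be >= 1");
        this.capacity = capacity;
    }

    /** Called by the camera callback. Never blocks; returns true if an old frame was dropped. */
    public synchronized boolean put(byte[] frame) {
        boolean dropped = false;
        if (frames.size() == capacity) {
            frames.pollFirst();   // discard the oldest frame
            dropped = true;
        }
        frames.addLast(frame);
        notifyAll();              // wake a waiting sender thread
        return dropped;
    }

    /** Called by the sender thread. Waits up to timeoutMs; returns null on timeout or interrupt. */
    public synchronized byte[] take(long timeoutMs) {
        long deadline = System.currentTimeMillis() + timeoutMs;
        while (frames.isEmpty()) {
            long remaining = deadline - System.currentTimeMillis();
            if (remaining <= 0) return null;
            try {
                wait(remaining);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt flag
                return null;
            }
        }
        return frames.pollFirst();
    }

    public synchronized int size() {
        return frames.size();
    }
}
```

In the activity, `onPreviewFrame` would call `put` with the JPEG bytes and the sender thread would call `take` in its loop, which removes both the polling sleep and the risk of an ever-growing backlog.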

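A note on UDP datagram size: the theoretical maximum UDP payload over IPv4 is 65,507 bytes, but only 1,472 bytes fit in a single Ethernet frame (the 1,500-byte Ethernet MTU minus the 20-byte IP header and the 8-byte UDP header). Anything larger is fragmented at the IP layer, and losing a single fragment loses the whole datagram. Reference: "Network: an analysis of the maximum transmission length of UDP and TCP packets" (網路-UDP,TCP資料包的最大傳輸長度分析).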
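One way to stay within the 1,472-byte limit is to split each JPEG frame into several small datagrams and reassemble them on the receiver. The sketch below is only an illustration of that idea, not what the code in this post does: the 8-byte header layout (frame id, chunk index, chunk count) and the names `FrameChunker`, `split`, and `join` are all assumptions, and `join` assumes every chunk passed in belongs to the same frame.

```java
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper that splits one JPEG frame into datagram-sized chunks. */
public final class FrameChunker {
    /** Largest UDP payload that fits in one Ethernet frame: 1500 - 20 (IPv4) - 8 (UDP). */
    public static final int MAX_DATAGRAM = 1472;
    /** Assumed header layout: frameId (4 bytes), chunk index (2), chunk count (2). */
    public static final int HEADER_SIZE = 8;
    public static final int MAX_CHUNK_DATA = MAX_DATAGRAM - HEADER_SIZE;

    private FrameChunker() {}

    public static List<byte[]> split(int frameId, byte[] jpeg) {
        int count = (jpeg.length + MAX_CHUNK_DATA - 1) / MAX_CHUNK_DATA; // ceiling division
        if (count > 0xFFFF) throw new IllegalArgumentException("frame too large");
        List<byte[]> chunks = new ArrayList<>(count);
        for (int i = 0; i < count; i++) {
            int offset = i * MAX_CHUNK_DATA;
            int len = Math.min(MAX_CHUNK_DATA, jpeg.length - offset);
            ByteBuffer b = ByteBuffer.allocate(HEADER_SIZE + len); // big-endian by default
            b.putInt(frameId).putShort((short) i).putShort((short) count);
            b.put(jpeg, offset, len);
            chunks.add(b.array());
        }
        return chunks;
    }

    /** Rebuilds a frame from its chunks, in any order; returns null if any chunk is missing. */
    public static byte[] join(List<byte[]> chunks) {
        if (chunks.isEmpty()) return null;
        int count = ByteBuffer.wrap(chunks.get(0)).getShort(6) & 0xFFFF;
        byte[][] parts = new byte[count][];
        int total = 0;
        for (byte[] c : chunks) {
            int index = ByteBuffer.wrap(c).getShort(4) & 0xFFFF;
            if (index >= count || parts[index] != null) continue; // ignore bad or duplicate chunks
            parts[index] = c;
            total += c.length - HEADER_SIZE;
        }
        ByteBuffer out = ByteBuffer.allocate(total);
        for (byte[] p : parts) {
            if (p == null) return null; // a chunk was lost
            out.put(p, HEADER_SIZE, p.length - HEADER_SIZE);
        }
        return out.array();
    }
}
```

On the sending side each chunk would go into its own `DatagramPacket`. The C++ receiver would need matching reassembly logic before handing the bytes to the decoder; that part is not shown here.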

I wrote the C++ code myself.

This is All.h:

    #ifndef ALL_H
    #define ALL_H

    #include <stdio.h>
    #include <winsock2.h>
    #include <cv.h>
    #include <opencv2/core/core.hpp>
    #include <opencv2/highgui/highgui.hpp>
    #include <opencv2/imgproc/imgproc.hpp>

    using namespace cv;

    #pragma comment(lib, "ws2_32.lib")

    #define Formatted

    // These globals are defined (not just declared) in a header,
    // so All.h must only end up in a single .cpp file.
    int TCP_PORT = 6666;               // TCP port
    int UDP_PORT = 7777;               // UDP port
    const int BUF_SIZE = 1024 * 1024;  // 1 MB receive buffer

    WSADATA wsd;
    SOCKET sServer;
    SOCKET sClient;
    SOCKADDR_IN addrServ;              // server address
    char buf[BUF_SIZE];
    int retVal;

    #endif

This is TcpRun.h:

    #ifndef TCP_RUN
    #define TCP_RUN

    #include <string.h>
    #include "All.h"

    class tcprun
    {
    public:
        tcprun();
        ~tcprun();
        int init(int port);
    private:
    };

    tcprun::tcprun()
    {
    }

    // Waits for one TCP client on the given port. Returns 0 on success, -1 on failure.
    int tcprun::init(int port)
    {
        if (WSAStartup(MAKEWORD(2, 2), &wsd) != 0)
        {
            printf("Failed to initialize the socket library");
            return -1;
        }
        sServer = socket(AF_INET, SOCK_STREAM, IPPROTO_TCP);
        if (INVALID_SOCKET == sServer)
        {
            printf("Invalid socket");
            WSACleanup();  // clean up Winsock and release the socket resources
            return -1;
        }
        addrServ.sin_family = AF_INET;
        // htons converts the port from host byte order to network byte order
        // (big-endian: the most significant byte is stored at the lowest address)
        addrServ.sin_port = htons(port);
        addrServ.sin_addr.s_addr = INADDR_ANY;  // 0.0.0.0 (all local interfaces)
        retVal = bind(sServer, (LPSOCKADDR)&addrServ, sizeof(SOCKADDR_IN));  // bind the socket
        if (SOCKET_ERROR == retVal)
        {
            printf("bind failed");
            closesocket(sServer);
            WSACleanup();
            return -1;
        }
        retVal = listen(sServer, 1);
        if (SOCKET_ERROR == retVal)
        {
            printf("listen failed");
            closesocket(sServer);
            WSACleanup();
            return -1;
        }
        sockaddr_in addrClient;
        int addrClientlen = sizeof(addrClient);
        sClient = accept(sServer, (sockaddr FAR*)&addrClient, &addrClientlen);
        if (INVALID_SOCKET == sClient)
        {
            printf("accept failed");
            closesocket(sServer);
            WSACleanup();
            return -1;
        }
        char buff[1024];  // message from the client, printed for testing
        memset(buff, 0, sizeof(buff));
        recv(sClient, buff, sizeof(buff) - 1, 0);  // leave room for the terminating '\0'
        printf("%s\n", buff);
        closesocket(sServer);
        closesocket(sClient);
        WSACleanup();  // clean up Winsock and release the socket resources
        return 0;
    }

    tcprun::~tcprun()
    {
    }

    #endif

The buff in the TCP code is only there to test whether the TCP connection was established.

This is the newly wrapped UDP class:

    #ifndef UdpAndPic_H
    #define UdpAndPic_H

    #include "All.h"

    class UdpAndPic
    {
    public:
        UdpAndPic();
        ~UdpAndPic();
        void makepic(SOCKET s, int width, int height);
        int init(int port, int width, int height);
    };

    UdpAndPic::UdpAndPic()
    {
    }

    UdpAndPic::~UdpAndPic()
    {
    }

    // Returns 0 on success and -1 on failure.
    int UdpAndPic::init(int port, int width, int height)
    {
        WORD wVersionRequested;  // requested Winsock version
        WSADATA wsaData;
        int err;

        wVersionRequested = MAKEWORD(2, 2);             // Winsock 2.2
        err = WSAStartup(wVersionRequested, &wsaData);  // initialize Winsock
        if (err != 0) { return -1; }                    // initialization failed
        if (LOBYTE(wsaData.wVersion) != 2 || HIBYTE(wsaData.wVersion) != 2)
        {
            WSACleanup();
            return -1;
        }

        SOCKET SrvSock = socket(AF_INET, SOCK_DGRAM, 0);  // create the UDP socket
        if (SrvSock == INVALID_SOCKET)
        {
            WSACleanup();
            return -1;
        }

        SOCKADDR_IN SrvAddr;
        SrvAddr.sin_addr.S_un.S_addr = inet_addr("0.0.0.0");  // listen on all local interfaces
        SrvAddr.sin_family = AF_INET;                         // address family
        SrvAddr.sin_port = htons(port);                       // server port
        if (bind(SrvSock, (SOCKADDR*)&SrvAddr, sizeof(SOCKADDR)) == SOCKET_ERROR)
        {
            printf("%s\n", "bind failed");
            closesocket(SrvSock);
            WSACleanup();
            return -1;
        }

        int len = sizeof(SOCKADDR);
        memset(buf, 0, sizeof(buf));
        SOCKADDR ClistAddr;
        // Block until the first datagram from the client arrives
        err = recvfrom(SrvSock, buf, BUF_SIZE, 0, (SOCKADDR*)&ClistAddr, &len);
        if (err == SOCKET_ERROR)
        {
            printf("%s\n", "receive failed");
        }

        printf("Starting connection...\n");
        makepic(SrvSock, width, height);  // receive and display frames in a loop

        closesocket(SrvSock);  // close the socket
        WSACleanup();
        return 0;
    }

    // width and height are only needed for the optional window resize below;
    // the decoder reads the real image size from the JPEG header.
    void UdpAndPic::makepic(SOCKET SrvSock, int width, int height)
    {
        namedWindow("video", 0);  // flag 0: the window can be resized with the mouse; 1: it cannot
        while (true)
        {
            // One datagram carries one JPEG frame; n is the number of bytes received
            int n = recv(SrvSock, buf, BUF_SIZE, 0);
            if (n == SOCKET_ERROR)
            {
                printf("%s\n", "receive failed");
                break;
            }
            if (n == 0)
                continue;

            // buf holds the compressed image: wrap it as a 1 x n single-channel byte matrix
            CvMat mat = cvMat(1, n, CV_8UC1, buf);
            IplImage *pIplImage = cvDecodeImage(&mat, 1);  // 1 = decode as a 3-channel color image
            if (pIplImage != NULL)
            {
                cvShowImage("video", pIplImage);
                //cvResizeWindow("video", width, height);  // resize the window
                cvReleaseImage(&pIplImage);  // the IplImage from cvDecodeImage must be released manually
            }

            if (waitKey(100) == 27)
                break;  // ESC (ASCII 27) quits
        }
    }

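    #endif

In the makepic function, note that the IplImage object produced by cvDecodeImage must be released manually; otherwise the program leaks memory until it stalls, crashes, and the window goes white.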

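Here I receive the byte stream sent by the client, store it in the char buffer buf, and then use a CvMat to read the JPEG data from memory. OpenCV has a dedicated function for decoding JPEG, because OpenCV itself depends on libjpeg and uses it to decompress JPEG files.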
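Because a datagram can be lost or, as with the sender's 65,500-byte cap, truncated, it can help to check that the received bytes look like a whole JPEG before decoding them. The sketch below shows the check in Java to match the Android code; the same byte comparisons carry over directly to the C++ receiver. `JpegCheck` is a hypothetical name, and passing the check does not guarantee that the image decodes.

```java
/** Hypothetical sanity check for a received JPEG buffer. */
public final class JpegCheck {
    private JpegCheck() {}

    /**
     * Returns true if the first len bytes of buf start with the JPEG
     * start-of-image marker (FF D8) and end with the end-of-image marker (FF D9).
     */
    public static boolean looksComplete(byte[] buf, int len) {
        if (buf == null || len < 4 || len > buf.length) return false;
        boolean startsWithSoi = (buf[0] & 0xFF) == 0xFF && (buf[1] & 0xFF) == 0xD8;
        boolean endsWithEoi = (buf[len - 2] & 0xFF) == 0xFF && (buf[len - 1] & 0xFF) == 0xD9;
        return startsWithSoi && endsWithEoi;
    }
}
```

A receiver could simply skip any frame for which the check fails and wait for the next datagram, since every frame is an independent JPEG.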

Finally, the .cpp file:

    #ifndef TEST_RUN
    #define TEST_RUN

    #include <stdio.h>
    #include <stdlib.h>
    #include "TcpRun.h"
    #include "All.h"
    #include "UdpAndPic.h"

    int main()
    {
        tcprun t;
        UdpAndPic u;

        if (t.init(6666) != -1)  // 6666 is the TCP port
        {
            printf("%s\n", "TCP OK, starting UDP...");
            // 7777 is the UDP port; the last two arguments are the width and height passed on to makepic
            if (u.init(7777, 640, 480) == -1)
            {
                printf("%s\n", "UDP error");
            }
        }
        else
        {
            printf("%s\n", "TCP error");
        }

        system("PAUSE");
        return 0;
    }

    #endif