Kubernetes in Practice (5): Installing Redis Sentinel on k8s with Persistent Storage

1. Creating the PVs

Create the PVs on NFS or another type of backend storage. First, create the shared directories:

    $ cat /etc/exports
    /k8s/redis-sentinel/0 *(rw,sync,no_subtree_check,no_root_squash)
    /k8s/redis-sentinel/1 *(rw,sync,no_subtree_check,no_root_squash)
    /k8s/redis-sentinel/2 *(rw,sync,no_subtree_check,no_root_squash)
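The three export entries differ only in the trailing index, so they can be generated instead of typed by hand. A minimal sketch, assuming the same base directory and export options as the listing above; `gen_exports` is a helper name invented here for illustration:

```shell
#!/bin/sh
# Print one /etc/exports entry per Redis data directory.
# $1: base directory, $2: number of directories (indexes 0..N-1).
gen_exports() {
    base_dir=$1
    count=$2
    i=0
    while [ "$i" -lt "$count" ]; do
        printf '%s/%s *(rw,sync,no_subtree_check,no_root_squash)\n' "$base_dir" "$i"
        i=$((i + 1))
    done
}

# Preview the entries without touching any system file.
gen_exports /k8s/redis-sentinel 3

# On the NFS server (as root) one might then run, for example:
#   mkdir -p /k8s/redis-sentinel/0 /k8s/redis-sentinel/1 /k8s/redis-sentinel/2
#   gen_exports /k8s/redis-sentinel 3 >> /etc/exports && exportfs -ra
```

Keeping the write to /etc/exports as a commented step means the sketch can be run safely on any machine to check the generated lines first.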

Download the YAML files:

https://github.com/dotbalo/k8s/tree/master/redis/redis-sentinel

Create the PVs. Adjust the storage size for Redis as needed:

    $ kubectl create -f redis-sentinel-pv.yaml
    $ kubectl get pv | grep redis
    pv-redis-sentinel-0   4Gi   RWX   Recycle   Bound   public-service/redis-sentinel-master-storage-redis-sentinel-master-ss-0   redis-sentinel-storage-class   16h
    pv-redis-sentinel-1   4Gi   RWX   Recycle   Bound   public-service/redis-sentinel-slave-storage-redis-sentinel-slave-ss-0     redis-sentinel-storage-class   16h
    pv-redis-sentinel-2   4Gi   RWX   Recycle   Bound   public-service/redis-sentinel-slave-storage-redis-sentinel-slave-ss-1     redis-sentinel-storage-class   16h
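For readers who want to see roughly what such a PV looks like, here is a sketch of one manifest reconstructed from the `kubectl get pv` columns above (name, 4Gi, RWX, Recycle, storage class). It is not the content of redis-sentinel-pv.yaml from the repository, which may differ; `NFS_SERVER` is a placeholder and the file is written to a scratch path only.

```shell
#!/bin/sh
# Write a hypothetical PV manifest for index 0 to a scratch file.
# NFS_SERVER is a placeholder; replace it with the real NFS server address.
NFS_SERVER="nfs.example.internal"
PV_FILE="${TMPDIR:-/tmp}/pv-redis-sentinel-0-sketch.yaml"

cat > "$PV_FILE" <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-redis-sentinel-0
spec:
  capacity:
    storage: 4Gi                      # adjust to the Redis data size
  accessModes:
    - ReadWriteMany                   # shown as RWX by kubectl get pv
  persistentVolumeReclaimPolicy: Recycle
  storageClassName: redis-sentinel-storage-class
  nfs:
    server: ${NFS_SERVER}
    path: /k8s/redis-sentinel/0       # one exported directory per PV
EOF

cat "$PV_FILE"
```

The other two PVs would follow the same pattern with the index changed in both the name and the NFS path.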

2. Creating the namespace

By default, the Redis Sentinel setup is created in the public-service namespace.

    $ kubectl create namespace public-service
    # If you do not use public-service, replace public-service with your own namespace in all YAML files:
    # sed -i "s#public-service#YOUR_NAMESPACE#g" *.yaml

3. Creating the ConfigMap

Adjust the Redis configuration as needed. By default, RDB persistence is used.

    $ kubectl create -f redis-sentinel-configmap.yaml
    $ kubectl get configmap -n public-service
    NAME                    DATA   AGE
    redis-sentinel-config   2      17h

Note: at this point, the master address in the slaveof directive of redis-slave.conf in the ConfigMap is the Headless Service address of the master StatefulSet (ss).
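To make the note concrete, the sketch below writes a few lines of the kind that redis-slave.conf could contain: a slaveof directive pointing at the master's Headless Service name, plus RDB settings. This is an illustration, not the ConfigMap from the repository; the `save` threshold is an assumed value, and `dump.rdb` is simply the Redis default file name.

```shell
#!/bin/sh
# Write a hypothetical redis-slave.conf fragment to a scratch file.
MASTER_HOST="redis-sentinel-master-ss-0.redis-sentinel-master-ss.public-service.svc.cluster.local"
CONF_FILE="${TMPDIR:-/tmp}/redis-slave-sketch.conf"

cat > "$CONF_FILE" <<EOF
# Replicate from the master through its Headless Service DNS name.
slaveof ${MASTER_HOST} 6379
# RDB persistence: snapshot if at least 1 key changed within 900 seconds (assumed value).
save 900 1
dbfilename dump.rdb
# AOF stays off when only RDB snapshots are wanted.
appendonly no
EOF

cat "$CONF_FILE"
```

Redis resolves the host name when it connects, which is why the resolved IP rather than the name shows up later in the replication output.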

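4. Creating the Services

The Services mainly let the pods reach each other. A StatefulSet communicates through its Headless Service, and each pod gets an address of the form: statefulSetName-{0..N-1}.serviceName.namespace.svc.cluster.local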

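- serviceName is the name of the Headless Service

- 0..N-1 is the ordinal of the pod, from 0 to N-1

- statefulSetName is the name of the StatefulSet

- namespace is the namespace the service runs in; the Headless Service and the StatefulSet must be in the same namespace

- .cluster.local is the Cluster Domain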

For example, the Headless Service addresses in this cluster are:

Master:

redis-sentinel-master-ss-0.redis-sentinel-master-ss.public-service.svc.cluster.local:6379

Slave:

redis-sentinel-slave-ss-0.redis-sentinel-slave-ss.public-service.svc.cluster.local:6379

redis-sentinel-slave-ss-1.redis-sentinel-slave-ss.public-service.svc.cluster.local:6379
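The naming rule is mechanical enough to express as a small helper. A minimal sketch that rebuilds the addresses listed above (without the port); `pod_fqdn` and `list_pod_fqdns` are names made up for this example, and the cluster domain is assumed to be the default cluster.local:

```shell
#!/bin/sh
# Build the DNS name of one StatefulSet pod behind a Headless Service.
# $1: StatefulSet name, $2: pod ordinal, $3: Headless Service name, $4: namespace
pod_fqdn() {
    printf '%s-%s.%s.%s.svc.cluster.local\n' "$1" "$2" "$3" "$4"
}

# Print the DNS names of all pods, ordinals 0..N-1.
# $1: StatefulSet name, $2: replica count, $3: Headless Service name, $4: namespace
list_pod_fqdns() {
    i=0
    while [ "$i" -lt "$2" ]; do
        pod_fqdn "$1" "$i" "$3" "$4"
        i=$((i + 1))
    done
}

list_pod_fqdns redis-sentinel-master-ss 1 redis-sentinel-master-ss public-service
list_pod_fqdns redis-sentinel-slave-ss 2 redis-sentinel-slave-ss public-service
```

In this setup the StatefulSet and its Headless Service share the same name, which is why each name appears twice in the address.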

Create the Services:

    $ kubectl create -f redis-sentinel-service-master.yaml -f redis-sentinel-service-slave.yaml
    $ kubectl get service -n public-service
    NAME                       TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)    AGE
    redis-sentinel-master-ss   ClusterIP   None         <none>        6379/TCP   16h
    redis-sentinel-slave-ss    ClusterIP   None         <none>        6379/TCP   <invalid>
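The CLUSTER-IP column showing None is what marks these Services as headless. As an illustration, a manifest along the following lines would produce the master entry in that listing. It is a sketch rather than the repository's redis-sentinel-service-master.yaml; in particular the selector label is hypothetical and would have to match the labels in the StatefulSet's pod template.

```shell
#!/bin/sh
# Write a hypothetical Headless Service manifest to a scratch file.
SVC_FILE="${TMPDIR:-/tmp}/redis-sentinel-service-master-sketch.yaml"

cat > "$SVC_FILE" <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: redis-sentinel-master-ss
  namespace: public-service
spec:
  clusterIP: None                     # "None" makes the Service headless
  ports:
    - name: redis
      port: 6379
  selector:
    app: redis-sentinel-master-ss     # hypothetical label, must match the pod template
EOF

cat "$SVC_FILE"
```

With no cluster IP, DNS returns the pod addresses directly, which is what gives each StatefulSet pod its own stable name.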

5. Creating the StatefulSets

    $ kubectl create -f redis-sentinel-rbac.yaml -f redis-sentinel-ss-master.yaml -f redis-sentinel-ss-slave.yaml

    $ kubectl get statefulset -n public-service
    NAME                       DESIRED   CURRENT   AGE
    redis-sentinel-master-ss   1         1         16h
    redis-sentinel-slave-ss    2         2         16h
    rmq-cluster                3         3         3d
    $ kubectl get pods -n public-service
    NAME                         READY   STATUS    RESTARTS   AGE
    redis-sentinel-master-ss-0   1/1     Running   0          16h
    redis-sentinel-slave-ss-0    1/1     Running   0          16h
    redis-sentinel-slave-ss-1    1/1     Running   0          16h

At this point, a Redis master-slave setup is effectively running on k8s.

6. Checking in the dashboard

Status view

Pod connectivity test

Connecting from the master to the slaves:

    $ kubectl exec -ti redis-sentinel-master-ss-0 -n public-service -- redis-cli -h redis-sentinel-slave-ss-0.redis-sentinel-slave-ss.public-service.svc.cluster.local ping
    PONG
    $ kubectl exec -ti redis-sentinel-master-ss-0 -n public-service -- redis-cli -h redis-sentinel-slave-ss-1.redis-sentinel-slave-ss.public-service.svc.cluster.local ping
    PONG

Connecting from the slaves to the master:

    $ kubectl exec -ti redis-sentinel-slave-ss-0 -n public-service -- redis-cli -h redis-sentinel-master-ss-0.redis-sentinel-master-ss.public-service.svc.cluster.local ping
    PONG
    $ kubectl exec -ti redis-sentinel-slave-ss-1 -n public-service -- redis-cli -h redis-sentinel-master-ss-0.redis-sentinel-master-ss.public-service.svc.cluster.local ping
    PONG

Checking the replication status:

    $ kubectl exec -ti redis-sentinel-slave-ss-1 -n public-service -- redis-cli -h redis-sentinel-master-ss-0.redis-sentinel-master-ss.public-service.svc.cluster.local info replication
    # Replication
    role:master
    connected_slaves:2
    slave0:ip=172.168.5.94,port=6379,state=online,offset=80410,lag=1
    slave1:ip=172.168.6.113,port=6379,state=online,offset=80410,lag=0
    master_replid:ad4341815b25f12d4aeb390a19a8bd8452875879
    master_replid2:0000000000000000000000000000000000000000
    master_repl_offset:80410
    second_repl_offset:-1
    repl_backlog_active:1
    repl_backlog_size:1048576
    repl_backlog_first_byte_offset:1
    repl_backlog_histlen:80410
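When this check has to be repeated, reading the output by eye gets tedious. The following sketch pulls the two interesting numbers out of `info replication` text with awk: the connected_slaves count and the number of replicas whose state is online. The sample is copied from the output above (trimmed); the function names are invented for this example.

```shell
#!/bin/sh
# Sample taken from the info replication output above (trimmed).
sample='# Replication
role:master
connected_slaves:2
slave0:ip=172.168.5.94,port=6379,state=online,offset=80410,lag=1
slave1:ip=172.168.6.113,port=6379,state=online,offset=80410,lag=0
master_repl_offset:80410'

# Read the connected_slaves field reported by the master (stdin: info output).
connected_slaves() {
    awk -F: '$1 == "connected_slaves" { sub(/\r$/, "", $2); print $2 }'
}

# Count slaveN entries whose state is "online" (stdin: info output).
online_slaves() {
    awk -F'[:,]' '
        /^slave[0-9]+:/ {
            for (i = 2; i <= NF; i++) if ($i == "state=online") n++
        }
        END { print n + 0 }'
}

printf '%s\n' "$sample" | connected_slaves
printf '%s\n' "$sample" | online_slaves
```

Against a live cluster, the same functions could be fed from `kubectl exec redis-sentinel-master-ss-0 -n public-service -- redis-cli info replication`, leaving out `-ti` so that no terminal is allocated for the pipe.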

Replication test:

    # Write data to the master
    $ kubectl exec -ti redis-sentinel-slave-ss-1 -n public-service -- redis-cli -h redis-sentinel-master-ss-0.redis-sentinel-master-ss.public-service.svc.cluster.local set test test_data
    OK
    # Read the data back from the master
    $ kubectl exec -ti redis-sentinel-slave-ss-1 -n public-service -- redis-cli -h redis-sentinel-master-ss-0.redis-sentinel-master-ss.public-service.svc.cluster.local get test
    "test_data"
    # Read the data from the slave
    $ kubectl exec -ti redis-sentinel-slave-ss-1 -n public-service -- redis-cli get test
    "test_data"

The slave nodes do not accept writes:

    $ kubectl exec -ti redis-sentinel-slave-ss-1 -n public-service -- redis-cli set k v
    (error) READONLY You can't write against a read only replica.

Checking the stored data on the NFS server:

    $ tree .
    .
    ├── 0
    │   └── dump.rdb
    ├── 1
    │   └── dump.rdb
    └── 2
        └── dump.rdb

    3 directories, 3 files

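Note: in my opinion, setting up Redis Sentinel on k8s is pointless. In my tests, when the master went down, Sentinel promoted another node to master, but after the original master came back it could not rejoin the cluster.

The reason is that on physical machines Sentinel probes nodes and rewrites configuration files by IP, and replication is also set up by IP. In containers, the master-slave relationship is created through the StatefulSet's Headless Service, but once it is established the master, the slaves, and the sentinels all record the resolved IPs. A pod's IP changes on every restart, so Sentinel cannot recognize the master that went down and came back, and that node never rejoins the cluster. Pinning the pod IP or using NodePort would work around this, but it still would not be worth it.

Sentinel exists to make a Redis master-slave setup highly available: it monitors the state of the Redis master and performs the failover. In k8s, however, a Deployment (dc) or a StatefulSet (ss) already keeps the pods running at the desired count, and the built-in liveness checks restart the pod, or the service inside it, when the port or the service becomes unavailable. So once master-slave replication is running in k8s it is effectively already highly available, and a Sentinel failover is not necessarily faster than k8s rebuilding the pod. For these reasons I see no point in running Sentinel on k8s, and the Sentinel steps below can be skipped.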
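The liveness checks mentioned here are configured per container. As a rough idea of what that could look like for a Redis container, the sketch below writes a probe fragment to a scratch file. The probe commands and all timing values are assumptions chosen for illustration, not settings taken from the repository's StatefulSet manifests.

```shell
#!/bin/sh
# Write a hypothetical probe fragment for a Redis container to a scratch file.
PROBE_FILE="${TMPDIR:-/tmp}/redis-probes-sketch.yaml"

cat > "$PROBE_FILE" <<'EOF'
# Fragment of a container spec inside a StatefulSet pod template.
livenessProbe:
  exec:
    command: ["redis-cli", "-p", "6379", "ping"]   # fails if Redis cannot be reached
  initialDelaySeconds: 10     # assumed values, tune for the workload
  periodSeconds: 5
  failureThreshold: 3         # restart the container after 3 consecutive failures
readinessProbe:
  tcpSocket:
    port: 6379                # only mark the pod ready once the port accepts connections
  periodSeconds: 5
EOF

cat "$PROBE_FILE"
```

With a probe like this, the kubelet restarts the Redis container after the configured number of failed checks, which is the behaviour the argument above relies on.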

7. Creating Sentinel

    $ kubectl create -f redis-sentinel-ss-sentinel.yaml -f redis-sentinel-service-sentinel.yaml
    $ kubectl get service -n public-service
    NAME                         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                          AGE
    redis-sentinel-master-ss     ClusterIP   None            <none>        6379/TCP                         17h
    redis-sentinel-sentinel-ss   ClusterIP   None            <none>        26379/TCP                        36m
    redis-sentinel-slave-ss      ClusterIP   None            <none>        6379/TCP                         1h
    rmq-cluster                  ClusterIP   None            <none>        5672/TCP                         3d
    rmq-cluster-balancer         NodePort    10.107.221.85   <none>        15672:30051/TCP,5672:31892/TCP   3d
    $ kubectl get statefulset -n public-service
    NAME                         DESIRED   CURRENT   AGE
    redis-sentinel-master-ss     1         1         17h
    redis-sentinel-sentinel-ss   3         3         8m
    redis-sentinel-slave-ss      2         2         17h
    rmq-cluster                  3         3         3d
    $ kubectl get pods -n public-service | grep sentinel
    redis-sentinel-master-ss-0     1/1   Running   0   17h
    redis-sentinel-sentinel-ss-0   1/1   Running   0   2m
    redis-sentinel-sentinel-ss-1   1/1   Running   0   2m
    redis-sentinel-sentinel-ss-2   1/1   Running   0   2m
    redis-sentinel-slave-ss-0      1/1   Running   0   17h
    redis-sentinel-slave-ss-1      1/1   Running   0   17h

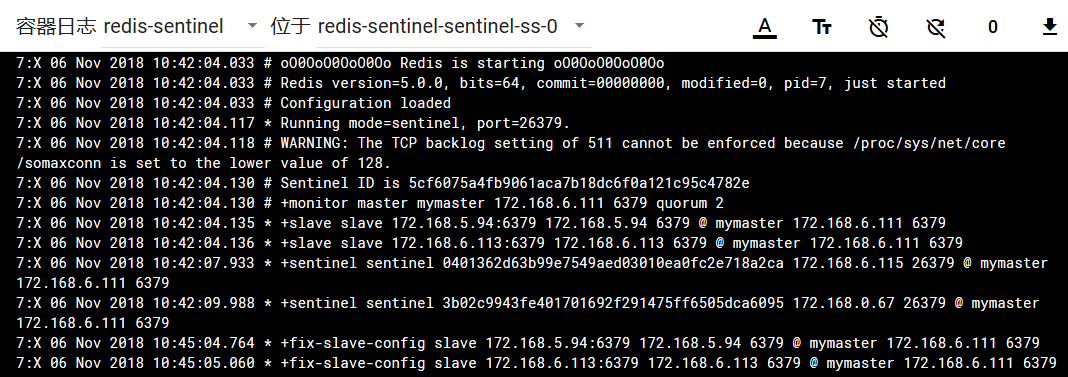

8. Checking the logs

Check the Sentinel status:

    $ kubectl exec -ti redis-sentinel-sentinel-ss-0 -n public-service -- redis-cli -h 127.0.0.1 -p 26379 info Sentinel
    # Sentinel
    sentinel_masters:1
    sentinel_tilt:0
    sentinel_running_scripts:0
    sentinel_scripts_queue_length:0
    sentinel_simulate_failure_flags:0
    master0:name=mymaster,status=ok,address=172.168.6.111:6379,slaves=2,sentinels=3

9. Failover test

    # Check the current data
    $ kubectl exec -ti redis-sentinel-master-ss-0 -n public-service -- redis-cli -h 127.0.0.1 -p 6379 get test
    "test_data"

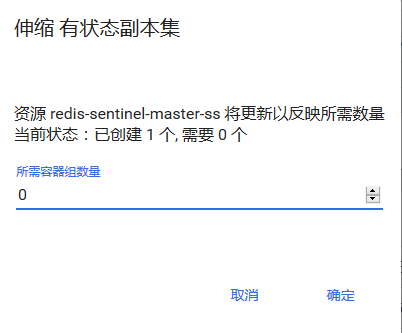

Shut down the master node

Check the pod status:

    $ kubectl get pods -n public-service
    NAME                           READY   STATUS    RESTARTS   AGE
    redis-sentinel-sentinel-ss-0   1/1     Running   0          22m
    redis-sentinel-sentinel-ss-1   1/1     Running   0          22m
    redis-sentinel-sentinel-ss-2   1/1     Running   0          22m
    redis-sentinel-slave-ss-0      1/1     Running   0          17h
    redis-sentinel-slave-ss-1      1/1     Running   0          17h

Check the Sentinel status:

    $ kubectl exec -ti redis-sentinel-sentinel-ss-2 -n public-service -- redis-cli -h 127.0.0.1 -p 26379 info Sentinel
    # Sentinel
    sentinel_masters:1
    sentinel_tilt:0
    sentinel_running_scripts:0
    sentinel_scripts_queue_length:0
    sentinel_simulate_failure_flags:0
    master0:name=mymaster,status=ok,address=172.168.6.116:6379,slaves=2,sentinels=3
    $ kubectl exec -ti redis-sentinel-slave-ss-0 -n public-service -- redis-cli -h 127.0.0.1 -p 6379 info replication
    # Replication
    role:slave
    master_host:172.168.6.116
    master_port:6379
    master_link_status:up
    master_last_io_seconds_ago:0
    master_sync_in_progress:0
    slave_repl_offset:82961
    slave_priority:100
    slave_read_only:1
    connected_slaves:0
    master_replid:4097ccd725a7ffc6f3767f7c726fc883baf3d7ef
    master_replid2:603280e5266e0a6b0f299d2b33384c1fd8c3ee64
    master_repl_offset:82961
    second_repl_offset:68647
    repl_backlog_active:1
    repl_backlog_size:1048576
    repl_backlog_first_byte_offset:1
    repl_backlog_histlen:82961
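The failover is visible in the master0 line: the address moved from 172.168.6.111 before the shutdown to 172.168.6.116 afterwards. A small sketch that extracts the address from `info Sentinel` text and compares the two states; the two sample lines are copied from the outputs in this article, and `sentinel_master_addr` is a helper name invented for the example.

```shell
#!/bin/sh
# Print the address Sentinel currently records for a monitored master.
# $1: master name (mymaster in this article); stdin: "info Sentinel" output.
sentinel_master_addr() {
    awk -v name="$1" -F, '
        /^master[0-9]+:/ {
            sub(/^master[0-9]+:/, "")          # drop the "masterN:" prefix, fields are re-split
            found = 0; addr = ""
            for (i = 1; i <= NF; i++) {
                if ($i == "name=" name) found = 1
                if ($i ~ /^address=/) addr = substr($i, 9)
            }
            if (found) print addr
        }'
}

before='master0:name=mymaster,status=ok,address=172.168.6.111:6379,slaves=2,sentinels=3'
after='master0:name=mymaster,status=ok,address=172.168.6.116:6379,slaves=2,sentinels=3'

old=$(printf '%s\n' "$before" | sentinel_master_addr mymaster)
new=$(printf '%s\n' "$after" | sentinel_master_addr mymaster)

if [ "$old" != "$new" ]; then
    echo "failover detected: $old -> $new"
fi
```

For a one-off check, Sentinel can also be asked directly with `redis-cli -p 26379 sentinel get-master-addr-by-name mymaster`, which returns the current master IP and port.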