Brother Cong Teaches You Python: Scraping Jin Yong's Novels

阿新 • Published: 2018-11-07

Without further ado, here is the code:

    # -*- coding: utf-8 -*-
    import urllib.request
    from bs4 import BeautifulSoup


    def get_chapter(url):
        """Fetch one chapter page and return (title, content)."""
        # Download the page source; the with-block closes the connection
        with urllib.request.urlopen(url) as html:
            content = html.read().decode('utf8')
        # Parse the page source as HTML
        soup = BeautifulSoup(content, "lxml")
        title = soup.find('h1').text  # chapter title
        text = soup.find('div', id='htmlContent')  # chapter body
        # Tidy the chapter text: one stripped text fragment per line,
        # with a leading space on each line
        content = text.get_text('\n', strip=True).replace('\n', '\n ')
        content = content.replace(' ', '\n ')
        return title, ' ' + content


    def main():
        # Book list
        books = ['射鵰英雄傳', '天龍八部', '鹿鼎記', '神鵰俠侶', '笑傲江湖', '碧血劍', '倚天屠龍記',
                 '飛狐外傳', '書劍恩仇錄', '連城訣', '俠客行', '越女劍', '鴛鴦刀', '白馬嘯西風',
                 '雪山飛狐']
        # Directory number of each book on the site, in the same order as books
        order = [1, 2, 3, 4, 5, 6, 7, 8, 10, 11, 12, 14, 15, 13, 9]
        # Page-number boundaries: book i covers page_range[i] up to page_range[i+1] - 1
        page_range = [1, 43, 94, 145, 185, 225, 248, 289, 309, 329, 341, 362, 363, 364, 375, 385]
        for i, book in enumerate(books):
            for num in range(page_range[i], page_range[i + 1]):
                url = "http://jinyong.zuopinj.com/%s/%s.html" % (order[i], num)
                # Error handling: report the failure and move on to the next chapter
                try:
                    title, chapter = get_chapter(url)
                    # Append the chapter to the book's file (the D://book folder must already exist)
                    with open('D://book/%s.txt' % book, 'a', encoding='gb18030') as f:
                        f.write(title + '\n\n\n')
                        f.write(chapter + '\n\n\n')
                    print(book + ':' + title + ' --> written successfully!')
                except Exception as e:
                    print(e)
        print('All books written!')


    if __name__ == '__main__':
        main()
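The script pairs each book with a directory number on the site (`order`) and a half-open range of page numbers (`page_range[i]` up to, but not including, `page_range[i+1]`). As a minimal sketch of that mapping, the helper `chapter_urls` below builds the chapter URLs without making any network requests, which may be handy for checking the ranges before scraping. The helper name and the length check are assumptions added for illustration and are not part of the original script. Only the first three books are shown.

```python
# URL pattern taken from the script above
BASE_URL = "http://jinyong.zuopinj.com/%s/%s.html"


def chapter_urls(books, order, page_range):
    """Hypothetical helper: map each book title to its list of chapter URLs.

    Book i lives in directory order[i] and covers page numbers
    page_range[i] .. page_range[i+1] - 1.
    """
    # page_range needs one more entry than books, because each book
    # uses two consecutive boundaries
    if len(order) != len(books) or len(page_range) != len(books) + 1:
        raise ValueError("books, order and page_range lengths do not match")
    urls = {}
    for i, book in enumerate(books):
        urls[book] = [BASE_URL % (order[i], num)
                      for num in range(page_range[i], page_range[i + 1])]
    return urls


if __name__ == "__main__":
    sample = chapter_urls(['射鵰英雄傳', '天龍八部', '鹿鼎記'],
                          [1, 2, 3],
                          [1, 43, 94, 145])
    for book, links in sample.items():
        # Print the chapter count plus the first and last URL of each book
        print(book, len(links), links[0], links[-1])
```

Building the list first also makes it easy to spot an off-by-one in `page_range`, since each book's chapter count is simply the difference between two neighbouring boundaries.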

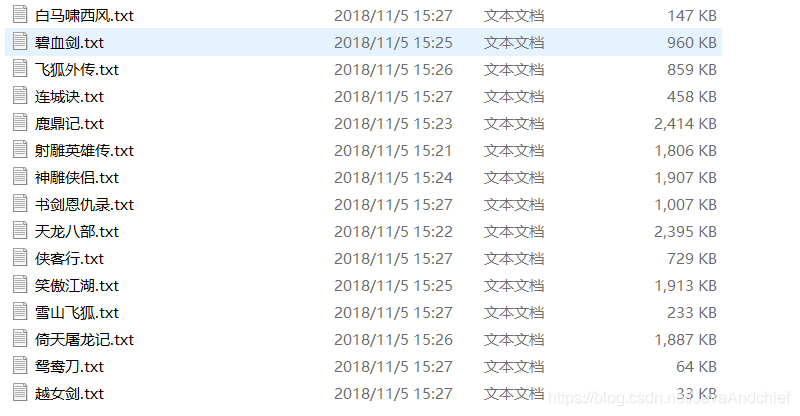

The final result looks like this:

Each book is written to its own txt file. Reading a raw txt file is admittedly not a great experience, so here is a tip from Brother Cong:

The following website converts txt files to pdf:

http://www.pdfdo.com/txt-to-pdf.aspx

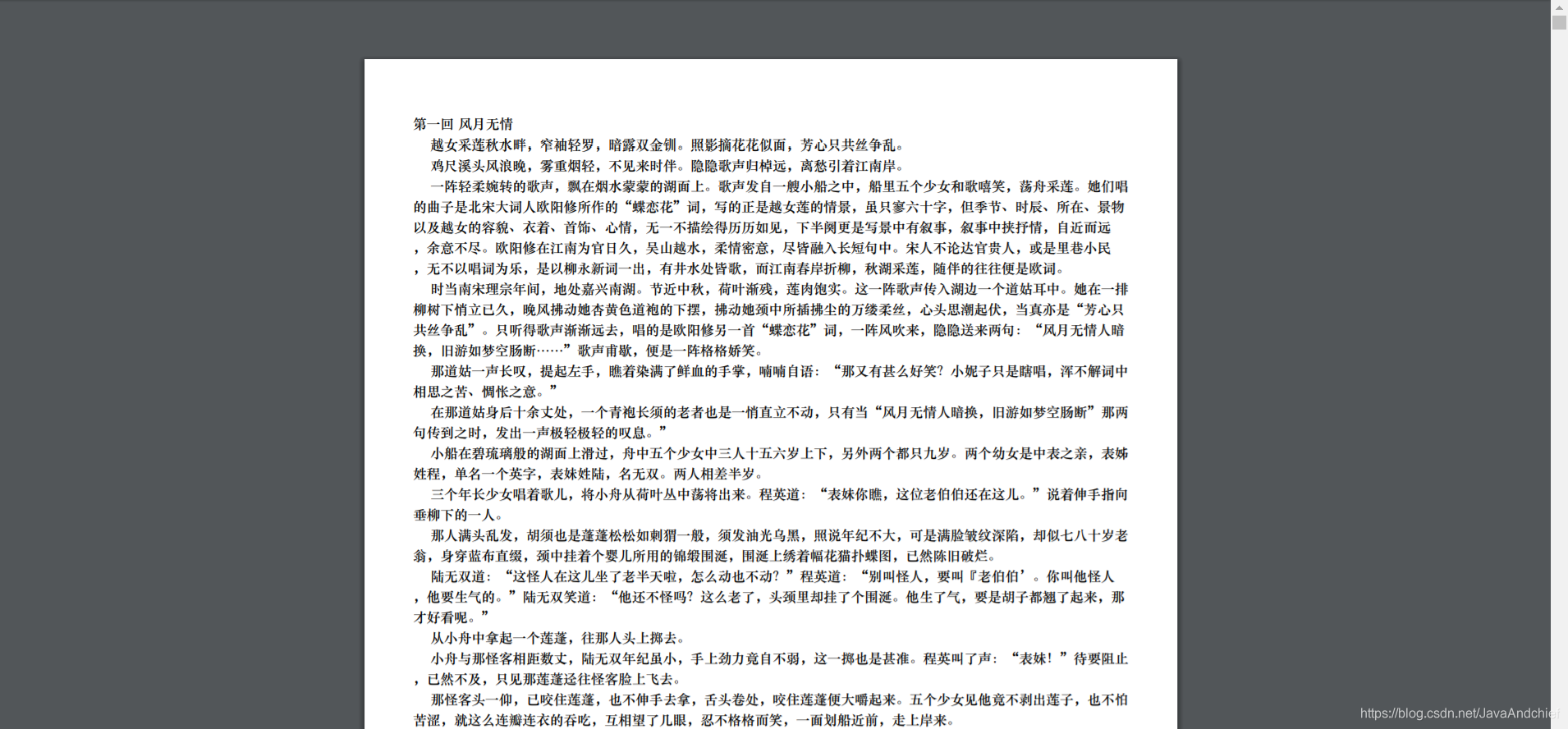

So the end result is:

After converting to pdf, the reading experience is much better. I hope this article is helpful to everyone.