Mobile UNet portrait segmentation model--2

阿新 • Published: 2018-11-09

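As suspected, the error mentioned in the previous post was a bug in the mxnet network: I had mistyped pool5 as pool4. After fixing that, ncnn no longer crashes. Still, ncnn's mxnet2ncnn tool really ought to include more diagnostics to make sure the converted model's parameters are consistent.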
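The post doesn't say what such a diagnostic would look like. As one possible sketch, assuming the pool5/pool4 typo left two operator nodes with the same name in the exported graph, a few lines of Python can scan the `*-symbol.json` file written alongside an mxnet checkpoint and flag repeated layer names before conversion. The function name `find_duplicate_node_names` is hypothetical and this is not part of mxnet2ncnn; a typo that only wired the wrong input into a layer would not be caught by this check.

```python
import json
from collections import Counter
from typing import List


def find_duplicate_node_names(symbol_json: str) -> List[str]:
    """Return operator names that occur more than once in an MXNet
    symbol JSON document (the *-symbol.json file of a checkpoint)."""
    graph = json.loads(symbol_json)
    # Variable nodes (data, weights, biases) have op == "null"; skip them
    # and only look at real layers such as Pooling or Convolution.
    names = [node["name"] for node in graph["nodes"] if node["op"] != "null"]
    counts = Counter(names)
    return sorted(name for name, n in counts.items() if n > 1)
```

Reading `unet_person_segmentation-symbol.json` and passing its contents to this function before running mxnet2ncnn gives a cheap early warning; an empty list means no layer name is reused.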

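Things were not all smooth sailing, though: after conversion to ncnn, the predictions were wrong no matter what I tried. With no other option, I checked the network layer by layer, writing a few simple tools to print the outputs of intermediate hidden layers.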

check.py

```python
import os
os.environ["MXNET_BACKWARD_DO_MIRROR"] = "1"
os.environ["MXNET_CUDNN_AUTOTUNE_DEFAULT"] = "0"
import sys

import cv2
import mxnet as mx
import numpy as np

np.set_printoptions(threshold=sys.maxsize)  # print whole arrays without "..." truncation


def post_process_mask(label, img_width, img_height, n_classes):
    # (C, H, W) -> (H, W, C), then per-pixel class index scaled to 0/255
    pr = label.reshape(n_classes, img_height, img_width).transpose([1, 2, 0]).argmax(axis=2)
    return (pr * 255).asnumpy().astype(np.uint8)


def ncnn_output(label):
    # (C, H, W) -> (H, W, C) so the channel values of each pixel print together
    return label.transpose([1, 2, 0]).asnumpy()


def load_image(img, width, height):
    # Letterbox: resize keeping the aspect ratio, then center on a black canvas
    im = np.zeros((height, width, 3), dtype='uint8')
    if img.shape[0] >= img.shape[1]:
        scale = img.shape[0] / height
        new_width = int(img.shape[1] / scale)
        diff = (width - new_width) // 2
        img = cv2.resize(img, (new_width, height))
        im[:, diff:diff + new_width, :] = img
    else:
        scale = img.shape[1] / width
        new_height = int(img.shape[0] / scale)
        diff = (height - new_height) // 2
        img = cv2.resize(img, (width, new_height))
        im[diff:diff + new_height, :, :] = img
    im = np.float32(im) / 255.0
    # HWC -> CHW plus a leading batch axis: shape (1, 3, height, width)
    return im.transpose((2, 0, 1))[np.newaxis]


def main():
    batch_size = 1  # a single test image per forward pass
    n_classes = 2
    img_width = 256
    img_height = 256
    ctx = [mx.gpu(0)]

    sym, arg_params, aux_params = mx.model.load_checkpoint('unet_person_segmentation', 0)
    all_layers = sym.get_internals()
    print(all_layers.list_outputs())  # names of every intermediate output
    # Cut the graph at the layer to inspect, e.g. 'conv11_1_output' or 'pool5_output'
    unet = mx.mod.Module(symbol=all_layers['conv11_1_output'], context=ctx, label_names=None)
    #unet = mx.mod.Module(symbol=all_layers['pool5_output'], context=ctx, label_names=None)
    unet.bind(for_training=False,
              data_shapes=[('data', (batch_size, 3, img_height, img_width))])
    unet.set_params(arg_params, aux_params)

    testimg = cv2.imread(sys.argv[1], 1)
    if testimg is None:
        print("cannot read " + sys.argv[1])
        sys.exit(1)
    img = load_image(testimg, img_width, img_height)
    unet.predict(mx.io.NDArrayIter(data=img, batch_size=batch_size))
    outputs = unet.get_outputs()[0]  # shape (batch, C, H, W)
    print(outputs[0].shape)
    print(ncnn_output(outputs[0]))

    # Inspect the weights of one layer:
    #keys = unet.get_params()[0].keys()  # list all weight names
    #print(keys)
    #conv_w = unet.get_params()[0]['trans_conv6_weight']  # pick the weight to inspect
    #print(conv_w.shape)
    #print(conv_w.asnumpy())  # print the actual values

    #cv2.imshow('test', testimg)
    #cv2.imshow('mask', post_process_mask(outputs[0], img_width, img_height, n_classes))
    #cv2.waitKey()


if __name__ == '__main__':
    if len(sys.argv) < 2:
        print("usage: python check.py <image>")
        sys.exit(1)
    main()
```

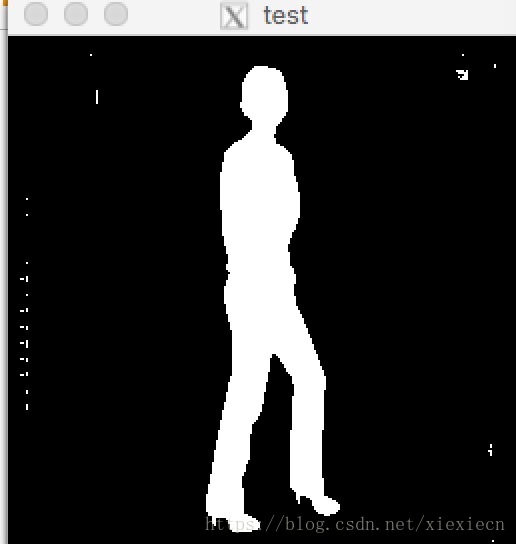
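Comparing printed dumps by eye gets tedious quickly. A minimal sketch of automating the layer-by-layer search, assuming each framework's output for a layer has been flattened into a Python list in the same element order (for example, from the values printed by check.py and by `ncnn::Extractor::extract`): walk the layers in network order and report the first one whose maximum absolute difference exceeds a tolerance. The function names and the default `atol=1e-3` are illustrative assumptions, not values from the post.

```python
from typing import Dict, List, Optional, Sequence, Tuple


def max_abs_diff(a: Sequence[float], b: Sequence[float]) -> float:
    """Largest element-wise absolute difference between two flat outputs.
    A size mismatch is itself a divergence, so it is reported as infinity."""
    if len(a) != len(b):
        return float("inf")
    return max((abs(x - y) for x, y in zip(a, b)), default=0.0)


def first_divergent_layer(
    layer_order: List[str],
    reference: Dict[str, Sequence[float]],
    candidate: Dict[str, Sequence[float]],
    atol: float = 1e-3,
) -> Optional[Tuple[str, float]]:
    """Return (layer name, max abs diff) for the first layer, in network
    order, whose outputs differ by more than atol; None if all agree."""
    for name in layer_order:
        diff = max_abs_diff(reference[name], candidate[name])
        if diff > atol:
            return name, diff
    return None
```

Stopping at the first divergent layer matters because every later layer inherits the error, so only the first mismatch points at the real culprit.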

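Building on this, I found that the problem started at the first deconvolution (the output of trans_conv6 in the mxnet network), where the results were completely different. My reasoning was that the deconvolution algorithm itself was unlikely to be at fault, so I printed this layer's weights in mxnet (the commented-out code above), then printed the corresponding parameters inside the mxnet2ncnn code. In the end, num_group turned out to be the culprit. The quick fix was to hard-code the deconvolution's num_group to 1 in mxnet2ncnn.cpp, which finally solved the problem and produced the correct output.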
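The post doesn't say why mxnet2ncnn read num_group incorrectly. One cheap cross-check a converter could perform, sketched here as an assumption rather than anything mxnet2ncnn actually does: a grouped (de)convolution weight blob holds C_in × num_filter × kh × kw / num_group elements, so the group count implied by the stored weights can be compared with the num_group attribute the converter parsed. `infer_num_group` and `check_num_group` are hypothetical helpers, and C_in would have to come from shape inference on the layer's input.

```python
def infer_num_group(weight_count: int, c_in: int, num_filter: int,
                    kernel_h: int, kernel_w: int) -> int:
    """Infer the group count consistent with a (de)convolution weight blob:
        weight_count == c_in * num_filter * kernel_h * kernel_w / num_group
    Raises ValueError if no valid integer group count fits."""
    full = c_in * num_filter * kernel_h * kernel_w
    if weight_count <= 0 or full % weight_count != 0:
        raise ValueError(f"weight_count {weight_count} matches no group count")
    group = full // weight_count
    # A valid group count must evenly divide both channel counts.
    if c_in % group != 0 or num_filter % group != 0:
        raise ValueError(f"inferred num_group={group} does not divide the channels")
    return group


def check_num_group(parsed_num_group: int, weight_count: int, c_in: int,
                    num_filter: int, kernel_h: int, kernel_w: int) -> bool:
    """True if the num_group read from the model attributes agrees with the weights."""
    return parsed_num_group == infer_num_group(weight_count, c_in, num_filter,
                                               kernel_h, kernel_w)
```

A converter that ran this check on each deconvolution layer would refuse to emit a layer whose group count disagrees with its weights, instead of silently producing wrong outputs.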

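Along the way I also ran into some conversion issues between ncnn and mxnet, such as image layout and especially floating-point handling. I won't go into the details; here is the code.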

```cpp
#include <cstdio>
#include <iostream>
#include <opencv2/opencv.hpp>
#include "net.h"

#define INPUT_WIDTH 256
#define INPUT_HEIGHT 256

int main(int argc, char** argv)
{
    if (argc < 2) {
        printf("usage: %s <image>\n", argv[0]);
        return 1;
    }

    ncnn::Net Unet;
    Unet.load_param("../models/ncnn.param");
    Unet.load_model("../models/ncnn.bin");

    cv::Mat src = cv::imread(argv[1]);
    if (src.empty()) {
        printf("cannot read %s\n", argv[1]);
        return 1;
    }

    // Pad the shorter side with black borders so the image becomes square.
    cv::Scalar value(0, 0, 0);
    cv::Mat tmp;
    if (src.cols > src.rows) {
        int top = (src.cols - src.rows) / 2;
        int bottom = (src.cols - src.rows) - top;
        cv::copyMakeBorder(src, tmp, top, bottom, 0, 0, cv::BORDER_CONSTANT, value);
    } else {
        int left = (src.rows - src.cols) / 2;
        int right = (src.rows - src.cols) - left;
        cv::copyMakeBorder(src, tmp, 0, 0, left, right, cv::BORDER_CONSTANT, value);
    }

    // cv::resize's 4th/5th parameters are fx/fy; the interpolation flag is the 6th.
    cv::Mat tmp1;
    cv::resize(tmp, tmp1, cv::Size(INPUT_WIDTH, INPUT_HEIGHT), 0, 0, cv::INTER_CUBIC);

    // Scale to float in [0, 1], as load_image() in check.py does.
    cv::Mat image;
    tmp1.convertTo(image, CV_32FC3, 1 / 255.0);
    std::cout << "image element type " << image.type() << " "
              << image.cols << " " << image.rows << std::endl;

    // CV_32FC3 is laid out HWC, ncnn::Mat is CHW, so the data must be repacked.
    // Writing through channel(k) respects ncnn's per-channel alignment (cstep).
    ncnn::Mat in(INPUT_WIDTH, INPUT_HEIGHT, 3);
    const float* srcdata = (const float*)image.data;
    for (int k = 0; k < 3; k++) {
        float* dst = in.channel(k);
        for (int i = 0; i < INPUT_HEIGHT; i++)
            for (int j = 0; j < INPUT_WIDTH; j++)
                dst[i * INPUT_WIDTH + j] = srcdata[i * INPUT_WIDTH * 3 + j * 3 + k];
    }

    ncnn::Extractor ex = Unet.create_extractor();
    ex.set_light_mode(true);
    //ex.set_num_threads(4);
    ex.input("data", in);

    ncnn::Mat mask;
    // Extract a different blob to dump an intermediate layer instead:
    //ex.extract("relu5_2_splitncnn_0", mask);
    //ex.extract("trans_conv6", mask);
    //ex.extract("pool5", mask);
    ex.extract("conv11_1", mask);
    std::cout << "whc " << mask.w << " " << mask.h << " " << mask.c << std::endl;

    // Per-pixel argmax over channels gives the class index of each pixel.
    cv::Mat cv_img = cv::Mat::zeros(mask.h, mask.w, CV_8UC1);
    for (int i = 0; i < mask.h; i++) {
        unsigned char* out = cv_img.ptr<unsigned char>(i);
        for (int j = 0; j < mask.w; j++) {
            int maxk = 0;
            float maxv = mask.channel(0).row(i)[j];
            for (int k = 1; k < mask.c; k++) {
                float v = mask.channel(k).row(i)[j];
                if (v > maxv) {
                    maxv = v;
                    maxk = k;
                }
            }
            out[j] = (unsigned char)maxk;
        }
    }
    cv_img *= 255;  // class 0 -> 0 (black), class 1 -> 255 (white)
    cv::imshow("test", cv_img);
    cv::waitKey();
    return 0;
}
```
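The HWC to CHW repacking in the C++ code is pure index arithmetic: channel `k` of pixel `(i, j)` moves from offset `i*W*3 + j*3 + k` to `k*H*W + i*W + j`, and check.py's `ncnn_output` transpose goes the other way. The same two mappings in a few lines of Python make it easy to verify on a tiny buffer. This sketch assumes tightly packed channel planes; a real `ncnn::Mat` may pad each channel to an aligned `cstep`, so raw offsets like these only hold when `w*h*elemsize` is already aligned (as it is for 256×256 floats).

```python
from typing import List, Sequence


def hwc_to_chw(src: Sequence[float], height: int, width: int, channels: int) -> List[float]:
    """Interleaved HWC (OpenCV CV_32FC3) -> planar CHW (tightly packed ncnn::Mat)."""
    dst = [0.0] * (height * width * channels)
    for i in range(height):
        for j in range(width):
            for k in range(channels):
                dst[k * height * width + i * width + j] = src[i * width * channels + j * channels + k]
    return dst


def chw_to_hwc(src: Sequence[float], height: int, width: int, channels: int) -> List[float]:
    """Planar CHW -> interleaved HWC, the inverse of hwc_to_chw."""
    dst = [0.0] * (height * width * channels)
    for i in range(height):
        for j in range(width):
            for k in range(channels):
                dst[i * width * channels + j * channels + k] = src[k * height * width + i * width + j]
    return dst
```

For a 2×2 three-channel buffer `list(range(12))`, `hwc_to_chw` returns `[0, 3, 6, 9, 1, 4, 7, 10, 2, 5, 8, 11]`: all values of the first channel, then the second, then the third.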

With that, the feature is complete. If you are interested, see: https://github.com/xuduo35/unet_mxnet2ncnn

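Also, while debugging I noticed that ncnn's intermediate-layer outputs are not exactly identical to mxnet's, possibly because of some parameter or computational detail. Since this doesn't affect the final mask, I'm leaving it alone for now.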
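One way to confirm that a small numerical mismatch really leaves the mask unchanged is to measure two things at once: the largest absolute difference between the two final outputs, and the fraction of pixels whose per-channel argmax (the label the C++ code writes into `cv_img`) agrees. A sketch, assuming both `conv11_1` outputs are flattened into tightly packed CHW lists; the helper names are hypothetical.

```python
from typing import List, Sequence, Tuple


def argmax_labels(chw: Sequence[float], plane: int, channels: int) -> List[int]:
    """Per-pixel argmax over channels of a tightly packed CHW buffer.
    Ties go to the lower channel index, as with the strict comparison in the C++ loop."""
    labels = []
    for p in range(plane):
        best = 0
        for k in range(1, channels):
            if chw[k * plane + p] > chw[best * plane + p]:
                best = k
        labels.append(best)
    return labels


def compare_outputs(ref: Sequence[float], test: Sequence[float],
                    plane: int, channels: int) -> Tuple[float, float]:
    """Return (max absolute difference, fraction of pixels with the same label)."""
    if len(ref) != len(test) or len(ref) != plane * channels:
        raise ValueError("both outputs must have plane * channels elements")
    max_diff = max(abs(a - b) for a, b in zip(ref, test))
    ref_labels = argmax_labels(ref, plane, channels)
    test_labels = argmax_labels(test, plane, channels)
    agree = sum(r == t for r, t in zip(ref_labels, test_labels)) / plane
    return max_diff, agree
```

A nonzero `max_diff` together with an agreement of 1.0 is exactly the situation described above: the raw values drift slightly, but every pixel still gets the same class.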